Uncertainty

Uncertainty is a situation which involves imperfect and/or unknown information. However, "uncertainty is an unintelligible expression without a straightforward description".[1] It arises in subtly different ways in a number of fields, including insurance, philosophy, physics, statistics, economics, finance, psychology, sociology, engineering, metrology, and information science. It applies to predictions of future events, to physical measurements that are already made, or to the unknown. Uncertainty arises in partially observable and/or stochastic environments, as well as due to ignorance and/or indolence.[2]

Concepts

Although the terms are used in various ways among the general public, many specialists in decision theory, statistics and other quantitative fields have defined uncertainty, risk, and their measurement as:

- Uncertainty: the lack of certainty. A state of having limited knowledge where it is impossible to exactly describe the existing state, a future outcome, or more than one possible outcome.

- Measurement of uncertainty: a set of possible states or outcomes where probabilities are assigned to each possible state or outcome. This also includes the application of a probability density function to continuous variables.

- Risk: a state of uncertainty where some possible outcomes have an undesired effect or significant loss.

- Measurement of risk: a set of measured uncertainties where some possible outcomes are losses, and the magnitudes of those losses. This also includes loss functions over continuous variables.[3][4][5][6]

In economics, Frank Knight distinguished uncertainty from risk, describing uncertainty as immeasurable and not possible to calculate; this is now referred to as Knightian uncertainty:

Uncertainty must be taken in a sense radically distinct from the familiar notion of risk, from which it has never been properly separated.... The essential fact is that 'risk' means in some cases a quantity susceptible of measurement, while at other times it is something distinctly not of this character; and there are far-reaching and crucial differences in the bearings of the phenomena depending on which of the two is really present and operating.... It will appear that a measurable uncertainty, or 'risk' proper, as we shall use the term, is so far different from an unmeasurable one that it is not in effect an uncertainty at all.

"You cannot be certain about uncertainty." (Frank Knight)

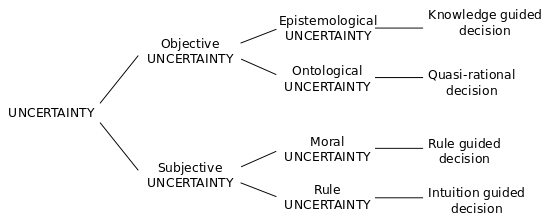

There are other taxonomies of uncertainties and decisions that include a broader sense of uncertainty and how it should be approached from an ethics perspective.[8]

For example, if it is unknown whether or not it will rain tomorrow, then there is a state of uncertainty. If probabilities are applied to the possible outcomes using weather forecasts or even just a calibrated probability assessment, the uncertainty has been quantified. Suppose it is quantified as a 90% chance of sunshine. If there is a major, costly, outdoor event planned for tomorrow then there is a risk since there is a 10% chance of rain, and rain would be undesirable. Furthermore, if this is a business event and $100,000 would be lost if it rains, then the risk has been quantified (a 10% chance of losing $100,000). These situations can be made even more realistic by quantifying light rain vs. heavy rain, the cost of delays vs. outright cancellation, etc.
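The rain example can be written down as a small discrete distribution with losses attached to the undesired outcomes. This is an illustrative sketch only: the 90%/10% split and the $100,000 loss come from the text, while the split of rain into "light" and "heavy" and the $20,000 delay cost are hypothetical numbers chosen to show the refinement mentioned at the end of the paragraph.

```python
from fractions import Fraction

# Quantified uncertainty: each possible outcome gets a probability.
# Only the 90% sunshine / 10% rain split is from the text; splitting rain
# into "light" and "heavy" is a hypothetical refinement.
outcomes = {
    "sunshine": Fraction(90, 100),
    "light rain": Fraction(7, 100),   # hypothetical
    "heavy rain": Fraction(3, 100),   # hypothetical
}

# Quantified risk: attach a loss (in dollars) to each outcome.
losses = {
    "sunshine": 0,
    "light rain": 20_000,    # hypothetical cost of a delay
    "heavy rain": 100_000,   # outright cancellation
}

def check_distribution(dist):
    """A measurement of uncertainty assigns probabilities that sum to 1."""
    total = sum(dist.values())
    if total != 1:
        raise ValueError(f"probabilities sum to {total}, not 1")

def probability_of_loss(dist, loss):
    """Total probability of the outcomes that carry a nonzero loss."""
    return sum(p for outcome, p in dist.items() if loss[outcome] > 0)

check_distribution(outcomes)
print(probability_of_loss(outcomes, losses))  # 1/10
```

Exact fractions are used so that the sum-to-one check is not disturbed by floating-point rounding.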

Some may represent the risk in this example as the "expected opportunity loss" (EOL), the chance of the loss multiplied by the amount of the loss (10% × $100,000 = $10,000). That is useful if the organizer of the event is "risk neutral", which most people are not. Most would be willing to pay a premium to avoid the loss. An insurance company, for example, would compute an EOL as a minimum for any insurance coverage, then add other operating costs and profit on top of it. Since many people are willing to buy insurance, for many reasons, the EOL alone is clearly not the perceived value of avoiding the risk.
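The EOL arithmetic, and why a risk-averse organizer would pay more than the EOL to avoid the loss, can be sketched as follows. Risk aversion is modelled here with a logarithmic utility of wealth, and the organizer's $150,000 of wealth is a hypothetical figure; both are assumptions for illustration rather than part of the example.

```python
import math

P_RAIN = 0.10      # from the example: 10% chance of rain
LOSS = 100_000     # from the example: $100,000 lost if it rains
WEALTH = 150_000   # hypothetical wealth of the organizer

# Expected opportunity loss: chance of the loss times the amount of the loss.
eol = P_RAIN * LOSS

def max_premium_log_utility(wealth, p, loss):
    """Largest premium a log-utility (risk-averse) agent would pay to remove the loss.

    Solves log(wealth - premium) = (1 - p) * log(wealth) + p * log(wealth - loss).
    """
    expected_utility = (1 - p) * math.log(wealth) + p * math.log(wealth - loss)
    certainty_equivalent = math.exp(expected_utility)
    return wealth - certainty_equivalent

premium = max_premium_log_utility(WEALTH, P_RAIN, LOSS)
print(f"EOL: ${eol:,.0f}")
print(f"max premium (log utility): ${premium:,.0f}")
```

Under these assumptions the maximum premium comes out at roughly $15,600, above the $10,000 EOL; a risk-neutral organizer (linear utility) would pay at most the EOL itself.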

Quantitative uses of the terms uncertainty and risk are fairly consistent across fields such as probability theory, actuarial science, and information theory. Some also create new terms without substantially changing the definitions of uncertainty or risk. For example, surprisal is a variation on uncertainty sometimes used in information theory. But outside of the more mathematical uses of the term, usage may vary widely. In cognitive psychology, uncertainty can be real, or just a matter of perception, such as expectations, threats, etc.
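In information theory, the surprisal (self-information) of an outcome with probability p is -log2 p bits, and its expected value over all outcomes is the Shannon entropy. A minimal sketch, reusing the 90% sunshine / 10% rain forecast from the rain example:

```python
import math

def surprisal(p):
    """Surprisal (self-information) in bits of an outcome with probability p."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return -math.log2(p)

def entropy(probabilities):
    """Shannon entropy in bits: the expected surprisal over all outcomes."""
    return sum(p * surprisal(p) for p in probabilities if p > 0)

forecast = {"sunshine": 0.9, "rain": 0.1}
for outcome, p in forecast.items():
    print(f"{outcome}: {surprisal(p):.3f} bits")
print(f"entropy: {entropy(forecast.values()):.3f} bits")
```

The unlikely outcome (rain) carries about 3.32 bits of surprisal against about 0.15 bits for sunshine, and the forecast as a whole has an entropy of about 0.47 bits.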

Vagueness or ambiguity are sometimes described as "second order uncertainty", where there is uncertainty even about the definitions of uncertain states or outcomes. The difference here is that this uncertainty is about the human definitions and concepts, not an objective fact of nature. It is usually modelled by some variation on Zadeh's fuzzy logic. It has been argued that ambiguity, however, is always avoidable while uncertainty (of the "first order" kind) is not necessarily avoidable.
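Fuzzy logic replaces yes/no set membership with a degree of membership between 0 and 1. The sketch below models the vague predicate "tall" with a piecewise-linear membership function; the 160 cm and 190 cm breakpoints are hypothetical, and the min/max/complement operators are the standard Zadeh connectives for AND, OR and NOT.

```python
def tall_membership(height_cm, low=160.0, high=190.0):
    """Degree (0..1) to which a height counts as 'tall'.

    Hypothetical piecewise-linear membership: 0 at or below `low`,
    1 at or above `high`, linear in between.
    """
    if height_cm <= low:
        return 0.0
    if height_cm >= high:
        return 1.0
    return (height_cm - low) / (high - low)

def fuzzy_and(a, b):
    """Zadeh conjunction: minimum of the membership degrees."""
    return min(a, b)

def fuzzy_or(a, b):
    """Zadeh disjunction: maximum of the membership degrees."""
    return max(a, b)

def fuzzy_not(a):
    """Zadeh negation: complement of the membership degree."""
    return 1.0 - a

for h in (150, 175, 185, 200):
    print(h, round(tall_membership(h), 2))

# "Tall AND NOT tall" for a borderline height is not simply false.
borderline = tall_membership(175)
print(fuzzy_and(borderline, fuzzy_not(borderline)))
```

For a 175 cm person, "tall and not tall" has degree 0.5 rather than 0 as in classical two-valued logic, which is how the model captures uncertainty about the definition itself rather than about a fact.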

Uncertainty may be purely a consequence of a lack of knowledge of obtainable facts. That is, there may be uncertainty about whether a new rocket design will work, but this uncertainty can be removed with further analysis and experimentation. At the subatomic level, however, uncertainty may be a fundamental and unavoidable property of the universe. In quantum mechanics, the Heisenberg uncertainty principle puts limits on how much an observer can ever know about both the position and the momentum (and hence the velocity) of a particle. This may not just be ignorance of potentially obtainable facts; there may be no fact to be found. There is some controversy in physics as to whether such uncertainty is an irreducible property of nature or whether there are "hidden variables" that would describe the state of a particle even more exactly than Heisenberg's uncertainty principle allows.
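The uncertainty principle bounds the product of the standard deviations of position and momentum, σx·σp ≥ ħ/2. For a particle of mass m this implies a minimum spread in velocity, σv ≥ ħ/(2·m·σx). A sketch for an electron confined to about one ångström (a typical atomic size, chosen here for illustration; the calculation is non-relativistic):

```python
# CODATA 2018 values
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg

def min_velocity_spread(position_spread_m, mass_kg):
    """Lower bound on the velocity standard deviation from sigma_x * sigma_p >= hbar / 2."""
    return HBAR / (2 * mass_kg * position_spread_m)

sigma_v = min_velocity_spread(1e-10, ELECTRON_MASS)
print(f"{sigma_v:.3e} m/s")
```

The bound is roughly 5.8 × 10⁵ m/s for the electron. For a 1 kg object localized to 1 µm, the same formula gives about 5 × 10⁻²⁹ m/s, which is why the limit matters at the subatomic level but is negligible for everyday objects.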

Measurements

The most commonly used procedure for calculating measurement uncertainty is described in the "Guide to the Expression of Uncertainty in Measurement" (GUM) published by ISO. Derived works include, for example, National Institute of Standards and Technology (NIST) Technical Note 1297, "Guidelines for Evaluating and Expressing the Uncertainty of NIST Measurement Results", and the Eurachem/CITAC publication "Quantifying Uncertainty in Analytical Measurement". The uncertainty of the result of a measurement generally consists of several components. The components are regarded as random variables and may be grouped into two categories according to the method used to estimate their numerical values:

- Type A, those evaluated by statistical methods

- Type B, those evaluated by other means, e.g., by assigning a probability distribution

By propagating the variances of the components through a function relating the components to the measurement result, the combined measurement uncertainty is given as the square root of the resulting variance. The simplest form is the standard deviation of a repeated observation.
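A sketch of how a Type A and a Type B component might be combined, assuming a hypothetical measurand P = V·I (electrical power). V is estimated from repeated voltmeter readings (Type A), and I is taken from a hypothetical manufacturer's specification of ±a, treated as a rectangular distribution with standard uncertainty a/√3 (Type B). The sensitivity coefficients are the partial derivatives of the measurement function, and the inputs are assumed uncorrelated; all numbers are invented for illustration.

```python
import math
import statistics

# Type A: standard uncertainty of the mean of repeated voltage readings (hypothetical data, volts).
voltage_readings = [12.02, 11.98, 12.01, 12.00, 11.99, 12.03, 11.97, 12.00]
voltage = statistics.mean(voltage_readings)
u_voltage = statistics.stdev(voltage_readings) / math.sqrt(len(voltage_readings))

# Type B: current known only to within +/- a (hypothetical spec, amperes), assuming a
# rectangular distribution, whose standard deviation is a / sqrt(3).
current = 2.500
half_width = 0.010
u_current = half_width / math.sqrt(3)

# Measurement function P = V * I; sensitivity coefficients are its partial derivatives.
power = voltage * current
c_voltage = current  # dP/dV
c_current = voltage  # dP/dI

# Combined standard uncertainty for uncorrelated inputs: square root of the propagated variance.
u_power = math.sqrt((c_voltage * u_voltage) ** 2 + (c_current * u_current) ** 2)
print(f"P = {power:.3f} W, u_c(P) = {u_power:.3f} W")
```

With these invented inputs the Type B current term dominates the combined uncertainty (about 0.07 W on 30 W), which is the kind of insight a component-by-component budget is meant to provide.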

In metrology, physics, and engineering, the uncertainty or margin of error of a measurement, when explicitly stated, is given by a range of values likely to enclose the true value. This may be denoted by error bars on a graph, or by the following notations:

- measured value ± uncertainty

- measured value$^{+\text{uncertainty}}_{-\text{uncertainty}}$

- measured value (uncertainty)

In the last notation, parentheses are a concise form of the ± notation. For example, a length of 10½ meters in a scientific or engineering application could be written 10.5 m or 10.50 m, by convention meaning accurate to within one tenth of a meter or one hundredth of a meter, respectively. The precision is symmetric around the last digit: in the first case it is half a tenth up and half a tenth down, so 10.5 means between 10.45 and 10.55. Thus it is understood that 10.5 means 10.5±0.05, and 10.50 means 10.50±0.005, also written 10.50(5) and 10.500(5) respectively. But if the accuracy is within two tenths, the uncertainty is ± one tenth, and it must be stated explicitly: 10.5±0.1 and 10.50±0.01, or 10.5(1) and 10.50(1). The numbers in parentheses apply to the numeral to their left; they are not part of that number but part of a notation of uncertainty, and they apply to its least significant digits. For instance, 1.00794(7) stands for 1.00794±0.00007, while 1.00794(72) stands for 1.00794±0.00072.[9] This concise notation is used, for example, by IUPAC in stating the atomic masses of elements.
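The concise notation can be expanded mechanically: the digits in parentheses are scaled to the place value of the last digit of the number they follow. A sketch of a hypothetical helper, `parse_concise`, that uses Python's `decimal` module so the place values stay exact:

```python
import re
from decimal import Decimal

def parse_concise(text):
    """Expand concise uncertainty notation, e.g. '1.00794(72)' -> (1.00794, 0.00072).

    The digits in parentheses apply to the least significant digits of the
    value, so they are scaled by the value's last decimal place.
    """
    match = re.fullmatch(r"\s*([+-]?\d+(?:\.\d+)?)\((\d+)\)\s*", text)
    if match is None:
        raise ValueError(f"not in concise notation: {text!r}")
    value = Decimal(match.group(1))
    exponent = value.as_tuple().exponent  # e.g. -5 for 1.00794
    uncertainty = Decimal(match.group(2)).scaleb(exponent)
    return value, uncertainty

for s in ("10.5(1)", "10.50(5)", "1.00794(7)", "1.00794(72)"):
    value, u = parse_concise(s)
    print(f"{s} -> {value} ± {u}")
```

Keeping the value as a `Decimal` preserves trailing zeros such as the one in 10.50, which a binary float would discard, and that trailing zero is exactly what fixes the scale of the parenthesized digits.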

The middle notation is used when the error is not symmetrical about the value, for example $3.4^{+0.3}_{-0.2}$. This can occur when using a logarithmic scale, for example.
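A symmetric uncertainty on a logarithmic scale becomes asymmetric once converted back to linear units. A sketch with a hypothetical quantity whose base-10 logarithm is known as 0.50 ± 0.10:

```python
def linear_from_log10(log_value, log_uncertainty):
    """Convert log10(x) = log_value ± log_uncertainty into x with asymmetric errors."""
    x = 10 ** log_value
    upper = 10 ** (log_value + log_uncertainty) - x  # distance to the upper bound
    lower = x - 10 ** (log_value - log_uncertainty)  # distance to the lower bound
    return x, upper, lower

x, plus, minus = linear_from_log10(0.50, 0.10)
print(f"{x:.2f} +{plus:.2f} -{minus:.2f}")
```

This prints about 3.16 +0.82 −0.65: the upper error is larger because the exponential stretches the interval more on its upper side.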

Often, the uncertainty of a measurement is found by repeating the measurement enough times to get a good estimate of the standard deviation of the values. Then, any single value has an uncertainty equal to the standard deviation. If the values are averaged, however, the mean measurement value has a smaller uncertainty, equal to the standard error of the mean, which is the standard deviation divided by the square root of the number of measurements. This procedure does not account for systematic errors.
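A minimal sketch of this procedure with Python's standard `statistics` module; the readings are hypothetical, and, as the paragraph notes, nothing here detects a systematic error.

```python
import math
import statistics

def mean_and_standard_error(values):
    """Return (mean, standard deviation, standard error of the mean) of repeated measurements."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two measurements to estimate spread")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return mean, sd, sd / math.sqrt(n)

# Hypothetical repeated length measurements, in metres.
readings = [10.48, 10.52, 10.50, 10.47, 10.53, 10.51, 10.49, 10.50]
mean, sd, sem = mean_and_standard_error(readings)
print(f"single reading: ±{sd:.3f} m; mean {mean:.3f} m ± {sem:.3f} m")
```

Because the standard error shrinks only with the square root of the number of readings, halving it requires four times as many measurements.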

When the uncertainty represents the standard error of the measurement, the true value of the measured quantity falls within the stated uncertainty range about 68.3% of the time. For example, for about 31.7% of the atomic mass values given on the list of elements by atomic mass, the true value is likely to lie outside the stated range. If the width of the interval is doubled, probably only 4.6% of the true values lie outside the doubled interval, and if the width is tripled, probably only 0.3% lie outside. These values follow from the properties of the normal distribution, and they apply only if the measurement process produces normally distributed errors. In that case, the quoted standard errors are easily converted to 68.3% ("one sigma"), 95.4% ("two sigma"), or 99.7% ("three sigma") confidence intervals.
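The quoted percentages can be reproduced from the normal distribution: the probability that a normally distributed error lies within ±k standard deviations is erf(k/√2). A short sketch using `math.erf` from Python's standard library:

```python
import math

def coverage_probability(k):
    """Probability that a normally distributed error lies within ±k standard deviations."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    inside = coverage_probability(k)
    print(f"±{k} sigma: {inside:.1%} inside, {1 - inside:.1%} outside")
```

The output matches the 68.3%/31.7%, 95.4%/4.6% and 99.7%/0.3% figures in the text; for errors that are not normally distributed these conversions do not hold.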

In this context, uncertainty depends on both the accuracy and the precision of the measurement instrument: the lower the accuracy and precision of an instrument, the larger the measurement uncertainty. Precision is often determined as the standard deviation of repeated measurements of a given value, using the same method described above to assess measurement uncertainty. However, this method is correct only when the instrument is accurate. When it is inaccurate, the uncertainty is larger than the standard deviation of the repeated measurements, which shows that the uncertainty does not depend only on instrumental precision.
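The point that repeated readings reveal precision but not accuracy can be illustrated with a hypothetical scale checked against a reference mass of known value. The data are invented, and comparison with a reference standard is assumed here as one common way to expose a systematic offset that the scatter of repeated readings cannot show.

```python
import statistics

# Hypothetical: ten readings of a 100.000 g reference mass on a scale that is
# precise (small scatter) but inaccurate (offset by about +0.5 g).
REFERENCE_VALUE = 100.000
readings = [100.49, 100.51, 100.50, 100.52, 100.48,
            100.50, 100.51, 100.49, 100.50, 100.50]

spread = statistics.stdev(readings)                 # precision: scatter of repeated readings
bias = statistics.mean(readings) - REFERENCE_VALUE  # accuracy: systematic offset

print(f"standard deviation of repeats: {spread:.3f} g")
print(f"bias against the reference:    {bias:+.3f} g")
```

Here the scatter is only about 0.01 g while the offset is 0.5 g, so quoting the standard deviation alone as the uncertainty would badly understate the actual error.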

Uncertainty and the media

Uncertainty in science, and science in general, is often interpreted very differently in the public sphere than in the scientific community.[10] This is due in part to the diversity of the public audience, and to the tendency of scientists to misunderstand lay audiences and therefore not communicate ideas clearly and effectively.[10] One example of this is described by the information deficit model. Also, in the public realm, there are often many scientific voices giving input on a single topic.[10] For example, depending on how an issue is reported in the public sphere, discrepancies between the outcomes of multiple scientific studies due to methodological differences could be interpreted by the public as a lack of consensus in a situation where a consensus does in fact exist.[10] This interpretation may even have been intentionally promoted, as scientific uncertainty may be managed to reach certain goals. For example, global warming contrarian activists took the advice of Frank Luntz to frame global warming as an issue of scientific uncertainty, which was a precursor to the conflict frame used by journalists when reporting the issue.[11]

"Indeterminacy can be loosely said to apply to situations in which not all the parameters of the system and their interactions are fully known, whereas ignorance refers to situations in which it is not known what is not known."[12] These unknowns, indeterminacy and ignorance, that exist in science are often "transformed" into uncertainty when reported to the public in order to make issues more manageable, since scientific indeterminacy and ignorance are difficult concepts for scientists to convey without losing credibility.[10] Conversely, uncertainty is often interpreted by the public as ignorance.[13] The transformation of indeterminacy and ignorance into uncertainty may be related to the public's misinterpretation of uncertainty as ignorance.

Journalists often either inflate uncertainty (making the science seem more uncertain than it really is) or downplay uncertainty (making the science seem more certain than it really is).[14] One way that journalists inflate uncertainty is by describing new research that contradicts past research without providing context for the change.[14] Other times, journalists give scientists with minority views the same weight as scientists with majority views, without adequately describing or explaining the state of scientific consensus on the issue.[14] In the same vein, journalists often give non-scientists the same amount of attention and importance as scientists.[14]

Journalists may downplay uncertainty by eliminating "scientists' carefully chosen tentative wording, and by losing these caveats the information is skewed and presented as more certain and conclusive than it really is".[14] Also, stories with a single source, or without any context of previous research, present the subject at hand as more definitive and certain than it is in reality.[14] A "product over process" approach to science journalism also often contributes to the downplaying of uncertainty.[14] Finally, and most notably, when science is framed by journalists as a triumphant quest, uncertainty is erroneously framed as "reducible and resolvable".[14]

Some media routines and organizational factors contribute to overstating uncertainty; others help inflate the certainty of an issue. Because the general public in the United States generally trusts scientists, science stories covered without alarm-raising cues from special interest organizations (religious groups, environmental organizations, political factions, etc.) are often presented in business terms, using an economic-development frame or a social-progress frame.[15] These frames tend to downplay or eliminate uncertainty, so when economic and scientific promise are emphasized early in the issue cycle, as has happened with coverage of plant biotechnology and nanotechnology in the United States, the matter in question seems more definitive and certain.[15]

Sometimes, too, stockholders, owners, or advertisers will pressure a media organization to promote the business aspects of a scientific issue, and any uncertainty claims that may compromise the business interests are therefore downplayed or eliminated.[14]

Applications

- Investing in financial markets such as the stock market

- Uncertainty is designed into games, most notably in gambling, where chance is central to play.

- In scientific modelling, in which the prediction of future events should be understood to have a range of expected values

- Uncertainty or error is used in science and engineering notation. Numerical values should only be expressed to those digits that are physically meaningful, which are referred to as significant figures. Uncertainty is involved in every measurement, such as measuring a distance, a temperature, etc., the degree depending upon the instrument or technique used to make the measurement. Similarly, uncertainty is propagated through calculations so that the calculated value has some degree of uncertainty depending upon the uncertainties of the measured values and the equation used in the calculation.[16]

- In physics, the Heisenberg uncertainty principle forms the basis of modern quantum mechanics.

- In engineering, uncertainty can be used in the context of validation and verification of material modeling.[17]

- In weather forecasting, it is now commonplace to include data on the degree of uncertainty in a weather forecast.

- Uncertainty is often an important factor in economics. According to economist Frank Knight, it is different from risk, where there is a specific probability assigned to each outcome (as when flipping a fair coin). Uncertainty involves a situation that has unknown probabilities, while the estimated probabilities of possible outcomes need not add to unity.

- In entrepreneurship: New products, services, firms and even markets are often created in the absence of probability estimates. According to entrepreneurship research, expert entrepreneurs predominantly use experience-based heuristics called effectuation (as opposed to causality) to overcome uncertainty.

- In metrology, measurement uncertainty is a central concept quantifying the dispersion one may reasonably attribute to a measurement result. Such an uncertainty can also be referred to as a measurement error. In daily life, measurement uncertainty is often implicit ("He is 6 feet tall" give or take a few inches), while for any serious use an explicit statement of the measurement uncertainty is necessary. The expected measurement uncertainty of many measuring instruments (scales, oscilloscopes, force gages, rulers, thermometers, etc.) is often stated in the manufacturers' specifications.

- Uncertainty has been a common theme in art, both as a thematic device (see, for example, the indecision of Hamlet), and as a quandary for the artist (such as Martin Creed's difficulty with deciding what artworks to make).
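The propagation of uncertainty through calculations, mentioned in the list above, can be sketched for a hypothetical density ρ = m/V. The first function uses the usual first-order rule for a quotient (relative standard uncertainties combine in quadrature, assuming independent inputs); the second swaps in a Monte Carlo method, sampling the inputs from assumed normal distributions, as a cross-check. The input values are invented for illustration.

```python
import math
import random
import statistics

def density_first_order(m, u_m, v, u_v):
    """rho = m / V with first-order propagation: relative uncertainties add in quadrature."""
    rho = m / v
    u_rho = rho * math.sqrt((u_m / m) ** 2 + (u_v / v) ** 2)
    return rho, u_rho

def density_monte_carlo(m, u_m, v, u_v, n=20_000, seed=1):
    """Same calculation by sampling the inputs (assumed normal and independent)."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    samples = [rng.gauss(m, u_m) / rng.gauss(v, u_v) for _ in range(n)]
    return statistics.mean(samples), statistics.stdev(samples)

# Hypothetical measured values: mass 50.0 ± 0.1 g, volume 20.0 ± 0.2 cm^3.
print(density_first_order(50.0, 0.1, 20.0, 0.2))
print(density_monte_carlo(50.0, 0.1, 20.0, 0.2))
```

Both approaches give ρ ≈ 2.50 g/cm³ with a standard uncertainty of about 0.025 g/cm³; the first-order rule is cheaper, while sampling remains usable when the calculation is strongly nonlinear.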

See also

- Applied Information Economics

- Buckley's chance

- Certainty

- Dempster–Shafer theory

- Fuzzy set theory

- Game theory

- Information entropy

- Interval finite element

- Measurement uncertainty

- Morphological analysis (problem-solving)

- Propagation of uncertainty

- Randomness

- Schrödinger's cat

- Scientific consensus

- Statistical mechanics

- Uncertainty quantification

- Uncertainty tolerance

- Volatility, uncertainty, complexity and ambiguity

References

- Antunes, Ricardo; Gonzalez, Vicente (2015-03-03). "A Production Model for Construction: A Theoretical Framework". Buildings. 5 (1): 209–228. doi:10.3390/buildings5010209.

- Norvig, Peter; Thrun, Sebastian. Udacity: Introduction to Artificial Intelligence.

- Hubbard, Douglas (2010). How to Measure Anything: Finding the Value of Intangibles in Business (2nd ed.). John Wiley & Sons.

- Laffont, Jean-Jacques (1989). The Economics of Uncertainty and Information. MIT Press.

- Laffont, Jean-Jacques (1980). Essays in the Economics of Uncertainty. Harvard University Press.

- Chambers, Robert G.; Quiggin, John (2000). Uncertainty, Production, Choice, and Agency: The State-Contingent Approach. Cambridge. ISBN 0-521-62244-1.

- Knight, F. H. (1921). Risk, Uncertainty, and Profit. Boston: Hart, Schaffner & Marx.

- Tannert, C.; Elvers, H. D.; Jandrig, B. (2007). "The ethics of uncertainty. In the light of possible dangers, research becomes a moral duty". EMBO Rep. 8 (10): 892–6. PMC 2002561. PMID 17906667. doi:10.1038/sj.embor.7401072.

- "Standard Uncertainty and Relative Standard Uncertainty". CODATA reference. NIST. Retrieved 26 September 2011.

- Zehr, S. C. (1999). "Scientists' representation of uncertainty". In Friedman, S. M.; Dunwoody, S.; Rogers, C. L. (eds.). Communicating Uncertainty: Media Coverage of New and Controversial Science. Mahwah, NJ: Lawrence Erlbaum Associates. pp. 3–21.

- Nisbet, M.; Scheufele, D. A. (2009). "What's next for science communication? Promising directions and lingering distractions". American Journal of Botany. 96 (10): 1767–1778. doi:10.3732/ajb.0900041.

- Shackley, S.; Wynne, B. (1996). "Representing uncertainty in global climate change science and policy: Boundary-ordering devices and authority". Science, Technology, & Human Values. 21 (3): 275–302. doi:10.1177/016224399602100302.

- Somerville, R. C.; Hassol, S. J. (2011). "Communicating the science of climate change". Physics Today. 64: 48–53. doi:10.1063/pt.3.1296.

- Stocking, H. (1999). "How journalists deal with scientific uncertainty". In Friedman, S. M.; Dunwoody, S.; Rogers, C. L. (eds.). Communicating Uncertainty: Media Coverage of New and Controversial Science. Mahwah, NJ: Lawrence Erlbaum. pp. 23–41. ISBN 0-8058-2727-7.

- Nisbet, M.; Scheufele, D. A. (2007). "The Future of Public Engagement". The Scientist. 21 (10): 38–44.

- Gregory, Kent J.; Bibbo, Giovanni; Pattison, John E. (2005). "A Standard Approach to Measurement Uncertainties for Scientists and Engineers in Medicine". Australasian Physical and Engineering Sciences in Medicine. 28 (2): 131–139. doi:10.1007/BF03178705.

- https://icme.hpc.msstate.edu/mediawiki/index.php/Category:Uncertainty

Further reading

- Lindley, Dennis V. (2006-09-11). Understanding Uncertainty. Wiley-Interscience. ISBN 978-0-470-04383-7.

- Gilboa, Itzhak (2009). Theory of Decision under Uncertainty. Cambridge: Cambridge University Press. ISBN 9780521517324.

- Halpern, Joseph (2005-09-01). Reasoning about Uncertainty. MIT Press.

- Smithson, Michael (1989). Ignorance and Uncertainty. New York: Springer-Verlag. ISBN 0-387-96945-4.

External links

- Measurement Uncertainties in Science and Technology, Springer 2005

- Proposal for a New Error Calculus

- Estimation of Measurement Uncertainties — an Alternative to the ISO Guide

- Bibliography of Papers Regarding Measurement Uncertainty

- Guidelines for Evaluating and Expressing the Uncertainty of NIST Measurement Results

- Strategic Engineering: Designing Systems and Products under Uncertainty (MIT Research Group)

- Understanding Uncertainty site from Cambridge's Winton programme

- Bowley, Roger (2009). "∆ – Uncertainty". Sixty Symbols. Brady Haran for the University of Nottingham.