Graphics Core Next

Strictly speaking, GCN originally referred solely to the GCA.

Graphics Core Next (GCN)[1] is the codename for both a series of microarchitectures and an instruction set. GCN was developed by AMD for their GPUs as the successor to the TeraScale microarchitecture/instruction set. The first product featuring GCN was launched in 2011.[2]

GCN is a RISC SIMD (or rather SIMT) microarchitecture, in contrast to the VLIW SIMD architecture of TeraScale. GCN requires considerably more transistors than TeraScale, but offers advantages for GPGPU computation: it makes the compiler simpler and should also lead to better utilization. GCN implements HyperZ.[3]

GCN graphics chips are fabricated on 28 nm and 14 nm processes and are available on selected models in the Radeon HD 7000, HD 8000, Rx 200, Rx 300 and 400 series of AMD graphics cards. GCN is also used in the AMD Accelerated Processing Units code-named "Temash", "Kabini", "Kaveri", "Carrizo", "Beema" and "Mullins", as well as in Liverpool (PlayStation 4) and Durango (Xbox One).

Instruction set

Like the x86-64 instruction set, the GCN instruction set is owned by AMD. It has been developed specifically for GPUs (and GPGPU) and, for example, has no micro-operation for division.

Documentation is available:

- for Graphics Core Next instruction set

- for GCN 2nd generation instruction set

- for GCN 3rd generation instruction set

- for GCN 4th generation instruction set (the documentation is the same as for the 3rd generation)[4]

- for Vega's instruction set

An LLVM code generator (i.e. a compiler back-end) is available for the GCN instruction set.[5] It is used e.g. by Mesa 3D.

MIAOW is an open-source RTL implementation of the AMD Southern Islands GPGPU instruction set (aka Graphics Core Next).

In November 2015, AMD announced the "Boltzmann Initiative", which is intended to enable the porting of CUDA-based applications to a common C++ programming model.[6]

At the "Super Computing 15" conference, AMD showed their Heterogeneous Compute Compiler (HCC), a headless Linux driver and HSA runtime infrastructure for cluster-class high-performance computing (HPC), and the Heterogeneous-compute Interface for Portability (HIP) tool for porting CUDA-based applications to a common C++ programming model.

Microarchitectures

As of July 2017, the family of microarchitectures implementing the identically named instruction set "Graphics Core Next" has seen five iterations. The differences in the instruction set between iterations are rather minimal. An exception is the fifth generation GCN architecture, which heavily modified the stream processors to improve performance and to support the simultaneous processing of two lower-precision numbers in place of a single higher-precision number.[7]

Command processing

Graphics Command Processor

The "Graphics Command Processor" (GCP) is a functional unit of the GCN microarchitecture. Among other tasks, it is responsible for Asynchronous Shaders. The short video AMD Asynchronous Shaders visualizes the differences between "multi thread", "preemption" and "Asynchronous Shaders".[8]

Asynchronous Compute Engine

The Asynchronous Compute Engine (ACE) is a distinct functional block serving computing purposes. Its purpose is similar to that of the Graphics Command Processor.

Scheduler

Since the third iteration of GCN, the hardware contains two schedulers: one to schedule wavefronts during shader execution (CU Scheduler, see below) and a new one to schedule execution of draw and compute queues. The latter helps performance by executing compute operations when the CUs are underutilized because graphics commands are limited by fixed-function pipeline speed or by bandwidth. This functionality is known as Async Compute.

For a given shader, the GPU drivers also need to select a good instruction order to minimize latency. This is done on the CPU and is sometimes referred to as "scheduling".

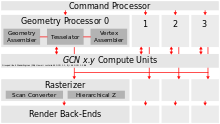

Geometry processor

The geometry processor contains the Geometry Assembler, the Tessellator and the Vertex Assembler.

The GCN Tessellator of the geometry processor is capable of doing tessellation in hardware as defined by Direct3D 11 and OpenGL 4.5 (see AMD, 21-01-2017, in [9]).

The GCN Tessellator is AMD's most current SIP block for tessellation; earlier units were ATI TruForm and hardware tessellation in TeraScale.

Compute Units

One compute unit combines 64 shader processors with 4 TMUs.[10][11] The compute unit is separate from, but feeds into, the render output units (ROPs).[11] Each compute unit consists of a CU Scheduler, a Branch & Message Unit, 4 SIMD Vector Units (each 16 lanes wide), 4 VGPR files of 64 KiB each, 1 scalar unit, a 4 KiB GPR file, a local data share of 64 KiB, 4 Texture Filter Units, 16 Texture Fetch Load/Store Units and a 16 KiB L1 cache. Four compute units are wired to share a 16 KiB instruction cache and a 32 KiB scalar data cache. These are backed by the L2 cache. A SIMD-VU operates on 16 elements at a time (per cycle), while a scalar unit (SU) can operate on one at a time (one per cycle). In addition, the SU handles some other operations such as branching.[12]

Every SIMD-VU has some private memory where it stores its registers. There are two types of registers: scalar registers (s0, s1, etc.), which hold one 4-byte number each, and vector registers (v0, v1, etc.), which each represent a set of 64 4-byte numbers. An operation on vector registers is carried out in parallel on all 64 numbers, so every piece of work done with them actually processes 64 inputs. For example, a shader works on 64 different pixels at a time (the inputs differ slightly for each of them, and thus each ends up with a slightly different color).

Every SIMD-VU has room for 512 scalar registers and 256 vector registers.
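The 64 KiB VGPR file size and the count of 64 shader processors per compute unit follow from the figures quoted above. The following Python sketch only restates that arithmetic; the constant names are illustrative and do not come from AMD documentation.

```python
# Figures taken from the text above; the names are illustrative only.
WAVEFRONT_SIZE = 64     # 4-byte numbers held by one vector register
BYTES_PER_VALUE = 4     # size of one register element
VGPRS_PER_SIMD = 256    # vector registers per SIMD-VU
SIMDS_PER_CU = 4        # SIMD Vector Units per compute unit
LANES_PER_SIMD = 16     # lanes per SIMD Vector Unit

# One vector register holds 64 values of 4 bytes each.
vgpr_bytes = WAVEFRONT_SIZE * BYTES_PER_VALUE

# 256 such registers fill the VGPR file of one SIMD-VU.
vgpr_file_kib = VGPRS_PER_SIMD * vgpr_bytes // 1024

# Four 16-lane SIMD-VUs give the shader processor count of one CU.
shader_processors = SIMDS_PER_CU * LANES_PER_SIMD

print(vgpr_bytes, vgpr_file_kib, shader_processors)
```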

CU Scheduler

The CU scheduler is the hardware functional block choosing for the SIMD-VU which wavefronts to execute. It picks one SIMD-VU per cycle for scheduling. This is not to be confused with other schedulers, in hardware or software.

- Wavefront

- A 'shader' is a small program written in GLSL which performs graphics processing, and a 'kernel' is a small program written in OpenCL which performs GPGPU processing. These programs do not need that many registers, but they do need to load data from system or graphics memory, an operation that comes with significant latency. AMD and Nvidia chose similar approaches to hide this unavoidable latency: the grouping of multiple threads. AMD calls such a group a wavefront, Nvidia calls it a warp. On GPUs implementing this approach, a group of threads is the most basic unit of scheduling, the minimum size of the data processed in SIMD fashion and the smallest executable unit of code: a single instruction is processed over all of the threads in the group at the same time.

In all GCN-GPUs, a “wavefront” consists of 64 threads, and in all Nvidia GPUs a “warp” consists of 32 threads.

AMD's solution is to assign multiple wavefronts to each SIMD-VU. The hardware distributes the registers among the wavefronts, and when one wavefront is waiting on a result that lies in memory, the CU Scheduler makes the SIMD-VU work on another wavefront. Wavefronts are assigned per SIMD-VU; SIMD-VUs do not exchange wavefronts. At most 10 wavefronts can be assigned per SIMD-VU (thus 40 per CU).
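The effect of keeping several wavefronts resident can be illustrated with a toy model. The sketch below is not a description of the actual CU Scheduler: it assumes that every wavefront endlessly repeats one 4-cycle ALU instruction followed by one memory load, and the 36-cycle load latency is a made-up value chosen only to keep the numbers round. The function name and parameters are hypothetical.

```python
def simd_utilization(num_wavefronts, alu_cycles=4, mem_latency=36,
                     total_cycles=4000):
    """Fraction of cycles in which the SIMD-VU executes ALU work.

    Toy model (assumption): each wavefront repeats "one ALU instruction,
    then one memory load"; mem_latency is a hypothetical value.
    """
    ready_at = [0] * num_wavefronts  # cycle at which each wavefront may issue
    busy = 0
    cycle = 0
    while cycle < total_cycles:
        for w in range(num_wavefronts):
            if ready_at[w] <= cycle:
                # Run this wavefront's ALU instruction, then let it wait
                # for its memory load while others get a turn.
                busy += alu_cycles
                cycle += alu_cycles
                ready_at[w] = cycle + mem_latency
                break
        else:
            # Every wavefront is waiting on memory: the SIMD-VU idles.
            cycle += 1
    return busy / cycle


for n in (1, 5, 10):
    print(n, simd_utilization(n))
```

Under these assumptions a single wavefront keeps the SIMD-VU busy for 10% of the time, five wavefronts for 50%, and ten wavefronts keep it fully busy.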

AMD CodeXL shows tables relating the number of SGPRs and VGPRs to the number of wavefronts; basically, for SGPRs it is min(104, 512/numwavefronts) and for VGPRs it is 256/numwavefronts.
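The two formulas can be written down directly. The following Python sketch uses integer division and ignores any register allocation granularity the hardware may apply, so its results are approximate; the function names are illustrative.

```python
MAX_WAVES_PER_SIMD = 10   # wavefronts per SIMD-VU, from the text
SGPRS_PER_SIMD = 512      # scalar registers per SIMD-VU
VGPRS_PER_SIMD = 256      # vector registers per SIMD-VU
MAX_SGPRS_PER_WAVE = 104  # cap from the formula above


def register_budget(num_wavefronts):
    """Return (SGPRs, VGPRs) available to each wavefront at this occupancy."""
    if not 1 <= num_wavefronts <= MAX_WAVES_PER_SIMD:
        raise ValueError("num_wavefronts must be between 1 and 10")
    sgprs = min(MAX_SGPRS_PER_WAVE, SGPRS_PER_SIMD // num_wavefronts)
    vgprs = VGPRS_PER_SIMD // num_wavefronts
    return sgprs, vgprs


def max_wavefronts(sgprs_used, vgprs_used):
    """Largest occupancy whose budget still covers the given register use."""
    for n in range(MAX_WAVES_PER_SIMD, 0, -1):
        sgprs, vgprs = register_budget(n)
        if sgprs_used <= sgprs and vgprs_used <= vgprs:
            return n
    return 0  # the register use does not fit even with a single wavefront


print(register_budget(10))     # budget at full occupancy
print(max_wavefronts(40, 64))  # a shader using 40 SGPRs and 64 VGPRs
```

With these formulas, full occupancy of 10 wavefronts leaves each wavefront 51 SGPRs and 25 VGPRs, and a shader that uses 64 VGPRs is limited to 4 wavefronts per SIMD-VU.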

Note that in the context of SSE instructions, this most basic level of parallelism is often called the "vector width". The vector width is characterized by the total number of bits in it.

SIMD Vector Unit

Each SIMD Vector Unit has:

- a 16-lane integer and floating point vector Arithmetic Logic Unit (ALU)

- 64 KiB Vector General Purpose Register (VGPR) file

- A 48-bit Program Counter

- Instruction buffer for 10 wavefronts

- A wavefront is a group of 64 threads: the size of one logical VGPR

- A 64-thread wavefront issues to a 16-lane SIMD Unit over four cycles

Each SIMD-VU has an instruction buffer for 10 wavefronts, and it takes 4 cycles to issue one instruction for a whole wavefront.
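The four-cycle figure is simply the wavefront size divided by the lane count. The sketch below models a vector operation on two 64-element registers processed 16 elements per cycle; it is an illustration in Python with hypothetical function names, not a model of the real pipeline.

```python
WAVEFRONT_SIZE = 64  # threads per wavefront
SIMD_LANES = 16      # lanes per SIMD Vector Unit


def execute_vector_op(op, a, b, lanes=SIMD_LANES):
    """Apply op element-wise to a and b, `lanes` elements per cycle.

    Returns the result register and the number of cycles taken.
    """
    if len(a) != len(b):
        raise ValueError("registers must have the same length")
    result = []
    cycles = 0
    for start in range(0, len(a), lanes):
        # One cycle: all lanes work in parallel on one slice of the wavefront.
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        result.extend(op(x, y) for x, y in zip(chunk_a, chunk_b))
        cycles += 1
    return result, cycles


v0 = list(range(WAVEFRONT_SIZE))  # one value per thread
v1 = [1] * WAVEFRONT_SIZE
v2, cycles = execute_vector_op(lambda x, y: x + y, v0, v1)
print(cycles)
```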

Audio and video acceleration SIP blocks

Many implementations of GCN are accompanied by several of AMD's other ASIC blocks, including but not limited to the Unified Video Decoder, Video Coding Engine, and AMD TrueAudio.

Unified virtual memory

In a preview in 2011, AnandTech wrote about the unified virtual memory, supported by Graphics Core Next.[13]

Heterogeneous System Architecture (HSA)

Some of the specific HSA features implemented in the hardware need support from the operating system's kernel (its subsystems) and/or from specific device drivers. For example, in July 2014 AMD published a set of 83 patches to be merged into Linux kernel mainline 3.17 for supporting their Graphics Core Next-based Radeon graphics cards. The special driver, titled "HSA kernel driver", resides in the directory /drivers/gpu/hsa, while the DRM graphics device drivers reside in /drivers/gpu/drm;[16] it augments the already existing DRM driver for Radeon cards.[17] This very first implementation focuses on a single "Kaveri" APU and works alongside the existing Radeon kernel graphics driver (kgd).

Hardware Schedulers

Hardware schedulers (HWS) are used to perform scheduling[18] and to offload the assignment of compute queues to the ACEs from the driver to hardware. They buffer these queues until there is at least one empty queue in at least one ACE; the HWS then immediately assigns buffered queues to the ACEs until all queues are full or there are no more queues to safely assign.[19] Part of the scheduling work involves prioritized queues, which allow critical tasks to run at a higher priority than other tasks without requiring the lower-priority tasks to be preempted. The tasks run concurrently: the high-priority tasks are scheduled to occupy the GPU as much as possible, while other tasks use the resources that the high-priority tasks are not using.[18] Hardware schedulers are essentially Asynchronous Compute Engines that lack dispatch controllers.[18] They were first introduced in the fourth generation GCN microarchitecture,[18] but were already present in the third generation GCN microarchitecture for internal testing purposes.[20] A driver update has since enabled the hardware schedulers in third generation GCN parts for production use.[18]
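The buffering behaviour described above can be sketched as a small model. The class below is a hypothetical illustration in Python: the class name, the method names and the number of queue slots per ACE are assumptions, and the model leaves out priorities and the condition of which queues are safe to assign.

```python
from collections import deque


class HardwareSchedulerModel:
    """Hypothetical model: buffers compute queues, assigns them to free slots."""

    def __init__(self, num_aces, slots_per_ace):
        self.aces = [[] for _ in range(num_aces)]  # queues held by each ACE
        self.slots_per_ace = slots_per_ace         # assumed slot count
        self.pending = deque()                     # queues buffered by the HWS

    def submit(self, queue_id):
        """A new compute queue arrives: buffer it, then try to assign."""
        self.pending.append(queue_id)
        self._assign()

    def retire(self, queue_id):
        """A queue finishes, freeing its slot for a buffered queue."""
        for ace in self.aces:
            if queue_id in ace:
                ace.remove(queue_id)
                break
        self._assign()

    def _assign(self):
        # Fill free slots until every ACE is full or nothing is buffered.
        for ace in self.aces:
            while self.pending and len(ace) < self.slots_per_ace:
                ace.append(self.pending.popleft())


hws = HardwareSchedulerModel(num_aces=2, slots_per_ace=2)
for q in range(6):
    hws.submit(q)   # queues 4 and 5 stay buffered: all slots are taken
hws.retire(1)       # frees a slot, so queue 4 is assigned immediately
print(hws.aces, list(hws.pending))
```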

Primitive Discard Accelerator

This unit discards degenerate triangles before they enter the vertex shader and triangles that do not cover any fragments before they enter the fragment shader.[21] This unit was introduced with the fourth generation GCN microarchitecture.[21]
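The two discard conditions can be illustrated in software. The Python sketch below is not AMD's hardware algorithm: it treats a triangle as degenerate when its area is zero, and it uses a simple bounding-box test against pixel centres (assumed to lie at integer coordinates plus 0.5) to detect triangles that cannot cover any fragment. All function names are hypothetical.

```python
import math


def signed_area2(a, b, c):
    """Twice the signed area of the 2D triangle abc (a cross product)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def is_degenerate(a, b, c):
    """A triangle with zero area has collinear or coincident vertices."""
    return signed_area2(a, b, c) == 0


def _no_center_between(lo, hi):
    # Pixel centres are assumed at k + 0.5; find the first one at or after lo.
    first_center = math.ceil(lo - 0.5) + 0.5
    return first_center > hi


def misses_all_pixel_centers(a, b, c):
    """True if the triangle's bounding box contains no pixel centre."""
    xs = (a[0], b[0], c[0])
    ys = (a[1], b[1], c[1])
    return (_no_center_between(min(xs), max(xs))
            or _no_center_between(min(ys), max(ys)))


def should_discard(a, b, c):
    return is_degenerate(a, b, c) or misses_all_pixel_centers(a, b, c)


print(should_discard((0, 0), (1, 1), (2, 2)))              # collinear
print(should_discard((0.6, 0.6), (0.9, 0.6), (0.6, 0.9)))  # between centres
print(should_discard((0, 0), (4, 0), (0, 4)))              # visible triangle
```

The bounding-box test is conservative: it never discards a triangle that covers a pixel centre, but it keeps some thin triangles that a more exact test would also reject.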

Iterations

Graphics Core Next (Southern Islands)

- Support for 64-bit addressing (x86-64 address space) with unified address space for CPU and GPU[13]

- Support for PCI-E 3.0[22]

- GPU sends interrupt requests to CPU on various events (such as page faults)

- Support for Partially Resident Textures,[23] which enable virtual memory support through DirectX and OpenGL extensions

- AMD PowerTune support, which dynamically adjusts performance to stay within a specific TDP[24]

- Support for Mantle (API)

There are Asynchronous Compute Engines controlling computation and dispatching.[12][25]

ZeroCore Power

ZeroCore Power is a long-idle power-saving technology that shuts off functional units of the GPU when they are not in use.[26] AMD ZeroCore Power technology supplements AMD PowerTune.

Chips

Discrete GPUs (Southern Islands family):

- Oland

- Cape Verde

- Pitcairn

- Tahiti

GCN 2nd Generation (Sea Islands)

GCN 2nd generation was introduced with Radeon HD 7790 and is also found in Radeon HD 8770, R7 260/260X, R9 290/290X, R9 295X2, R7 360, R9 390/390X, as well as Steamroller-based Desktop Kaveri APUs and Mobile Kaveri APUs and in the Puma-based "Beema" and "Mullins" APUs. It has multiple advantages over the original GCN, including AMD TrueAudio and a revised version of AMD PowerTune technology.

GCN 2nd generation introduced an entity called "Shader Engine" (SE). A Shader Engine comprises one geometry processor, up to 11 CUs (Hawaii chip), rasterizers, ROPs, and L1 cache. Not part of a Shader Engine are the Graphics Command Processor, the 8 ACEs, the L2 cache and memory controllers, the audio and video accelerators, the display controllers, the 2 DMA controllers and the PCIe interface.

The A10-7850K "Kaveri" contains 8 CUs (compute units) and 8 Asynchronous Compute Engines for independent scheduling and work item dispatching.[27]

At AMD Developer Summit (APU) in November 2013 Michael Mantor presented the Radeon R9 290X.[28]

Chips

Discrete GPUs (Sea Islands family):

- Bonaire

- Hawaii

Integrated into APUs:

- Temash

- Kabini

- Liverpool

- Durango

- Kaveri

- Godavari

- Mullins

- Beema

- Carrizo-L

GCN 3rd Generation (Volcanic Islands)

GCN 3rd generation[29] was introduced in 2014 with the Radeon R9 285 and R9 M295X, which have the "Tonga" GPU. It features improved tessellation performance, lossless delta color compression to reduce memory bandwidth usage, an updated and more efficient instruction set, a new high-quality scaler for video, and a new multimedia engine (video encoder/decoder). Delta color compression is supported in Mesa.[30] However, its double-precision performance is worse than that of the previous generation.[31]

Chips

Discrete GPUs:

- Tonga (Volcanic Islands family), comes with UVD 5.0 (Unified Video Decoder)

- Fiji (Pirate Islands family), comes with UVD 6.0 and High Bandwidth Memory (HBM 1)

Integrated into APUs:

GCN 4th Generation (Arctic Islands)

GPUs of the Arctic Islands family were introduced in Q2 of 2016 with AMD Radeon 400 series branded graphics cards, based upon the Polaris architecture. All Polaris-based chips are produced on the 14 nm FinFET process.[33] The fourth generation GCN instruction set architecture is compatible with the third generation. The design is optimized for the 14 nm FinFET process, enabling higher GPU clock speeds than with the 3rd GCN generation.[34]

Chips

Discrete GPUs:[35]

- Polaris 10 (also codenamed Ellesmere) found on "Radeon RX 470"- and "Radeon RX 480"-branded graphics cards

- Polaris 11 (also codenamed Baffin) found on "Radeon RX 460"-branded graphics card.

- Polaris 12 found on "Radeon RX 550" and "Radeon RX 540"-branded graphics cards.

- Polaris 20, which is a refreshed Polaris 10 with higher clocks, used for "Radeon RX 570" and "Radeon RX 580"-branded graphics cards.[36]

- Polaris 21, which is a refreshed Polaris 11, used for "Radeon RX 560"-branded graphics card.

GCN 5th Generation (Vega)

AMD began releasing details of their next generation of GCN architecture, termed the 'Next-Generation Compute Unit', in January 2017.[34][37][38] The new design is expected to offer increased instructions per clock, higher clock speeds, support for HBM2, a larger memory address space, and the High Bandwidth Cache Controller. Additionally, the new chips are expected to include improvements in the rasterisation and render output units. The stream processors are heavily modified from the previous generations to support Rapid Packed Math technology for 8-bit, 16-bit, and 32-bit numbers. This gives a significant performance advantage when lower precision is acceptable (for example, processing two half-precision numbers at the same rate as one single-precision number).
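The idea behind packed math can be shown in software: two half-precision values share one 32-bit register, and a single packed operation processes both. The Python sketch below only illustrates the data layout using the standard `struct` module; the function names are hypothetical, and it does not model the Vega instruction set.

```python
import struct


def pack_half2(lo, hi):
    """Pack two half-precision floats into one 32-bit integer."""
    return struct.unpack("<I", struct.pack("<2e", lo, hi))[0]


def unpack_half2(word):
    """Split a 32-bit integer back into its two half-precision floats."""
    return struct.unpack("<2e", struct.pack("<I", word))


def packed_add(x, y):
    """Add two packed registers lane by lane.

    Each sum is rounded to half precision when it is packed again.
    """
    a_lo, a_hi = unpack_half2(x)
    b_lo, b_hi = unpack_half2(y)
    return pack_half2(a_lo + b_lo, a_hi + b_hi)


r0 = pack_half2(1.5, 2.0)
r1 = pack_half2(0.25, -1.0)
r2 = packed_add(r0, r1)  # one "instruction", two additions
print(hex(r2), unpack_half2(r2))
```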

Nvidia introduced tile-based rasterization and binning with Maxwell,[39] and this was a major reason for Maxwell's efficiency increase. In January 2017, AnandTech assumed that Vega would finally catch up with Nvidia regarding energy efficiency optimizations due to the new "Draw Stream Binning Rasterizer" to be introduced with Vega.[40]

Vega also added support for a new shader stage: primitive shaders.[41][42]

Chips

Integrated into APUs:

- Raven Ridge[43]

GCN 6th Generation (Navi)

Navi is expected in 2018 and will offer "Next Generation Memory" as well as improved scalability.[44]

References

- ↑ https://www.slideshare.net/DevCentralAMD/gs4106-the-amd-gcn-architecture-a-crash-course-by-layla-mah

- ↑ "AMD Launches World’s Fastest Single-GPU Graphics Card – the AMD Radeon HD 7970" (Press release). AMD. 2011-12-22. Retrieved 2015-01-20.

- ↑ "Feature matrix of the free and open-source "Radeon" graphics device driver". Retrieved 2014-07-09.

- ↑ "AMD community forums".

- ↑ "LLVM back-end amdgpu".

- ↑ "AMD Boltzmann Initiative – Heterogeneous-compute Interface for Portability (HIP)". 2015-11-16. Retrieved 2016-01-15.

- ↑ Smith, Ryan (5 January 2017). "The AMD Vega GPU Architecture Preview". Anandtech. Retrieved 11 July 2017.

- ↑

- ↑ https://www.khronos.org/conformance/adopters/conformant-products#opengl

- ↑ Compute Cores Whitepaper (PDF). AMD. 2014. p. 5.

- 1 2 Smith, Ryan (21 December 2011). "AMD's Graphics Core Next Preview". Anandtech. Retrieved 18 April 2017.

- 1 2 Mantor, Michael; Houston, Mike (2011-06-15). "AMD Graphics Core Next" (PDF). AMD. p. 40. Retrieved 2014-07-15.

Asynchronous Compute Engine (ACE)

- 1 2 "Not Just A New Architecture, But New Features Too". AnandTech. 2011-12-21. Retrieved 2014-07-11.

- ↑ "Kaveri microarchitecture". SemiAccurate. 2014-01-15.

- ↑ Dave Airlie (2014-11-26). "Merge AMDKFD". freedesktop.org. Retrieved 2015-01-21.

- ↑ "/drivers/gpu/drm". kernel.org.

- ↑ "[PATCH 00/83] AMD HSA kernel driver". LKML. 2014-07-10. Retrieved 2014-07-11.

- 1 2 3 4 5 Angelini, Chris (June 29, 2016). "AMD Radeon RX 480 8GB Review". Tom's Hardware. p. 1. Retrieved August 11, 2016.

- ↑ "Dissecting the Polaris Architecture" (PDF). 2016. Retrieved August 12, 2016.

- ↑ Shrout, Ryan (June 29, 2016). "The AMD Radeon RX 480 Review - The Polaris Promise". PC Perspective. p. 2. Retrieved August 12, 2016.

- 1 2 Smith, Ryan (June 29, 2016). "The AMD Radeon RX 480 Preview: Polaris Makes Its Mainstream Mark". AnandTech. p. 3. Retrieved August 11, 2016.

- ↑ "AMD Radeon HD 7000 Series to be PCI-Express 3.0 Compliant". TechPowerUp. Retrieved July 21, 2011.

- ↑ "AMD Details Next Gen. GPU Architecture". Retrieved August 3, 2011.

- ↑ Tony Chen, Jason Greaves, "AMD's Graphics Core Next (GCN) Architecture" (PDF), AMD, retrieved 2016-08-13

- ↑ "AMD's Graphics Core Next Preview: AMD's New GPU, Architected For Compute". AnandTech. 2011-12-21. Retrieved 2014-07-15.

AMD's new Asynchronous Compute Engines serve as the command processors for compute operations on GCN. The principal purpose of ACEs will be to accept work and to dispatch it off to the CUs for processing.

- ↑ "Managing Idle Power: Introducing ZeroCore Power". AnandTech. 2011-12-22. Retrieved 2015-04-29.

- ↑ "AMD's Kaveri A10-7850K tested". AnandTech. 2014-01-14. Retrieved 2014-07-07.

- ↑ "AMD Radeon R9-290X". 2013-11-21.

- ↑ http://images.anandtech.com/doci/9319/Slide%2019%20-%20GCN%20Overview.png

- ↑ "Add DCC Support". Freedesktop.org. 2015-10-11.

- ↑ Smith, Ryan (10 September 2014). "AMD Radeon R9 285 Review". Anandtech. Retrieved 13 March 2017.

- 1 2 Cutress, Ian (1 June 2016). "AMD Announces 7th Generation APU". Anandtech.com. Retrieved 1 June 2016.

- ↑ http://www.guru3d.com/articles-pages/radeon-technologies-group-january-2016-amd-polaris-architecture,1.html

- 1 2 Smith, Ryan (5 January 2017). "The AMD Vega Architecture Teaser: Higher IPC, Tiling, & More, coming in H1'2017". Anandtech.com. Retrieved 10 January 2017.

- ↑ WhyCry (24 March 2016). "AMD confirms Polaris 10 is Ellesmere and Polaris 11 is Baffin". VideoCardz. Retrieved 8 April 2016.

- ↑ https://www.3dcenter.org/news/fast-vollstaendige-hardware-daten-zu-amds-radeon-rx-500-serie-geleakt

- ↑ Kampman, Jeff (5 January 2017). "The curtain comes up on AMD's Vega architecture". TechReport.com. Retrieved 10 January 2017.

- ↑ Shrout, Ryan (5 January 2017). "AMD Vega GPU Architecture Preview: Redesigned Memory Architecture". PC Perspective. Retrieved 10 January 2017.

- ↑ Raevenlord (1 March 2017). "On NVIDIA's Tile-Based Rendering". techPowerUp.

- ↑ "Vega Teaser: Draw Stream Binning Rasterizer".

- ↑ http://www.trustedreviews.com/news/amd-vega-specs-performance-release-date-technology-explained

- ↑ http://techreport.com/review/31224/the-curtain-comes-up-on-amd-vega-architecture/2

- ↑ Ferreira, Bruno (16 May 2017). "Ryzen Mobile APUs are coming to a laptop near you". Tech Report. Retrieved 16 May 2017.

- ↑ Slobodan Simic (15 March 2016). "AMD reveals Navi GPU architecture". Fudzilla.