Cuckoo hashing

Cuckoo hashing is a scheme in computer programming for resolving hash collisions in a hash table, with worst-case constant lookup time. The name derives from the behavior of some species of cuckoo, where the cuckoo chick pushes the other eggs or young out of the nest when it hatches; analogously, inserting a new key into a cuckoo hashing table may push an older key to a different location in the table.

History

Cuckoo hashing was first described by Rasmus Pagh and Flemming Friche Rodler in 2001.[1]

Operation

Cuckoo hashing is a form of open addressing in which each non-empty cell of a hash table contains a key or key–value pair. A hash function is used to determine the location for each key, and its presence in the table (or the value associated with it) can be found by examining that cell of the table. However, open addressing suffers from collisions, which happen when more than one key is mapped to the same cell. The basic idea of cuckoo hashing is to resolve collisions by using two hash functions instead of only one. This provides two possible locations in the hash table for each key. In one of the commonly used variants of the algorithm, the hash table is split into two smaller tables of equal size, and each hash function provides an index into one of these two tables. It is also possible for both hash functions to provide indexes into a single table.

Lookup requires inspecting just two locations in the hash table, which takes constant time in the worst case (see Big O notation). This is in contrast to many other hash table algorithms, which may not have a constant worst-case bound on lookup time. Deletion also takes constant worst-case time: the cell containing the key is simply blanked, which is simpler than deletion in some other schemes such as linear probing.
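A minimal Python sketch of lookup and deletion in the two-table variant is given here for illustration. The table size `SIZE` and the toy hash functions `h1` and `h2` are assumptions made for this example only; a real table would use randomly chosen hash functions.

```python
SIZE = 11  # cells per table (assumed for this sketch)

def h1(key):
    # Toy hash function for the first table (illustrative only).
    return key % SIZE

def h2(key):
    # Toy hash function for the second table (illustrative only).
    return (key // SIZE) % SIZE

def lookup(t1, t2, key):
    # A key can only ever live in one of its two candidate cells,
    # so at most two cells are inspected.
    return t1[h1(key)] == key or t2[h2(key)] == key

def delete(t1, t2, key):
    # Blank whichever candidate cell holds the key; no tombstones needed.
    for table, idx in ((t1, h1(key)), (t2, h2(key))):
        if table[idx] == key:
            table[idx] = None
            return True
    return False
```

Both functions touch a fixed number of cells regardless of how many keys are stored, which is where the worst-case constant bound comes from.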

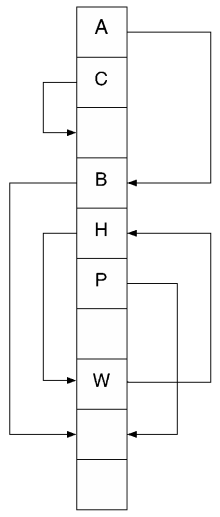

When a new key is inserted and one of its two cells is empty, it may be placed in that cell. However, when both cells are already full, other keys must be moved to their second locations (or back to their first locations) to make room for the new key. A greedy algorithm is used: the new key is inserted in one of its two possible locations, "kicking out" (that is, displacing) any key that already resides there. The displaced key is then inserted in its alternative location, again kicking out any key that resides there. The process continues in the same way until an empty position is found, completing the algorithm. However, this insertion process can fail, either by entering an infinite loop or by producing a very long chain of displacements (longer than a preset threshold that is logarithmic in the table size). In this case, the hash table is rebuilt in place using new hash functions:

"There is no need to allocate new tables for the rehashing: We may simply run through the tables to delete and perform the usual insertion procedure on all keys found not to be at their intended position in the table." (Pagh & Rodler, "Cuckoo Hashing"[1])
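The insertion procedure described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: the class name `CuckooHashSet`, the salted use of Python's built-in `hash`, the constant in the kick-out threshold, and the `max_rebuilds` limit are all choices made for this example. For simplicity the sketch rebuilds into freshly allocated tables rather than in place as the quotation describes.

```python
import random

class CuckooHashSet:
    """Two-table cuckoo hash set (sketch). None cannot be stored as a key."""

    def __init__(self, capacity=64, seed=0):
        self._capacity = capacity                      # cells per table
        self._rng = random.Random(seed)
        # Kick-out threshold, logarithmic in the table size (constant assumed).
        self._max_kicks = 8 * capacity.bit_length()
        self._new_tables()

    def _new_tables(self):
        # Empty tables plus two fresh salts, i.e. two new hash functions.
        self._tables = [[None] * self._capacity for _ in range(2)]
        self._salts = [self._rng.getrandbits(64) for _ in range(2)]

    def _index(self, which, key):
        return hash((self._salts[which], key)) % self._capacity

    def contains(self, key):
        return any(self._tables[w][self._index(w, key)] == key for w in (0, 1))

    def _place(self, key):
        """Greedy insertion. Returns None on success, else the homeless key."""
        which = 0
        for _ in range(self._max_kicks):
            idx = self._index(which, key)
            evicted = self._tables[which][idx]
            self._tables[which][idx] = key             # kick out the occupant
            if evicted is None:
                return None
            key, which = evicted, 1 - which            # retry in the other table
        return key

    def insert(self, key, max_rebuilds=100):
        if self.contains(key):
            return
        homeless = self._place(key)
        if homeless is None:
            return
        # Insertion failed: collect every key and rebuild with new hash functions.
        keys = [k for t in self._tables for k in t if k is not None] + [homeless]
        for _ in range(max_rebuilds):
            self._new_tables()
            if all(self._place(k) is None for k in keys):
                return
        raise RuntimeError("rebuild kept failing; the table is probably too full")
```

After a failed `_place` call exactly one key is left without a cell, so the rebuild only needs the keys currently stored plus that one.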

Theory

Insertions succeed in expected constant time,[1] even considering the possibility of having to rebuild the table, as long as the number of keys is kept below half of the capacity of the hash table, i.e., the load factor is below 50%.

One method of proving this uses the theory of random graphs: one may form an undirected graph called the "cuckoo graph" that has a vertex for each hash table location, and an edge for each hashed value, with the endpoints of the edge being the two possible locations of the value. Then, the greedy insertion algorithm for adding a set of values to a cuckoo hash table succeeds if and only if the cuckoo graph for this set of values is a pseudoforest, a graph with at most one cycle in each of its connected components. Any vertex-induced subgraph with more edges than vertices corresponds to a set of keys for which there are an insufficient number of slots in the hash table. When the hash function is chosen randomly, the cuckoo graph is a random graph in the Erdős–Rényi model. With high probability, for a random graph in which the ratio of the number of edges to the number of vertices is bounded below 1/2, the graph is a pseudoforest and the cuckoo hashing algorithm succeeds in placing all keys. Moreover, the same theory also proves that the expected size of a connected component of the cuckoo graph is small, ensuring that each insertion takes constant expected time.[2]
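The pseudoforest condition can be checked directly with a union-find structure, as in the following Python sketch. The function names `cuckoo_edges` and `is_pseudoforest` are hypothetical and chosen for this example; vertices are labelled by table and cell index, assuming the two-table variant.

```python
def cuckoo_edges(keys, h1, h2):
    # One edge per key, joining its two candidate cells.
    return [(("T1", h1(k)), ("T2", h2(k))) for k in keys]

def is_pseudoforest(edges):
    """True if every connected component contains at most one cycle."""
    parent = {}
    has_cycle = {}

    def find(v):
        parent.setdefault(v, v)
        has_cycle.setdefault(v, False)
        while parent[v] != v:
            parent[v] = parent[parent[v]]   # path halving
            v = parent[v]
        return v

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru == rv:
            # The edge closes a cycle inside one component.
            if has_cycle[ru]:
                return False                # second cycle: more edges than vertices
            has_cycle[ru] = True
        else:
            # Merging two components that each contain a cycle also fails.
            if has_cycle[ru] and has_cycle[rv]:
                return False
            parent[ru] = rv
            has_cycle[rv] = has_cycle[ru] or has_cycle[rv]
    return True
```

For instance, three keys whose edges form a triangle on three cells can all be placed, whereas three keys that share the same two cells cannot: the component then has three edges but only two vertices.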

Example

The following hash functions are given (the listed values are consistent with h(k) = k mod 11 and h'(k) = ⌊k/11⌋ mod 11):

| k | h(k) | h'(k) |
|---|---|---|
| 20 | 9 | 1 |
| 50 | 6 | 4 |
| 53 | 9 | 4 |
| 75 | 9 | 6 |
| 100 | 1 | 9 |
| 67 | 1 | 6 |
| 105 | 6 | 9 |
| 3 | 3 | 0 |
| 36 | 3 | 3 |
| 39 | 6 | 3 |

Columns in the following two tables show the state of the hash tables over time as the elements are inserted.

1. table for h(k) (column headings are the keys in insertion order; rows are cell indices)

| Cell | 20 | 50 | 53 | 75 | 100 | 67 | 105 | 3 | 36 | 39 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | | | | | | | | | | |
| 1 | | | | | 100 | 67 | 67 | 67 | 67 | 100 |
| 2 | | | | | | | | | | |
| 3 | | | | | | | | 3 | 36 | 36 |
| 4 | | | | | | | | | | |
| 5 | | | | | | | | | | |
| 6 | | 50 | 50 | 50 | 50 | 50 | 105 | 105 | 105 | 50 |
| 7 | | | | | | | | | | |
| 8 | | | | | | | | | | |
| 9 | 20 | 20 | 53 | 75 | 75 | 75 | 53 | 53 | 53 | 75 |

2. table for h'(k) (column headings are the keys in insertion order; rows are cell indices)

| Cell | 20 | 50 | 53 | 75 | 100 | 67 | 105 | 3 | 36 | 39 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | | | | | | | | | 3 | 3 |
| 1 | | | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 |
| 2 | | | | | | | | | | |
| 3 | | | | | | | | | | 39 |
| 4 | | | | 53 | 53 | 53 | 50 | 50 | 50 | 53 |
| 5 | | | | | | | | | | |
| 6 | | | | | | | 75 | 75 | 75 | 67 |
| 7 | | | | | | | | | | |
| 8 | | | | | | | | | | |
| 9 | | | | | | 100 | 100 | 100 | 100 | 105 |
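The insertion sequence tabulated above can be replayed with a short Python sketch. The formulas for `h1` and `h2` are an assumption: k mod 11 and ⌊k/11⌋ mod 11 reproduce every listed value of h(k) and h'(k), but other functions could do so as well. The table size of 11 cells and the `max_kicks` limit follow from that assumption and are likewise illustrative.

```python
SIZE = 11  # cells per table, assuming hash values taken modulo 11

def h1(k):
    return k % SIZE            # matches the h(k) column (assumed formula)

def h2(k):
    return (k // SIZE) % SIZE  # matches the h'(k) column (assumed formula)

def insert(tables, key, max_kicks=32):
    """Greedy cuckoo insertion; returns True once an empty cell is found."""
    hashes = (h1, h2)
    which = 0                              # always start in the first table
    for _ in range(max_kicks):
        idx = hashes[which](key)
        evicted = tables[which][idx]
        tables[which][idx] = key
        if evicted is None:
            return True
        key, which = evicted, 1 - which    # displaced key tries the other table
    return False

tables = [[None] * SIZE, [None] * SIZE]
for k in [20, 50, 53, 75, 100, 67, 105, 3, 36, 39]:
    insert(tables, k)

# Occupied cells after the last insertion, as {cell index: key}.
final = [{i: k for i, k in enumerate(t) if k is not None} for t in tables]
```

After the loop, `final[0]` is `{1: 100, 3: 36, 6: 50, 9: 75}` and `final[1]` is `{0: 3, 1: 20, 3: 39, 4: 53, 6: 67, 9: 105}`, matching the last column of each table.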

Cycle

Attempting to insert the element 6 next leads to a cycle: the last row of the following table shows the same situation as the first row.

| considered key | table 1: old value | table 1: new value | table 2: old value | table 2: new value |
|---|---|---|---|---|
| 6 | 50 | 6 | 53 | 50 |
| 53 | 75 | 53 | 67 | 75 |
| 67 | 100 | 67 | 105 | 100 |
| 105 | 6 | 105 | 3 | 6 |
| 3 | 36 | 3 | 39 | 36 |
| 39 | 105 | 39 | 100 | 105 |
| 100 | 67 | 100 | 75 | 67 |
| 75 | 53 | 75 | 50 | 53 |
| 50 | 39 | 50 | 36 | 39 |
| 36 | 3 | 36 | 6 | 3 |
| 6 | 50 | 6 | 53 | 50 |
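A counting argument explains why no amount of shuffling can resolve this cycle: the eleven keys compete for only ten distinct cells. The short Python check below illustrates this, assuming (as the listed hash values suggest, though the text does not state it) that h(k) = k mod 11 and h'(k) = ⌊k/11⌋ mod 11.

```python
def h1(k):
    return k % 11            # assumed formula for h(k)

def h2(k):
    return (k // 11) % 11    # assumed formula for h'(k)

keys = [20, 50, 53, 75, 100, 67, 105, 3, 36, 39, 6]

# Every cell that any of the keys may occupy, tagged with its table number.
candidate_cells = {(1, h1(k)) for k in keys} | {(2, h2(k)) for k in keys}

print(len(keys), "keys,", len(candidate_cells), "candidate cells")
# prints "11 keys, 10 candidate cells"
```

Because each cell holds at most one key, at least one key is always left over, and the table has to be rebuilt with new hash functions.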

Variations

Several variations of cuckoo hashing have been studied, primarily with the aim of improving its space usage by increasing the load factor that it can tolerate to a number greater than the 50% threshold of the basic algorithm. Some of these methods can also be used to reduce the failure rate of cuckoo hashing, causing rebuilds of the data structure to be much less frequent.

Generalizations of cuckoo hashing that use more than two alternative hash functions can be expected to utilize a larger part of the capacity of the hash table efficiently while sacrificing some lookup and insertion speed. Using just three hash functions increases the achievable load factor to 91%.[3] Another generalization of cuckoo hashing, called blocked cuckoo hashing, consists of storing more than one key per bucket. Using just 2 keys per bucket permits a load factor above 80%.[4]
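Blocked cuckoo hashing with two keys per bucket might be sketched in Python as follows. All names here (`SIZE`, `BUCKET_SIZE`, `HASHES`, `insert`, `lookup`) are hypothetical, the hash functions are toy choices matching the values in the Example section, and evicting the oldest key of a full bucket is just one possible eviction policy.

```python
SIZE = 11        # buckets per table (assumed)
BUCKET_SIZE = 2  # keys per bucket
HASHES = (lambda k: k % SIZE, lambda k: (k // SIZE) % SIZE)  # toy hash functions

def new_tables():
    return [[[] for _ in range(SIZE)] for _ in range(2)]

def insert(tables, key, max_kicks=32):
    """Return None on success, otherwise the key left without a bucket."""
    which = 0
    for _ in range(max_kicks):
        # Use any candidate bucket that still has a free slot.
        for t in (0, 1):
            bucket = tables[t][HASHES[t](key)]
            if len(bucket) < BUCKET_SIZE:
                bucket.append(key)
                return None
        # Both buckets are full: evict the oldest key from one of them
        # and continue with the evicted key, alternating between tables.
        bucket = tables[which][HASHES[which](key)]
        bucket.append(key)
        key = bucket.pop(0)
        which = 1 - which
    return key

def lookup(tables, key):
    # Still only two buckets to inspect, each of constant size.
    return any(key in tables[t][HASHES[t](key)] for t in (0, 1))
```

With these toy hash functions, the eleven keys 20, 50, 53, 75, 100, 67, 105, 3, 36, 39 and 6 from the Example and Cycle sections all fit without a single eviction, because each contested cell now has room for two keys.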

Another variation of cuckoo hashing that has been studied is cuckoo hashing with a stash. The stash, in this data structure, is an array of a constant number of keys, used to store keys that cannot successfully be inserted into the main hash table of the structure. This modification reduces the failure rate of cuckoo hashing to an inverse-polynomial function with an exponent that can be made arbitrarily large by increasing the stash size. However, larger stashes also mean slower searches for keys that are not present or are in the stash. A stash can be used in combination with more than two hash functions or with blocked cuckoo hashing to achieve both high load factors and small failure rates.[5] The analysis of cuckoo hashing with a stash extends to practical hash functions, not just to the random hash function model commonly used in theoretical analysis of hashing.[6]
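The role of the stash can be illustrated with a small Python sketch. The class name `StashedCuckoo`, its parameters, and the toy hash functions (chosen to match the values in the Example section) are assumptions made for this illustration; a real implementation would rebuild the table when the stash overflows rather than raise an error.

```python
SIZE = 11  # cells per table (assumed)
HASHES = (lambda k: k % SIZE, lambda k: (k // SIZE) % SIZE)  # toy hash functions

class StashedCuckoo:
    def __init__(self, stash_size=4, max_kicks=32):
        self.tables = [[None] * SIZE, [None] * SIZE]
        self.stash = []                 # constant-size overflow area
        self.stash_size = stash_size
        self.max_kicks = max_kicks

    def insert(self, key):
        which = 0
        for _ in range(self.max_kicks):
            idx = HASHES[which](key)
            evicted = self.tables[which][idx]
            self.tables[which][idx] = key
            if evicted is None:
                return
            key, which = evicted, 1 - which
        # Greedy insertion gave up: park the homeless key in the stash.
        self.stash.append(key)
        if len(self.stash) > self.stash_size:
            raise RuntimeError("stash overflow; a rebuild would be needed")

    def contains(self, key):
        # Two table cells first, then a linear scan of the small stash.
        in_tables = any(self.tables[w][HASHES[w](key)] == key for w in (0, 1))
        return in_tables or key in self.stash

table = StashedCuckoo()
for k in [20, 50, 53, 75, 100, 67, 105, 3, 36, 39, 6]:
    table.insert(k)
```

Inserting 6 last triggers the cycle from the Cycle section, so exactly one key ends up in the stash and all eleven keys remain retrievable without a rebuild.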

A simplified generalization of cuckoo hashing called skewed-associative cache has been recommended for use in some CPU caches.[7]

Another variation of a cuckoo hash table, called a cuckoo filter, replaces the stored keys of a cuckoo hash table with much shorter fingerprints, computed by applying another hash function to the keys. In order to allow these fingerprints to be moved around within the cuckoo filter, without knowing the keys that they came from, the two locations of each fingerprint may be computed from each other by a bitwise exclusive or operation with the fingerprint, or with a hash of the fingerprint. This data structure forms an approximate set membership data structure with much the same properties as a Bloom filter: it can store the members of a set of keys, and test whether a query key is a member, with some chance of false positives (queries that are incorrectly reported as being part of the set) but no false negatives. However, it improves on a Bloom filter in multiple respects: its memory usage is smaller by a constant factor, it has better locality of reference, and (unlike Bloom filters) it allows for fast deletion of set elements with no additional storage penalty.[8]
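The way the two locations of a fingerprint are derived from each other can be sketched in Python. The helper names, the 8-bit fingerprint width, the bucket count, and the use of SHA-256 as the hash are all assumptions made for this example. The sketch uses the variant that XORs the index with a hash of the fingerprint, and it relies on the number of buckets being a power of two.

```python
import hashlib

NUM_BUCKETS = 64  # must be a power of two for the XOR trick to stay in range

def _hash(data: bytes) -> int:
    # 64-bit integer derived from SHA-256 (any good hash would do).
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")

def fingerprint(key: bytes) -> int:
    # 8-bit fingerprint in 1..255, so that 0 could mark an empty slot.
    return _hash(b"fp:" + key) % 255 + 1

def first_index(key: bytes) -> int:
    return _hash(key) % NUM_BUCKETS

def alt_index(index: int, fp: int) -> int:
    # XOR with a hash of the fingerprint; applying this twice returns
    # the original index, so no access to the key itself is needed.
    return (index ^ _hash(fp.to_bytes(1, "big"))) % NUM_BUCKETS

key = b"example-key"
fp = fingerprint(key)
i1 = first_index(key)
i2 = alt_index(i1, fp)
```

Since `alt_index(i2, fp)` yields `i1` again, a stored fingerprint can be relocated during insertion knowing only its current bucket and its own value.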

Comparison with related structures

A study by Zukowski et al.[9] has shown that cuckoo hashing is much faster than chained hashing for small, cache-resident hash tables on modern processors. Kenneth Ross[10] has shown bucketized versions of cuckoo hashing (variants that use buckets containing more than one key) to be faster than conventional methods for large hash tables as well, when space utilization is high. The performance of the bucketized cuckoo hash table was investigated further by Askitis,[11] who compared it against alternative hashing schemes.

A survey by Mitzenmacher[3] presents open problems related to cuckoo hashing as of 2009.

See also

- Perfect hashing

- Linear probing

- Double hashing

- Hash collision

- Hash function

- Quadratic probing

- Hopscotch hashing

References

- [1] Pagh, Rasmus; Rodler, Flemming Friche (2001). "Cuckoo Hashing". Algorithms — ESA 2001. Lecture Notes in Computer Science. 2161. pp. 121–133. ISBN 978-3-540-42493-2. doi:10.1007/3-540-44676-1_10.

- [2] Kutzelnigg, Reinhard (2006). Bipartite random graphs and cuckoo hashing (PDF). Fourth Colloquium on Mathematics and Computer Science. Discrete Mathematics and Theoretical Computer Science. AG. pp. 403–406.

- [3] Mitzenmacher, Michael (2009-09-09). "Some Open Questions Related to Cuckoo Hashing | Proceedings of ESA 2009" (PDF). Retrieved 2010-11-10.

- [4] Dietzfelbinger, Martin; Weidling, Christoph (2007), "Balanced allocation and dictionaries with tightly packed constant size bins", Theoret. Comput. Sci., 380 (1-2): 47–68, MR 2330641, doi:10.1016/j.tcs.2007.02.054.

- [5] Kirsch, Adam; Mitzenmacher, Michael D.; Wieder, Udi (2010), "More robust hashing: cuckoo hashing with a stash", SIAM J. Comput., 39 (4): 1543–1561, MR 2580539, doi:10.1137/080728743.

- [6] Aumüller, Martin; Dietzfelbinger, Martin; Woelfel, Philipp (2014), "Explicit and efficient hash families suffice for cuckoo hashing with a stash", Algorithmica, 70 (3): 428–456, MR 3247374, doi:10.1007/s00453-013-9840-x.

- [7] "Micro-Architecture".

- [8] Fan, Bin; Andersen, Dave G.; Kaminsky, Michael; Mitzenmacher, Michael D. (2014), "Cuckoo filter: Practically better than Bloom", Proc. 10th ACM Int. Conf. Emerging Networking Experiments and Technologies (CoNEXT '14), pp. 75–88, doi:10.1145/2674005.2674994.

- [9] Zukowski, Marcin; Heman, Sandor; Boncz, Peter (June 2006). "Architecture-Conscious Hashing" (PDF). Proceedings of the International Workshop on Data Management on New Hardware (DaMoN). Retrieved 2008-10-16.

- [10] Ross, Kenneth (2006-11-08). "Efficient Hash Probes on Modern Processors" (PDF). IBM Research Report RC24100. Retrieved 2008-10-16.

- [11] Askitis, Nikolas (2009). Fast and Compact Hash Tables for Integer Keys (PDF). Proceedings of the 32nd Australasian Computer Science Conference (ACSC 2009). 91. pp. 113–122. ISBN 978-1-920682-72-9.

External links

- A cool and practical alternative to traditional hash tables, U. Erlingsson, M. Manasse, F. McSherry, 2006.

- Cuckoo Hashing for Undergraduates, R. Pagh, 2006.

- Cuckoo Hashing, Theory and Practice (Part 1, Part 2 and Part 3), Michael Mitzenmacher, 2007.

- Naor, Moni; Segev, Gil; Wieder, Udi (2008). "History-Independent Cuckoo Hashing". International Colloquium on Automata, Languages and Programming (ICALP). Reykjavik, Iceland. Retrieved 2008-07-21.

- Algorithmic Improvements for Fast Concurrent Cuckoo Hashing, X. Li, D. Andersen, M. Kaminsky, M. Freedman. EuroSys 2014.

Examples

- Concurrent high-performance Cuckoo hashtable written in C++

- Cuckoo hash map written in C++

- Static cuckoo hashtable generator for C/C++

- Generic Cuckoo hashmap in Java

- Cuckoo hash table written in Haskell

- Cuckoo hashing for Go