Adversarial machine learning

Adversarial machine learning is a research field that lies at the intersection of machine learning and computer security. It aims to enable the safe adoption of machine learning techniques in adversarial settings like spam filtering, malware detection and biometric recognition.

The problem arises because machine learning techniques were originally designed for stationary environments, in which the training and test data are assumed to be generated from the same (although possibly unknown) distribution. In the presence of intelligent and adaptive adversaries, however, this working hypothesis is likely to be violated to at least some degree (depending on the adversary). In fact, a malicious adversary can carefully manipulate the input data, exploiting specific vulnerabilities of learning algorithms, to compromise the security of the whole system.

Examples include attacks in spam filtering, where spam messages are obfuscated by misspelling “bad” words or inserting “good” words;[1][2][3][4][5][6][7][8][9][10][11][12] attacks in computer security, e.g., obfuscating malware code within network packets[13] or misleading signature detection;[14] and attacks in biometric recognition, where fake biometric traits may be exploited to impersonate a legitimate user (biometric spoofing)[15] or to compromise users’ template galleries that are adaptively updated over time.[16][17]
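Good-word insertion can be illustrated on a toy linear spam filter. This is a minimal sketch under stated assumptions. The vocabulary, the weights, the threshold and the greedy insertion strategy (the hypothetical `good_word_attack`) are all made up for illustration. The attacker is also assumed to know the filter's weights. The sketch only shows why appending innocuous words can push a linear score below the decision threshold.

```python
# Hypothetical toy spam filter: a linear score over bag-of-words tokens.
# Weights are invented for illustration: positive = spammy, negative = "good".
WEIGHTS = {
    "viagra": 3.0, "free": 1.5, "winner": 2.0,
    "meeting": -1.0, "report": -1.2, "thanks": -0.8, "project": -1.1,
}
THRESHOLD = 0.0  # score > THRESHOLD means the message is classified as spam


def spam_score(words):
    """Sum the weights of known tokens; unknown tokens contribute 0."""
    return sum(WEIGHTS.get(w, 0.0) for w in words)


def is_spam(words):
    return spam_score(words) > THRESHOLD


def good_word_attack(words, max_insertions=10):
    """Greedily append the most negatively weighted ("good") words, cycling
    through them, until the message is no longer flagged or the insertion
    budget runs out. Assumes perfect knowledge of WEIGHTS (an assumption)."""
    good_words = sorted((w for w in WEIGHTS if WEIGHTS[w] < 0), key=WEIGHTS.get)
    attacked = list(words)
    for i in range(max_insertions):
        if not is_spam(attacked):
            break
        attacked.append(good_words[i % len(good_words)])
    return attacked
```

With the message `["free", "viagra", "winner"]` (score 6.5), seven inserted words bring the score below zero. Misspelling acts similarly in this toy model. A token such as `"v1agra"` is not in the vocabulary, so it contributes nothing to the score.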

Security evaluation

To understand the security properties of learning algorithms in adversarial settings, one should address the following main issues:[18][19][20][21]

- identifying potential vulnerabilities of machine learning algorithms during learning and classification;

- devising appropriate attacks that correspond to the identified threats and evaluating their impact on the targeted system;

- proposing countermeasures to improve the security of machine learning algorithms against the considered attacks.

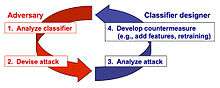

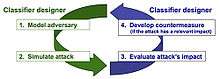

This process amounts to simulating a proactive arms race (instead of a reactive one, as depicted in Figures 1 and 2), in which system designers try to anticipate the adversary in order to find potential vulnerabilities that should be fixed in advance, for instance by means of specific countermeasures such as additional features or different learning algorithms. However, proactive approaches are not necessarily superior to reactive ones. For instance, Barth et al.[22] showed that under some circumstances reactive approaches are more suitable for improving system security.
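For the evaluation step listed above, Biggio et al.[18] summarize the impact of an attack as a security evaluation curve, which plots classifier performance against increasing attack strength. The sketch below computes such a curve under several assumptions:

- the detector is a hypothetical linear model f(x) = w·x + b;
- the attacker has perfect knowledge;
- the attacker may move each malicious sample by at most `eps` in Euclidean distance.

Under these assumptions the optimal move is along -w. The function names, data, weights and budgets are illustrative.

```python
import numpy as np


def evade_linear(X, w, eps):
    """Worst-case L2-bounded evasion of a linear detector f(x) = w.x + b:
    shift each sample by eps along -w/||w||, which lowers its score by
    eps * ||w||, the largest decrease possible within the budget."""
    return X - eps * (w / np.linalg.norm(w))


def security_evaluation_curve(X_mal, w, b, budgets):
    """Detection rate (fraction of malicious samples still scored > 0)
    for each attack budget eps in `budgets`."""
    rates = []
    for eps in budgets:
        scores = evade_linear(X_mal, w, eps) @ w + b
        rates.append(float(np.mean(scores > 0)))
    return rates


# Illustrative usage with synthetic "malicious" samples.
rng = np.random.default_rng(0)
X_mal = rng.normal(loc=2.0, scale=1.0, size=(200, 2))
w, b = np.array([1.0, 1.0]), -1.0
budgets = [0.0, 0.5, 1.0, 2.0, 5.0, 10.0]
rates = security_evaluation_curve(X_mal, w, b, budgets)
```

Plotting `rates` against `budgets` gives the curve. Under these assumptions, a detector whose curve falls more slowly tolerates stronger attacks.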

Attacks against machine learning algorithms (supervised)

The first step of the arms race sketched above is identifying potential attacks against machine learning algorithms. A substantial amount of work has been done in this direction.[18][19][20][21][23][24][25][26][27][28]

A taxonomy of potential attacks against machine learning

Attacks against (supervised) machine learning algorithms have been categorized along three primary axes:[21][23][24] their influence on the classifier, the security violation they cause, and their specificity.

- Attack influence. It can be causative, if the attack aims to introduce vulnerabilities (to be exploited at the classification phase) by manipulating training data; or exploratory, if the attack aims to find and subsequently exploit vulnerabilities at the classification phase.

- Security violation. It can be an integrity violation, if it aims to get malicious samples misclassified as legitimate; or an availability violation, if the goal is to increase the misclassification rate of legitimate samples, making the classifier unusable (e.g., a denial of service).

- Attack specificity. It can be targeted, if specific samples are considered (e.g., the adversary aims to allow a specific intrusion, or wants a given spam email to get past the filter); or indiscriminate, if the attack is directed at a broad class of samples (e.g., any intrusion or any spam email).

This taxonomy has been extended into a more comprehensive threat model. The model makes explicit assumptions about the adversary’s goal, the adversary’s knowledge of the attacked system, and the adversary’s capability of manipulating the input data and/or the system components. It also specifies the corresponding (potentially formally defined) attack strategy. Details can be found in Biggio et al.[18][19] Two of the main attack scenarios identified according to this threat model are sketched below.
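The taxonomy axes and the extended threat model can be encoded as a small data structure. This is a hypothetical encoding. The class and field names, the two-level `Knowledge` scale and the example instances are illustrative assumptions, not the notation used in [18][19]. Loosely following that work, the goal is expressed through violation and specificity, while influence is treated as part of the adversary's capability.

```python
from dataclasses import dataclass
from enum import Enum


class Influence(Enum):
    CAUSATIVE = "manipulates the training data"
    EXPLORATORY = "exploits the trained classifier at the classification phase"


class Violation(Enum):
    INTEGRITY = "malicious samples misclassified as legitimate"
    AVAILABILITY = "legitimate samples misclassified (denial of service)"


class Specificity(Enum):
    TARGETED = "specific samples"
    INDISCRIMINATE = "a broad class of samples"


class Knowledge(Enum):  # hypothetical two-level scale
    PERFECT = "full knowledge of data, features and model"
    LIMITED = "partial knowledge of data, features or model"


@dataclass(frozen=True)
class ThreatModel:
    # Adversary's goal: which security violation, against which samples.
    violation: Violation
    specificity: Specificity
    # Adversary's knowledge of the attacked system.
    knowledge: Knowledge
    # Adversary's capability: training-time (causative) or test-time
    # (exploratory) influence, plus a free-text description of constraints.
    influence: Influence
    capability: str

    def can_manipulate_training_data(self) -> bool:
        return self.influence is Influence.CAUSATIVE


# Illustrative instances of the two scenarios discussed in this section.
EVASION = ThreatModel(Violation.INTEGRITY, Specificity.TARGETED, Knowledge.PERFECT,
                      Influence.EXPLORATORY, "modify malicious samples at test time")
POISONING = ThreatModel(Violation.AVAILABILITY, Specificity.INDISCRIMINATE,
                        Knowledge.LIMITED, Influence.CAUSATIVE,
                        "inject a small fraction of training samples")
```

Making the assumptions explicit fields means that two security evaluations can be compared by checking whether they assumed the same adversary.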

Evasion attacks

Evasion attacks [18][19][25][26][28][29][30] are the most prevalent type of attack that may be encountered in adversarial settings during system operation. For instance, spammers and hackers often attempt to evade detection by obfuscating the content of spam emails and malware code. In the evasion setting, malicious samples are modified at test time to evade detection; that is, to be misclassified as legitimate. No influence over the training data is assumed. A clear example of evasion is image-based spam in which the spam content is embedded within an attached image to evade the textual analysis performed by anti-spam filters. Another example of evasion is given by spoofing attacks against biometric verification systems.[15][16]
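In [29], evasion is cast as an optimization problem. Starting from a malicious sample x0, the attacker looks for a sample x that minimizes the classifier's discriminant function g(x), subject to a bound d(x, x0) ≤ d_max on how far the sample may be modified. The sketch below solves a simplified version of this problem by projected gradient descent, under these assumptions:

- a hypothetical two-prototype RBF score (`PROTOTYPES`, `ALPHAS`, `GAMMA`);
- Euclidean distance;
- perfect knowledge of g;
- an arbitrary step size and iteration count.

It is not the exact formulation or detector used in that work.

```python
import numpy as np

# Hypothetical differentiable detector: an RBF-style score with one
# "malicious" prototype (weight +1) and one "legitimate" prototype (weight -1).
# g(x) > 0 means the sample is flagged as malicious.
PROTOTYPES = np.array([[2.0, 2.0], [-2.0, -2.0]])
ALPHAS = np.array([1.0, -1.0])
GAMMA = 0.5


def score(x):
    sq_dists = np.sum((PROTOTYPES - x) ** 2, axis=1)
    return float(ALPHAS @ np.exp(-GAMMA * sq_dists))


def score_grad(x):
    sq_dists = np.sum((PROTOTYPES - x) ** 2, axis=1)
    k = ALPHAS * np.exp(-GAMMA * sq_dists)  # shape (n_prototypes,)
    # d/dx exp(-gamma * ||x - s||^2) = -2 * gamma * (x - s) * exp(...)
    return -2.0 * GAMMA * (k[:, None] * (x - PROTOTYPES)).sum(axis=0)


def evade(x0, d_max, step=1.0, n_iter=200):
    """Projected gradient descent on g(x) subject to ||x - x0||_2 <= d_max."""
    x0 = np.asarray(x0, dtype=float)
    x = x0.copy()
    for _ in range(n_iter):
        x = x - step * score_grad(x)
        delta = x - x0
        norm = np.linalg.norm(delta)
        if norm > d_max:  # project back onto the L2 ball around x0
            x = x0 + delta * (d_max / norm)
    return x
```

Here the decision boundary is the line x1 + x2 = 0. Starting from x0 = (1.5, 1.5), a budget of d_max = 1 cannot reach it, so the sample stays detected. With d_max = 3 the descent crosses the boundary and the modified sample is scored as legitimate.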

Poisoning attacks

Machine learning algorithms are often retrained on data collected during operation to adapt to changes in the underlying data distribution. For instance, intrusion detection systems (IDSs) are often retrained on a set of samples collected during network operation. In this scenario, an attacker may poison the training data by injecting carefully designed samples that eventually compromise the whole learning process. Poisoning may thus be regarded as an adversarial contamination of the training data. Examples of poisoning attacks against machine learning algorithms (including learning in the presence of worst-case adversarial label flips in the training data) have been reported in the literature.[14][16][18][19][21][23][24][27][31][32][33][34][35][36][37][38]
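The retraining scenario can be illustrated with an online centroid anomaly detector, the kind of model analysed by Kloft and Laskov.[34] A sample is flagged as anomalous if it lies farther than a radius r from the centroid of normal traffic, and accepted samples are folded back into the centroid. This is a simplified sketch under stated assumptions:

- the centroid is a running mean over all accepted samples, with no sliding window;
- the attacker knows the current centroid;
- the names `OnlineCentroidDetector` and `greedy_poisoning` are hypothetical.

The attacker repeatedly injects points just inside the boundary, in the direction of a target, until the centroid has drifted far enough that the target itself is accepted.

```python
import numpy as np


class OnlineCentroidDetector:
    """Flags x as anomalous if ||x - c|| > radius. Accepted samples update
    the centroid c as a running mean (simplifying assumption)."""

    def __init__(self, centroid, radius, n_seen):
        self.c = np.asarray(centroid, dtype=float)
        self.r = float(radius)
        self.n = int(n_seen)

    def is_anomalous(self, x):
        return bool(np.linalg.norm(np.asarray(x, dtype=float) - self.c) > self.r)

    def update(self, x):
        """Retrain on x only if it is currently accepted as normal."""
        x = np.asarray(x, dtype=float)
        if not self.is_anomalous(x):
            self.n += 1
            self.c = self.c + (x - self.c) / self.n


def greedy_poisoning(detector, target, max_points=1000):
    """Inject points just inside the boundary, toward `target`, until the
    target is accepted. Returns the number of injected points, or None if
    the budget is exhausted first."""
    target = np.asarray(target, dtype=float)
    for injected in range(max_points):
        if not detector.is_anomalous(target):
            return injected
        direction = target - detector.c
        direction /= np.linalg.norm(direction)
        detector.update(detector.c + 0.999 * detector.r * direction)
    return max_points if not detector.is_anomalous(target) else None
```

The running mean gives each new point weight 1/n, so every injection moves the centroid less than the previous one. Under this update rule, the number of points needed grows roughly exponentially with the target's distance. Other update rules, such as a finite sliding window, change this trade-off.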

Attacks against clustering algorithms

Clustering algorithms have been increasingly adopted in security applications to find dangerous or illicit activities. For instance, clustering of malware and computer viruses aims to identify and categorize existing malware families. It also aims to generate specific signatures for their detection by anti-virus software or by signature-based intrusion detection systems such as Snort. However, clustering algorithms were not originally devised to deal with deliberate attempts to subvert the clustering process itself. Whether clustering can be safely adopted in such settings thus remains questionable. Preliminary work has reported some vulnerabilities of clustering algorithms.[39][40][41][42][43]
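One way to subvert clustering is to inject a few carefully placed samples that "bridge" two clusters so that they are merged. This strategy has been studied against single-linkage hierarchical clustering.[39] The sketch below assumes a simplified setting:

- single linkage is cut at a fixed distance threshold, so clusters are the connected components of the "within threshold" graph;
- two hypothetical, well-separated malware families live in a 2-D feature space;
- the attacker knows the threshold and places evenly spaced points between the closest members of the two families.

The function names are illustrative.

```python
import itertools
import math


def single_linkage_clusters(points, threshold):
    """Single-linkage clustering cut at `threshold`: two points share a
    cluster iff a chain of points with consecutive distances <= threshold
    connects them. Returns the number of clusters (via union-find)."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j in itertools.combinations(range(len(points)), 2):
        if math.dist(points[i], points[j]) <= threshold:
            parent[find(i)] = find(j)
    return len({find(i) for i in range(len(points))})


def bridge_points(a, b, threshold):
    """Hypothetical attacker: evenly spaced points on the segment a-b, with
    gaps strictly smaller than `threshold`, so that a and b end up linked."""
    n = int(math.dist(a, b) // threshold)
    return [tuple(a[k] + (b[k] - a[k]) * s / (n + 1) for k in range(len(a)))
            for s in range(1, n + 1)]


family_a = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
family_b = [(10.0, 0.0), (10.0, 1.0), (11.0, 0.0)]
bridge = bridge_points((1.0, 0.0), (10.0, 0.0), threshold=1.5)
```

Here the two families form two clusters on their own. Adding the six bridge points collapses them into a single cluster.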

Secure learning in adversarial settings

A number of defense mechanisms against evasion, poisoning and privacy attacks have been proposed in the field of adversarial machine learning, including:

- The definition of secure learning algorithms;[6][7][8][9][44][45][46][47]

- The use of multiple classifier systems;[3][4][5][10][48][49][50]

- The use of randomization or disinformation to mislead the attacker while it acquires knowledge of the system;[4][21][23][24][51]

- The study of privacy-preserving learning;[19][52]

- The ladder algorithm for Kaggle-style competitions;[53]

- Game-theoretic models for adversarial machine learning and data mining.[54][55][56][57]
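The ladder algorithm[53] is compact enough to state in a few lines. A leaderboard releases a new score only when a submission improves on the current best by more than a step size eta, and it releases that score rounded to the nearest multiple of eta. This limits how much an adaptive or adversarial participant can learn about the holdout set from repeated submissions. A minimal sketch, assuming lower loss is better and that each submission's holdout loss has already been computed; the function and parameter names are illustrative.

```python
import math


def ladder(holdout_losses, eta):
    """Scores released by a Ladder-style leaderboard for a sequence of
    submissions, given each submission's holdout loss (lower is better)."""
    best = math.inf
    released = []
    for loss in holdout_losses:
        # Release a new score only if it beats the current one by more than eta.
        if loss < best - eta:
            # Release it rounded to the nearest multiple of eta.
            best = round(loss / eta) * eta
        released.append(best)
    return released


released = ladder([0.30, 0.295, 0.25, 0.249, 0.20], eta=0.01)
```

Here the submissions with losses 0.295 and 0.249 do not change the leaderboard. Each improves on the current best by less than eta, so the participant learns nothing new about the holdout set from them.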

Software

Some software libraries are available, mainly for testing and research purposes.

- AdversariaLib: includes an implementation of the evasion attacks from [29].

- AlfaSVMLib: adversarial label flip attacks against support vector machines.[58]

- Poisoning attacks against support vector machines[32] and attacks against clustering algorithms.[39]

- deep-pwning: a Metasploit for deep learning, which currently includes attacks on deep neural networks using TensorFlow.[59]

Journals, conferences, and workshops

- Machine Learning (journal)

- Journal of Machine Learning Research

- Neural Computation (journal)

- Workshop on Artificial Intelligence and Security (AISec) (co-located with CCS)

- International Conference on Machine Learning (ICML) (conference)

- Neural Information Processing Systems (NIPS) (conference)

Past events

- NIPS 2007 Workshop on Machine Learning in Adversarial Environments for Computer Security

- Special Issue on “Machine Learning in Adversarial Environments” in the journal Machine Learning

- Dagstuhl Perspectives Workshop on “Machine Learning Methods for Computer Security”[60]

- Workshop on Artificial Intelligence and Security (AISec) series


References

- [1] N. Dalvi, P. Domingos, Mausam, S. Sanghai, and D. Verma. “Adversarial classification”. In Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), pages 99–108, Seattle, 2004.

- [2] D. Lowd and C. Meek. “Adversarial learning”. In Proceedings of the Eleventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), pages 641–647, Chicago, IL, 2005. ACM Press.

- [3] B. Biggio, I. Corona, G. Fumera, G. Giacinto, and F. Roli. “Bagging classifiers for fighting poisoning attacks in adversarial classification tasks”. In C. Sansone, J. Kittler, and F. Roli, editors, 10th International Workshop on Multiple Classifier Systems (MCS), volume 6713 of Lecture Notes in Computer Science, pages 350–359. Springer-Verlag, 2011.

- [4] B. Biggio, G. Fumera, and F. Roli. “Adversarial pattern classification using multiple classifiers and randomisation”. In 12th Joint IAPR International Workshop on Structural and Syntactic Pattern Recognition (SSPR 2008), volume 5342 of Lecture Notes in Computer Science, pages 500–509, Orlando, Florida, USA, 2008. Springer-Verlag.

- [5] B. Biggio, G. Fumera, and F. Roli. “Multiple classifier systems for robust classifier design in adversarial environments”. International Journal of Machine Learning and Cybernetics, 1(1):27–41, 2010.

- [6] M. Bruckner, C. Kanzow, and T. Scheffer. “Static prediction games for adversarial learning problems”. J. Mach. Learn. Res., 13:2617–2654, 2012.

- [7] M. Bruckner and T. Scheffer. “Nash equilibria of static prediction games”. In Y. Bengio, D. Schuurmans, J. Lafferty, C. K. I. Williams, and A. Culotta, editors, Advances in Neural Information Processing Systems 22, pages 171–179, 2009.

- [8] M. Bruckner and T. Scheffer. “Stackelberg games for adversarial prediction problems”. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’11, pages 547–555, New York, NY, USA, 2011. ACM.

- [9] A. Globerson and S. T. Roweis. “Nightmare at test time: robust learning by feature deletion”. In W. W. Cohen and A. Moore, editors, Proceedings of the 23rd International Conference on Machine Learning, volume 148, pages 353–360. ACM, 2006.

- [10] A. Kolcz and C. H. Teo. “Feature weighting for improved classifier robustness”. In Sixth Conference on Email and Anti-Spam (CEAS), Mountain View, CA, USA, 2009.

- [11] B. Nelson, M. Barreno, F. J. Chi, A. D. Joseph, B. I. P. Rubinstein, U. Saini, C. Sutton, J. D. Tygar, and K. Xia. “Exploiting machine learning to subvert your spam filter”. In LEET’08: Proceedings of the 1st Usenix Workshop on Large-Scale Exploits and Emergent Threats, pages 1–9, Berkeley, CA, USA, 2008. USENIX Association.

- [12] G. L. Wittel and S. F. Wu. “On attacking statistical spam filters”. In First Conference on Email and Anti-Spam (CEAS), Microsoft Research Silicon Valley, Mountain View, California, 2004.

- [13] P. Fogla, M. Sharif, R. Perdisci, O. Kolesnikov, and W. Lee. “Polymorphic blending attacks”. In USENIX-SS’06: Proc. of the 15th Conf. on USENIX Security Symp., CA, USA, 2006. USENIX Association.

- [14] J. Newsome, B. Karp, and D. Song. “Paragraph: Thwarting signature learning by training maliciously”. In Recent Advances in Intrusion Detection, LNCS, pages 81–105. Springer, 2006.

- [15] R. N. Rodrigues, L. L. Ling, and V. Govindaraju. “Robustness of multimodal biometric fusion methods against spoof attacks”. J. Vis. Lang. Comput., 20(3):169–179, 2009.

- [16] B. Biggio, L. Didaci, G. Fumera, and F. Roli. “Poisoning attacks to compromise face templates”. In 6th IAPR Int’l Conf. on Biometrics (ICB 2013), pages 1–7, Madrid, Spain, 2013.

- [17] M. Torkamani and D. Lowd. “Convex Adversarial Collective Classification”. In Proceedings of the 30th International Conference on Machine Learning, pages 642–650, Atlanta, GA, 2013.

- [18] B. Biggio, G. Fumera, and F. Roli. “Security evaluation of pattern classifiers under attack”. IEEE Transactions on Knowledge and Data Engineering, 26(4):984–996, 2014.

- [19] B. Biggio, I. Corona, B. Nelson, B. Rubinstein, D. Maiorca, G. Fumera, G. Giacinto, and F. Roli. “Security evaluation of support vector machines in adversarial environments”. In Y. Ma and G. Guo, editors, Support Vector Machines Applications, pages 105–153. Springer, 2014.

- [20] B. Biggio, G. Fumera, and F. Roli. “Pattern recognition systems under attack: Design issues and research challenges”. Int’l J. Patt. Recogn. Artif. Intell., 28(7):1460002, 2014.

- [21] L. Huang, A. D. Joseph, B. Nelson, B. Rubinstein, and J. D. Tygar. “Adversarial machine learning”. In 4th ACM Workshop on Artificial Intelligence and Security (AISec 2011), pages 43–57, Chicago, IL, USA, October 2011.

- [22] A. Barth, B. I. P. Rubinstein, M. Sundararajan, J. C. Mitchell, D. Song, and P. L. Bartlett. “A learning-based approach to reactive security”. IEEE Transactions on Dependable and Secure Computing, 9(4):482–493, 2012.

- [23] M. Barreno, B. Nelson, R. Sears, A. D. Joseph, and J. D. Tygar. “Can machine learning be secure?” In ASIACCS ’06: Proceedings of the 2006 ACM Symposium on Information, Computer and Communications Security, pages 16–25, New York, NY, USA, 2006. ACM.

- [24] M. Barreno, B. Nelson, A. Joseph, and J. Tygar. “The security of machine learning”. Machine Learning, 81:121–148, 2010.

- [25] Y. Vorobeychik and B. Li. “Optimal randomized classification in adversarial settings”. In Proceedings of the 2014 International Conference on Autonomous Agents and Multi-agent Systems, AAMAS ’14, pages 485–492, Richland, SC, 2014. International Foundation for Autonomous Agents and Multiagent Systems. ISBN 9781450327381.

- [26] B. Li and Y. Vorobeychik. “Feature cross-substitution in adversarial classification”. In Proceedings of the 27th International Conference on Neural Information Processing Systems, NIPS ’14, pages 2087–2095, Cambridge, MA, USA, 2014. MIT Press.

- [27] B. Li, Y. Wang, A. Singh, and Y. Vorobeychik. In D. D. Lee, M. Sugiyama, U. V. Luxburg, I. Guyon, and R. Garnett, editors, Advances in Neural Information Processing Systems 29, pages 1885–1893. Curran Associates, Inc., 2016.

- [28] B. Li, Y. Vorobeychik, and X. Chen. “A general retraining framework for scalable adversarial classification”. 2016.

- [29] B. Biggio, I. Corona, D. Maiorca, B. Nelson, N. Srndic, P. Laskov, G. Giacinto, and F. Roli. “Evasion attacks against machine learning at test time”. In H. Blockeel, K. Kersting, S. Nijssen, and F. Zelezny, editors, European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), Part III, volume 8190 of Lecture Notes in Computer Science, pages 387–402. Springer Berlin Heidelberg, 2013.

- [30] B. Nelson, B. I. Rubinstein, L. Huang, A. D. Joseph, S. J. Lee, S. Rao, and J. D. Tygar. “Query strategies for evading convex-inducing classifiers”. J. Mach. Learn. Res., 13:1293–1332, 2012.

- [31] B. Biggio, B. Nelson, and P. Laskov. “Support vector machines under adversarial label noise”. In Journal of Machine Learning Research - Proc. 3rd Asian Conf. Machine Learning, volume 20, pages 97–112, 2011.

- [32] B. Biggio, B. Nelson, and P. Laskov. “Poisoning attacks against support vector machines”. In J. Langford and J. Pineau, editors, 29th Int’l Conf. on Machine Learning. Omnipress, 2012.

- [33] M. Kloft and P. Laskov. “Online anomaly detection under adversarial impact”. In Proceedings of the 13th International Conference on Artificial Intelligence and Statistics (AISTATS), pages 405–412, 2010.

- [34] M. Kloft and P. Laskov. “Security analysis of online centroid anomaly detection”. Journal of Machine Learning Research, 13:3647–3690, 2012.

- [35] P. Laskov and M. Kloft. “A framework for quantitative security analysis of machine learning”. In AISec ’09: Proceedings of the 2nd ACM Workshop on Security and Artificial Intelligence, pages 1–4, New York, NY, USA, 2009. ACM.

- [36] H. Xiao, H. Xiao, and C. Eckert. “Adversarial label flips attack on support vector machines”. In 20th European Conference on Artificial Intelligence, 2012.

- [37] B. I. P. Rubinstein, B. Nelson, L. Huang, A. D. Joseph, S.-h. Lau, S. Rao, N. Taft, and J. D. Tygar. “Antidote: understanding and defending against poisoning of anomaly detectors”. In Proceedings of the 9th ACM SIGCOMM Conference on Internet Measurement, IMC ’09, pages 1–14, New York, NY, USA, 2009. ACM.

- [38] B. Nelson, B. Biggio, and P. Laskov. “Understanding the risk factors of learning in adversarial environments”. In 4th ACM Workshop on Artificial Intelligence and Security, AISec ’11, pages 87–92, Chicago, IL, USA, October 2011.

- [39] B. Biggio, I. Pillai, S. R. Bulò, D. Ariu, M. Pelillo, and F. Roli. “Is data clustering in adversarial settings secure?” In Proceedings of the 2013 ACM Workshop on Artificial Intelligence and Security, AISec ’13, pages 87–98, New York, NY, USA, 2013. ACM.

- [40] J. G. Dutrisac and D. Skillicorn. “Hiding clusters in adversarial settings”. In IEEE International Conference on Intelligence and Security Informatics (ISI 2008), pages 185–187, 2008.

- [41] D. B. Skillicorn. “Adversarial knowledge discovery”. IEEE Intelligent Systems, 24:54–61, 2009.

- [42] B. Biggio, K. Rieck, D. Ariu, C. Wressnegger, I. Corona, G. Giacinto, and F. Roli. “Poisoning behavioral malware clustering”. In Proc. 2014 Workshop on Artificial Intelligence and Security, AISec ’14, pages 27–36, New York, NY, USA, 2014. ACM.

- [43] B. Biggio, S. R. Bulò, I. Pillai, M. Mura, E. Z. Mequanint, M. Pelillo, and F. Roli. “Poisoning complete-linkage hierarchical clustering”. In P. Franti, G. Brown, M. Loog, F. Escolano, and M. Pelillo, editors, Joint IAPR Int’l Workshop on Structural, Syntactic, and Statistical Pattern Recognition, volume 8621 of Lecture Notes in Computer Science, pages 42–52, Joensuu, Finland, 2014. Springer Berlin Heidelberg.

- [44] O. Dekel, O. Shamir, and L. Xiao. “Learning to classify with missing and corrupted features”. Machine Learning, 81:149–178, 2010.

- [45] M. Grosshans, C. Sawade, M. Bruckner, and T. Scheffer. “Bayesian games for adversarial regression problems”. In Journal of Machine Learning Research - Proc. 30th International Conference on Machine Learning (ICML), volume 28, 2013.

- [46] B. Biggio, G. Fumera, and F. Roli. “Design of robust classifiers for adversarial environments”. In IEEE Int’l Conf. on Systems, Man, and Cybernetics (SMC), pages 977–982, 2011.

- [47] W. Liu and S. Chawla. “Mining adversarial patterns via regularized loss minimization”. Machine Learning, 81(1):69–83, 2010.

- [48] B. Biggio, G. Fumera, and F. Roli. “Evade hard multiple classifier systems”. In O. Okun and G. Valentini, editors, Supervised and Unsupervised Ensemble Methods and Their Applications, volume 245 of Studies in Computational Intelligence, pages 15–38. Springer Berlin / Heidelberg, 2009.

- [49] B. Biggio, G. Fumera, and F. Roli. “Multiple classifier systems for adversarial classification tasks”. In J. A. Benediktsson, J. Kittler, and F. Roli, editors, Proceedings of the 8th International Workshop on Multiple Classifier Systems, volume 5519 of Lecture Notes in Computer Science, pages 132–141. Springer, 2009.

- [50] B. Biggio, G. Fumera, and F. Roli. “Multiple classifier systems under attack”. In N. E. Gayar, J. Kittler, and F. Roli, editors, MCS, Lecture Notes in Computer Science, pages 74–83. Springer, 2010.

- [51] B. Li and Y. Vorobeychik. “Scalable optimization of randomized operational decisions in adversarial classification settings”. In AISTATS, 2015.

- [52] B. I. P. Rubinstein, P. L. Bartlett, L. Huang, and N. Taft. “Learning in a large function space: Privacy-preserving mechanisms for SVM learning”. Journal of Privacy and Confidentiality, 4(1):65–100, 2012.

- [53] A. Blum and M. Hardt. “The Ladder: A reliable leaderboard for machine learning competitions”. 2015.

- [54] M. Kantarcioglu, B. Xi, and C. Clifton. “Classifier evaluation and attribute selection against active adversaries”. Data Min. Knowl. Discov., 22:291–335, January 2011.

- [55] Y. Zhou, M. Kantarcioglu, B. Thuraisingham, and B. Xi. “Adversarial support vector machine learning”. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’12, pages 1059–1067, New York, NY, USA, 2012.

- [56] Y. Zhou, M. Kantarcioglu, and B. M. Thuraisingham. “Sparse Bayesian adversarial learning using relevance vector machine ensembles”. In ICDM, pages 1206–1211, 2012.

- [57] Y. Zhou and M. Kantarcioglu. “Modeling adversarial learning as nested Stackelberg games”. In Advances in Knowledge Discovery and Data Mining: 20th Pacific-Asia Conference, PAKDD 2016, Auckland, New Zealand, April 19–22, 2016.

- [58] H. Xiao, B. Biggio, B. Nelson, H. Xiao, C. Eckert, and F. Roli. “Support vector machines under adversarial label contamination”. Neurocomputing, Special Issue on Advances in Learning with Label Noise, In Press.

- [59] “cchio/deep-pwning”. GitHub. Retrieved 2016-08-08.

- [60] A. D. Joseph, P. Laskov, F. Roli, J. D. Tygar, and B. Nelson. “Machine Learning Methods for Computer Security” (Dagstuhl Perspectives Workshop 12371). Dagstuhl Manifestos, 3(1):1–30, 2013.