Permanent

In linear algebra, the permanent of a square matrix is a function of the matrix similar to the determinant. The permanent, as well as the determinant, is a polynomial in the entries of the matrix.[1] Both permanent and determinant are special cases of a more general function of a matrix called the immanant.

Definition

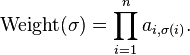

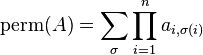

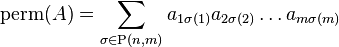

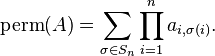

The permanent of an n-by-n matrix A = (ai,j) is defined as

The sum here extends over all elements σ of the symmetric group Sn; i.e. over all permutations of the numbers 1, 2, ..., n.

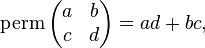

For example,

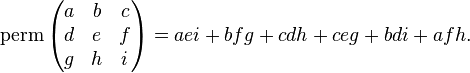

and

The definition of the permanent of A differs from that of the determinant of A in that the signatures of the permutations are not taken into account.

The permanent of a matrix A is denoted per A, perm A, or Per A, sometimes with parentheses around the argument. In his monograph, Minc (1984) uses Per(A) for the permanent of rectangular matrices, and uses per(A) when A is a square matrix. Muir (1882) uses the notation  .

.

The word, permanent, originated with Cauchy in 1812 as “fonctions symétriques permanentes” for a related type of function,[2] and was used by Muir (1882) in the modern, more specific, sense.[3]

Properties and applications

If one views the permanent as a map that takes n vectors as arguments, then it is a multilinear map and it is symmetric (meaning that any order of the vectors results in the same permanent). Furthermore, given a square matrix  of order n, we have:[4]

of order n, we have:[4]

- perm(A) is invariant under arbitrary permutations of the rows and/or columns of A. This property may be written symbolically as perm(A) = perm(PAQ) for any appropriately sized permutation matrices P and Q,

- multiplying any single row or column of A by a scalar s changes perm(A) to s⋅perm(A),

- perm(A) is invariant under transposition, that is, perm(A) = perm(A⊤).

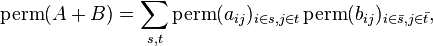

If  and

and  are square matrices of order n then,[5]

are square matrices of order n then,[5]

where s and t are subsets of the same size of {1,2,...,n} and  are their respective complements in that set.

are their respective complements in that set.

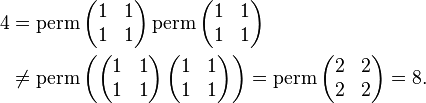

On the other hand, the basic multiplicative property of determinants is not valid for permanents.[6] A simple example shows that this is so.

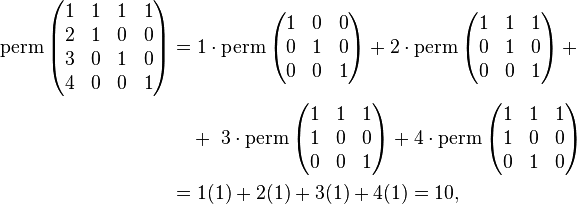

A formula similar to Laplace's for the development of a determinant along a row, column or diagonal is also valid for the permanent;[7] all signs have to be ignored for the permanent. For example, expanding along the first column,

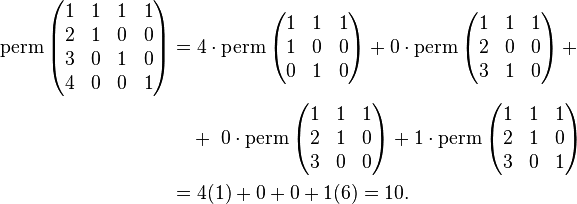

while expanding along the last row gives,

Unlike the determinant, the permanent has no easy geometrical interpretation; it is mainly used in combinatorics and in treating boson Green's functions in quantum field theory. However, it has two graph-theoretic interpretations: as the sum of weights of cycle covers of a directed graph, and as the sum of weights of perfect matchings in a bipartite graph.

Symmetric spaces

The permanent arises naturally in the study of the symmetric tensor power of Hilbert spaces.[8] In particular, for a Hilbert space  , let

, let  denote the

denote the  th symemtric tensor power of

th symemtric tensor power of  , which is the space of symmetric tensors. Note in particular that

, which is the space of symmetric tensors. Note in particular that  is spanned by the Symmetric products of elements in

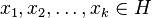

is spanned by the Symmetric products of elements in  . For

. For  , we define the symmetric product of these elements by

, we define the symmetric product of these elements by

If we consider  (as a subspace of

(as a subspace of  , the kth tensor power of

, the kth tensor power of  ) and define the inner product on

) and define the inner product on  accordingly, we find that for

accordingly, we find that for

Applying the Cauchy-Schwarz inequality, we find that ![\operatorname{perm} \left[\langle x_i,x_j \rangle\right]_{i,j = 1}^k \geq 0](../I/m/29ea609cafdb3412fb5729d303e39031.png) , and that

, and that

Cycle covers

Any square matrix  can be viewed as the adjacency matrix of a weighted directed graph, with

can be viewed as the adjacency matrix of a weighted directed graph, with  representing the weight of the arc from vertex i to vertex j.

A cycle cover of a weighted directed graph is a collection of vertex-disjoint directed cycles in the digraph that covers all vertices in the graph. Thus, each vertex i in the digraph has a unique "successor"

representing the weight of the arc from vertex i to vertex j.

A cycle cover of a weighted directed graph is a collection of vertex-disjoint directed cycles in the digraph that covers all vertices in the graph. Thus, each vertex i in the digraph has a unique "successor"  in the cycle cover, and

in the cycle cover, and  is a permutation on

is a permutation on  where n is the number of vertices in the digraph. Conversely, any permutation

where n is the number of vertices in the digraph. Conversely, any permutation  on

on  corresponds to a cycle cover in which there is an arc from vertex i to vertex

corresponds to a cycle cover in which there is an arc from vertex i to vertex  for each i.

for each i.

If the weight of a cycle-cover is defined to be the product of the weights of the arcs in each cycle, then

The permanent of an  matrix A is defined as

matrix A is defined as

where  is a permutation over

is a permutation over  . Thus the permanent of A is equal to the sum of the weights of all cycle-covers of the digraph.

. Thus the permanent of A is equal to the sum of the weights of all cycle-covers of the digraph.

Perfect matchings

A square matrix  can also be viewed as the adjacency matrix of a bipartite graph which has vertices

can also be viewed as the adjacency matrix of a bipartite graph which has vertices  on one side and

on one side and  on the other side, with

on the other side, with  representing the weight of the edge from vertex

representing the weight of the edge from vertex  to vertex

to vertex  . If the weight of a perfect matching

. If the weight of a perfect matching  that matches

that matches  to

to  is defined to be the product of the weights of the edges in the matching, then

is defined to be the product of the weights of the edges in the matching, then

Thus the permanent of A is equal to the sum of the weights of all perfect matchings of the graph.

Permanents of (0,1) matrices

The permanents of matrices that only have 0 and 1 as entries are often the answers to certain counting questions involving the structures that the matrices represent. This is particularly true of adjacency matrices in graph theory and incidence matrices of symmetric block designs.

In an unweighted, directed, simple graph (a digraph), if we set each  to be 1 if there is an edge from vertex i to vertex j, then each nonzero cycle cover has weight 1, and the adjacency matrix has 0-1 entries. Thus the permanent of a (0,1)-matrix is equal to the number of vertex cycle covers of an unweighted directed graph.

to be 1 if there is an edge from vertex i to vertex j, then each nonzero cycle cover has weight 1, and the adjacency matrix has 0-1 entries. Thus the permanent of a (0,1)-matrix is equal to the number of vertex cycle covers of an unweighted directed graph.

For an unweighted bipartite graph, if we set ai,j = 1 if there is an edge between the vertices  and

and  and ai,j = 0 otherwise, then each perfect matching has weight 1. Thus the number of perfect matchings in G is equal to the permanent of matrix A.[9]

and ai,j = 0 otherwise, then each perfect matching has weight 1. Thus the number of perfect matchings in G is equal to the permanent of matrix A.[9]

Let Ω(n,k) be the class of all (0,1)-matrices of order n with each row and column sum equal to k. Every matrix A in this class has perm(A) > 0.[10] The incidence matrices of projective planes are in the class Ω(n2 + n + 1, n + 1) for n an integer > 1. The permanents corresponding to the smallest projective planes have been calculated. For n = 2, 3, and 4 the values are 24, 3852 and 18,534,400 respectively.[10] Let Z be the incidence matrix of the projective plane with n = 2, the Fano plane. Remarkably, perm(Z) = 24 = |det (Z)|, the absolute value of the determinant of Z. This is a consequence of Z being a circulant matrix and the theorem:[11]

- If A is a circulant matrix in the class Ω(n,k) then if k > 3, perm(A) > |det (A)| and if k = 3, perm(A) = |det (A)|. Furthermore, when k = 3, by permuting rows and columns, A can be put into the form of a direct sum of e copies of the matrix Z and consequently, n = 7e and perm(A) = 24e.

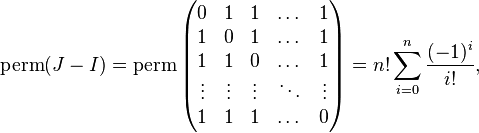

Permanents can also be used to calculate the number of permutations with restricted (prohibited) positions. For the standard n-set, {1,2,...,n}, let  be the (0,1)-matrix where aij = 1 if i → j is allowed in a permutation and aij = 0 otherwise. Then perm(A) counts the number of permutations of the n-set which satisfy all the restrictions.[7] Two well known special cases of this are the solution of the derangement problem (the number of permutations with no fixed points) given by:

be the (0,1)-matrix where aij = 1 if i → j is allowed in a permutation and aij = 0 otherwise. Then perm(A) counts the number of permutations of the n-set which satisfy all the restrictions.[7] Two well known special cases of this are the solution of the derangement problem (the number of permutations with no fixed points) given by:

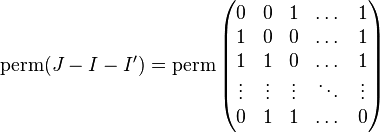

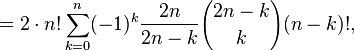

where J is the all 1's matrix and I is the identity matrix, each of order n, and the solution to the ménage problem given by:

where I' is the (0,1)-matrix whose only non-zero entries are on the first superdiagonal.

The Bregman–Minc inequality conjectured by H. Minc in 1963[12] and proved by L. M. Brégman in 1973[13] gives an upper bound for the permanent of an n × n (0,1)-matrix with ri ones in row i, 1 ≤ i ≤ n as

Van der Waerden's conjecture

In 1926 Van der Waerden conjectured that the minimum permanent among all n × n doubly stochastic matrices is n!/nn, achieved by the matrix for which all entries are equal to 1/n.[14] Proofs of this conjecture were published in 1980 by B. Gyires[15] and in 1981 by G. P. Egorychev[16] and D. I. Falikman;[17] Egorychev's proof is an application of the Alexandrov–Fenchel inequality.[18] For this work, Egorychev and Falikman won the Fulkerson Prize in 1982.[19]

Computation

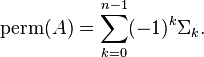

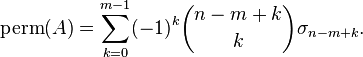

The naïve approach, using the definition, of computing permanents is computationally infeasible even for relatively small matrices. One of the fastest known algorithms is due to H. J. Ryser (Ryser (1963, p. 27)). Ryser’s method is based on an inclusion–exclusion formula that can be given[20] as follows: Let  be obtained from A by deleting k columns, let

be obtained from A by deleting k columns, let  be the product of the row-sums of

be the product of the row-sums of  , and let

, and let  be the sum of the values of

be the sum of the values of  over all possible

over all possible  . Then

. Then

It may be rewritten in terms of the matrix entries as follows:

The permanent is believed to be more difficult to compute than the determinant. While the determinant can be computed in polynomial time by Gaussian elimination, Gaussian elimination cannot be used to compute the permanent. Moreover, computing the permanent of a (0,1)-matrix is #P-complete. Thus, if the permanent can be computed in polynomial time by any method, then FP = #P, which is an even stronger statement than P = NP. When the entries of A are nonnegative, however, the permanent can be computed approximately in probabilistic polynomial time, up to an error of εM, where M is the value of the permanent and ε > 0 is arbitrary.[21]

MacMahon's Master Theorem

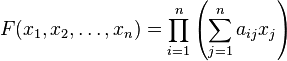

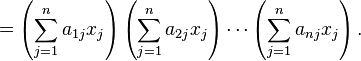

Another way to view permanents is via multivariate generating functions. Let  be a square matrix of order n. Consider the multivariate generating function:

be a square matrix of order n. Consider the multivariate generating function:

The coefficient of  in

in  is perm(A).[22]

is perm(A).[22]

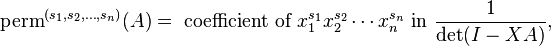

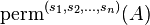

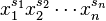

As a generalization, for any sequence of n non-negative integers,  define:

define:

as the coefficient of

as the coefficient of  in

in

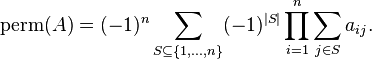

MacMahon's Master Theorem relating permanents and determinants is:[23]

where I is the order n identity matrix and X is the diagonal matrix with diagonal ![[x_1,x_2,\dots,x_n].](../I/m/5c9104c6ae25d858a087f47e1f406094.png)

Permanents of rectangular matrices

The permanent function can be generalized to apply to non-square matrices. Indeed, several authors make this the definition of a permanent and consider the restriction to square matrices a special case.[24] Specifically, for an m × n matrix  with m ≤ n, define

with m ≤ n, define

where P(n,m) is the set of all m-permutations of the n-set {1,2,...,n}.[25]

Ryser's computational result for permanents also generalizes. If A is an m × n matrix with m ≤ n, let  be obtained from A by deleting k columns, let

be obtained from A by deleting k columns, let  be the product of the row-sums of

be the product of the row-sums of  , and let

, and let  be the sum of the values of

be the sum of the values of  over all possible

over all possible  . Then

. Then

Systems of distinct representatives

The generalization of the definition of a permanent to non-square matrices allows the concept to be used in a more natural way in some applications. For instance:

Let S1, S2, ..., Sm be subsets (not necessarily distinct) of an n-set with m ≤ n. The incidence matrix of this collection of subsets is an m × n (0,1)-matrix A. The number of systems of distinct representatives (SDR's) of this collection is perm(A).[26]

See also

- Computing the permanent

- Determinant

- Bapat–Beg theorem, an application of permanent in order statistics

Notes

- ↑ Marcus, Marvin; Minc, Henryk (1965). "Permanents". Amer. Math. Monthly 72: 577–591. doi:10.2307/2313846.

- ↑ Cauchy, A. L. (1815), "Mémoire sur les fonctions qui ne peuvent obtenir que deux valeurs égales et de signes contraires par suite des transpositions opérées entre les variables qu’elles renferment.", Journal de l'École Polytechnique 10: 91–169

- ↑ van Lint & Wilson 2001, p. 108

- ↑ Ryser 1963, pp. 25 – 26

- ↑ Percus 1971, p. 2

- 1 2 Ryser 1963, p. 26

- 1 2 Percus 1971, p. 12

- ↑ Bhatia, Rajendra (1997). Matrix Analysis. New York: Springer-Verlag. pp. 16–19. ISBN 0-387-94846-5.

- ↑ Dexter Kozen. The Design and Analysis of Algorithms. Springer-Verlag, New York, 1991. ISBN 978-0-387-97687-7; pp. 141–142

- 1 2 Ryser 1963, p. 124

- ↑ Ryser 1963, p. 125

- ↑ Minc, Henryk (1963), "Upper bounds for permanents of (0,1)-matrices", Bulletin of the American Mathematical Society 69: 789–791, doi:10.1090/s0002-9904-1963-11031-9

- ↑ van Lint & Wilson 2001, p. 101

- ↑ van der Waerden, B. L. (1926), "Aufgabe 45", Jber. Deutsch. Math.-Verein. 35: 117.

- ↑ Gyires, B. (1980), "The common source of several inequalities concerning doubly stochastic matrices", Publicationes Mathematicae Institutum Mathematicum Universitatis Debreceniensis 27 (3-4): 291–304, MR 604006.

- ↑ Egoryčev, G. P. (1980), Reshenie problemy van-der-Vardena dlya permanentov (in Russian), Krasnoyarsk: Akad. Nauk SSSR Sibirsk. Otdel. Inst. Fiz., p. 12, MR 602332. Egorychev, G. P. (1981), "Proof of the van der Waerden conjecture for permanents", Akademiya Nauk SSSR (in Russian) 22 (6): 65–71, 225, MR 638007. Egorychev, G. P. (1981), "The solution of van der Waerden's problem for permanents", Advances in Mathematics 42 (3): 299–305, doi:10.1016/0001-8708(81)90044-X, MR 642395.

- ↑ Falikman, D. I. (1981), "Proof of the van der Waerden conjecture on the permanent of a doubly stochastic matrix", Akademiya Nauk Soyuza SSR (in Russian) 29 (6): 931–938, 957, MR 625097.

- ↑ Brualdi (2006) p.487

- ↑ Fulkerson Prize, Mathematical Optimization Society, retrieved 2012-08-19.

- ↑ van Lint & Wilson (2001) p. 99

- ↑ Jerrum, M.; Sinclair, A.; Vigoda, E. (2004), "A polynomial-time approximation algorithm for the permanent of a matrix with nonnegative entries", Journal of the ACM 51: 671–697, doi:10.1145/1008731.1008738

- ↑ Percus 1971, p. 14

- ↑ Percus 1971, p. 17

- ↑ In particular, Minc (1984) and Ryser (1963) do this.

- ↑ Ryser 1963, p. 25

- ↑ Ryser 1963, p. 54

References

- Brualdi, Richard A. (2006). Combinatorial matrix classes. Encyclopedia of Mathematics and Its Applications 108. Cambridge: Cambridge University Press. ISBN 0-521-86565-4. Zbl 1106.05001.

- Minc, Henryk (1978). Permanents. Encyclopedia of Mathematics and its Applications 6. With a foreword by Marvin Marcus. Reading, MA: Addison–Wesley. ISSN 0953-4806. OCLC 3980645. Zbl 0401.15005.

- Muir, Thomas; William H. Metzler. (1960) [1882]. A Treatise on the Theory of Determinants. New York: Dover. OCLC 535903.

- Percus, J.K. (1971), Combinatorial Methods, Applied Mathematical Sciences #4, New York: Springer-Verlag, ISBN 0-387-90027-6

- Ryser, Herbert John (1963), Combinatorial Mathematics, The Carus Mathematical Monographs #14, The Mathematical Association of America

- van Lint, J.H.; Wilson, R.M. (2001), A Course in Combinatorics, Cambridge University Press, ISBN 0521422604

Further reading

- Hall, Jr., Marshall (1986), Combinatorial Theory (2nd ed.), New York: John Wiley & Sons, pp. 56–72, ISBN 0-471-09138-3 Contains a proof of the Van der Waerden conjecture.

- Marcus, M.; Minc, H. (1965), "Permanents", The American Mathematical Monthly 72: 577–591, doi:10.2307/2313846

![\langle

x_1 \vee x_2 \vee \cdots \vee x_k,

y_1 \vee y_2 \vee \cdots \vee y_k

\rangle =

\operatorname{perm}\left[\langle x_i,y_j \rangle\right]_{i,j = 1}^k](../I/m/c5bbcb3dd61e054b43bf76d18c8586de.png)

![\left|\operatorname{perm} \left[\langle x_i,y_j \rangle\right]_{i,j = 1}^k \right|^2 \leq

\operatorname{perm} \left[\langle x_i,x_j \rangle\right]_{i,j = 1}^k \cdot

\operatorname{perm} \left[\langle y_i,y_j \rangle\right]_{i,j = 1}^k](../I/m/9e9497df48f97c349f295d854fb37dde.png)