Type theory

In mathematics, logic, and computer science, a type theory is any of a class of formal systems, some of which can serve as alternatives to set theory as a foundation for all mathematics. In type theory, every "term" has a "type" and operations are restricted to terms of a certain type.

Type theory is closely related to (and in some cases overlaps with) type systems, a programming language feature used to reduce bugs. The types of type theory were created to avoid paradoxes in a variety of formal logics and rewrite systems, and "type theory" is sometimes used to refer to this broader application.

Two well-known type theories that can serve as mathematical foundations are Alonzo Church's typed λ-calculus and Per Martin-Löf's intuitionistic type theory.

History

The types of type theory were invented by Bertrand Russell in response to his discovery that Gottlob Frege's version of naive set theory was afflicted with Russell's paradox. This theory of types features prominently in Whitehead and Russell's Principia Mathematica. It avoids Russell's paradox by first creating a hierarchy of types, then assigning each mathematical (and possibly other) entity to a type. Objects of a given type are built exclusively from objects of preceding types (those lower in the hierarchy), thus preventing loops.

In its most common usage, "type theory" refers to types used together with a term rewriting system. The most famous early example is Alonzo Church's lambda calculus. Church's theory of types[1] helped the formal system avoid the Kleene–Rosser paradox that afflicted the original untyped lambda calculus. Church demonstrated that it could serve as a foundation of mathematics, and it was referred to as a higher-order logic.

Some other type theories include Per Martin-Löf's intuitionistic type theory, which has been the foundation used in some areas of constructive mathematics and for the proof assistant Agda. Thierry Coquand's calculus of constructions and its derivatives are the foundation used by Coq and others. The field is an area of active research, as demonstrated by homotopy type theory.

Basic concepts

In a system of type theory, each term has a type and operations are restricted to terms of a certain type. A typing judgment $M : A$ describes that the term $M$ has type $A$. For example, $\mathsf{nat}$ may be a type representing the natural numbers and $0, 1, 2, \dots$ may be inhabitants of that type. The judgement that $0$ has type $\mathsf{nat}$ is written as $0 : \mathsf{nat}$.

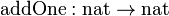

A function in type theory is denoted with an arrow $\to$. The function $S$ (commonly called successor) has the judgement $S : \mathsf{nat} \to \mathsf{nat}$. Calling or "applying" a function to an argument is usually written without parentheses, so $S\ 0$ instead of $S(0)$. (This allows for consistent currying.)

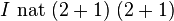

Type theories also contain rules for rewriting terms. These are called conversion rules or, if a rule only works in one direction, reduction rules. For example, $2 + 1$ and $3$ are syntactically different terms, but the first reduces to the latter. This reduction is denoted as $2 + 1 \twoheadrightarrow 3$.
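
The typing judgements described in this section can be illustrated with a small checker. The following is a minimal sketch in Python, not a definitive implementation: the class names `Nat`, `Arrow`, `Zero`, `Succ` and `App` and the function `type_of` are hypothetical names chosen for this example, and the only typing rules assumed are that zero has type nat, that the successor has type nat → nat, and that applying a function to an argument of its domain type yields its codomain type.

```python
from dataclasses import dataclass

# Types: the base type nat and arrow types A -> B.
@dataclass(frozen=True)
class Nat:
    pass

@dataclass(frozen=True)
class Arrow:
    dom: object
    cod: object

# Terms: the constants 0 and S, and application (written "f a" in the text).
@dataclass(frozen=True)
class Zero:
    pass

@dataclass(frozen=True)
class Succ:
    pass

@dataclass(frozen=True)
class App:
    fn: object
    arg: object

def type_of(term):
    """Return the type A such that `term : A`, or raise TypeError."""
    if isinstance(term, Zero):
        return Nat()                      # 0 : nat
    if isinstance(term, Succ):
        return Arrow(Nat(), Nat())        # S : nat -> nat
    if isinstance(term, App):
        fn_type = type_of(term.fn)
        arg_type = type_of(term.arg)
        # Application is only allowed when the argument matches the domain.
        if isinstance(fn_type, Arrow) and fn_type.dom == arg_type:
            return fn_type.cod
        raise TypeError("ill-typed application")
    raise TypeError("unknown term")

two = App(Succ(), App(Succ(), Zero()))    # the term S (S 0)
```

Here `type_of(two)` yields `Nat()`, while a term such as `App(Zero(), Zero())` is rejected because zero is not a function.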

Difference from set theory

There are many different set theories and many different systems of type theory, so what follows are generalizations.

- Set theory is built on top of logic. It requires a separate system like Frege's underneath it. In type theory, concepts like "and" and "or" can be encoded as types in the type theory itself.

- In set theory, an element can belong to multiple sets, either to a subset or superset. In type theory, terms (generally) belong to only one type. (Where a subset would be used, type theory creates a new type, called a dependent sum type, with new terms. Union is similarly achieved by a new sum type and new terms.)

- In set theory, sets can contain unrelated elements, e.g., apples and real numbers. In type theory, types that combine unrelated types do so by creating new terms.

- Set theory usually encodes numbers as sets. (0 is the empty set, 1 is a set containing the empty set, etc.) Type theory can encode numbers as functions using Church encoding or more naturally as inductive types, which are types with well-behaved constant terms.

- Set theory allows set builder notation.

- Type theory has a simple connection to constructive mathematics through the BHK interpretation.
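
The Church encoding of numbers mentioned in the list above can be sketched with ordinary functions. The example below uses Python lambdas only to show the shape of the encoding; Python does not assign these terms the types a type theory would, and the names `zero`, `succ`, `add` and `to_int` are chosen for this illustration.

```python
# Church numerals: the number n is the function that applies f to x n times.
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))

def to_int(n):
    """Decode a Church numeral by counting applications of f."""
    return n(lambda k: k + 1)(0)

two = succ(succ(zero))
three = add(two)(succ(zero))
```

Decoding with `to_int` recovers the ordinary integers, so `to_int(three)` evaluates to 3.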

Optional features

Normalization

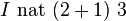

The term $2 + 1$ reduces to $3$. Since $3$ cannot be reduced further, it is called a normal form. A system of type theory is said to be strongly normalizing if all terms have a normal form and any order of reductions reaches it. In a weakly normalizing system every term has a normal form, but some orders of reduction may loop forever and never reach it.

For a normalizing system, some borrow the word element from set theory and use it to refer to all closed terms that can reduce to the same normal form. A closed term is one without parameters. (A term like $x + 1$ with its parameter $x$ is called an open term.) Thus, $2 + 1$ and $3 + 0$ may be different terms but they are both from the element $3$.

A similar idea that works for open and closed terms is convertibility. Two terms are convertible if there exists a term that they both reduce to. For example, $2 + 1$ and $1 + 2$ are convertible. As are $x + (1 + 1)$ and $x + 2$. However, $x + 1$ and $1 + x$ (where $x$ is a free variable) are not because both are in normal form and they are not the same. Confluent and weakly normalizing systems can test if two terms are convertible by checking if they both reduce to the same normal form.
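
The convertibility test described above, reducing both terms and comparing their normal forms, can be sketched for a toy language. This is an illustration under stated assumptions rather than a real type theory: the only terms are numerals, free variables and addition, the only reduction rule replaces the sum of two numerals by a numeral, and the names `Num`, `Var`, `Add`, `normalize` and `convertible` are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Num:
    value: int

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Add:
    left: object
    right: object

def normalize(term):
    """Reduce every subterm; a sum of two numerals becomes a numeral."""
    if isinstance(term, Add):
        left, right = normalize(term.left), normalize(term.right)
        if isinstance(left, Num) and isinstance(right, Num):
            return Num(left.value + right.value)
        return Add(left, right)           # stuck on a free variable
    return term                           # numerals and variables are normal

def convertible(a, b):
    """Compare normal forms; sound here because the toy system is
    confluent and every term has a normal form."""
    return normalize(a) == normalize(b)

x = Var("x")
```

With these definitions, `Add(x, Add(Num(1), Num(1)))` and `Add(x, Num(2))` are convertible, whereas `Add(x, Num(1))` and `Add(Num(1), x)` are distinct normal forms and therefore are not.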

Dependent types

A dependent type is a type that depends on a term or on another type. Thus, the type returned by a function may depend upon the argument to the function.

For example, a list of $\mathsf{nat}$s of length 4 may be a different type than a list of $\mathsf{nat}$s of length 5. In a type theory with dependent types, it is possible to define a function that takes a parameter "n" and returns a list containing "n" zeros. Calling the function with 4 would produce a term with a different type than if the function were called with 5.

Dependent types play a central role in intuitionistic type theory and in the design of functional programming languages like Idris, ATS, Agda and Epigram.
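
The idea that a result type can depend on an argument can be approximated as follows. Python has no dependent types, so this sketch represents types as ordinary values and checks at run time what a dependently typed language such as those named above would check statically; `ListOfLength`, `zeros_type`, `zeros` and `has_type` are hypothetical names introduced for the illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ListOfLength:
    """The type of lists of natural numbers with exactly `length` entries."""
    length: int

def zeros_type(n):
    """The result type of zeros(n); note that it depends on the value n."""
    return ListOfLength(n)

def zeros(n):
    """Return a list containing n zeros."""
    return [0] * n

def has_type(value, ty):
    """Run-time stand-in for the judgement `value : ty`."""
    return (isinstance(value, list)
            and len(value) == ty.length
            and all(isinstance(v, int) and v >= 0 for v in value))
```

Calling `zeros(4)` produces a value that inhabits `zeros_type(4)` but not `zeros_type(5)`, mirroring the distinction drawn in the text.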

Equality types (or "identity types")

Many systems of type theory have a type that represents equality of types and terms. This type is different from convertibility, and is often called propositional equality.

In intuitionistic type theory, the equality type is known as $I$ for identity. There is a type $I\ A\ a\ b$ when $A$ is a type and $a$ and $b$ are both terms of type $A$. A term of type $I\ A\ a\ b$ is interpreted as meaning that $a$ is equal to $b$.

In practice, it is possible to build a type $I\ \mathsf{nat}\ 3\ 4$ but there will not exist a term of that type. In intuitionistic type theory, new terms of equality start with reflexivity. If $3$ is a term of type $\mathsf{nat}$, then there exists a term of type $I\ \mathsf{nat}\ 3\ 3$. More complicated equalities can be created by creating a reflexive term and then doing a reduction on one side. So if $2 + 1$ is a term of type $\mathsf{nat}$, then there is a term of type $I\ \mathsf{nat}\ (2 + 1)\ (2 + 1)$ and, by reduction, a term of type $I\ \mathsf{nat}\ (2 + 1)\ 3$. Thus, in this system, the equality type denotes that two values of the same type are convertible by reductions.

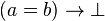

Having a type for equality is important because it can be manipulated inside the system. There is usually no judgement to say two terms are not equal; instead, as in the Brouwer–Heyting–Kolmogorov interpretation, we map $\neg(a = b)$ to $(I\ A\ a\ b) \to \bot$, where $\bot$ is the bottom type having no values. There exists a term with type $(I\ \mathsf{nat}\ 3\ 4) \to \bot$, but not one of type $(I\ \mathsf{nat}\ 3\ 3) \to \bot$.
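
The way reflexivity and reduction together populate an equality type can be sketched in code. The example assumes a toy language of closed natural-number terms (Python integers as numerals plus a hypothetical `Add` constructor) and treats a reflexivity proof as valid for an identity type when the proved term and both sides of the type share one normal form; `Id`, `Refl`, `normalize` and `check` are names invented for this sketch, not part of any proof assistant.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Add:
    left: object      # an int numeral or another Add
    right: object

@dataclass(frozen=True)
class Id:
    """The type of proofs that lhs equals rhs (both of type nat here)."""
    lhs: object
    rhs: object

@dataclass(frozen=True)
class Refl:
    """The reflexivity proof for a single term."""
    term: object

def normalize(term):
    """Reduce a closed term to its numeral."""
    if isinstance(term, Add):
        return normalize(term.left) + normalize(term.right)
    return term        # an int numeral is already normal

def check(proof, ty):
    """Accept Refl(a) : Id(l, r) when a, l and r share one normal form."""
    n = normalize(proof.term)
    return n == normalize(ty.lhs) == normalize(ty.rhs)
```

Under these assumptions `Refl(Add(2, 1))` is accepted as a proof of `Id(Add(2, 1), 3)`, while no reflexivity proof is accepted for `Id(3, 4)`, since no single normal form equals both 3 and 4.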

Homotopy type theory differs from intuitionistic type theory mostly by its handling of the equality type.

Inductive types

A system of type theory requires some basic terms and types to operate on. Some systems build them out of functions using Church encoding. Other systems have inductive types: a set of base types and a set of type constructors that generate types with well-behaved properties. For example, certain recursive functions called on inductive types are guaranteed to terminate.

Coinductive types are infinite data types created by giving a function that generates the next element(s). See Coinduction and Corecursion.
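
An infinite data type defined by a function that generates the next element can be approximated with a generator. This is only a loose analogy in Python: a type theory with coinductive types would also check that each element is produced in finite time, which Python does not. The helper `unfold` and its `step` parameter are hypothetical names for this sketch.

```python
from itertools import islice

def unfold(step, seed):
    """Build an infinite stream from a function giving (element, next seed)."""
    while True:
        element, seed = step(seed)
        yield element

# The stream of natural numbers 0, 1, 2, ... is never built in full;
# only the prefix that is asked for gets computed.
naturals = unfold(lambda n: (n, n + 1), 0)
first_five = list(islice(naturals, 5))
```

Only the observed prefix is ever computed, which is what lets the stream be treated as an infinite object.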

Induction-induction is a feature for declaring an inductive type and a family of types that depends on the inductive type.

Induction-recursion allows a wider range of well-behaved types but requires that the type and the recursive functions that operate on it be defined at the same time.

Universe types

Types were created to prevent paradoxes, such as Russell's paradox. However, the motives that led to those paradoxes, being able to say things about all types, still exist. So many type theories have a "universe type", which contains all other types (and not itself).

In systems where you might want to say something about universe types, there is a hierarchy of universe types, each containing the one below it in the hierarchy. The hierarchy is defined as being infinite, but statements must only refer to a finite number of universe levels.

Type universes are particularly tricky in type theory. The initial proposal of intuitionistic type theory suffered from Girard's paradox.

Computational component

Many systems of type theory, such as the simply-typed lambda calculus, intuitionistic type theory, and the calculus of constructions, are also programming languages. That is, they are said to have a "computational component". The computation is the reduction of terms of the language using rewriting rules.

A system of type theory that has a well-behaved computational component also has a simple connection to constructive mathematics through the BHK interpretation.

Non-constructive mathematics in these systems is possible by adding operators on continuations such as call with current continuation. However, these operators tend to break desirable properties such as canonicity and parametricity.

Systems of type theory

Major

- simply typed lambda calculus, which is a higher-order logic;

- intuitionistic type theory;

- system F;

- LF is often used to define other type theories;

- calculus of constructions and its derivatives.

Minor

- Automath;

- ST type theory;

- some forms of combinatory logic;

- others defined in the lambda cube;

- others under the name typed lambda calculus;

- others under the name pure type system.

Active

- Homotopy type theory is being researched.

Practical impact

Programming languages

There is extensive overlap and interaction between the fields of type theory and type systems. Type systems are a programming language feature designed to identify bugs. Any static program analysis, such as the type checking algorithms in the semantic analysis phase of a compiler, has a connection to type theory.

A prime example is Agda, a programming language which uses intuitionistic type theory for its type system. The programming language ML was developed for manipulating type theories (see LCF) and its own type system was heavily influenced by them.

Mathematical foundations

The first computer proof assistant, called Automath, used type theory to encode mathematics on a computer. Martin-Löf specifically developed intuitionistic type theory to encode all mathematics to serve as a new foundation for mathematics. There is current research into mathematical foundations using homotopy type theory.

Mathematicians working in category theory already had difficulty working with the widely accepted foundation of Zermelo–Fraenkel set theory. This led to proposals such as Lawvere's Elementary Theory of the Category of Sets (ETCS).[2] Homotopy type theory continues in this line using type theory. Researchers are exploring connections between dependent types (especially the identity type) and algebraic topology (specifically homotopy).

Proof assistants

Much of the current research into type theory is driven by proof checkers, interactive proof assistants, and automated theorem provers. Most of these systems use a type theory as the mathematical foundation for encoding proofs, which is not surprising, given the close connection between type theory and programming languages:

- LF is used by Twelf, often to define other type theories;

- multiple type theories falling under higher-order logic are used by the HOL family of provers and PVS;

- intuitionistic type theory is used by Agda which is both a programming language and proof assistant;

- computational type theory is used by NuPRL;

- calculus of constructions and its derivatives are used by Coq and Matita.

Multiple type theories are supported by LEGO and Isabelle. Isabelle also supports foundations besides type theories, such as ZFC. Mizar is an example of a proof system that only supports set theory.

Linguistics

Type theory is also widely used in formal theories of semantics of natural languages, especially Montague grammar and its descendants. In particular, categorial grammars and pregroup grammars make extensive use of type constructors to define the types (noun, verb, etc.) of words.

The most common construction takes the basic types $e$ and $t$ for individuals and truth-values, respectively, and defines the set of types recursively as follows:

- if $a$ and $b$ are types, then so is $\langle a, b \rangle$;

- nothing except the basic types, and what can be constructed from them by means of the previous clause, are types.

A complex type $\langle a, b \rangle$ is the type of functions from entities of type $a$ to entities of type $b$. Thus one has types like $\langle e, t \rangle$, which are interpreted as elements of the set of functions from entities to truth-values, i.e. indicator functions of sets of entities. An expression of type $\langle\langle e, t \rangle, t \rangle$ is a function from sets of entities to truth-values, i.e. a (indicator function of a) set of sets. This latter type is standardly taken to be the type of natural language quantifiers, like everybody or nobody (Montague 1973, Barwise and Cooper 1981).
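
The recursive definition of semantic types and the reading of quantifiers as sets of sets can be made concrete with a short sketch. Everything named here is an assumption made for the example: the classes `Basic` and `Fn`, the three-person domain `people`, and the predicate `sleeps` are hypothetical, and Python lambdas merely stand in for functions of the indicated types.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Basic:
    name: str          # "e" for individuals, "t" for truth-values

@dataclass(frozen=True)
class Fn:
    """The complex type <a, b>: functions from type a to type b."""
    arg: object
    res: object

E, T = Basic("e"), Basic("t")
PREDICATE = Fn(E, T)               # <e, t>: a set of individuals
QUANTIFIER = Fn(PREDICATE, T)      # <<e, t>, t>: a set of sets

def show(ty):
    """Render a type in angle-bracket notation."""
    if isinstance(ty, Basic):
        return ty.name
    return f"<{show(ty.arg)}, {show(ty.res)}>"

# A hypothetical three-person domain and one predicate over it.
people = {"ann", "bob", "cy"}
sleeps = lambda x: x in {"ann", "bob"}             # type <e, t>

everybody = lambda p: all(p(x) for x in people)    # type <<e, t>, t>
nobody = lambda p: not any(p(x) for x in people)   # type <<e, t>, t>
```

In this model `everybody(sleeps)` and `nobody(sleeps)` are both false, because some but not all members of the domain satisfy the predicate.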

Social sciences

Gregory Bateson introduced a theory of logical types into the social sciences; his notions of double bind and logical levels are based on Russell's theory of types.

Relation to category theory

Although the initial motivation for category theory was far removed from foundationalism, the two fields turned out to have deep connections. As John Lane Bell writes: "In fact categories can themselves be viewed as type theories of a certain kind; this fact alone indicates that type theory is much more closely related to category theory than it is to set theory." In brief, a category can be viewed as a type theory by regarding its objects as types (or sorts), i.e. "Roughly speaking, a category may be thought of as a type theory shorn of its syntax." A number of significant results follow in this way:[3]

- cartesian closed categories correspond to the typed λ-calculus (Lambek, 1970);

- C-monoids (categories with products and exponentials and a single, nonterminal object) correspond to the untyped λ-calculus (observed independently by Lambek and Dana Scott around 1980);

- locally cartesian closed categories correspond to Martin-Löf type theories (Seely, 1984).

The interplay, known as categorical logic, has been a subject of active research since then; see the monograph of Jacobs (1999) for instance.

See also

- Data type for concrete types of data in programming

- Domain theory

- Type (model theory)

- Type system for a more practical discussion of type systems for programming languages

References

- W. Farmer, The seven virtues of simple type theory, Journal of Applied Logic, Vol. 6, No. 3 (September 2008), pp. 267–286.

- [1] Alonzo Church, A formulation of the simple theory of types, The Journal of Symbolic Logic 5(2):56–68 (1940).

- [2] ETCS in nLab.

- [3] John L. Bell (2012). "Types, Sets and Categories". In Akihiro Kanamori (ed.). Handbook of the History of Logic. Volume 6: Sets and Extensions in the Twentieth Century. Elsevier. ISBN 978-0-08-093066-4.

Further reading

- Constable, Robert L., 2002, "Naïve Computational Type Theory," in H. Schwichtenberg and R. Steinbruggen (eds.), Proof and System-Reliability: 213–259. Intended as a type theory counterpart of Paul Halmos's (1960) Naïve Set Theory

- Andrews, Peter B. (2002). An Introduction to Mathematical Logic and Type Theory: To Truth Through Proof, 2nd ed. Kluwer Academic Publishers. ISBN 978-1-4020-0763-7.

- Jacobs, Bart (1999). Categorical Logic and Type Theory. Studies in Logic and the Foundations of Mathematics 141. North Holland, Elsevier. ISBN 0-444-50170-3. Covers type theory in depth, including polymorphic and dependent type extensions. Gives categorical semantics.

- Collins, Jordan E. (2012). A History of the Theory of Types: Developments After the Second Edition of 'Principia Mathematica'. LAP Lambert Academic Publishing. ISBN 978-3-8473-2963-3. Provides a historical survey of the developments of the theory of types with a focus on the decline of the theory as a foundation of mathematics over the four decades following the publication of the second edition of 'Principia Mathematica'.

- Cardelli, Luca, 1997, "Type Systems," in Allen B. Tucker, ed., The Computer Science and Engineering Handbook. CRC Press: 2208–2236.

- Thompson, Simon, 1991. Type Theory and Functional Programming. Addison–Wesley. ISBN 0-201-41667-0.

- J. Roger Hindley, Basic Simple Type Theory, Cambridge University Press, 2008, ISBN 0-521-05422-2 (also 1995, 1997). A good introduction to simple type theory for computer scientists; the system described is not exactly Church's STT though.

- Stanford Encyclopedia of Philosophy: "Type Theory" by Thierry Coquand.

- Fairouz D. Kamareddine, Twan Laan, Rob P. Nederpelt, A modern perspective on type theory: from its origins until today, Springer, 2004, ISBN 1-4020-2334-0

- José Ferreirós, Labyrinth of thought: a history of set theory and its role in modern mathematics, Edition 2, Springer, 2007, ISBN 3-7643-8349-6, chapter X "Logic and Type Theory in the Interwar Period"

External links

- Computational type theory at Scholarpedia, curated by Robert L. Constable.

- The TYPES Forum — moderated e-mail forum focusing on type theory in computer science, operating since 1987.

- The Nuprl Book: "Introduction to Type Theory."

- Types Project lecture notes of summer schools 2005–2008

- The 2005 summer school has introductory lectures
