Thermal fluctuations

In statistical mechanics, thermal fluctuations are random deviations of a system from its average state that occur in a system at equilibrium.[1] All thermal fluctuations become larger and more frequent as the temperature increases, and likewise they decrease as the temperature approaches absolute zero.

Thermal fluctuations are a basic consequence of the definition of temperature: A system at nonzero temperature does not stay in its equilibrium microscopic state, but instead randomly samples all possible states, with probabilities given by the Boltzmann distribution.
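The sampling described above can be illustrated with a minimal sketch. It assumes a hypothetical system with four equally spaced energy levels in arbitrary units; the function names and the level spacing are illustrative choices, not taken from the cited sources. It computes the Boltzmann probabilities and the resulting energy variance at a few temperatures.

```python
import math

def boltzmann_probabilities(energies, kT):
    """Return Boltzmann occupation probabilities for discrete energy levels."""
    # Shift by the minimum energy for numerical stability; the shift cancels
    # when the weights are normalized.
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / kT) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

def energy_variance(energies, kT):
    """Variance of the energy under the Boltzmann distribution."""
    probs = boltzmann_probabilities(energies, kT)
    mean = sum(p * e for p, e in zip(probs, energies))
    return sum(p * (e - mean) ** 2 for p, e in zip(probs, energies))

# Hypothetical level scheme (arbitrary energy units), used only for illustration.
levels = [0.0, 1.0, 2.0, 3.0]
for kT in (0.1, 1.0, 10.0):
    print(kT, energy_variance(levels, kT))
```

For this particular level scheme the variance grows with `kT` and almost vanishes at the lowest temperature, where the system sits in its ground state nearly all the time.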

Thermal fluctuations generally affect all the degrees of freedom of a system: There can be random vibrations (phonons), random rotations (rotons), random electronic excitations, and so forth.

Thermodynamic variables, such as pressure, temperature, or entropy, likewise undergo thermal fluctuations. For example, a system has an equilibrium pressure, but its true pressure fluctuates to some extent about the equilibrium.

Only the 'control variables' of statistical ensembles (such as N, V and E in the microcanonical ensemble) do not fluctuate.

Thermal fluctuations are a source of noise in many systems. The random forces that give rise to thermal fluctuations are a source of both diffusion and dissipation (including damping and viscosity). The competing effects of random drift and resistance to drift are related by the fluctuation-dissipation theorem. Thermal fluctuations play a major role in phase transitions and chemical kinetics.

Central limit theorem for thermal fluctuations

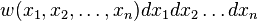

The volume of phase space  , occupied by a system of

, occupied by a system of  degrees of freedom is the product of the configuration volume

degrees of freedom is the product of the configuration volume  and the momentum space volume. Since the energy is a quadratic form of the momenta for a non-relativistic system, the radius of momentum space will be

and the momentum space volume. Since the energy is a quadratic form of the momenta for a non-relativistic system, the radius of momentum space will be  so that the volume of a hypersphere will vary as

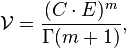

so that the volume of a hypersphere will vary as  giving a phase volume of

giving a phase volume of

where  is a constant depending upon the specific properties of the system and

is a constant depending upon the specific properties of the system and  is the Gamma function. In the case that this hypersphere has a very high dimensionality,

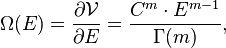

is the Gamma function. In the case that this hypersphere has a very high dimensionality,  , which is the usual case in thermodynamics, essentially all the volume will lie near to the surface

, which is the usual case in thermodynamics, essentially all the volume will lie near to the surface

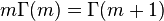

where we used the recursion formula  .

.
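The claim that nearly all of the volume lies near the surface can be checked with a short sketch. It assumes only that the phase volume scales as the $m$-th power of the energy; the function name and the 1% shell width are illustrative choices.

```python
def shell_fraction(m, delta):
    """Fraction of the phase volume with energy in ((1 - delta) * E, E].

    Assumes the phase volume scales as E**m, so the volume enclosed below
    (1 - delta) * E is a fraction (1 - delta)**m of the total.
    """
    return 1.0 - (1.0 - delta) ** m

# Fraction of the volume lying in the outermost 1% of the energy range.
for m in (1, 10, 1000, 100000):
    print(m, shell_fraction(m, 0.01))
```

For small `m` the outer shell holds only a small share of the volume, while for the very large `m` typical of macroscopic systems it holds essentially all of it.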

The surface area  has its legs in two worlds: (i) the macroscopic one in which it is considered a function of the energy, and the other extensive variables, like the volume, that have been held constant in the differentiation of the phase volume, and (ii) the microscopic world where it represents the number of complexions that is compatible with a given macroscopic state. It is this quantity that Planck referred to as a 'thermodynamic' probability. It differs from a classical probability inasmuch as it cannot be normalized; that is, its integral over all energies diverges--but it diverges as a power of the energy and not faster. Since its integral over all energies is infinite, we might try to consider its Laplace transform

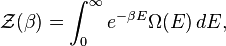

has its legs in two worlds: (i) the macroscopic one in which it is considered a function of the energy, and the other extensive variables, like the volume, that have been held constant in the differentiation of the phase volume, and (ii) the microscopic world where it represents the number of complexions that is compatible with a given macroscopic state. It is this quantity that Planck referred to as a 'thermodynamic' probability. It differs from a classical probability inasmuch as it cannot be normalized; that is, its integral over all energies diverges--but it diverges as a power of the energy and not faster. Since its integral over all energies is infinite, we might try to consider its Laplace transform

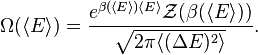

which can be given a physical interpretation. The exponential decreasing factor, where  is a positive parameter, will overpower the rapidly increasing surface area so that an enormously sharp peak will develop at a certain energy

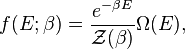

is a positive parameter, will overpower the rapidly increasing surface area so that an enormously sharp peak will develop at a certain energy  . Most of the contribution to the integral will come from an immediate neighborhood about this value of the energy. This enables the definition of a proper probability density according to

. Most of the contribution to the integral will come from an immediate neighborhood about this value of the energy. This enables the definition of a proper probability density according to

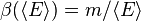

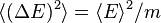

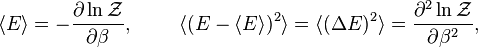

whose integral over all energies is unity on the strength of the definition of  , which is referred to as the partition function, or generating function. The latter name is due to the fact that the derivatives of its logarithm generates the central moments, namely,

, which is referred to as the partition function, or generating function. The latter name is due to the fact that the derivatives of its logarithm generates the central moments, namely,

and so on, where the first term is the mean energy and the second one is the dispersion in energy.

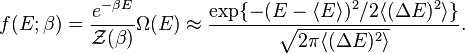
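As a hedged numerical check of the moment relations, the sketch below assumes a power-law structure function, for which the Laplace transform is $\mathcal{Z}(\beta) = (C/\beta)^m$. It differentiates $\ln \mathcal{Z}$ by central finite differences; the function names, the step size `h`, and the sample values of `m` and `beta` are illustrative assumptions.

```python
import math

def log_partition(beta, m, c=1.0):
    """ln Z for a power-law structure function, where Z(beta) = (c / beta)**m."""
    return m * (math.log(c) - math.log(beta))

def mean_and_variance(beta, m, c=1.0, h=1e-4):
    """Estimate <E> = -d(ln Z)/d(beta) and <(dE)^2> = d^2(ln Z)/d(beta)^2."""
    f0 = log_partition(beta, m, c)
    f_plus = log_partition(beta + h, m, c)
    f_minus = log_partition(beta - h, m, c)
    mean = -(f_plus - f_minus) / (2 * h)            # central first difference
    variance = (f_plus - 2 * f0 + f_minus) / h**2   # central second difference
    return mean, variance

mean, variance = mean_and_variance(beta=2.0, m=50)
print(mean, variance)
```

The estimates should agree, up to finite-difference error, with the closed forms $m/\beta$ for the mean and $m/\beta^2$ for the dispersion that follow from this choice of $\mathcal{Z}$.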

The fact that  increases no faster than a power of the energy ensures that these moments will be finite. [2] Therefore, we can expand the factor

increases no faster than a power of the energy ensures that these moments will be finite. [2] Therefore, we can expand the factor  about the mean value

about the mean value  , which will coincide with

, which will coincide with  for Gaussian fluctuations (i.e. average and most probable values coincide), and retaining lowest order terms result in

for Gaussian fluctuations (i.e. average and most probable values coincide), and retaining lowest order terms result in

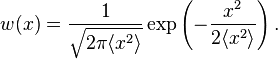

This is the Gaussian, or normal, distribution, which is defined by its first two moments. In general, one would need all the moments to specify the probability density,  , which is referred to as the canonical, or posterior, density in contrast to the prior density

, which is referred to as the canonical, or posterior, density in contrast to the prior density  , which is referred to as the 'structure' function.[2] This is the central limit theorem as it applies to thermodynamic systems.[3]

, which is referred to as the 'structure' function.[2] This is the central limit theorem as it applies to thermodynamic systems.[3]

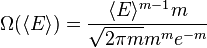

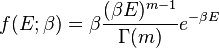
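The quality of the Gaussian approximation can be probed numerically. The sketch below assumes a power-law structure function proportional to $E^{m-1}$, for which the normalized canonical density is a gamma density with mean $m/\beta$ and variance $m/\beta^2$. It compares the two densities at the mean energy only; the function names are illustrative.

```python
import math

def gamma_density(energy, beta, m):
    """beta * (beta*E)**(m-1) * exp(-beta*E) / Gamma(m), evaluated via logarithms."""
    log_f = (math.log(beta) + (m - 1) * math.log(beta * energy)
             - beta * energy - math.lgamma(m))
    return math.exp(log_f)

def gaussian_density(energy, mean, variance):
    """Normal density with the given mean and variance."""
    return (math.exp(-(energy - mean) ** 2 / (2 * variance))
            / math.sqrt(2 * math.pi * variance))

def relative_error_at_mean(beta, m):
    """Relative gap between the gamma density and its Gaussian stand-in at E = <E>."""
    mean, variance = m / beta, m / beta**2
    exact = gamma_density(mean, beta, m)
    approx = gaussian_density(mean, mean, variance)
    return abs(exact - approx) / exact

for m in (10, 100, 1000):
    print(m, relative_error_at_mean(1.0, m))
```

At the mean, the gap shrinks roughly in proportion to $1/m$, so for the very large $m$ of a macroscopic system the two densities are practically indistinguishable there.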

If the phase volume increases as  , its Laplace transform, the partition function, will vary as

, its Laplace transform, the partition function, will vary as  . Rearranging the normal distribution so that it becomes an expression for the structure function and evaluating it at

. Rearranging the normal distribution so that it becomes an expression for the structure function and evaluating it at  give

give

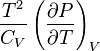

It follows from the expression of the first moment that  , while from the second central moment,

, while from the second central moment,  . Introducing these two expressions into the expression of the structure function evaluated at the mean value of the energy leads to

. Introducing these two expressions into the expression of the structure function evaluated at the mean value of the energy leads to

.

.

The denominator is exactly Stirling's approximation for  , and if the structure function retains the same functional dependency for all values of the energy, the canonical probability density,

, and if the structure function retains the same functional dependency for all values of the energy, the canonical probability density,

will belong to the family of exponential distributions known as gamma densities. Consequently, the canonical probability density falls under the jurisdiction of the local law of large numbers which asserts that a sequence of independent and identically distributed random variables tends to the normal law as the sequence increases without limit.
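The link between gamma densities and sums of independent variables can be sketched by simulation. A sum of $m$ independent exponential variables with rate $\beta$ follows a gamma density with shape $m$, so its skewness, $2/\sqrt{m}$, shrinks as $m$ grows. The sample sizes, the seed, and the function names below are illustrative assumptions.

```python
import random
import statistics

def sample_total_energies(m, beta, n_samples, seed=0):
    """Draw n_samples sums of m independent exponential variables with rate beta."""
    rng = random.Random(seed)
    return [sum(rng.expovariate(beta) for _ in range(m)) for _ in range(n_samples)]

def skewness(values):
    """Sample skewness (third standardized moment)."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return sum(((v - mean) / std) ** 3 for v in values) / len(values)

samples = sample_total_energies(m=50, beta=2.0, n_samples=5000)
print(statistics.fmean(samples), statistics.pvariance(samples), skewness(samples))
```

Up to sampling noise, the three printed values should land near $m/\beta = 25$, $m/\beta^2 = 12.5$, and $2/\sqrt{m} \approx 0.28$. Raising `m` pushes the skewness toward zero, which is the approach to the normal law in this setting.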

Distribution of fluctuations about equilibrium

The expressions given below are for systems that are close to equilibrium and have negligible quantum effects.[4]

Single variable

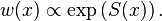

Suppose  is a thermodynamic variable. The probability distribution

is a thermodynamic variable. The probability distribution  for

for  is determined by the entropy

is determined by the entropy  :

:

If the entropy is Taylor expanded about its maximum (corresponding to the equilibrium state), the lowest order term is a Gaussian distribution:

The quantity  is the mean square fluctuation.[4]

is the mean square fluctuation.[4]
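The step from the entropy expansion to the Gaussian can be written out as a short worked derivation. It assumes the entropy is expressed in units of $k_B$ and that $x$ is measured from its equilibrium value; the symbol $a$ for the curvature of the entropy at its maximum is introduced here only for illustration.

$$
\begin{aligned}
S(x) &\approx S(0) - \tfrac{1}{2}\,a\,x^{2}, \qquad a = -\left(\frac{\partial^{2} S}{\partial x^{2}}\right)_{x=0} > 0, \\
w(x) &= \sqrt{\frac{a}{2\pi}}\; e^{-a x^{2}/2}, \\
\langle x^{2} \rangle &= \int_{-\infty}^{\infty} x^{2}\, w(x)\, dx = \frac{1}{a}.
\end{aligned}
$$

The linear term is absent because the entropy is at a maximum at $x = 0$, and substituting $a = 1/\langle x^2 \rangle$ recovers the Gaussian form in terms of the mean square fluctuation.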

Multiple variables

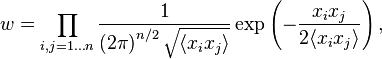

The above expression has a straightforward generalization to the probability distribution  :

:

where  is the mean value of

is the mean value of  .[4]

.[4]
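For two variables the joint Gaussian can be written out explicitly. The sketch below assumes zero-mean fluctuations with a symmetric, positive-definite matrix of mean values $\langle x_i x_j \rangle$; the function names and the sample numbers are illustrative. It also checks that the joint density factorizes into two single-variable Gaussians when the cross term $\langle x_1 x_2 \rangle$ vanishes.

```python
import math

def gaussian_1d(x, variance):
    """Single-variable Gaussian for a zero-mean fluctuation."""
    return math.exp(-x ** 2 / (2 * variance)) / math.sqrt(2 * math.pi * variance)

def gaussian_2d(x1, x2, s11, s12, s22):
    """Joint Gaussian density with covariance matrix [[s11, s12], [s12, s22]]."""
    det = s11 * s22 - s12 ** 2
    if s11 <= 0 or det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    # Quadratic form x^T sigma^{-1} x, written out for the 2x2 case.
    q = (s22 * x1 ** 2 - 2 * s12 * x1 * x2 + s11 * x2 ** 2) / det
    return math.exp(-0.5 * q) / (2 * math.pi * math.sqrt(det))

# With no cross-correlation the joint density is a product of 1D Gaussians.
joint = gaussian_2d(0.3, -0.7, s11=1.5, s12=0.0, s22=0.4)
product = gaussian_1d(0.3, 1.5) * gaussian_1d(-0.7, 0.4)
print(joint, product)
```

A nonzero `s12` couples the two fluctuations, and the density then no longer factorizes.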

Fluctuations of the fundamental thermodynamic quantities

The table below gives the mean square fluctuations of the thermodynamic variables $T$, $V$, $P$ and $S$ in any small part of a body. The small part must still be large enough, however, to have negligible quantum effects.

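| | $\Delta T$ | $\Delta V$ | $\Delta S$ | $\Delta P$ |
|---|---|---|---|---|
| $\Delta T$ | $\dfrac{k_B T^2}{C_V}$ | $0$ | $k_B T$ | $\dfrac{k_B T^2}{C_V}\left(\dfrac{\partial P}{\partial T}\right)_V$ |
| $\Delta V$ | $0$ | $-k_B T\left(\dfrac{\partial V}{\partial P}\right)_T$ | $k_B T\left(\dfrac{\partial V}{\partial T}\right)_P$ | $-k_B T$ |
| $\Delta S$ | $k_B T$ | $k_B T\left(\dfrac{\partial V}{\partial T}\right)_P$ | $k_B C_P$ | $0$ |
| $\Delta P$ | $\dfrac{k_B T^2}{C_V}\left(\dfrac{\partial P}{\partial T}\right)_V$ | $-k_B T$ | $0$ | $-k_B T\left(\dfrac{\partial P}{\partial V}\right)_S$ |

Each entry is the mean value of the product of the row and column quantities, following Landau and Lifshitz.[4] Here $k_B$ is the Boltzmann constant, and $C_V$ and $C_P$ are the heat capacities of the small part at constant volume and at constant pressure.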
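To give a sense of scale, the sketch below evaluates two standard results from Landau and Lifshitz, $\langle (\Delta T)^2 \rangle = k_B T^2 / C_V$ and $\langle (\Delta V)^2 \rangle = -k_B T (\partial V / \partial P)_T$, for a monatomic ideal gas of $N$ particles. The ideal-gas relations $C_V = \tfrac{3}{2} N k_B$ and $PV = N k_B T$ are assumptions of the example, and the function names are illustrative.

```python
import math

def relative_temperature_fluctuation(n_particles):
    """sqrt(<(dT)^2>) / T = sqrt(k_B / C_V), assuming C_V = (3/2) N k_B."""
    return math.sqrt(2.0 / (3.0 * n_particles))

def relative_volume_fluctuation(n_particles):
    """sqrt(<(dV)^2>) / V, assuming P V = N k_B T so that <(dV)^2> = V**2 / N."""
    return 1.0 / math.sqrt(n_particles)

# From a small cluster of particles up to roughly a mole.
for n in (1e3, 1e12, 6e23):
    print(n, relative_temperature_fluctuation(n), relative_volume_fluctuation(n))
```

Both relative fluctuations fall off as $1/\sqrt{N}$, which is why they are unobservably small for macroscopic samples yet significant for very small subsystems.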


Notes

- [1] In statistical mechanics they are often simply referred to as fluctuations.

- [2] Khinchin 1949

- [3] Lavenda 1991

- [4] Landau 1985

References

- Khinchin, A. I. (1949). Mathematical Foundations of Statistical Mechanics. Dover Publications. ISBN 0-486-60147-1.

- Lavenda, B. H. (1991). Statistical Physics: A Probabilistic Approach. Wiley-Interscience. ISBN 0-471-54607-0.

- Landau, L. D.; Lifshitz, E. M. (1985). Statistical Physics, Part 1 (3rd ed.). Pergamon Press. ISBN 0-08-023038-5.
