Telescoping series

In mathematics, a telescoping series is a series whose partial sums eventually have only a fixed number of terms after cancellation.[1][2] The cancellation technique, in which part of each term cancels with part of the next term, is known as the method of differences.

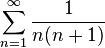

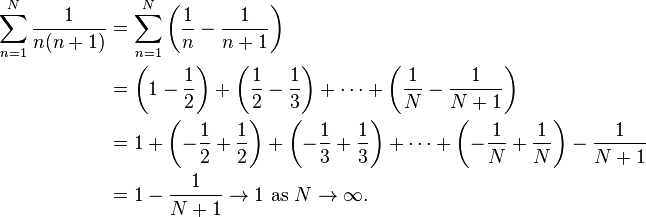

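For example, the series

$$\sum_{n=1}^{\infty} \frac{1}{n(n+1)}$$

(the series of reciprocals of pronic numbers) simplifies as

$$\begin{aligned}
\sum_{n=1}^{\infty} \frac{1}{n(n+1)} &= \sum_{n=1}^{\infty} \left(\frac{1}{n} - \frac{1}{n+1}\right) \\
&= \lim_{N\to\infty} \sum_{n=1}^{N} \left(\frac{1}{n} - \frac{1}{n+1}\right) \\
&= \lim_{N\to\infty} \left[\left(1 - \frac{1}{2}\right) + \left(\frac{1}{2} - \frac{1}{3}\right) + \cdots + \left(\frac{1}{N} - \frac{1}{N+1}\right)\right] \\
&= \lim_{N\to\infty} \left[1 - \frac{1}{N+1}\right] = 1.
\end{aligned}$$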
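As a quick check of the cancellation, the following Python sketch compares the term-by-term partial sums of $\sum 1/(n(n+1))$ with the closed form $1 - 1/(N+1)$, using exact rational arithmetic. The function name `partial_sum` is purely illustrative.

```python
from fractions import Fraction

def partial_sum(N):
    """Sum of 1/(n(n+1)) for n = 1..N, computed term by term."""
    return sum(Fraction(1, n * (n + 1)) for n in range(1, N + 1))

for N in (1, 2, 10, 100):
    # After cancellation only the first and last pieces survive.
    assert partial_sum(N) == 1 - Fraction(1, N + 1)

# The partial sums approach 1 as N grows.
print(float(partial_sum(1000)))
```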

In general

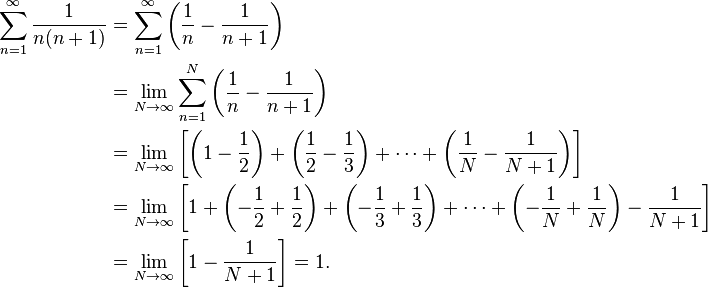

Let  be a sequence of numbers. Then,

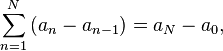

be a sequence of numbers. Then,

and, if

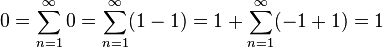
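The general identity $\sum_{n=1}^{N} (a_n - a_{n-1}) = a_N - a_0$ can be checked with a short Python sketch. The helper `telescoping_sum` and the example sequence `a` are assumptions made for illustration; any sequence defined for $n \ge 0$ would do.

```python
from fractions import Fraction

def telescoping_sum(a, N):
    """Sum of a(n) - a(n-1) for n = 1..N, added term by term."""
    return sum(a(n) - a(n - 1) for n in range(1, N + 1))

def a(n):
    # Hypothetical example sequence with a(n) -> 0 as n grows.
    return Fraction(1, n + 1)

for N in (1, 5, 50):
    # Every intermediate value cancels; only a(N) and a(0) remain.
    assert telescoping_sum(a, N) == a(N) - a(0)
```

For this choice of `a`, the value `a(N)` tends to 0, so the sums approach `-a(0)`, which is -1 here.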

A pitfall

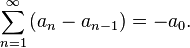

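Although telescoping can be a useful technique, there are pitfalls to watch out for:

$$0 = \sum_{n=1}^{\infty} 0 = \sum_{n=1}^{\infty} (1 - 1) = 1 + \sum_{n=1}^{\infty} (-1 + 1) = 1$$

is not correct because this regrouping of terms is invalid unless the individual terms converge to 0; see Grandi's series. The way to avoid this error is to find the sum of the first N terms first and then take the limit as N approaches infinity:

$$\sum_{n=1}^{N} \frac{1}{n(n+1)} = \sum_{n=1}^{N} \left(\frac{1}{n} - \frac{1}{n+1}\right) = 1 - \frac{1}{N+1} \to 1 \quad \text{as } N \to \infty.$$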
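The failure of the regrouping can be seen by listing the partial sums of Grandi's series $1 - 1 + 1 - 1 + \cdots$ directly. The sketch below is only an illustration, and the name `grandi_partial_sums` is hypothetical.

```python
from itertools import accumulate, cycle, islice

def grandi_partial_sums(count):
    """First `count` partial sums of 1 - 1 + 1 - 1 + ..."""
    terms = islice(cycle([1, -1]), count)
    return list(accumulate(terms))

sums = grandi_partial_sums(8)
print(sums)  # [1, 0, 1, 0, 1, 0, 1, 0]
```

The partial sums alternate between 1 and 0 and never settle, so the series has no sum. Grouping the terms as $(1 - 1) + (1 - 1) + \cdots$ looks only at the even-indexed partial sums, and grouping them as $1 + (-1 + 1) + \cdots$ looks only at the odd-indexed ones, which is why the two regroupings appear to give different answers.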

More examples

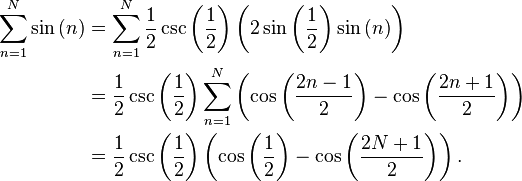

- Many trigonometric functions admit representation as a difference, which allows telescopic cancelling between consecutive terms.

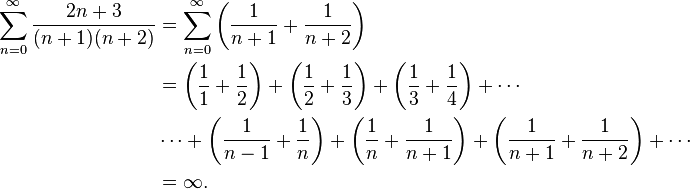

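- Some sums of the form

  $$\sum_{n=1}^{N} \frac{f(n)}{g(n)},$$

  where f and g are polynomial functions whose quotient may be broken up into partial fractions, will fail to admit summation by this method. In particular, we have

  $$\sum_{n=0}^{\infty} \frac{2n+3}{(n+1)(n+2)} = \sum_{n=0}^{\infty} \left(\frac{1}{n+1} + \frac{1}{n+2}\right) = \left(\frac{1}{1} + \frac{1}{2}\right) + \left(\frac{1}{2} + \frac{1}{3}\right) + \left(\frac{1}{3} + \frac{1}{4}\right) + \cdots = \infty.$$

  The problem is that the terms do not cancel.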

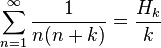

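- Let k be a positive integer. Then

  $$\sum_{n=1}^{\infty} \frac{1}{n(n+k)} = \frac{H_k}{k},$$

  where $H_k$ is the kth harmonic number. Writing each term as $\frac{1}{k}\left(\frac{1}{n} - \frac{1}{n+k}\right)$, all of the terms after $1/k$ cancel.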
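Two of the examples above can be checked numerically. For the trigonometric case, the sketch assumes the illustrative sum $\sum_{n=1}^{N} \sin n$, which telescopes through the identity $2 \sin\tfrac{1}{2} \sin n = \cos\left(n - \tfrac{1}{2}\right) - \cos\left(n + \tfrac{1}{2}\right)$; this particular sum is chosen here as an example and is not necessarily the one the text intended. For the harmonic-number case, it compares the partial sums of $\sum 1/(n(n+k))$ with what survives the cancellation. All function names are hypothetical.

```python
import math
from fractions import Fraction

def sine_sum_closed_form(N):
    """Closed form of sin(1) + ... + sin(N) obtained by telescoping."""
    return (math.cos(0.5) - math.cos(N + 0.5)) / (2 * math.sin(0.5))

def harmonic(k):
    """k-th harmonic number H_k as an exact fraction."""
    return sum(Fraction(1, j) for j in range(1, k + 1))

def shifted_partial_sum(k, N):
    """Sum of 1/(n(n+k)) for n = 1..N, term by term."""
    return sum(Fraction(1, n * (n + k)) for n in range(1, N + 1))

def shifted_closed_form(k, N):
    """(H_k - (1/(N+1) + ... + 1/(N+k))) / k, the part left after cancellation."""
    tail = sum(Fraction(1, j) for j in range(N + 1, N + k + 1))
    return (harmonic(k) - tail) / k

N = 50
direct = sum(math.sin(n) for n in range(1, N + 1))
assert math.isclose(direct, sine_sum_closed_form(N), abs_tol=1e-9)

for k in (1, 2, 5):
    assert shifted_partial_sum(k, N) == shifted_closed_form(k, N)
```

As N grows, the tail $1/(N+1) + \cdots + 1/(N+k)$ tends to 0, so the partial sums approach $H_k / k$.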

An application in probability theory

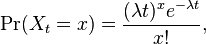

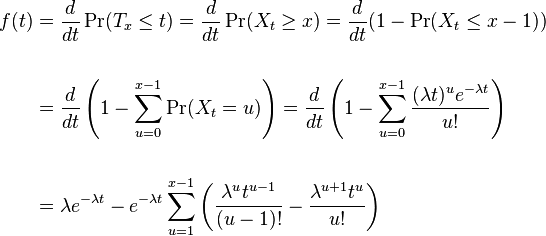

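In probability theory, a Poisson process is a stochastic process of which the simplest case involves "occurrences" at random times. The waiting time until the next occurrence has a memoryless exponential distribution, and the number of occurrences in any time interval has a Poisson distribution whose expected value is proportional to the length of the interval. Let $X_t$ be the number of occurrences before time $t$, and let $T_x$ be the waiting time until the $x$th occurrence. We seek the probability density function of the random variable $T_x$. We use the probability mass function for the Poisson distribution, which tells us that

$$\Pr(X_t = x) = \frac{(\lambda t)^x e^{-\lambda t}}{x!},$$

where $\lambda$ is the average number of occurrences in any time interval of length 1. Observe that the event $\{X_t \ge x\}$ is the same as the event $\{T_x \le t\}$, and thus they have the same probability. The density function we seek is therefore

$$\begin{aligned}
f(t) &= \frac{d}{dt}\Pr(T_x \le t) = \frac{d}{dt}\Pr(X_t \ge x) = \frac{d}{dt}\bigl(1 - \Pr(X_t \le x-1)\bigr) \\
&= \frac{d}{dt}\left(1 - \sum_{u=0}^{x-1} \Pr(X_t = u)\right) = \frac{d}{dt}\left(1 - \sum_{u=0}^{x-1} \frac{(\lambda t)^u e^{-\lambda t}}{u!}\right) \\
&= \lambda e^{-\lambda t} - e^{-\lambda t} \sum_{u=1}^{x-1} \left(\frac{\lambda^u t^{u-1}}{(u-1)!} - \frac{\lambda^{u+1} t^u}{u!}\right).
\end{aligned}$$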

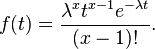

The sum telescopes, leaving the density

$$f(t) = \frac{\lambda^x t^{x-1} e^{-\lambda t}}{(x-1)!}.$$
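The result can be sanity-checked numerically: the derivative of $\Pr(X_t \ge x)$ with respect to $t$ should match the closed form $\lambda^x t^{x-1} e^{-\lambda t} / (x-1)!$. The Python sketch below approximates the derivative with a central finite difference. The function names and the parameter values are assumptions chosen only for illustration.

```python
import math

def prob_at_least(x, t, lam):
    """Pr(X_t >= x) for a Poisson count with mean lam * t."""
    below = sum((lam * t) ** u * math.exp(-lam * t) / math.factorial(u)
                for u in range(x))
    return 1.0 - below

def density(x, t, lam):
    """Closed form lam^x t^(x-1) e^(-lam t) / (x-1)! left after telescoping."""
    return lam ** x * t ** (x - 1) * math.exp(-lam * t) / math.factorial(x - 1)

# Hypothetical parameter values, chosen only for illustration.
x, t, lam, h = 3, 1.7, 2.0, 1e-5

# Central finite difference approximating d/dt Pr(T_x <= t) = d/dt Pr(X_t >= x).
numeric = (prob_at_least(x, t + h, lam) - prob_at_least(x, t - h, lam)) / (2 * h)
assert math.isclose(numeric, density(x, t, lam), rel_tol=1e-6)
```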

Other applications

For other applications, see:

- Grandi's series;

- Proof that the sum of the reciprocals of the primes diverges, where one of the proofs uses a telescoping sum;

- Order statistic, where a telescoping sum occurs in the derivation of a probability density function;

- Lefschetz fixed-point theorem, where a telescoping sum arises in algebraic topology;

- Homology theory, again in algebraic topology;

- Eilenberg–Mazur swindle, where a telescoping sum of knots occurs;

- Fundamental theorem of calculus, a continuous analog of telescoping series.

Notes and references

1. Tom M. Apostol, Calculus, Volume 1, Blaisdell Publishing Company, 1962, pages 422–3.

2. Brian S. Thomson and Andrew M. Bruckner, Elementary Real Analysis, Second Edition, CreateSpace, 2008, page 85.