Stationary process

In mathematics and statistics, a stationary process (or strict(ly) stationary process or strong(ly) stationary process) is a stochastic process whose joint probability distribution does not change when shifted in time. Consequently, parameters such as the mean and variance, if they are present, also do not change over time and do not follow any trends.

Stationarity is used as a tool in time series analysis, where the raw data is often transformed to become stationary; for example, economic data are often seasonal and/or dependent on a non-stationary price level. An important type of non-stationary process that does not include a trend-like behavior is the cyclostationary process.
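One common transformation is first differencing. The sketch below shows it on a simulated Gaussian random walk, which stands in for a trending series. NumPy, the seed and the series lengths are illustrative assumptions, not taken from the text.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Illustrative non-stationary series: a Gaussian random walk W_t = e_1 + ... + e_t.
steps = rng.normal(loc=0.0, scale=1.0, size=1000)
walk = np.cumsum(steps)

# First differencing, W_t - W_{t-1}, recovers the i.i.d. steps (white noise).
diffed = np.diff(walk)
print(np.allclose(diffed, steps[1:]))

# Across many realisations the walk's variance grows with t (about t + 1 here),
# while the differenced series keeps roughly unit variance at every t.
walks = np.cumsum(rng.normal(size=(5000, 100)), axis=1)
walk_var = walks[:, [9, 99]].var(axis=0)
diff_var = np.diff(walks, axis=1)[:, [9, 98]].var(axis=0)
print(walk_var, diff_var)
```

Differencing only removes this kind of stochastic trend. Seasonal patterns usually call for other transformations, such as seasonal differencing.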

Note that a "stationary process" is not the same thing as a "process with a stationary distribution". Indeed, there are further possibilities for confusion with the use of "stationary" in the context of stochastic processes; for example, a "time-homogeneous" Markov chain is sometimes said to have "stationary transition probabilities". On the other hand, all stationary Markov random processes are time-homogeneous.

Definition

Formally, let $\{X_t\}$ be a stochastic process and let $F_X(x_{t_1+\tau}, \ldots, x_{t_k+\tau})$ represent the cumulative distribution function of the joint distribution of $\{X_t\}$ at times $t_1+\tau, \ldots, t_k+\tau$. Then, $\{X_t\}$ is said to be strictly (or strongly) stationary if, for all $k$, for all $\tau$, and for all $t_1, \ldots, t_k$,

$$F_X(x_{t_1+\tau}, \ldots, x_{t_k+\tau}) = F_X(x_{t_1}, \ldots, x_{t_k}).$$

Since $\tau$ does not affect $F_X(\cdot)$, $F_X$ is not a function of time.
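Strict stationarity cannot be verified exactly from finite data. The sketch below only compares one instance of the condition: $k = 2$, one shift $\tau$ and one pair of thresholds. It uses empirical joint CDFs computed across many simulated realisations. NumPy, the i.i.d. Gaussian noise and random-walk test processes, and the helper `empirical_joint_cdf` are all illustrative assumptions.

```python
import numpy as np

def empirical_joint_cdf(paths, times, thresholds):
    """Empirical F_X: fraction of realisations with X_{t_i} <= x_i for every i."""
    below = paths[:, times] <= np.asarray(thresholds)
    return below.all(axis=1).mean()

rng = np.random.default_rng(seed=1)
n_paths, n_steps = 20_000, 60
noise = rng.normal(size=(n_paths, n_steps))  # i.i.d. N(0, 1): strictly stationary
walk = np.cumsum(noise, axis=1)              # random walk: not stationary

times, tau, x = [5, 8], 40, [1.0, 2.0]
shifted = [t + tau for t in times]
results = {}
for name, paths in [("white noise", noise), ("random walk", walk)]:
    # (F_X at t_1, t_2 ; F_X at t_1 + tau, t_2 + tau), same thresholds x.
    results[name] = (empirical_joint_cdf(paths, times, x),
                     empirical_joint_cdf(paths, shifted, x))
    print(name, "F at t: %.3f, F at t + tau: %.3f" % results[name])
```

For the white noise, the two estimates agree up to sampling error. For the random walk, they differ noticeably because its spread grows with time. Agreement in such a test is necessary for stationarity but never sufficient.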

Examples

As an example, white noise is stationary. The sound of a cymbal clashing, if hit only once, is not stationary because the acoustic power of the clash (and hence its variance) diminishes with time. However, it would be possible to invent a stochastic process describing when the cymbal is hit, such that the overall response would form a stationary process. For example, if the cymbal were hit at moments in time corresponding to a homogeneous Poisson process, the overall response would be stationary.
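The cymbal example can be sketched with a crude shot-noise model. In this model, each hit adds a random-amplitude response that decays exponentially, and hits arrive as a homogeneous Poisson process. The decay kernel, the Gaussian amplitudes, the parameter values and the helper `response` are hypothetical modelling choices for illustration, not an acoustic model of a real cymbal.

```python
import numpy as np

rng = np.random.default_rng(seed=2)

# Hypothetical parameters: hit rate (hits per second), decay time (s) of each clash,
# simulated span (s), and evaluation times chosen well after the start-up transient.
rate, decay, horizon = 2.0, 0.5, 50.0
t_eval = np.array([20.0, 30.0, 40.0])

def response(t, hit_times, amplitudes):
    """Sum over past hits of amplitude * exp(-(t - hit_time) / decay)."""
    lag = t[:, None] - hit_times[None, :]
    kernel = np.where(lag >= 0, np.exp(-np.clip(lag, 0.0, None) / decay), 0.0)
    return kernel @ amplitudes

samples = np.empty((4000, t_eval.size))
for k in range(samples.shape[0]):
    n_hits = rng.poisson(rate * horizon)                 # Poisson number of hits
    hit_times = rng.uniform(0.0, horizon, size=n_hits)   # placed uniformly in time
    amplitudes = rng.normal(size=n_hits)                 # random strength per hit
    samples[k] = response(t_eval, hit_times, amplitudes)

# Ensemble variance is roughly constant in time (Campbell's theorem gives
# rate * decay / 2 = 0.5 for this model), unlike a single clash at time 0,
# whose variance exp(-2 t / decay) dies away.
print(samples.var(axis=0))
print(np.exp(-2 * np.array([0.5, 1.0, 2.0]) / decay))
```

Starting the simulation at time 0 introduces a transient. Evaluating only at late times makes the leftover effect negligible.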

An example of a discrete-time stationary process where the sample space is also discrete (so that the random variable may take one of N possible values) is a Bernoulli scheme. Other examples of a discrete-time stationary process with continuous sample space include some autoregressive and moving average processes which are both subsets of the autoregressive moving average model. Models with a non-trivial autoregressive component may be either stationary or non-stationary, depending on the parameter values, and important non-stationary special cases are where unit roots exist in the model.
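For autoregressive models, the dependence on parameter values is usually checked through the roots of the characteristic polynomial. The sketch below uses the standard condition for a causal, stationary AR(p) model: all roots of $1 - \phi_1 z - \cdots - \phi_p z^p$ lie strictly outside the unit circle. NumPy, the function name `ar_is_stationary` and the tolerance `tol` are illustrative assumptions.

```python
import numpy as np

def ar_is_stationary(phi, tol=1e-8):
    """Standard check for a causal, stationary AR(p) model
    X_t = phi[0] X_{t-1} + ... + phi[p-1] X_{t-p} + e_t:
    every root of 1 - phi[0] z - ... - phi[p-1] z^p must lie outside the unit circle."""
    phi = np.asarray(phi, dtype=float)
    # np.roots wants coefficients ordered from the highest power of z down to z^0.
    coeffs = np.r_[-phi[::-1], 1.0]
    roots = np.roots(coeffs)
    # tol guards against rounding pushing an exact unit root just above 1.
    return bool(np.all(np.abs(roots) > 1.0 + tol))

print(ar_is_stationary([0.5]))        # True: AR(1) with root z = 2
print(ar_is_stationary([1.0]))        # False: random walk, unit root z = 1
print(ar_is_stationary([0.5, 0.3]))   # True: AR(2) with roots near 1.17 and -2.84
```

A unit root, as in the second call, is exactly the non-stationary special case mentioned above.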

Let $Y$ be any scalar random variable, and define a time series $\{X_t\}$ by

$$X_t = Y \quad \text{for all } t.$$

Then $\{X_t\}$ is a stationary time series, for which realisations consist of a series of constant values, with a different constant value for each realisation. A law of large numbers does not apply in this case, as the limiting value of an average from a single realisation takes the random value determined by $Y$, rather than taking the expected value of $Y$.
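The failure of the law of large numbers is easy to see numerically. In the sketch below, the exponential distribution for $Y$ (with $\mathbb{E}[Y] = 1$) and the use of NumPy are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(seed=3)

# Each realisation draws Y once (here, hypothetically, Y ~ Exponential with mean 1)
# and then sets X_t = Y for every t.
n_realisations, n_times = 5, 10_000
y = rng.exponential(scale=1.0, size=n_realisations)
x = np.repeat(y[:, None], n_times, axis=1)

# The time average of each realisation equals that realisation's Y,
# not E[Y] = 1, however long the series is.
time_averages = x.mean(axis=1)
print(np.round(time_averages, 3))
print(np.round(y, 3))
```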

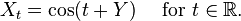

As a further example of a stationary process for which any single realisation has an apparently noise-free structure, let $Y$ have a uniform distribution on $(0, 2\pi]$ and define the time series $\{X_t\}$ by

$$X_t = \cos(t + Y) \quad \text{for } t \in \mathbb{R}.$$

Then $\{X_t\}$ is strictly stationary.
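Strict stationarity holds here because shifting $t$ by $\tau$ is equivalent to shifting the phase $Y$ by $\tau$ modulo $2\pi$, which leaves its uniform distribution unchanged. The Monte Carlo sketch below checks only the first two moments. They should match $\mathbb{E}[X_t] = 0$ and $\mathbb{E}[X_t X_s] = \tfrac{1}{2}\cos(t - s)$, which follow from $\cos a \cos b = \tfrac{1}{2}[\cos(a - b) + \cos(a + b)]$. NumPy, the sample size and the chosen time pairs are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=4)
# One phase Y per realisation, uniform on [0, 2*pi) (the endpoint convention
# differs from (0, 2*pi] only on a set of probability zero).
y = rng.uniform(0.0, 2 * np.pi, size=200_000)

def x(t):
    """X_t = cos(t + Y) evaluated at time t across all realisations."""
    return np.cos(t + y)

results = []
for t, s in [(0.0, 1.0), (5.0, 6.0), (10.0, 10.0), (3.0, 7.0)]:
    mean_t = x(t).mean()                 # should be close to 0 for every t
    cov_ts = np.mean(x(t) * x(s))        # E[X_t X_s], since the true mean is 0
    theory = np.cos(t - s) / 2           # depends only on t - s
    results.append((t, s, mean_t, cov_ts, theory))
    print(f"t={t}, s={s}: mean {mean_t:+.3f}, E[X_t X_s] {cov_ts:.3f}, theory {theory:.3f}")
```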

Weaker forms of stationarity

Weak or wide-sense stationarity

A weaker form of stationarity commonly employed in signal processing is known as weak-sense stationarity, wide-sense stationarity (WSS), covariance stationarity, or second-order stationarity. WSS random processes only require that the first moment (the mean) and the autocovariance do not vary with respect to time. Any strictly stationary process which has a finite mean and covariance is also WSS.

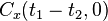

So, a continuous-time random process x(t) which is WSS has the following restrictions on its mean function

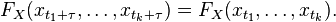

and autocovariance function

The first property implies that the mean function mx(t) must be constant. The second property implies that the covariance function depends only on the difference between  and

and  and only needs to be indexed by one variable rather than two variables. Thus, instead of writing,

and only needs to be indexed by one variable rather than two variables. Thus, instead of writing,

the notation is often abbreviated and written as:

This also implies that the autocorrelation depends only on  , that is

, that is
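The lag-only dependence can be checked empirically by estimating $C_x(t_1, t_2)$ across many realisations at fixed absolute times. The sketch below assumes a discrete-time MA(1) process $x_t = e_t + \theta e_{t-1}$ with i.i.d. $N(0, 1)$ innovations and $\theta = 0.6$. For this process, theory gives $C_x(0) = 1 + \theta^2 = 1.36$, $C_x(\pm 1) = \theta = 0.6$, and $C_x(\tau) = 0$ for $|\tau| \ge 2$. NumPy, the process and the helper `ensemble_cov` are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(seed=5)
theta, n_paths, n_steps = 0.6, 50_000, 50

# MA(1) process x_t = e_t + theta * e_{t-1} with i.i.d. N(0, 1) innovations e_t.
e = rng.normal(size=(n_paths, n_steps + 1))
x = e[:, 1:] + theta * e[:, :-1]

def ensemble_cov(t1, t2):
    """Estimate C_x(t1, t2) by averaging across realisations at fixed times."""
    a, b = x[:, t1], x[:, t2]
    return np.mean((a - a.mean()) * (b - b.mean()))

# Pairs with the same lag t1 - t2 but different absolute times give
# approximately the same estimate.
for t1, t2 in [(10, 9), (40, 39), (10, 10), (40, 40), (30, 27)]:
    print(t1, t2, round(ensemble_cov(t1, t2), 3))
```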

The main advantage of wide-sense stationarity is that it places the time series in the context of Hilbert spaces. Let $H$ be the Hilbert space generated by $\{x(t)\}$ (that is, the closure of the set of all linear combinations of these random variables in the Hilbert space of all square-integrable random variables on the given probability space). By the positive definiteness of the autocovariance function, it follows from Bochner's theorem that there exists a positive measure $\mu$ on the real line such that $H$ is isomorphic to the Hilbert subspace of $L^2(\mu)$ generated by $\{e^{-2\pi i \xi t}\}$. This then gives the following Fourier-type decomposition for a continuous-time wide-sense stationary stochastic process: there exists a stochastic process $\omega_\xi$ with orthogonal increments such that, for all $t$,

$$x(t) = \int e^{-2\pi i \xi t} \, d\omega_\xi,$$

where the integral on the right-hand side is interpreted in a suitable (Riemann) sense. The same result holds for a discrete-time stationary process, with the spectral measure now defined on the unit circle.
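A finite, discrete analogue of the pairing between autocovariance and spectrum that Bochner's theorem describes is the Wiener-Khinchin identity for a finite sample. It states that the periodogram equals the discrete Fourier transform of the circular sample autocovariance. The sketch below is only this finite identity, not the spectral representation theorem itself. NumPy and the white-noise test signal are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=6)
n = 256
x = rng.normal(size=n)
x = x - x.mean()  # remove the sample mean

# Periodogram: squared magnitude of the DFT, normalised by n.
periodogram = np.abs(np.fft.fft(x)) ** 2 / n

# Circular sample autocovariance c[m] = (1/n) * sum_j x[j] * x[(j + m) mod n];
# np.roll(x, -m)[j] is x[(j + m) % n].
c = np.array([np.dot(x, np.roll(x, -m)) for m in range(n)]) / n

# The DFT of c reproduces the periodogram (up to floating-point rounding).
print(np.allclose(np.fft.fft(c), periodogram))
```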

When processing WSS random signals with linear, time-invariant (LTI) filters, it is helpful to think of the correlation function as a linear operator. Since it is a circulant operator (it depends only on the difference between the two arguments), its eigenfunctions are the Fourier complex exponentials. Additionally, since the eigenfunctions of LTI operators are also complex exponentials, LTI processing of WSS random signals is highly tractable: all computations can be performed in the frequency domain. Thus, the WSS assumption is widely employed in signal processing algorithms.
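The eigenfunction claim can be checked directly in a finite, discrete setting. In the sketch below, a circulant matrix built from a hypothetical circular autocorrelation sequence `r` is applied to each complex exponential. Each exponential is returned scaled by the corresponding DFT coefficient of `r`. NumPy and the values in `r` are illustrative assumptions.

```python
import numpy as np

n = 8
# Hypothetical circular autocorrelation sequence r[m] (symmetric: r[m] == r[n - m]).
r = np.array([1.0, 0.5, 0.2, 0.0, 0.0, 0.0, 0.2, 0.5])

# Circulant correlation operator: entry (i, j) depends only on (i - j) mod n.
i, j = np.indices((n, n))
C = r[(i - j) % n]

# Each complex exponential v_k[j] = exp(2*pi*1j*k*j/n) is an eigenvector of C,
# with eigenvalue equal to the k-th DFT coefficient of r.
eigenvalues = np.fft.fft(r)
for k in range(n):
    v = np.exp(2j * np.pi * k * np.arange(n) / n)
    assert np.allclose(C @ v, eigenvalues[k] * v)
print(np.round(eigenvalues.real, 3))
```

Because `r` is symmetric, the eigenvalues are real. They play the role of a discrete power spectrum.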

Other terminology

The terminology used for types of stationarity other than strict stationarity can be rather mixed. Some examples follow.

- Priestley uses the term "stationary up to order m" if conditions similar to those given here for wide-sense stationarity apply to moments up to order m.[1][2] Thus wide-sense stationarity would be equivalent to "stationary to order 2", which is different from the definition of second-order stationarity given here.


References

- Priestley, M. B. (1981). Spectral Analysis and Time Series. Academic Press. ISBN 0-12-564922-3.

- Priestley, M. B. (1988). Non-linear and Non-stationary Time Series Analysis. Academic Press. ISBN 0-12-564911-8.

- Honarkhah, M.; Caers, J. (2010). "Stochastic Simulation of Patterns Using Distance-Based Pattern Modeling". Mathematical Geosciences 42 (5): 487–517. doi:10.1007/s11004-010-9276-7.

- Tahmasebi, P.; Sahimi, M. (2015). "Reconstruction of nonstationary disordered materials and media: Watershed transform and cross-correlation function". Physical Review E 91 (3). doi:10.1103/PhysRevE.91.032401.

Further reading

- Enders, Walter (2010). Applied Econometric Time Series (Third ed.). New York: Wiley. pp. 53–57. ISBN 978-0-470-50539-7.
