Stein's lemma

Stein's lemma,[1] named in honor of Charles Stein, is a theorem of probability theory that is of interest primarily because of its applications to statistical inference (in particular, to James–Stein estimation and empirical Bayes methods) and to portfolio choice theory. The theorem gives a formula for the covariance of one random variable with the value of a function of another, when the two random variables are jointly normally distributed.

Statement of the lemma

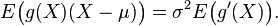

Suppose $X$ is a normally distributed random variable with expectation $\mu$ and variance $\sigma^2$. Further suppose $g$ is a differentiable function for which the two expectations $E\bigl(g(X)(X-\mu)\bigr)$ and $E\bigl(g'(X)\bigr)$ both exist. (The existence of the expectation of any random variable is equivalent to the finiteness of the expectation of its absolute value.) Then

$$E\bigl(g(X)(X-\mu)\bigr) = \sigma^2\, E\bigl(g'(X)\bigr).$$

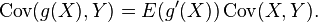

In general, suppose $X$ and $Y$ are jointly normally distributed. Then

$$\operatorname{Cov}\bigl(g(X), Y\bigr) = \operatorname{Cov}(X, Y)\, E\bigl(g'(X)\bigr).$$
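The univariate identity $E\bigl(g(X)(X-\mu)\bigr) = \sigma^2 E\bigl(g'(X)\bigr)$ can be checked numerically. The following is a minimal sketch, not part of the lemma itself: the choice $g = \sin$, the parameter values, and the helper names `normal_pdf` and `expect` are illustrative assumptions, and the expectations are approximated by a plain trapezoidal rule rather than computed exactly.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of the normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def expect(func, mu, sigma, half_width=12.0, n=20000):
    """Approximate E[func(X)] for X ~ N(mu, sigma^2) by the trapezoidal
    rule on [mu - half_width*sigma, mu + half_width*sigma]."""
    a = mu - half_width * sigma
    b = mu + half_width * sigma
    step = (b - a) / n
    total = 0.0
    for i in range(n + 1):
        x = a + i * step
        weight = 0.5 if i in (0, n) else 1.0  # endpoints count half
        total += weight * func(x) * normal_pdf(x, mu, sigma)
    return total * step

# Illustrative (assumed) parameters and test function.
mu, sigma = 1.5, 0.8
g = math.sin
g_prime = math.cos

lhs = expect(lambda x: g(x) * (x - mu), mu, sigma)  # E[g(X)(X - mu)]
rhs = sigma ** 2 * expect(g_prime, mu, sigma)       # sigma^2 E[g'(X)]
print(lhs, rhs)
```

For this particular $g$ both sides also have a closed form, $\sigma^2 \cos(\mu)\, e^{-\sigma^2/2}$, which gives an independent check on the quadrature.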

Proof

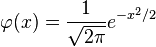

In order to prove the univariate version of this lemma, recall that the probability density function for the normal distribution with expectation 0 and variance 1 is

$$\varphi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}$$

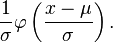

and that for a normal distribution with expectation $\mu$ and variance $\sigma^2$ is

$$\frac{1}{\sigma}\, \varphi\!\left(\frac{x-\mu}{\sigma}\right).$$

Then use integration by parts.
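The integration by parts can be sketched as follows. This sketch assumes that $g(x)\varphi(x) \to 0$ as $x \to \pm\infty$, so that the boundary term vanishes; that is an assumption made here for simplicity. Take first a standard normal $Z$ with density $\varphi(x) = (2\pi)^{-1/2} e^{-x^2/2}$, for which $\varphi'(x) = -x\,\varphi(x)$:

$$
\begin{aligned}
E\bigl(g(Z)\,Z\bigr) &= \int_{-\infty}^{\infty} g(x)\, x\, \varphi(x)\, dx = -\int_{-\infty}^{\infty} g(x)\, \varphi'(x)\, dx \\
&= -\Bigl[g(x)\,\varphi(x)\Bigr]_{-\infty}^{\infty} + \int_{-\infty}^{\infty} g'(x)\, \varphi(x)\, dx = E\bigl(g'(Z)\bigr).
\end{aligned}
$$

For general $X = \mu + \sigma Z$, apply this to $\tilde g(z) = g(\mu + \sigma z)$, whose derivative is $\tilde g'(z) = \sigma\, g'(\mu + \sigma z)$:

$$E\bigl(g(X)(X-\mu)\bigr) = \sigma\, E\bigl(\tilde g(Z)\, Z\bigr) = \sigma\, E\bigl(\tilde g'(Z)\bigr) = \sigma^2\, E\bigl(g'(X)\bigr).$$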

More general statement

Suppose X is in an exponential family, that is, X has the density

Suppose this density has support  where

where  could be

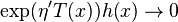

could be  and as

and as  ,

, where

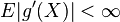

where  is any differentiable function such that

is any differentiable function such that  or

or  if

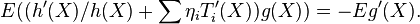

if  finite. Then

finite. Then

The derivation is the same as in the special case, namely integration by parts.
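As a numerical sanity check of an exponential-family identity of the form $E\bigl[\bigl(h'(X)/h(X) + \eta\, T'(X)\bigr)\, g(X)\bigr] = -E\bigl[g'(X)\bigr]$, the sketch below uses a gamma distribution with shape $k$ and scale $\theta$, written with $T(x) = x$, $\eta = -1/\theta$ and $h(x) = x^{k-1}$ on the support $(0, \infty)$. The distribution, the choice $g(x) = x^2$, the parameter values, and the helper names are all illustrative assumptions. The expectations are approximated with a midpoint rule on a truncated interval.

```python
import math

def gamma_pdf(x, k, theta):
    """Density of the gamma distribution with shape k and scale theta."""
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta ** k)

def expect(func, k, theta, upper=200.0, n=200000):
    """Approximate E[func(X)] by the midpoint rule on (0, upper).
    Midpoints avoid evaluating func at x = 0, where 1/x is undefined."""
    step = upper / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * step
        total += func(x) * gamma_pdf(x, k, theta)
    return total * step

# Illustrative (assumed) parameters: shape k > 1 so the boundary term vanishes at 0.
k, theta = 3.0, 2.0
eta = -1.0 / theta  # natural parameter for T(x) = x

def g(x):
    return x * x

def g_prime(x):
    return 2.0 * x

def score_term(x):
    # h'(x)/h(x) + eta * T'(x), with h(x) = x^(k-1) and T(x) = x
    return (k - 1.0) / x + eta

lhs = expect(lambda x: score_term(x) * g(x), k, theta)
rhs = -expect(g_prime, k, theta)
print(lhs, rhs)
```

For these choices both sides equal $-2k\theta$ exactly, since $E[X] = k\theta$ and $E[X^2] = k(k+1)\theta^2$ for the gamma distribution.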

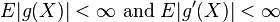

If we only know that $X$ has support $\mathbb{R}$, then it could be the case that $E|g(X)| < \infty$ and $E|g'(X)| < \infty$ but $\lim_{x \to \infty} f_\eta(x)\, g(x) \neq 0$. To see this, simply put $g(x) = 1$ and take $f_\eta(x)$ with infinitely many spikes towards infinity but still integrable. One such example could be adapted from

$$f(x) = \begin{cases} 1 & x \in [n,\, n + 2^{-n}) \\ 0 & \text{otherwise} \end{cases}$$

so that $f$ is smooth.

Extensions to elliptically-contoured distributions also exist.[2][3]

References

- [1] Ingersoll, J. (1987). Theory of Financial Decision Making. Rowman and Littlefield. pp. 13–14.

- [2] Hamada, Mahmoud; Valdez, Emiliano A. (2008). "CAPM and option pricing with elliptically contoured distributions". The Journal of Risk & Insurance 75 (2): 387–409. doi:10.1111/j.1539-6975.2008.00265.x.

- [3] Landsman, Zinoviy; Nešlehová, Johanna (2008). "Stein's Lemma for elliptical random vectors". Journal of Multivariate Analysis 99 (5): 912–927. doi:10.1016/j.jmva.2007.05.006.