Square root of a matrix

In mathematics, the square root of a matrix extends the notion of square root from numbers to matrices.

A matrix B is said to be a square root of A if the matrix product BB is equal to A.[1]

Properties

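In general, a matrix can have several square roots. For example, the matrix

$$\begin{pmatrix} 33 & 24 \\ 48 & 57 \end{pmatrix}$$

has square roots

$$\begin{pmatrix} 1 & 4 \\ 8 & 5 \end{pmatrix} \quad\text{and}\quad \begin{pmatrix} 5 & 2 \\ 4 & 7 \end{pmatrix},$$

as well as their additive inverses.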

Another example is the 2×2 identity matrix, which has infinitely many symmetric rational square roots given by

$$\frac{1}{t}\begin{pmatrix} s & r \\ r & -s \end{pmatrix},$$

where $(r, s, t)$ is any Pythagorean triple, that is, any set of positive integers such that $r^2 + s^2 = t^2$.[2]
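The Pythagorean-triple family can be checked with exact rational arithmetic. The sketch below assumes the family has the form (1/t)·[[s, r], [r, −s]]; the helper names `identity_root` and `matmul` are illustrative, not taken from the cited source.

```python
from fractions import Fraction


def identity_root(r, s, t):
    """Return (1/t) * [[s, r], [r, -s]] with exact rational entries."""
    if r * r + s * s != t * t:
        raise ValueError("(r, s, t) is not a Pythagorean triple")
    return [[Fraction(s, t), Fraction(r, t)],
            [Fraction(r, t), Fraction(-s, t)]]


def matmul(X, Y):
    """Multiply two square matrices stored as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


IDENTITY = [[1, 0], [0, 1]]

# Each triple gives a different symmetric rational square root of I.
for triple in [(3, 4, 5), (5, 12, 13), (8, 15, 17)]:
    B = identity_root(*triple)
    assert matmul(B, B) == IDENTITY
```

Because the entries are `Fraction` objects, the check B·B = I is exact rather than approximate.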

However, a positive-semidefinite matrix has precisely one positive-semidefinite square root, which can be called its principal square root.

While the square root of a nonnegative integer is either again an integer or an irrational number, in contrast an integer matrix can have a square root whose entries are rational, yet not integer. For example, the matrix

has the non-integer square root

as well as the integer square root matrix

.

The 2×2 identity matrix is another example.

A 2×2 matrix with two distinct nonzero eigenvalues has four square roots.

More generally, an n×n matrix with n distinct nonzero eigenvalues has $2^n$ square roots. Such a matrix, A, has a decomposition $A = VDV^{-1}$, where V is the matrix whose columns are eigenvectors of A and D is the diagonal matrix whose diagonal elements are the corresponding n eigenvalues $\lambda_i$. Thus the square roots of A are given by $VD^{1/2}V^{-1}$, where $D^{1/2}$ is any square root matrix of D, which, for distinct eigenvalues, must be diagonal with diagonal elements equal to square roots of the diagonal elements of D; since there are two possible choices for a square root of each diagonal element of D, there are $2^n$ choices for the matrix $D^{1/2}$.
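The sign-choice count can be illustrated on a small case. The following sketch assumes a sample 2×2 matrix with eigenvalues 81 and 9 and a hand-computed eigenvector matrix; the data and the names `V`, `V_INV`, and `all_square_roots` are chosen for illustration only.

```python
from fractions import Fraction
from itertools import product


def matmul(X, Y):
    """Multiply two square matrices stored as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


# Illustrative data (an assumption): A = V D V^{-1} with D = diag(81, 9).
A = [[33, 24], [48, 57]]
V = [[Fraction(1), Fraction(1)], [Fraction(2), Fraction(-1)]]
V_INV = [[Fraction(1, 3), Fraction(1, 3)], [Fraction(2, 3), Fraction(-1, 3)]]
SQRT_EIGENVALUES = [9, 3]  # positive square roots of the eigenvalues 81 and 9


def all_square_roots():
    """Return V * diag(+-9, +-3) * V^{-1} for every choice of signs."""
    n = len(SQRT_EIGENVALUES)
    roots = []
    for signs in product([1, -1], repeat=n):
        d_half = [[signs[i] * SQRT_EIGENVALUES[i] if i == j else 0
                   for j in range(n)] for i in range(n)]
        roots.append(matmul(matmul(V, d_half), V_INV))
    return roots


ROOTS = all_square_roots()
for root in ROOTS:
    print([[int(x) for x in row] for row in root])
```

With n = 2 the loop visits 2² = 4 sign patterns, and each resulting matrix squares to A.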

This also leads to a proof of the above observation, that a positive-definite matrix has precisely one positive-definite square root: a positive definite matrix has only positive eigenvalues, and each of these eigenvalues has only one positive square root; and since the eigenvalues of the square root matrix are the diagonal elements of D½, for the square root matrix to be itself positive definite necessitates the use of only the unique positive square roots of the original eigenvalues.

Just as with the real numbers, a real matrix may fail to have a real square root, but have a square root with complex-valued entries.

Some matrices have no square root. An example is the matrix

$$\begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}.$$
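A short argument shows why a matrix can fail to have a square root. It is sketched here for the standard nilpotent example $N$ (the choice of $N$ is an assumption about which matrix is meant):

```latex
\[
N = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \qquad N \neq 0, \qquad N^2 = 0 .
\]
Suppose $B^2 = N$ for some $2 \times 2$ matrix $B$. Then
\[
B^4 = N^2 = 0 ,
\]
so $B$ is nilpotent. By the Cayley--Hamilton theorem, a nilpotent
$2 \times 2$ matrix has characteristic polynomial $t^2$ and hence satisfies
\[
B^2 = 0 .
\]
This gives $N = B^2 = 0$, a contradiction, so no such $B$ exists
(with real or complex entries).
```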

In general, a complex matrix with positive real eigenvalues has a unique square root with positive eigenvalues called the principal square root. Moreover, the operation of taking the principal square root is continuous on this set of matrices. If the matrix has real entries, then the square root also has real entries. These properties are consequences of the holomorphic functional calculus applied to matrices. The existence and uniqueness of the principal square root can be deduced directly from the Jordan normal form (see below).[3] [4]

Computation methods

Explicit formulas

For a 2 × 2 matrix, there are explicit formulas that give up to four square roots, if the matrix has any roots.
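One commonly cited closed form, which follows from the Cayley–Hamilton theorem, is R = (A + sI)/t with s² = det A and t² = tr A + 2s. The text does not say which formulas it has in mind, so the sketch below is an assumption; it handles only real roots and skips degenerate cases such as t = 0, and the function name `real_square_roots_2x2` is hypothetical.

```python
import math


def real_square_roots_2x2(A):
    """Real square roots (A + s*I)/t of a real 2x2 matrix, where
    s**2 = det(A) and t**2 = trace(A) + 2*s."""
    (a, b), (c, d) = A
    det = a * d - b * c
    trace = a + d
    roots = []
    if det < 0:
        return roots  # s would be imaginary; out of scope for this sketch
    root_det = math.sqrt(det)
    # The set removes the duplicate sign choice when det == 0.
    for s in sorted({root_det, -root_det}, reverse=True):
        if trace + 2 * s <= 0:
            continue  # t would be zero or imaginary
        t_abs = math.sqrt(trace + 2 * s)
        for t in (t_abs, -t_abs):
            roots.append([[(a + s) / t, b / t], [c / t, (d + s) / t]])
    return roots


SAMPLE = [[33.0, 24.0], [48.0, 57.0]]  # illustrative matrix, det 729, trace 90
for root in real_square_roots_2x2(SAMPLE):
    print(root)
```

The two sign choices for s and the two for t account for the "up to four" roots; matrices such as scalar multiples of the identity have further roots that this formula does not produce.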

If D is a diagonal n × n matrix, one can obtain a square root by taking a diagonal matrix R, where each element along the diagonal is a square root of the corresponding element of D. If the diagonal elements of D are real and non-negative, and the square roots are taken with non-negative sign, the matrix R will be the principal root of D.

If a matrix is idempotent, one of its square roots is the matrix itself.

By diagonalization

An n × n matrix A is diagonalizable if there is a matrix V and a diagonal matrix D such that $A = VDV^{-1}$. This happens if and only if A has n eigenvectors which constitute a basis for $\mathbb{C}^n$. In this case, V can be chosen to be the matrix with the n eigenvectors as columns, and thus a square root of A is

$$R = VSV^{-1},$$

where S is any square root of D. Indeed,

$$\left(VSV^{-1}\right)^2 = VS\left(V^{-1}V\right)SV^{-1} = VS^2V^{-1} = VDV^{-1} = A.$$

For example, the matrix

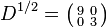

can be diagonalized as VDV−1, where

can be diagonalized as VDV−1, where

and

and  .

.

D has principal square root

,

,

giving the square root

.

.

When A is symmetric, the diagonalizing matrix V can be made an orthogonal matrix by suitably choosing the eigenvectors (see spectral theorem). Then the inverse of V is simply the transpose, so that

$$A^{1/2} = VD^{1/2}V^{\mathsf{T}}.$$

By Jordan decomposition

For non-diagonalizable matrices one can calculate the Jordan normal form followed by a series expansion, similar to the approach described in logarithm of a matrix.

To see that any complex matrix with positive eigenvalues has a square root of the same form, it suffices to check this for a Jordan block. Any such block has the form $\lambda(I + N)$ with $\lambda > 0$ and N nilpotent. If $(1 + z)^{1/2} = 1 + a_1 z + a_2 z^2 + \cdots$ is the binomial expansion for the square root (valid in $|z| < 1$), then as a formal power series its square equals $1 + z$. Substituting N for z, only finitely many terms will be non-zero and $S = \sqrt{\lambda}\,(I + a_1 N + a_2 N^2 + \cdots)$ gives a square root of the Jordan block with eigenvalue $\sqrt{\lambda}$.
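The truncated binomial series can be evaluated exactly for a small Jordan block. In this sketch the eigenvalue is passed as its square root so that all arithmetic stays rational; the function names are illustrative.

```python
from fractions import Fraction


def matmul(X, Y):
    """Multiply two square matrices stored as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def binomial_half_coefficients(count):
    """First `count` coefficients a_k of (1 + z)^(1/2) = sum a_k z^k."""
    coeffs = [Fraction(1)]
    for k in range(1, count):
        # a_k = a_{k-1} * (1/2 - (k - 1)) / k
        coeffs.append(coeffs[-1] * (Fraction(1, 2) - (k - 1)) / k)
    return coeffs


def jordan_block_sqrt(sqrt_lam, size):
    """Square root of the size x size Jordan block with eigenvalue
    lam = sqrt_lam**2 (lam on the diagonal, 1 on the superdiagonal)."""
    lam = Fraction(sqrt_lam) ** 2
    identity = [[Fraction(int(i == j)) for j in range(size)]
                for i in range(size)]
    # Write the block as lam * (I + N); N has 1/lam on the superdiagonal.
    N = [[1 / lam if j == i + 1 else Fraction(0) for j in range(size)]
         for i in range(size)]
    series = [[Fraction(0)] * size for _ in range(size)]
    power = identity  # current power of N, starting with N**0
    # N**size == 0, so `size` terms of the series are enough.
    for a_k in binomial_half_coefficients(size):
        series = [[series[i][j] + a_k * power[i][j] for j in range(size)]
                  for i in range(size)]
        power = matmul(power, N)
    return [[sqrt_lam * entry for entry in row] for row in series]


S = jordan_block_sqrt(2, 3)  # block with eigenvalue 4
assert matmul(S, S) == [[4, 1, 0], [0, 4, 1], [0, 0, 4]]
```

For the 3×3 block only the terms up to N² survive, which is the "finitely many terms" remark in concrete form.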

It suffices to check uniqueness for a Jordan block with λ = 1. The square root constructed above has the form S = I + L, where L is a polynomial in N without constant term. Any other square root T with positive eigenvalues has the form T = I + M with M nilpotent, commuting with N and hence L. But then $0 = S^2 - T^2 = 2(L - M)(I + (L + M)/2)$. Since L and M commute, the matrix L + M is nilpotent and I + (L + M)/2 is invertible with inverse given by a Neumann series. Hence L = M.

If A is a matrix with positive eigenvalues and minimal polynomial p(t), then the Jordan decomposition into generalized eigenspaces of A can be deduced from the partial fraction expansion of $p(t)^{-1}$. The corresponding projections onto the generalized eigenspaces are given by real polynomials in A. On each eigenspace, A has the form $\lambda(I + N)$ as above. The power series expression for the square root on the eigenspace shows that the principal square root of A has the form q(A), where q(t) is a polynomial with real coefficients.

By Denman–Beavers iteration

Another way to find the square root of an n × n matrix A is the Denman–Beavers square root iteration.[5]

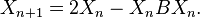

Let $Y_0 = A$ and $Z_0 = I$, where I is the n × n identity matrix. The iteration is defined by

$$\begin{aligned} Y_{k+1} &= \tfrac{1}{2}\left(Y_k + Z_k^{-1}\right), \\ Z_{k+1} &= \tfrac{1}{2}\left(Z_k + Y_k^{-1}\right). \end{aligned}$$

As this uses a pair of sequences of matrix inverses whose later elements change comparatively little, only the first elements have a high computational cost, since the remainder can be computed from earlier elements with only a few passes of a variant of Newton's method for computing inverses,

$$X_{n+1} = 2X_n - X_n B X_n.$$

With this, for later values of k one would set $X_0 = Z_{k-1}^{-1}$ and $B = Z_k$, and then use $Z_k^{-1} = X_n$ for some small n (perhaps just 1), and similarly for $Y_k^{-1}$.

Convergence is not guaranteed, even for matrices that do have square roots, but if the process converges, the matrix $Y_k$ converges quadratically to a square root $A^{1/2}$, while $Z_k$ converges to its inverse, $A^{-1/2}$.
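A minimal sketch of the coupled iteration, restricted to 2×2 real matrices so that the inverse has a closed form. It uses exact 2×2 inverses at every step rather than the Newton-type inverse updates mentioned above, and the fixed iteration count and sample matrix are assumptions made for illustration.

```python
def matmul(X, Y):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def inverse_2x2(M):
    """Closed-form inverse of a 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ZeroDivisionError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def average(X, Y):
    """Entrywise mean of two 2x2 matrices."""
    return [[(X[i][j] + Y[i][j]) / 2 for j in range(2)] for i in range(2)]


def denman_beavers(A, iterations=30):
    """Run Y <- (Y + Z^-1)/2, Z <- (Z + Y^-1)/2 from Y = A, Z = I."""
    Y = [row[:] for row in A]
    Z = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(iterations):
        Y_inv, Z_inv = inverse_2x2(Y), inverse_2x2(Z)
        # Both updates use the previous iterates, as in the definition.
        Y, Z = average(Y, Z_inv), average(Z, Y_inv)
    return Y, Z


# Illustrative matrix with eigenvalues 81 and 9.
Y, Z = denman_beavers([[33.0, 24.0], [48.0, 57.0]])
print(Y)  # approximates a square root of the input
print(Z)  # approximates the inverse of that square root
```

A production implementation would normally test for convergence instead of running a fixed number of steps.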

By the Babylonian method

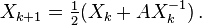

Yet another iterative method is obtained by taking the well-known formula of the Babylonian method for computing the square root of a real number, and applying it to matrices. Let $X_0 = I$, where I is the identity matrix. The iteration is defined by

$$X_{k+1} = \tfrac{1}{2}\left(X_k + A X_k^{-1}\right).$$

Again, convergence is not guaranteed, but if the process converges, the matrix $X_k$ converges quadratically to a square root $A^{1/2}$. Compared to Denman–Beavers iteration, an advantage of the Babylonian method is that only one matrix inverse need be computed per iteration step. On the other hand, as Denman–Beavers iteration uses a pair of sequences of matrix inverses whose later elements change comparatively little, only the first elements have a high computational cost since the remainder can be computed from earlier elements with only a few passes of a variant of Newton's method for computing inverses (see Denman–Beavers iteration above); of course, the same approach can be used to get the single sequence of inverses needed for the Babylonian method. However, unlike Denman–Beavers iteration, the Babylonian method is numerically unstable and more likely to fail to converge.[1]
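The Babylonian update can be sketched the same way for 2×2 real matrices. The sample matrix below is an assumption, chosen to be well conditioned so that the instability mentioned above does not show up; the fixed iteration count is also illustrative.

```python
def matmul(X, Y):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def inverse_2x2(M):
    """Closed-form inverse of a 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ZeroDivisionError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def babylonian_sqrt(A, iterations=20):
    """Run X <- (X + A X^-1)/2 starting from X = I."""
    X = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(iterations):
        AX_inv = matmul(A, inverse_2x2(X))  # one inverse per step
        X = [[(X[i][j] + AX_inv[i][j]) / 2 for j in range(2)]
             for i in range(2)]
    return X


# Illustrative symmetric matrix with eigenvalues 16 and 4.
X = babylonian_sqrt([[10.0, 6.0], [6.0, 10.0]])
print(X)
```

Only one inverse is formed per step, in contrast with the two inverses of the Denman–Beavers update.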

Square roots of positive operators

In linear algebra and operator theory, given a bounded positive semidefinite operator (a non-negative operator) T on a complex Hilbert space, B is a square root of T if T = B* B, where B* denotes the Hermitian adjoint of B. According to the spectral theorem, the continuous functional calculus can be applied to obtain an operator $T^{1/2}$ such that $T^{1/2}$ is itself positive and $(T^{1/2})^2 = T$. The operator $T^{1/2}$ is the unique non-negative square root of T.

A bounded non-negative operator on a complex Hilbert space is self-adjoint by definition. So $T = (T^{1/2})^* T^{1/2}$. Conversely, it is trivially true that every operator of the form B* B is non-negative. Therefore, an operator T is non-negative if and only if T = B* B for some B (equivalently, T = CC* for some C).

The Cholesky factorization provides another particular example of square root, which should not be confused with the unique non-negative square root.
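To make the distinction concrete, the sketch below computes a Cholesky factor of a small symmetric positive-definite matrix. The textbook algorithm and the sample matrix are assumptions chosen for illustration. The factor L satisfies L Lᵀ = T, so B = Lᵀ is a square root in the sense T = B* B, yet L is triangular rather than symmetric and therefore differs from the non-negative square root.

```python
import math


def cholesky(T):
    """Lower-triangular L with L L^T = T, for a real symmetric
    positive-definite matrix T stored as nested lists."""
    n = len(T)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(T[i][i] - partial)
            else:
                L[i][j] = (T[i][j] - partial) / L[j][j]
    return L


T = [[4.0, 2.0], [2.0, 5.0]]
L = cholesky(T)
print(L)  # lower triangular, so not symmetric
```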

Unitary freedom of square roots

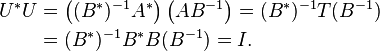

If T is a non-negative operator on a finite-dimensional Hilbert space, then all square roots of T are related by unitary transformations. More precisely, if T = A*A = B*B, then there exists a unitary U such that A = UB.

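Indeed, take $B = T^{1/2}$ to be the unique non-negative square root of T. If T is strictly positive, then B is invertible, and so $U = AB^{-1}$ is unitary:

$$U^*U = \left(\left(B^*\right)^{-1}A^*\right)\left(AB^{-1}\right) = \left(B^*\right)^{-1}T\,B^{-1} = \left(B^*\right)^{-1}B^*B\,B^{-1} = I.$$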

If T is non-negative without being strictly positive, then the inverse of B cannot be defined, but the Moore–Penrose pseudoinverse $B^{+}$ can be. In that case, the operator $AB^{+}$ is a partial isometry, that is, a unitary operator from the range of T to itself. This can then be extended to a unitary operator U on the whole space by setting it equal to the identity on the kernel of T. More generally, this is true on an infinite-dimensional Hilbert space if, in addition, T has closed range. In general, if A, B are closed and densely defined operators on a Hilbert space H, and A* A = B* B, then A = UB where U is a partial isometry.

Some applications

Square roots, and the unitary freedom of square roots, have applications throughout functional analysis and linear algebra.

Polar decomposition

If A is an invertible operator on a finite-dimensional Hilbert space, then there is a unique unitary operator U and positive operator P such that

$$A = UP;$$

this is the polar decomposition of A. The positive operator P is the unique positive square root of the positive operator $A^*A$, and U is defined by $U = AP^{-1}$.

If A is not invertible, then it still has a polar decomposition in which P is defined in the same way (and is unique). The unitary operator U is not unique. Rather it is possible to determine a "natural" unitary operator as follows: $AP^{+}$ is a unitary operator from the range of A to itself, which can be extended by the identity on the kernel of $A^*$. The resulting unitary operator U then yields the polar decomposition of A.
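For the invertible case, the construction P = (A*A)^{1/2}, U = AP⁻¹ can be sketched for real 2×2 matrices. The square root of the positive-definite matrix AᵀA is computed here with a closed form, (M + sI)/t with s = √det M and t = √(tr M + 2s), which is an assumption of this sketch rather than a method given in the text; all function names are illustrative.

```python
import math


def matmul(X, Y):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def transpose(X):
    return [list(column) for column in zip(*X)]


def inverse_2x2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def spd_sqrt_2x2(M):
    """Positive-definite square root of a symmetric positive-definite
    2x2 matrix via (M + s*I)/t, s = sqrt(det M), t = sqrt(tr M + 2s)."""
    (a, b), (c, d) = M
    s = math.sqrt(a * d - b * c)
    t = math.sqrt(a + d + 2 * s)
    return [[(a + s) / t, b / t], [c / t, (d + s) / t]]


def polar_decomposition_2x2(A):
    """Return (U, P) with A = U P for an invertible real 2x2 matrix A."""
    P = spd_sqrt_2x2(matmul(transpose(A), A))
    U = matmul(A, inverse_2x2(P))
    return U, P


A = [[3.0, 0.0], [4.0, 5.0]]  # illustrative invertible matrix
U, P = polar_decomposition_2x2(A)
print(U)  # orthogonal factor
print(P)  # symmetric positive-definite factor
```

In the real case the unitary factor is an orthogonal matrix, so UᵀU should be close to the identity.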

Kraus operators

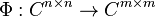

By Choi's result, a linear map

$$\Phi : \mathbb{C}^{n \times n} \to \mathbb{C}^{m \times m}$$

is completely positive if and only if it is of the form

$$\Phi(A) = \sum_{i=1}^{k} V_i A V_i^*,$$

where k ≤ nm. Let $\{E_{pq}\} \subset \mathbb{C}^{n \times n}$ be the $n^2$ elementary matrix units. The positive matrix

$$M_\Phi = \left(\Phi(E_{pq})\right)_{pq} \in \mathbb{C}^{nm \times nm}$$

is called the Choi matrix of Φ. The Kraus operators correspond to the, not necessarily square, square roots of $M_\Phi$: for any square root B of $M_\Phi$, one can obtain a family of Kraus operators $V_i$ by undoing the Vec operation to each column $b_i$ of B. Thus all sets of Kraus operators are related by partial isometries.

Mixed ensembles

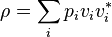

In quantum physics, a density matrix for an n-level quantum system is an n × n complex matrix ρ that is positive semidefinite with trace 1. If ρ can be expressed as

where ∑ pi = 1, the set

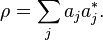

is said to be an ensemble that describes the mixed state ρ. Notice {vi} is not required to be orthogonal. Different ensembles describing the state ρ are related by unitary operators, via the square roots of ρ. For instance, suppose

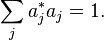

The trace 1 condition means

Let

and vi be the normalized ai. We see that

gives the mixed state ρ.
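The passage from a factorization of ρ into vectors a_i to an ensemble can be sketched numerically. The sample vectors below are an assumption, chosen so that their squared norms sum to 1; the function names are illustrative, and every a_i is assumed to be nonzero.

```python
import math


def ensemble_from_vectors(vectors):
    """Turn vectors a_i into pairs (p_i, v_i) with p_i = a_i^* a_i and
    v_i = a_i / |a_i|. Assumes every a_i is nonzero."""
    ensemble = []
    for a in vectors:
        p = sum(abs(x) ** 2 for x in a)
        norm = math.sqrt(p)
        ensemble.append((p, [x / norm for x in a]))
    return ensemble


def density_from_ensemble(ensemble):
    """Form rho = sum_i p_i v_i v_i^* entry by entry."""
    n = len(ensemble[0][1])
    rho = [[0.0] * n for _ in range(n)]
    for p, v in ensemble:
        for i in range(n):
            for j in range(n):
                rho[i][j] += p * v[i] * v[j].conjugate()
    return rho


# Illustrative vectors with |a_1|^2 + |a_2|^2 = 0.36 + 0.64 = 1.
A_VECTORS = [[0.6, 0.0], [0.48, 0.64]]
ENSEMBLE = ensemble_from_vectors(A_VECTORS)
RHO = density_from_ensemble(ENSEMBLE)
print([p for p, _ in ENSEMBLE])  # the weights p_i
print(RHO)                       # equals sum_j a_j a_j^* up to rounding
```

The two normalized vectors here are not orthogonal, which matches the remark that {v_i} need not be an orthogonal set.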

Unscented Kalman Filter

In the Unscented Kalman Filter (UKF),[6] the square root of the state error covariance matrix is required for the unscented transform which is the statistical linearization method used. A comparison between different matrix square root calculation methods within a UKF application of GPS/INS sensor fusion was presented, which indicated that the Cholesky decomposition method was best suited for UKF applications.[7]

See also

- Matrix function

- Holomorphic functional calculus

- Logarithm of a matrix

- Sylvester's formula

- 2 × 2 real matrices (section "Functions of 2 × 2 real matrices")

Notes

- [1] Higham, Nicholas J. (April 1986), "Newton's Method for the Matrix Square Root" (PDF), Mathematics of Computation 46 (174): 537–549, doi:10.2307/2007992, JSTOR 2007992

- [2] Mitchell, Douglas W. (November 2003), "Using Pythagorean triples to generate square roots of $I_2$", The Mathematical Gazette 87: 499–500

- [3] For analytic functions of matrices, see

- [4] For the holomorphic functional calculus, see:

- [5] Denman & Beavers 1976; Cheng et al. 2001

- [6] Julier, S.; Uhlmann, J. (1997), "A New Extension of the Kalman Filtering to Non Linear Systems", SPIE Proceedings Series 3068: 182–193

- [7] Rhudy, Matthew; Gu, Yu; Gross, Jason; Napolitano, Marcello R. (December 2011), "Evaluation of Matrix Square Root Operations for UKF within a UAV-Based GPS/INS Sensor Fusion Application", International Journal of Navigation and Observation 2011: 1, doi:10.1155/2011/416828

References

- Bourbaki, Nicolas (2007), Théories spectrales, chapitres 1 et 2, Springer, ISBN 3540353313

- Conway, John B. (1990), A Course in Functional Analysis, Graduate Texts in Mathematics 96, Springer, pp. 199–205, ISBN 0387972455, Chapter IV, Riesz functional calculus

- Cheng, Sheung Hun; Higham, Nicholas J.; Kenney, Charles S.; Laub, Alan J. (2001), "Approximating the Logarithm of a Matrix to Specified Accuracy" (PDF), SIAM Journal on Matrix Analysis and Applications 22 (4): 1112–1125, doi:10.1137/S0895479899364015

- Burleson, Donald R., Computing the square root of a Markov matrix: eigenvalues and the Taylor series

- Denman, Eugene D.; Beavers, Alex N. (1976), "The matrix sign function and computations in systems", Applied Mathematics and Computation 2 (1): 63–94, doi:10.1016/0096-3003(76)90020-5

- Higham, Nicholas (2008), Functions of Matrices. Theory and Computation, SIAM, ISBN 978-0-89871-646-7

- Horn, Roger A.; Johnson, Charles R. (1994), Topics in Matrix Analysis, Cambridge University Press, ISBN 0521467136

- Rudin, Walter (1991), Functional analysis, International series in pure and applied mathematics (2nd ed.), McGraw-Hill, ISBN 0070542368