Smoothing spline

The smoothing spline is a method of fitting a smooth curve to a set of noisy observations using a spline function.

Definition

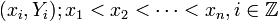

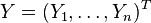

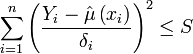

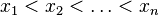

Let  be a sequence of observations, modeled by the relation

be a sequence of observations, modeled by the relation  . The smoothing spline estimate

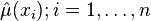

. The smoothing spline estimate  of the function

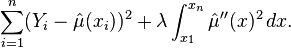

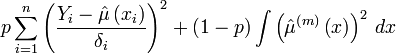

of the function  is defined to be the minimizer (over the class of twice differentiable functions) of[1]

is defined to be the minimizer (over the class of twice differentiable functions) of[1]

Remarks:

-

is a smoothing parameter, controlling the trade-off between fidelity to the data and roughness of the function estimate.

is a smoothing parameter, controlling the trade-off between fidelity to the data and roughness of the function estimate. - The integral is evaluated over the range of the

.

. - As

(no smoothing), the smoothing spline converges to the interpolating spline.

(no smoothing), the smoothing spline converges to the interpolating spline. - As

(infinite smoothing), the roughness penalty becomes paramount and the estimate converges to a linear least squares estimate.

(infinite smoothing), the roughness penalty becomes paramount and the estimate converges to a linear least squares estimate. - The roughness penalty based on the second derivative is the most common in modern statistics literature, although the method can easily be adapted to penalties based on other derivatives.

- In early literature, with equally-spaced

, second or third-order differences were used in the penalty, rather than derivatives.

, second or third-order differences were used in the penalty, rather than derivatives. - When the sum-of-squares term is replaced by a log-likelihood, the resulting estimate is termed penalized likelihood. The smoothing spline is the special case of penalized likelihood resulting from a Gaussian likelihood.

Derivation of the smoothing spline

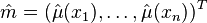

It is useful to think of fitting a smoothing spline in two steps:

- First, derive the values

.

. - From these values, derive

for all x.

for all x.

Now, treat the second step first.

Given the vector  of fitted values, the sum-of-squares part of the spline criterion is fixed. It remains only to minimize

of fitted values, the sum-of-squares part of the spline criterion is fixed. It remains only to minimize  , and the minimizer is a natural cubic spline that interpolates the points

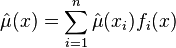

, and the minimizer is a natural cubic spline that interpolates the points  . This interpolating spline is a linear operator, and can be written in the form

. This interpolating spline is a linear operator, and can be written in the form

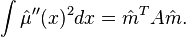

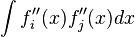

where  are a set of spline basis functions. As a result, the roughness penalty has the form

are a set of spline basis functions. As a result, the roughness penalty has the form

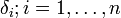

where the elements of A are  . The basis functions, and hence the matrix A, depend on the configuration of the predictor variables

. The basis functions, and hence the matrix A, depend on the configuration of the predictor variables  , but not on the responses

, but not on the responses  or

or  .

.

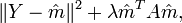

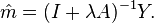

Now back to the first step. The penalized sum-of-squares can be written as

where  .

Minimizing over

.

Minimizing over  gives

gives

De Boor's approach

De Boor's approach exploits the same idea, of finding a balance between having a smooth curve and being close to the given data.[2]

where  is a parameter called smooth factor and belongs to the interval

is a parameter called smooth factor and belongs to the interval ![[0,1]](../I/m/ccfcd347d0bf65dc77afe01a3306a96b.png) , and

, and  are the quantities controlling the extent of smoothing (they represent the weight

are the quantities controlling the extent of smoothing (they represent the weight  of each point

of each point  ). In practice, since cubic splines are mostly used,

). In practice, since cubic splines are mostly used,  is usually

is usually  . The solution for

. The solution for  was proposed by Reinsch in 1967.[3] For

was proposed by Reinsch in 1967.[3] For  , when

, when  approaches

approaches  ,

,  converges to the "natural" spline interpolant to the given data.[2] As

converges to the "natural" spline interpolant to the given data.[2] As  approaches

approaches  ,

,  converges to a straight line (the smoothest curve). Since finding a suitable value of

converges to a straight line (the smoothest curve). Since finding a suitable value of  is a task of trial and error, a redundant constant

is a task of trial and error, a redundant constant  was introduced for convenience.[3]

was introduced for convenience.[3]

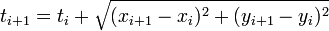

is used to numerically determine the value of

is used to numerically determine the value of  so that the function

so that the function  meets the following condition:

meets the following condition:

The algorithm described by de Boor starts with  and increases

and increases  until the condition is met.[2] If

until the condition is met.[2] If  is an estimation of the standard deviation for

is an estimation of the standard deviation for  , the constant

, the constant  is recommended to be chosen in the interval

is recommended to be chosen in the interval ![\left [ n-\sqrt{2n},n+\sqrt{2n} \right ]](../I/m/18a2287adf423fea7bfa55f63a0d56f1.png) . Having

. Having  means the solution is the "natural" spline interpolant.[3] Increasing

means the solution is the "natural" spline interpolant.[3] Increasing  means we obtain a smoother curve by getting farther from the given data.

means we obtain a smoother curve by getting farther from the given data.

Creating a multidimensional spline

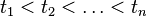

Given the constraint from the definition formula  we can conclude that the algorithm doesn't work for all sets of data. If we plan to use this algorithm for random points in a multidimensional space we need to find a solution to give as input to the algorithm sets of data where these constraints are met. A solution for this is to introduce a parameter so that the input data would be represented as single-valued functions depending on that parameter; after this the smoothing will be performed for each function. In a bidimensional space a solution would be to parametrize

we can conclude that the algorithm doesn't work for all sets of data. If we plan to use this algorithm for random points in a multidimensional space we need to find a solution to give as input to the algorithm sets of data where these constraints are met. A solution for this is to introduce a parameter so that the input data would be represented as single-valued functions depending on that parameter; after this the smoothing will be performed for each function. In a bidimensional space a solution would be to parametrize  and

and  so that they would become

so that they would become  and

and  where

where  . A convenient solution for

. A convenient solution for  is the cumulating distance

is the cumulating distance  where

where  .[4][5]

.[4][5]

A more detailed analysis on parametrization is done by E.T.Y Lee.[6]

Related methods

Smoothing splines are related to, but distinct from:

- Regression splines. In this method, the data is fitted to a set of spline basis functions with a reduced set of knots, typically by least squares. No roughness penalty is used. (See also multivariate adaptive regression splines.)

- Penalized Splines. This combines the reduced knots of regression splines, with the roughness penalty of smoothing splines.[7]

- Elastic maps method for manifold learning. This method combines the least squares penalty for approximation error with the bending and stretching penalty of the approximating manifold and uses the coarse discretization of the optimization problem; see thin plate splines.

Source code

Source code for spline smoothing can be found in the examples from Carl de Boor's book A Practical Guide to Splines. The examples are in Fortran programming language. The updated sources are available also on Carl de Boor's official site .

Further reading

- Wahba, G. (1990). Spline Models for Observational Data. SIAM, Philadelphia.

- Green, P. J. and Silverman, B. W. (1994). Nonparametric Regression and Generalized Linear Models. CRC Press.

- De Boor, C. (2001). A Practical Guide to Splines (Revised Edition). Springer.

References

- ↑ Hastie, T. J.; Tibshirani, R. J. (1990). Generalized Additive Models. Chapman and Hall. ISBN 0-412-34390-8.

- 1 2 3 De Boor, C. (2001). A Practical Guide to Splines (Revised Edition). Springer. pp. 207–214. ISBN 0-387-90356-9.

- 1 2 3 Reinsch, Christian H. "Smoothing by Spline Functions" (PDF). Retrieved 11 March 2011.

- ↑ Robert E. Smith Jr., Joseph M Price and Lona M. Howser. "A Smoothing Algorithm Using Cubic Spline Functions" (PDF). Retrieved 31 May 2011.

- ↑ N. Y. Graham. "Smoothing With Periodic Cubic Splines" (PDF). Retrieved 31 May 2011.

- ↑ E.T.Y. Lee. "Choosing nodes in parametric curve interpolation" (PDF). Retrieved 28 June 2011.

- ↑ Ruppert, David; Wand, M. P.; Carroll, R. J. (2003). Semiparametric Regression. Cambridge University Press. ISBN 0-521-78050-0.