Speedup

In computer architecture, speedup is a metric for the improvement in performance between two systems processing the same problem. More technically, it is the improvement in speed of execution of a task executed on two similar architectures with different resources. The notion of speedup was established by Amdahl's law, which was particularly focused on parallel processing. However, speedup can be used more generally to show the effect on performance after any resource enhancement.

Definitions

Speedup can be defined for two different types of quantities: latency and throughput.[1]

Latency of an architecture is the reciprocal of the execution speed of a task:

$$L = \frac{1}{v} = \frac{T}{W},$$

where

- v is the execution speed of the task;

- T is the execution time of the task;

- W is the execution workload of the task.

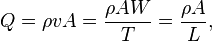

Throughput of an architecture is the execution rate of a task:

$$Q = \rho v A = \frac{\rho A W}{T} = \frac{\rho A}{L},$$

where

- ρ is the execution density (e.g., the number of stages in an instruction pipeline for a pipelined architecture);

- A is the execution capacity (e.g., the number of processors for a parallel architecture).

Latency is often measured in seconds per unit of execution workload. Throughput is often measured in units of execution workload per second. Another frequent unit of throughput is the instruction per cycle (IPC). Its reciprocal, the cycle per instruction (CPI), is another frequent unit of latency.
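The two quantities can be sketched in a few lines of Python. This is a minimal illustration, assuming the relations L = T / W and Q = ρA / L implied by the symbol definitions above; the function and parameter names are hypothetical and chosen only for this example.

```python
def latency(exec_time: float, workload: float) -> float:
    """Latency L = T / W, e.g. seconds per unit of execution workload."""
    return exec_time / workload


def throughput(density: float, capacity: float,
               exec_time: float, workload: float) -> float:
    """Throughput Q = rho * A / L, e.g. units of workload per second."""
    return density * capacity / latency(exec_time, workload)


# Illustrative numbers only: 4 units of work finishing in 2 seconds.
L = latency(exec_time=2.0, workload=4.0)
# Same task on 4 processors (A = 4) with no pipelining (rho = 1).
Q = throughput(density=1.0, capacity=4.0, exec_time=2.0, workload=4.0)
print(L, Q)
```

With these made-up inputs the latency is 0.5 seconds per unit of workload, and the throughput is the reciprocal of that latency scaled by ρA.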

Speedup is dimensionless and defined differently for each type of quantity so that it is a consistent metric.

Speedup in latency

Speedup in latency is defined by the following formula:[2]

$$S_{\text{latency}} = \frac{L_1}{L_2},$$

where

- $S_{\text{latency}}$ is the speedup in latency of architecture 2 with respect to architecture 1;

- $L_1$ is the latency of architecture 1;

- $L_2$ is the latency of architecture 2.

Speedup in latency can be predicted from Amdahl's law or Gustafson's law.
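As a rough sketch of such predictions, the snippet below uses the commonly stated forms of the two laws: Amdahl's law as S = 1 / ((1 − p) + p / s) for a fixed workload, and Gustafson's law as S = 1 − p + sp for a workload that scales with the resources. Here p (the fraction of the task that benefits from the improvement) is a symbol introduced for this example, and the function names are hypothetical.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Predicted speedup in latency at fixed workload.

    p: fraction of the original execution time spent in the improved part.
    s: speedup of that part.
    """
    return 1.0 / ((1.0 - p) + p / s)


def gustafson_speedup(p: float, s: float) -> float:
    """Predicted speedup in latency when the workload scales with resources.

    p: fraction of the workload that benefits from the improvement.
    s: speedup of that part.
    """
    return 1.0 - p + s * p


# Illustrative case: half of the task benefits, and that half runs twice as fast.
print(amdahl_speedup(0.5, 2.0))
print(gustafson_speedup(0.5, 2.0))
```

Under Amdahl's law the prediction can never exceed 1 / (1 − p), however large s becomes, which is why the part that does not benefit dominates at scale.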

Speedup in throughput

Speedup in throughput is defined by the following formula:[3]

$$S_{\text{throughput}} = \frac{Q_2}{Q_1},$$

where

- $S_{\text{throughput}}$ is the speedup in throughput of architecture 2 with respect to architecture 1;

- $Q_1$ is the throughput of architecture 1;

- $Q_2$ is the throughput of architecture 2.
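The two speedups are related. Assuming throughput is Q = ρA / L, as suggested by the definitions of execution density ρ and execution capacity A, the speedup in throughput equals the speedup in latency multiplied by the ratio ρ₂A₂ / (ρ₁A₁). The sketch below checks this numerically; all names and numbers are illustrative.

```python
def speedup_latency(l1: float, l2: float) -> float:
    """Speedup of architecture 2 over architecture 1: old latency / new latency."""
    return l1 / l2


def speedup_throughput(q1: float, q2: float) -> float:
    """Speedup of architecture 2 over architecture 1: new throughput / old throughput."""
    return q2 / q1


# Hypothetical architectures: (density rho, capacity A, latency L).
rho1, a1, l1 = 1.0, 1.0, 2.0   # single, non-pipelined processor
rho2, a2, l2 = 1.0, 4.0, 1.0   # four processors, each with half the latency

q1 = rho1 * a1 / l1            # assumed relation Q = rho * A / L
q2 = rho2 * a2 / l2

s_lat = speedup_latency(l1, l2)
s_thr = speedup_throughput(q1, q2)
print(s_lat, s_thr, (rho2 * a2) / (rho1 * a1) * s_lat)
```

Note the inverted ratios: latency improves when it goes down, throughput when it goes up, so both functions return a value greater than 1 for an improvement.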

Examples

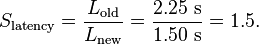

Using execution times

We are testing the effectiveness of a branch predictor on the execution of a program. First, we execute the program with the standard branch predictor on the processor, which yields an execution time of 2.25 seconds. Next, we execute the program with our modified (and hopefully improved) branch predictor on the same processor, which produces an execution time of 1.50 seconds. In both cases the execution workload is the same. Using our speedup formula, we know

$$S_{\text{latency}} = \frac{L_{\text{old}}}{L_{\text{new}}} = \frac{2.25~\text{s}}{1.50~\text{s}} = 1.5.$$

Our new branch predictor has provided a 1.5x speedup over the original.

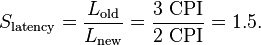

Using cycles per instruction and instructions per cycle

We can also measure speedup in cycles per instruction (CPI), which is a latency. First, we execute the program with the standard branch predictor, which yields a CPI of 3. Next, we execute the program with our modified branch predictor, which yields a CPI of 2. In both cases the execution workload is the same, and neither architecture is pipelined or parallel. Using the speedup formula gives

$$S_{\text{latency}} = \frac{\text{CPI}_{\text{old}}}{\text{CPI}_{\text{new}}} = \frac{3}{2} = 1.5.$$

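We can also measure speedup in instructions per cycle (IPC), which is a throughput and the inverse of CPI. Using the speedup formula gives

$$S_{\text{throughput}} = \frac{\text{IPC}_{\text{new}}}{\text{IPC}_{\text{old}}} = \frac{1/2}{1/3} = 1.5.$$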

We achieve the same 1.5x speedup, though we measured different quantities.
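The arithmetic in these examples can be reproduced with a short script. This is only a sketch: the helper names are hypothetical, and exact rational arithmetic from Python's standard `fractions` module is used so that the reciprocal of a CPI of 3 does not introduce rounding error.

```python
from fractions import Fraction


def speedup_latency(old, new):
    """Latency-type quantity (execution time, CPI): old / new."""
    return old / new


def speedup_throughput(old, new):
    """Throughput-type quantity (IPC): new / old."""
    return new / old


# Execution times from the branch predictor example, in seconds.
time_speedup = speedup_latency(Fraction("2.25"), Fraction("1.50"))

# CPI values from the example; IPC is the reciprocal of CPI.
cpi_old, cpi_new = Fraction(3), Fraction(2)
cpi_speedup = speedup_latency(cpi_old, cpi_new)
ipc_speedup = speedup_throughput(1 / cpi_old, 1 / cpi_new)

print(float(time_speedup), float(cpi_speedup), float(ipc_speedup))  # 1.5 1.5 1.5
```

All three results agree because the workload is identical in both runs and neither ρ nor A changes between them.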

Additional details

Let S be the speedup of execution of a task and s the speedup of execution of the part of the task that benefits from the improvement of the resources of an architecture. Linear speedup or ideal speedup is obtained when S = s. When running a task with linear speedup, doubling the local speedup doubles the overall speedup. As this is ideal, it is considered very good scalability.

Efficiency is a metric of the utilization of the resources of the improved system, defined as

$$\eta = \frac{S}{s}.$$

Its value is typically between 0 and 1. Programs with linear speedup and programs running on a single processor have an efficiency of 1, while many difficult-to-parallelize programs have efficiency such as 1/ln(s) that approaches 0 as the number of processors A = s increases.
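To illustrate how efficiency can decay, the sketch below assumes efficiency is the ratio η = S / s and models a hypothetical hard-to-parallelize program whose overall speedup grows only as S = s / ln(s), which gives the efficiency 1 / ln(s) mentioned above. The function name and the chosen processor counts are illustrative.

```python
import math


def efficiency(overall_speedup: float, local_speedup: float) -> float:
    """Efficiency eta = S / s (assumed definition for this sketch)."""
    return overall_speedup / local_speedup


# Linear speedup: S == s, so efficiency is 1.
print(efficiency(8.0, 8.0))

# Hypothetical program with S = s / ln(s): efficiency shrinks as s grows.
for s in (4, 16, 64, 256):
    overall = s / math.log(s)
    print(s, round(efficiency(overall, s), 3))
```

The printed values fall steadily as s increases, even though the overall speedup itself keeps rising.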

In engineering contexts, efficiency curves are more often used for graphs than speedup curves, since

- all of the area in the graph is useful (whereas in speedup curves half of the space is wasted);

- it is easy to see how well the improvement of the system is working;

- there is no need to plot a "perfect speedup" curve.

In marketing contexts, speedup curves are more often used, largely because they go up and to the right and thus appear better to the less-informed.

Super-linear speedup

Sometimes a speedup of more than A when using A processors is observed in parallel computing, which is called super-linear speedup. Super-linear speedup rarely happens and often confuses beginners, who believe the theoretical maximum speedup should be A when A processors are used.

One possible reason for super-linear speedup in low-level computations is the cache effect resulting from the different memory hierarchies of a modern computer: in parallel computing, not only do the numbers of processors change, but so does the size of accumulated caches from different processors. With the larger accumulated cache size, more or even all of the working set can fit into caches and the memory access time reduces dramatically, which causes the extra speedup in addition to that from the actual computation.[4]

An analogous situation occurs when searching large datasets, such as the genomic data searched by BLAST implementations. There, the accumulated RAM from the nodes in a cluster allows the dataset to move from disk into RAM, drastically reducing the time required by, for example, mpiBLAST to search it.[5]

Super-linear speedups can also occur when performing backtracking in parallel: an exception in one thread can cause several other threads to backtrack early, before they reach the exception themselves.

Super-linear speedups can also occur in parallel implementations of branch-and-bound for optimization:[6] the processing of one node by one processor may affect the work other processors need to do for the other nodes.

References

- ↑ Martin, Milo. "Performance and Benchmarking" (PDF). Retrieved 5 June 2014.

- ↑ Hennessy, John L.; Patterson, David A. (2012). Computer Architecture: A Quantitative Approach. Waltham, MA: Morgan Kaufmann. pp. 46–47. ISBN 978-0-12-383872-8.

- ↑ Baer, Jean-Loup (2010). Microprocessor Architecture: From Simple Pipelines to Chip Multiprocessors. New York: Cambridge University Press. p. 10. ISBN 978-0-521-76992-1.

- ↑ Benzi, John; Damodaran, M. (2007). "Parallel Three Dimensional Direct Simulation Monte Carlo for Simulating Micro Flows". Parallel Computational Fluid Dynamics 2007: Implementations and Experiences on Large Scale and Grid Computing. Parallel Computational Fluid Dynamics. Springer. p. 95. Retrieved 2013-03-21.

- ↑ http://people.cs.vt.edu/~feng/presentations/030903-ParCo.pdf

- ↑ http://mat.tepper.cmu.edu/blog/?p=534#comment-3029
