Space hierarchy theorem

In computational complexity theory, the space hierarchy theorems are separation results that show that both deterministic and nondeterministic machines can solve more problems in (asymptotically) more space, subject to certain conditions. For example, a deterministic Turing machine can solve more decision problems in space n log n than in space n. The somewhat weaker analogous theorems for time are the time hierarchy theorems.

The foundation for the hierarchy theorems lies in the intuition that with either more time or more space comes the ability to compute more functions (or decide more languages). The hierarchy theorems are used to demonstrate that the time and space complexity classes form a hierarchy where classes with tighter bounds contain fewer languages than those with more relaxed bounds. Here we define and prove the space hierarchy theorem.

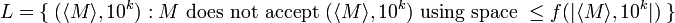

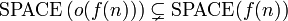

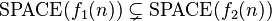

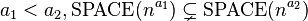

The space hierarchy theorems rely on the concept of space-constructible functions. The deterministic and nondeterministic space hierarchy theorems state that for all space-constructible functions f(n),

$$\mathsf{SPACE}(o(f(n))) \subsetneq \mathsf{SPACE}(f(n)),$$

where SPACE stands for either DSPACE or NSPACE, and o refers to the little o notation.
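As a small worked instance, take f(n) = n log n, chosen here only to match the example in the introduction. The little-o condition can be checked directly, and the separation of linear space from n log n space follows:

$$\lim_{n\to\infty} \frac{n}{n \log n} = \lim_{n\to\infty} \frac{1}{\log n} = 0 \;\Longrightarrow\; n \in o(n \log n) \;\Longrightarrow\; \mathsf{SPACE}(n) \subseteq \mathsf{SPACE}(o(n \log n)) \subsetneq \mathsf{SPACE}(n \log n).$$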

Statement

Formally, a function $f:\mathbb{N} \to \mathbb{N}$ is space-constructible if $f(n) \ge \log n$ and there exists a Turing machine which computes the function $f(n)$ in space $O(f(n))$ when starting with an input $1^n$, where $1^n$ represents a string of n consecutive 1s. Most of the common functions that we work with are space-constructible, including polynomials, exponentials, and logarithms.
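To make the definition concrete, the following is a minimal sketch of why a logarithmic function is space-constructible: it reads the unary input 1^n once and keeps only a binary counter, so the work storage grows like log n. The function name `log_from_unary` and the list-based counter are illustrative assumptions, not part of the formal definition, and Python lists stand in for tape cells.

```python
from typing import Tuple


def log_from_unary(unary: str) -> Tuple[int, int]:
    """Return (bit length of n, peak counter cells used) for the input 1^n."""
    counter = []  # little-endian binary counter: the only work storage
    peak = 0
    for symbol in unary:
        if symbol != "1":
            raise ValueError("input must be a unary string of 1s")
        # Increment the counter: flip trailing 1s to 0, then set the next bit.
        i = 0
        while i < len(counter) and counter[i] == 1:
            counter[i] = 0
            i += 1
        if i == len(counter):
            counter.append(1)
        else:
            counter[i] = 1
        peak = max(peak, len(counter))
    # The number of bits in the counter is the bit length of n.
    return len(counter), peak


if __name__ == "__main__":
    value, cells = log_from_unary("1" * 1000)
    print(value, cells)
```

The counter never holds more cells than the value it finally reports, which mirrors the requirement that f(n) be computed within O(f(n)) space.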

For every space-constructible function $f:\mathbb{N} \to \mathbb{N}$, there exists a language L that is decidable in space $O(f(n))$ but not in space $o(f(n))$.

Proof

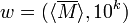

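The goal here is to define a language that can be decided in space $O(f(n))$ but not space $o(f(n))$. Here we define the language L:

$$L = \{\, (\langle M \rangle, 10^k) : M \text{ uses space} \le f(|\langle M \rangle, 10^k|) \text{ and time} \le 2^{f(|\langle M \rangle, 10^k|)} \text{ and } M \text{ does not accept } (\langle M \rangle, 10^k) \,\}$$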

Now, for any machine M that decides a language in space $o(f(n))$, L will differ in at least one spot from the language of M, namely at the value of $(\langle M \rangle, 10^k)$ for some sufficiently large k. The algorithm for deciding the language L is as follows:

1. On an input x, compute $f(|x|)$ using space-constructibility, and mark off $f(|x|)$ cells of tape. Whenever an attempt is made to use more than $f(|x|)$ cells, reject.

2. If x is not of the form $\langle M \rangle, 10^k$ for some TM M, reject.

3. Simulate M on input x for at most $2^{f(|x|)}$ steps (using $f(|x|)$ space). If the simulation tries to use more than $f(|x|)$ space or more than $2^{f(|x|)}$ operations, then reject.

4. If M accepted x during this simulation, then reject; otherwise, accept.
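The steps of the deciding algorithm can be sketched in Python under some loudly stated simplifications. The machine is a toy single-tape Turing machine given directly as a transition table, so the parsing of the encoding in step 2 is skipped. Space is counted as the number of distinct cells visited, which includes input cells and is therefore only meaningful for bounds of at least n. The names `simulate` and `diagonal_decider` are hypothetical and not taken from any source.

```python
from typing import Callable, Dict, Tuple

BLANK = "_"
# (state, symbol) -> (new state, written symbol, head move of -1, 0 or +1)
Delta = Dict[Tuple[str, str], Tuple[str, str, int]]


def simulate(delta: Delta, x: str, space_bound: int, step_bound: int) -> str:
    """Run the toy machine on x; return 'accept', 'reject' or 'exceeded'."""
    tape = dict(enumerate(x))
    state, head, steps = "q0", 0, 0
    visited = set()
    while state not in ("accept", "reject"):
        visited.add(head)
        # Abort as soon as either resource bound is violated.
        if len(visited) > space_bound or steps == step_bound:
            return "exceeded"
        symbol = tape.get(head, BLANK)
        if (state, symbol) not in delta:
            return "reject"  # a missing transition is treated as rejection
        state, written, move = delta[(state, symbol)]
        tape[head] = written
        head = max(0, head + move)
        steps += 1
    return state


def diagonal_decider(delta: Delta, x: str, f: Callable[[int], int]) -> bool:
    """Accept x exactly when the machine does not accept x within the bounds."""
    bound = f(len(x))                                # step 1: mark off f(|x|) cells
    outcome = simulate(delta, x, bound, 2 ** bound)  # step 3: bounded simulation
    return outcome != "accept"                       # step 4: flip the answer


if __name__ == "__main__":
    accept_all = {("q0", s): ("accept", s, 0) for s in "01_"}
    loop_forever = {("q0", s): ("q0", s, 0) for s in "01_"}
    print(diagonal_decider(accept_all, "101", lambda n: n))
    print(diagonal_decider(loop_forever, "101", lambda n: n))
```

In this sketch a machine that loops forever inside the space bound is cut off by the step counter, and the decider then accepts, which is the behaviour the step limit is meant to guarantee.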

Note on step 3: Execution is limited to $2^{f(|x|)}$ steps in order to avoid the case where M does not halt on the input x. That is, the case where M consumes space of only $O(f(|x|))$ as required, but runs for infinite time.
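The choice of step bound can be motivated by counting configurations. As a rough sketch, assume a single-tape machine with state set $Q$ and tape alphabet $\Gamma$ that stays within $s \ge 1$ cells (these symbols are introduced here for illustration, and a separate read-only input head is ignored). A configuration is a state, a head position, and the tape contents, so

$$\#\text{configurations} \;\le\; |Q| \cdot s \cdot |\Gamma|^{s} \;=\; 2^{O(s)}.$$

A deterministic machine that runs for more steps than this has repeated a configuration and therefore never halts. For $s = o(f(n))$ the count is eventually smaller than $2^{f(n)}$, so on sufficiently long inputs the cutoff only affects machines that would not have halted anyway.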

The above proof holds for deterministic space (DSPACE), but a change is needed for nondeterministic space (NSPACE). The crucial point is that while on a deterministic TM we may easily invert acceptance and rejection (crucial for step 4), this is not possible on a nondeterministic machine.

For the nondeterministic case we first modify step 4 to:

- If M accepted x during this simulation, then accept; otherwise, reject.

We will now prove by contradiction that L cannot be decided by a TM using $o(f(n))$ cells.

Assume that L can be decided by some TM M using $o(f(n))$ cells. By the Immerman–Szelepcsényi theorem, $\overline{L}$ can then also be decided by a TM (which we will call $\overline{M}$) using $o(f(n))$ cells.

Here lies the contradiction, therefore our assumption must be false:

- If $w = (\langle \overline{M} \rangle, 10^k)$ (for some large enough k) is not in $\overline{L}$ then M will accept it, therefore $\overline{M}$ rejects w, therefore w is in $\overline{L}$ (contradiction).

- If $w = (\langle \overline{M} \rangle, 10^k)$ (for some large enough k) is in $\overline{L}$ then M will reject it, therefore $\overline{M}$ accepts w, therefore w is not in $\overline{L}$ (contradiction).

Comparison and improvements

The space hierarchy theorem is stronger than the analogous time hierarchy theorems in several ways:

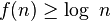

- It only requires s(n) to be at least log n instead of at least n.

- It can separate classes with any asymptotic difference, whereas the time hierarchy theorem requires them to be separated by a logarithmic factor.

- It only requires the function to be space-constructible, not time-constructible.
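For comparison, the two deterministic statements can be placed side by side. The time bound below is the standard form for multitape Turing machines with a time-constructible f; it is quoted as background and is not derived in this article:

$$\mathsf{DSPACE}(o(f(n))) \subsetneq \mathsf{DSPACE}(f(n)), \qquad \mathsf{DTIME}\!\left(o\!\left(\frac{f(n)}{\log f(n)}\right)\right) \subsetneq \mathsf{DTIME}(f(n)).$$

The extra $\log f(n)$ factor on the time side is the logarithmic gap mentioned in the second item of the list.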

It seems to be easier to separate classes in space than in time. Indeed, whereas the time hierarchy theorem has seen little remarkable improvement since its inception, the nondeterministic space hierarchy theorem has seen at least one important improvement by Viliam Geffert in his 2003 paper "Space hierarchy theorem revised". This paper made several generalizations of the theorem:

- It relaxes the space-constructibility requirement. Instead of merely separating the union classes DSPACE(O(s(n))) and DSPACE(o(s(n))), it separates DSPACE(f(n)) from DSPACE(g(n)) where f(n) is an arbitrary O(s(n)) function and g(n) is a computable o(s(n)) function. These functions need not be space-constructible or even monotone increasing.

- It identifies a unary language, or tally language, which is in one class but not the other. In the original theorem, the separating language was arbitrary.

- It does not require s(n) to be at least log n; it can be any nondeterministically fully space-constructible function.

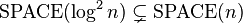

Refinement of space hierarchy

If space is measured as the number of cells used regardless of alphabet size, then SPACE(f(n)) = SPACE(O(f(n))) because one can achieve any linear compression by switching to a larger alphabet. However, by measuring space in bits, a much sharper separation is achievable for deterministic space. Instead of being defined up to a multiplicative constant, space is now defined up to an additive constant. However, because any constant amount of external space can be saved by storing the contents into the internal state, we still have SPACE(f(n)) = SPACE(f(n)+O(1)).
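The linear compression mentioned above can be illustrated with a small sketch: k binary cells are packed into one cell over an alphabet of 2^k symbols, so the cell count shrinks by a factor of about k while the information content is unchanged. The helper names `compress` and `decompress`, and the choice to pad with 0 as a stand-in for blank cells, are assumptions made for this example.

```python
from typing import List


def compress(tape: str, k: int) -> List[int]:
    """Pack every k binary cells into one cell holding a symbol in range(2**k)."""
    padded = tape + "0" * (-len(tape) % k)  # pad to a multiple of k
    return [int(padded[i:i + k], 2) for i in range(0, len(padded), k)]


def decompress(cells: List[int], k: int, length: int) -> str:
    """Recover the first `length` binary cells from the packed tape."""
    bits = "".join(format(cell, "0{}b".format(k)) for cell in cells)
    return bits[:length]


if __name__ == "__main__":
    tape = "1011001110"
    packed = compress(tape, 4)
    print(len(tape), len(packed))
    print(decompress(packed, 4, len(tape)) == tape)
```

Counting cells treats the 10-cell tape and its 3-cell packed form as different amounts of space, whereas counting bits does not, which is why the bit measure supports a sharper hierarchy.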

Assume that f is space-constructible. SPACE is deterministic.

- For a wide variety of sequential computational models, including for Turing machines, SPACE(f(n)-ω(log(f(n)+n))) ⊊ SPACE(f(n)). This holds even if SPACE(f(n)-ω(log(f(n)+n))) is defined using a different computational model than SPACE(f(n)) because the different models can simulate each other with O(log(f(n)+n)) space overhead.

- For certain computational models, including Turing machines with a fixed number of tapes (with one head per tape) and fixed alphabet size and with delimiters for the visited segment on each tape, SPACE(f(n)-ω(1)) ⊊ SPACE(f(n)).

The proof is similar to the proof of the space hierarchy theorem, but with two complications: The universal Turing machine has to be space-efficient, and the reversal has to be space-efficient. One can generally construct universal Turing machines with O(log(space)) space overhead, and under appropriate assumptions, just O(1) space overhead (which may depend on the machine being simulated). For the reversal, the key issue is how to detect if the simulated machine rejects by entering an infinite (space-constrained) loop. Simply counting the number of steps taken would increase space consumption by about f(n). At the cost of a potentially exponential time increase, loops can be detected space-efficiently as follows: [1]

Modify the machine to erase everything and to go to a specific configuration A on success. Use depth-first search to determine whether A is reachable in the space bound from the starting configuration. The search starts at A and goes over configurations that lead to A. Because of determinism, this can be done in place and without going into a loop. Also (but this is not necessary for the proof), to determine whether the machine exceeds the space bound (as opposed to looping within the space bound), we can iterate over all configurations about to exceed the space bound and check (again using depth-first search) whether the initial configuration leads to any of them.
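The backward search can be sketched on a toy configuration graph. Because the machine is deterministic, every configuration has at most one successor, so the configurations that lead to the halting configuration A form a tree rooted at A. The sketch walks that tree keeping only the current node and the node it just came from, instead of a stack. The names `next_predecessor` and `reaches`, the explicit list of configurations, and the successor function passed as a parameter are all assumptions for illustration; a real construction would enumerate configurations within the space bound rather than store them in a list.

```python
from typing import Callable, Hashable, Optional, Sequence

Config = Hashable
Successor = Callable[[Config], Optional[Config]]


def next_predecessor(c: Config, after: Optional[Config],
                     configs: Sequence[Config], successor: Successor) -> Optional[Config]:
    """First predecessor of c listed after `after` (or the first one if after is None)."""
    passed = after is None
    for p in configs:
        if passed and successor(p) == c:
            return p
        if p == after:
            passed = True
    return None


def reaches(start: Config, target: Config,
            configs: Sequence[Config], successor: Successor) -> bool:
    """Decide whether start leads to target; target must have no successor."""
    current, came_from = target, None
    while True:
        if current == start:
            return True
        child = next_predecessor(current, came_from, configs, successor)
        if child is not None:
            current, came_from = child, None                  # descend into the tree
        elif current == target:
            return False                                      # whole tree explored
        else:
            current, came_from = successor(current), current  # backtrack one level


if __name__ == "__main__":
    # 0 -> 1 -> 2 -> 5 halts at 5, while 3 -> 4 -> 3 loops forever.
    succ = {0: 1, 1: 2, 2: 5, 3: 4, 4: 3, 5: None}
    print(reaches(0, 5, list(succ), succ.get))
    print(reaches(3, 5, list(succ), succ.get))
```

Backtracking uses the successor function itself to move back toward the root, which is what lets the search run without remembering the path. The price is repeated scans over the configurations, in line with the potentially exponential time increase noted above.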

Corollaries

Corollary 1

For any two functions $f_1$, $f_2: \mathbb{N} \to \mathbb{N}$, where $f_1(n)$ is $o(f_2(n))$ and $f_2$ is space-constructible, $\mathsf{SPACE}(f_1(n)) \subsetneq \mathsf{SPACE}(f_2(n))$.

This corollary lets us separate various space complexity classes.

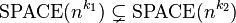

For any natural number k, the function $n^k$ is space-constructible. Therefore for any two natural numbers $k_1 < k_2$ we can prove $\mathsf{SPACE}(n^{k_1}) \subsetneq \mathsf{SPACE}(n^{k_2})$. We can extend this idea to real numbers in the following corollary. This demonstrates the detailed hierarchy within the PSPACE class.

Corollary 2

For any two nonnegative real numbers $a_1 < a_2$, $\mathsf{SPACE}(n^{a_1}) \subsetneq \mathsf{SPACE}(n^{a_2})$.
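The little-o condition needed to apply Corollary 1 to two real exponents $0 \le a_1 < a_2$ can be verified directly. This sketch only checks the growth condition; the space-constructibility of the larger bound is taken as given here.

$$\lim_{n\to\infty} \frac{n^{a_1}}{n^{a_2}} = \lim_{n\to\infty} n^{-(a_2 - a_1)} = 0 \;\Longrightarrow\; n^{a_1} \in o(n^{a_2}).$$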

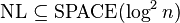

Corollary 3

$\mathsf{NL} \subsetneq \mathsf{PSPACE}$.

Proof

Savitch's theorem shows that $\mathsf{NL} \subseteq \mathsf{SPACE}(\log^2 n)$, while the space hierarchy theorem shows that $\mathsf{SPACE}(\log^2 n) \subsetneq \mathsf{SPACE}(n)$. Thus we get this corollary along with the fact that TQBF ∉ NL since TQBF is PSPACE-complete.
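Written as a single chain, where the final inclusion is immediate because linear space is polynomial space:

$$\mathsf{NL} \subseteq \mathsf{SPACE}(\log^2 n) \subsetneq \mathsf{SPACE}(n) \subseteq \mathsf{PSPACE}.$$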

This could also be proven using the non-deterministic space hierarchy theorem to show that NL ⊊ NPSPACE, and using Savitch's theorem to show that PSPACE = NPSPACE.

Corollary 4

$\mathsf{PSPACE} \subsetneq \mathsf{EXPSPACE}$.

This corollary shows the existence of decidable problems that are intractable. In other words, their decision procedures must use more than polynomial space.

Corollary 5

There are problems in PSPACE requiring an arbitrarily large exponent to solve; therefore PSPACE does not collapse to $\mathsf{DSPACE}(n^k)$ for some constant k.
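The argument behind this corollary fits in one line. Suppose, for contradiction, that PSPACE equals $\mathsf{DSPACE}(n^k)$ for some fixed k. Then

$$\mathsf{DSPACE}(n^{k+1}) \subseteq \mathsf{PSPACE} = \mathsf{DSPACE}(n^{k}) \subsetneq \mathsf{DSPACE}(n^{k+1}),$$

where the strict inclusion is the space hierarchy theorem applied to $n^k \in o(n^{k+1})$. No class is a proper subset of itself, so no such k exists.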

References

- [1] Sipser, Michael (1978). "Halting Space-Bounded Computations". Proceedings of the 19th Annual Symposium on Foundations of Computer Science.

- Arora, Sanjeev; Barak, Boaz (2009). Computational complexity. A modern approach. Cambridge University Press. ISBN 978-0-521-42426-4. Zbl 1193.68112.

- Luca Trevisan. Notes on Hierarchy Theorems. Handout 7. CS172: Automata, Computability and Complexity. U.C. Berkeley. April 26, 2004.

- Viliam Geffert. Space hierarchy theorem revised. Theoretical Computer Science, volume 295, number 1-3, p.171-187. February 24, 2003.

- Sipser, Michael (1997). Introduction to the Theory of Computation. PWS Publishing. ISBN 0-534-94728-X. Pages 306–310 of section 9.1: Hierarchy theorems.

- Papadimitriou, Christos (1993). Computational Complexity (1st ed.). Addison Wesley. ISBN 0-201-53082-1. Section 7.2: The Hierarchy Theorem, pp.143–146.