Function of several real variables


In mathematical analysis, and applications in geometry, applied mathematics, engineering, natural sciences, and economics, a function of several real variables or real multivariate function is a function with more than one argument, with all arguments being real variables. This concept extends the idea of a function of a real variable to several variables. The "input" variables take real values, while the "output", also called the "value of the function", may be real or complex. However, the study of the complex valued functions may be easily reduced to the study of the real valued functions, by considering the real and imaginary parts of the complex function; therefore, unless explicitly specified, only real valued functions will be considered in this article.

The domain of a function of several variables is the subset of ℝⁿ for which the function is defined. As usual, the domain of a function of several real variables is supposed to contain an open subset of ℝⁿ.

General definition

A real-valued function of n real variables is a function that takes as input n real numbers, commonly represented by the variables x1, x2, ..., xn, and produces another real number, the value of the function, commonly denoted f(x1, x2, ..., xn). For simplicity, in this article a real-valued function of several real variables will be simply called a function. To avoid any ambiguity, the other types of functions that may occur will be explicitly specified.

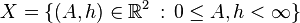

Some functions are defined for all real values of the variables (one says that they are everywhere defined), but other functions are defined only if the values of the variables are taken in a subset X of ℝⁿ, the domain of the function, which is always supposed to contain an open subset of ℝⁿ. In other words, a real-valued function of n real variables is a function

$$f : X \to \mathbb{R}$$

such that its domain X is a subset of ℝⁿ that contains an open set.

Since an element of X is an n-tuple (x1, x2, ..., xn) (usually delimited by parentheses), the general notation for denoting functions would be f((x1, x2, ..., xn)). The common usage, much older than the general definition of functions between sets, is not to use double parentheses and to simply write f(x1, x2, ..., xn).

It is also common to abbreviate the n-tuple (x1, x2, ..., xn) by using a notation similar to that for vectors, like boldface **x**, underline x̲, or overarrow x⃗. This article will use bold.

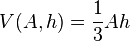

A simple example of a function in two variables could be:

$$V : X \to \mathbb{R}, \quad X = \{(A, h) \in \mathbb{R}^2 : A > 0,\ h > 0\}, \quad V(A, h) = \frac{1}{3} A h$$

which is the volume V of a cone with base area A and height h measured perpendicularly from the base. The domain restricts both variables to be positive since lengths and areas must be positive.

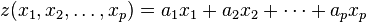

For an example of a linear function in two variables:

$$z = f(x, y) = ax + by$$

where a and b are real non-zero constants. Using the three-dimensional Cartesian coordinate system, where the xy plane is the domain ℝ² and the z axis is the codomain ℝ, one can visualize the graph of f as a plane, with a slope of a in the positive x direction and a slope of b in the positive y direction. The function is well-defined at all points (x, y) in ℝ². The previous example can be extended easily to higher dimensions:

$$z = f(x_1, x_2, \ldots, x_p) = a_1 x_1 + a_2 x_2 + \cdots + a_p x_p$$

for p non-zero real constants a1, a2, ..., ap, whose graph is a p-dimensional hyperplane in (p + 1)-dimensional space.

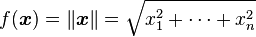

The Euclidean norm:

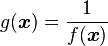

is also a function of n variables which is everywhere defined, while

is defined only for x ≠ (0, 0, ..., 0).
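As a minimal Python sketch of the difference between the two domains (the names `norm` and `inv_norm` are hypothetical, chosen only for illustration), the norm can be evaluated at every point of ℝⁿ, whereas its reciprocal has to reject the origin:

```python
import math
from typing import Sequence

def norm(x: Sequence[float]) -> float:
    """Euclidean norm ||x||; defined for every point x of R^n."""
    return math.sqrt(sum(xi * xi for xi in x))

def inv_norm(x: Sequence[float]) -> float:
    """1 / ||x||; the origin (0, ..., 0) is not in the domain."""
    r = norm(x)
    if r == 0.0:
        raise ValueError("the origin is outside the domain of 1/||x||")
    return 1.0 / r

print(norm((3.0, 4.0)))       # 5.0
print(inv_norm((3.0, 4.0)))   # 0.2
```

Raising an exception is one possible way to model "not in the domain"; returning a sentinel value would be another design choice.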

For a non-linear example function in two variables:

which takes in all points in X, a disk of radius √8 "punctured" at the origin (x, y) = (0, 0) in the plane ℝ², and returns a point in ℝ. The domain does not include the origin (x, y) = (0, 0); if it did, f would be ill-defined at that point. Using a 3D Cartesian coordinate system with the xy plane as the domain ℝ² and the z axis as the codomain ℝ, the graph of f can be visualized as a curved surface.

The function can be evaluated at the point (x, y) = (2, √3) in X:

However, the function couldn't be evaluated at, say

since these values of x and y do not satisfy the domain's rule.

Image

The image of a function f(x1, x2, ..., xn) is the set of all values of f when the n-tuple (x1, x2, ..., xn) runs in the whole domain of f. For a continuous (see below for a definition) real-valued function which has a connected domain, the image is either an interval or a single value. In the latter case, the function is a constant function.

The preimage of a given real number y is called a level set. It is the set of the solutions of the equation y = f(x1, x2, ..., xn).

Domain

The domain of a function of several real variables is a subset of ℝⁿ that is sometimes, but not always, explicitly defined. In fact, if one restricts the domain X of a function f to a subset Y ⊂ X, one gets formally a different function, the restriction of f to Y, which is denoted f|Y. In practice, it is often (but not always) harmless to identify f and f|Y, and to omit the subscript |Y.

Conversely, it is sometimes possible to enlarge the domain of a given function naturally, for example by continuity or by analytic continuation. This means that it is not always worthwhile to define the domain of a function of several real variables explicitly.

Moreover, many functions are defined in such a way that it is difficult to specify explicitly their domain, or even an open subset of their domain. For example, consider a function f such that f(0) = 0, which is defined and continuous (see below for a definition) in a ball B centered at 0 = (0, ..., 0). Then the functions

and

are defined and continuous in a ball C centered at 0. Although the existence of C follows easily from the definition of the continuity of f, it is impossible to compute its radius, or even a lower bound of its radius, without further information on f. Even if f is explicitly given, for example as a multivariate polynomial, the computation of the radius of C may be very difficult.

Algebraic structure

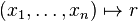

The arithmetic operations may be applied to the functions in the following way:

- For every real number r, the constant function (x1, ..., xn) ↦ r is everywhere defined.
- For every real number r and every function f, the function r f : (x1, ..., xn) ↦ r f(x1, ..., xn) has the same domain as f (or is everywhere defined if r = 0).
- If f and g are two functions of respective domains X and Y such that X ∩ Y contains an open subset of ℝⁿ, then f + g : (x1, ..., xn) ↦ f(x1, ..., xn) + g(x1, ..., xn) and f g : (x1, ..., xn) ↦ f(x1, ..., xn) g(x1, ..., xn) are functions that have a domain containing X ∩ Y.

It follows that the functions of n variables that are everywhere defined and the functions of n variables that are defined in some neighbourhood of a given point both form commutative algebras over the reals (ℝ-algebras).

One may similarly define

$$\frac{1}{f} : (x_1, \ldots, x_n) \mapsto \frac{1}{f(x_1, \ldots, x_n)}$$

which is a function only if the set of the points (x1, ..., xn) in the domain of f such that f(x1, ..., xn) ≠ 0 contains an open subset of ℝⁿ. This constraint implies that the above two algebras are not fields.
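These pointwise operations can be sketched in Python by modelling a function of n variables as a callable on an n-tuple. This is an illustrative assumption (the helper names `constant`, `scale`, `add`, `mul` and `reciprocal` are hypothetical); domains are not tracked explicitly, so a point outside the domain of 1/f is only detected when f vanishes there:

```python
from typing import Callable, Sequence

# A function of n real variables, modelled as a callable on an n-tuple.
Func = Callable[[Sequence[float]], float]

def constant(r: float) -> Func:
    """The constant function x -> r, defined everywhere."""
    return lambda x: r

def scale(r: float, f: Func) -> Func:
    """r f; for r = 0 this is the everywhere-defined zero function."""
    if r == 0:
        return constant(0.0)
    return lambda x: r * f(x)

def add(f: Func, g: Func) -> Func:
    """f + g, evaluated pointwise (defined at least on X ∩ Y)."""
    return lambda x: f(x) + g(x)

def mul(f: Func, g: Func) -> Func:
    """f g, evaluated pointwise (defined at least on X ∩ Y)."""
    return lambda x: f(x) * g(x)

def reciprocal(f: Func) -> Func:
    """1/f, which is only defined where f(x) != 0."""
    def h(x: Sequence[float]) -> float:
        value = f(x)
        if value == 0:
            raise ZeroDivisionError("f(x) = 0, so x is outside the domain of 1/f")
        return 1.0 / value
    return h

def f(x: Sequence[float]) -> float:
    return x[0] + x[1]

def g(x: Sequence[float]) -> float:
    return x[0] * x[1]

print(add(f, g)((2.0, 3.0)))      # 11.0
print(mul(f, g)((2.0, 3.0)))      # 30.0
print(reciprocal(f)((2.0, 3.0)))  # 0.2
```

Evaluating `reciprocal(f)` at (1, −1), where f vanishes, raises an error, mirroring the fact that 1/f is not defined there.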

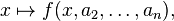

Univariable functions associated with a multivariable function

One can easily obtain a function in one real variable by giving a constant value to all but one of the variables. For example, if (a1, ..., an) is a point of the interior of the domain of the function f, we can fix the values of x2, ..., xn to a2, ..., an respectively, to get a univariable function

$$x \mapsto f(x, a_2, \ldots, a_n)$$

whose domain contains an interval centered at a1. This function may also be viewed as the restriction of the function f to the line defined by the equations xi = ai, for i = 2, ..., n.

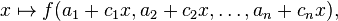

Other univariable functions may be defined by restricting f to any line passing through (a1, ..., an). These are the functions

$$t \mapsto f(a_1 + c_1 t, a_2 + c_2 t, \ldots, a_n + c_n t)$$

where the ci are real numbers that are not all zero.

In the next section, we will show that, if the multivariable function is continuous, so are all these univariable functions, but the converse is not necessarily true.

Continuity and limit

Until the second half of the 19th century, only continuous functions were considered by mathematicians. At that time, the notion of continuity was elaborated for functions of one or several real variables, a rather long time before the formal definition of a topological space and of a continuous map between topological spaces. As continuous functions of several real variables are ubiquitous in mathematics, it is worthwhile to define this notion without reference to the general notion of continuous maps between topological spaces.

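For defining continuity, it is useful to consider the distance function of ℝⁿ, which is an everywhere defined function of 2n real variables:

$$d(\mathbf{x}, \mathbf{y}) = d(x_1, \ldots, x_n, y_1, \ldots, y_n) = \sqrt{(x_1 - y_1)^2 + \cdots + (x_n - y_n)^2}$$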

A function f is continuous at a point a = (a1, ..., an) which is interior to its domain if, for every positive real number ε, there is a positive real number δ such that |f(x) − f(a)| < ε for all x such that d(x, a) < δ. In other words, δ may be chosen small enough for the image by f of the ball of radius δ centered at a to be contained in the interval of length 2ε centered at f(a). A function is continuous if it is continuous at every point of its domain.

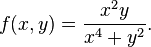

If a function is continuous at a, then all the univariate functions that are obtained by fixing all the variables xi but one at the value ai are continuous at the corresponding ai. The converse is false; this means that all these univariate functions may be continuous for a function that is not continuous at a. For an example, consider the function f such that f(0, 0) = 0, and that is otherwise defined by

$$f(x, y) = \frac{x^2 y}{x^4 + y^2}.$$

The functions x ↦ f(x, 0) and y ↦ f(0, y) are both constant and equal to zero, and are therefore continuous. The function f is not continuous at (0, 0), because, if ε < 1/2 and y = x2 ≠ 0, we have f(x, y) = 1/2, even if |x| is very small. Although not continuous, this function has the further property that all the univariate functions obtained by restricting it to a line passing through (0, 0) are also continuous. In fact, we have

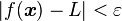

for λ ≠ 0. The limit of a real-valued function of several real variables is as follows.[1] Let a = (a1, a2, ..., an) be a point in topological closure of the domain X of the function f. The function, f has a limit L when x tends toward a, denoted

if the following condition is satisfied: for every real number ε > 0, there is a real number δ > 0 such that

$$|f(\mathbf{x}) - L| < \varepsilon$$

for all x in the domain such that

$$d(\mathbf{x}, \mathbf{a}) < \delta.$$

If the limit exists, it is unique. If a is in the interior of the domain, the limit exists if and only if the function is continuous at a. In this case, we have

$$\lim_{\mathbf{x} \to \mathbf{a}} f(\mathbf{x}) = f(\mathbf{a}).$$

When a is on the boundary of the domain of f, and f has a limit at a, the latter formula allows one to "extend by continuity" the domain of f to a.
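The counterexample f, with f(0, 0) = 0 and f(x, y) = x²y/(x⁴ + y²) elsewhere, can be probed numerically. The following Python sketch (function names are illustrative) evaluates f along a line through the origin, along the y axis, and along the parabola y = x². Along the lines the values approach f(0, 0) = 0, while along the parabola they stay at 1/2, so f has no limit at (0, 0). Finitely many sample points only illustrate this; they do not prove it.

```python
def f(x: float, y: float) -> float:
    """f(0, 0) = 0 and f(x, y) = x^2 y / (x^4 + y^2) elsewhere."""
    if x == 0.0 and y == 0.0:
        return 0.0
    return x * x * y / (x ** 4 + y * y)

def along_line(lam: float, t: float) -> float:
    """Restriction of f to the line y = lam * x, evaluated at x = t."""
    return f(t, lam * t)

def along_parabola(t: float) -> float:
    """Value of f at (t, t^2); equals 1/2 (up to rounding) for t != 0."""
    return f(t, t * t)

for t in (1e-1, 1e-3, 1e-5):
    print(f"t={t:g}  line y=2x: {along_line(2.0, t):.3e}  "
          f"axis x=0: {f(0.0, t):.3e}  parabola: {along_parabola(t):.6f}")
```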

Symmetry

A symmetric function is a function f that is unchanged when two variables xi and xj are interchanged:

$$f(\ldots, x_i, \ldots, x_j, \ldots) = f(\ldots, x_j, \ldots, x_i, \ldots)$$

where i and j are each one of 1, 2, ..., n. For example:

$$f(x, y, z, t) = t^2 - x^2 - y^2 - z^2$$

is symmetric in x, y, z since interchanging any pair of x, y, z leaves f unchanged, but is not symmetric in all of x, y, z, t, since interchanging t with x, y, or z gives a different function.
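Symmetry in a pair of variables can be spot-checked numerically. The Python sketch below assumes, for illustration, f(x, y, z, t) = t² − x² − y² − z² (a function symmetric in x, y, z but not in t); the helper `symmetric_in` is hypothetical and compares f at random points before and after swapping two arguments. A passing check is evidence, not a proof.

```python
import itertools
import random
from typing import Callable

def f(x: float, y: float, z: float, t: float) -> float:
    # Illustrative function (an assumption): symmetric in x, y, z only.
    return t * t - x * x - y * y - z * z

def symmetric_in(func: Callable[..., float], n: int, i: int, j: int,
                 trials: int = 200, seed: int = 0, tol: float = 1e-9) -> bool:
    """Spot-check that swapping arguments i and j leaves func unchanged
    at random points; a numerical test, not a proof of symmetry."""
    rng = random.Random(seed)
    for _ in range(trials):
        args = [rng.uniform(-10.0, 10.0) for _ in range(n)]
        swapped = args.copy()
        swapped[i], swapped[j] = swapped[j], swapped[i]
        if abs(func(*args) - func(*swapped)) > tol:
            return False
    return True

names = "xyzt"
for i, j in itertools.combinations(range(4), 2):
    print(names[i], names[j], symmetric_in(f, 4, i, j))
```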

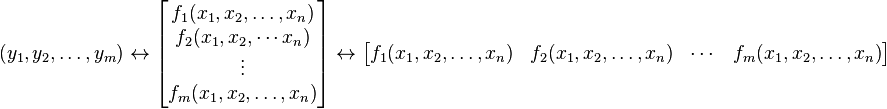

Function composition

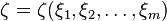

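Suppose the functions

$$\xi_1 = \xi_1(x_1, x_2, \ldots, x_n), \quad \xi_2 = \xi_2(x_1, x_2, \ldots, x_n), \quad \ldots, \quad \xi_m = \xi_m(x_1, x_2, \ldots, x_n)$$

or more compactly ξ = ξ(x), are all defined on a domain X. As the n-tuple x = (x1, x2, ..., xn) varies in X, a subset of ℝⁿ, the m-tuple ξ = (ξ1, ξ2, ..., ξm) varies in another region Ξ, a subset of ℝᵐ. To restate this:

$$\boldsymbol{\xi} : X \to \Xi, \quad X \subseteq \mathbb{R}^n, \quad \Xi \subseteq \mathbb{R}^m.$$

Then, a function ζ of the functions ξ(x) defined on Ξ,

$$\zeta : \Xi \to \mathbb{R}, \quad \zeta = \zeta(\xi_1, \xi_2, \ldots, \xi_m),$$

is a function composition defined on X,[2] in other terms the mapping

$$\zeta \circ \boldsymbol{\xi} : X \to \mathbb{R}, \quad \mathbf{x} \mapsto \zeta(\xi_1(\mathbf{x}), \xi_2(\mathbf{x}), \ldots, \xi_m(\mathbf{x})).$$

Note that the numbers m and n do not need to be equal.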

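For example, the function

$$f(x, y) = e^{xy}[\sin 3(x - y) - \cos 2(x + y)]$$

defined everywhere on ℝ² can be rewritten by introducing the functions

$$(\alpha, \beta, \gamma) = (\alpha(x, y), \beta(x, y), \gamma(x, y)) = (xy,\ x - y,\ x + y),$$

which map ℝ² into ℝ³, to obtain

$$f(x, y) = \zeta(\alpha(x, y), \beta(x, y), \gamma(x, y)) = \zeta(\alpha, \beta, \gamma) = e^{\alpha}[\sin(3\beta) - \cos(2\gamma)],$$

where ζ is everywhere defined on ℝ³.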

Function composition can be used to simplify functions, which is useful for carrying out multiple integrals and solving partial differential equations.
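As a quick consistency check of the example above, the following Python sketch (names are illustrative) evaluates f(x, y) = e^{xy}[sin 3(x − y) − cos 2(x + y)] directly and as the composition ζ(α(x, y), β(x, y), γ(x, y)), with α = xy, β = x − y, γ = x + y and ζ(α, β, γ) = e^α[sin(3β) − cos(2γ)]:

```python
import math

def f(x: float, y: float) -> float:
    """The original function of (x, y)."""
    return math.exp(x * y) * (math.sin(3 * (x - y)) - math.cos(2 * (x + y)))

def xi(x: float, y: float) -> tuple[float, float, float]:
    """Inner map R^2 -> R^3: (alpha, beta, gamma) = (xy, x - y, x + y)."""
    return x * y, x - y, x + y

def zeta(alpha: float, beta: float, gamma: float) -> float:
    """Outer function of three variables, defined on all of R^3."""
    return math.exp(alpha) * (math.sin(3 * beta) - math.cos(2 * gamma))

def composed(x: float, y: float) -> float:
    """The composition zeta(xi(x, y))."""
    return zeta(*xi(x, y))

for point in [(0.0, 0.0), (0.5, -1.2), (2.0, 0.3)]:
    print(point, f(*point), composed(*point))
```

Splitting the computation this way is mainly useful when the inner variables α, β, γ have a meaning of their own, for example as new integration variables.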

Calculus

Elementary calculus is the calculus of real-valued functions of one real variable, and the principal ideas of differentiation and integration of such functions can be extended to functions of more than one real variable; this extension is multivariable calculus.

Partial derivatives

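Partial derivatives can be defined with respect to each variable:

$$\frac{\partial f}{\partial x_i}(x_1, \ldots, x_n) = \lim_{h \to 0} \frac{f(x_1, \ldots, x_i + h, \ldots, x_n) - f(x_1, \ldots, x_i, \ldots, x_n)}{h}, \quad i = 1, 2, \ldots, n.$$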

Partial derivatives themselves are functions, each of which represents the rate of change of f parallel to one of the x1, x2, ..., xn axes at all points in the domain (if the derivatives exist and are continuous; see also below). A first derivative is positive if the function increases along the direction of the relevant axis, negative if it decreases, and zero if there is no increase or decrease. Evaluating a partial derivative at a particular point in the domain gives the rate of change of the function at that point in the direction parallel to a particular axis, a real number.
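Since a partial derivative only varies one variable, it can be approximated by a one-dimensional difference quotient. The Python sketch below uses a central difference, which is a standard numerical approximation rather than the limit itself; the function `partial`, the step `h = 1e-6`, and the example f(x1, x2) = x1²x2 + sin x2 are all illustrative assumptions. The exact partial derivatives are 2x1x2 and x1² + cos x2.

```python
import math
from typing import Callable, Sequence

def partial(f: Callable[[Sequence[float]], float], x: Sequence[float],
            i: int, h: float = 1e-6) -> float:
    """Central-difference approximation of the partial derivative of f
    with respect to x_i at the point x (all other variables held fixed)."""
    forward = list(x)
    backward = list(x)
    forward[i] += h
    backward[i] -= h
    return (f(forward) - f(backward)) / (2.0 * h)

def f(x: Sequence[float]) -> float:
    # Example function f(x1, x2) = x1^2 x2 + sin(x2), with 0-based indices.
    return x[0] ** 2 * x[1] + math.sin(x[1])

a = (1.0, 2.0)
print(partial(f, a, 0), 2 * a[0] * a[1])             # both close to 4
print(partial(f, a, 1), a[0] ** 2 + math.cos(a[1]))  # both close to 1 + cos 2
```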

For real-valued functions of a real variable, y = f(x), the ordinary derivative dy/dx is geometrically the slope of the tangent line to the curve y = f(x) at each point in the domain. Partial derivatives extend this idea to tangent hyperplanes to the graph of f, a hypersurface in ℝⁿ⁺¹.

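The second order partial derivatives can be calculated for every pair of variables:

$$\frac{\partial^2 f}{\partial x_i \, \partial x_j} = \frac{\partial}{\partial x_i}\left(\frac{\partial f}{\partial x_j}\right), \quad i, j = 1, 2, \ldots, n.$$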

Geometrically, they are related to the local curvature of the graph of f at each point in the domain. At any point where the function is well-defined, the function could be increasing along some axes, decreasing along others, and neither increasing nor decreasing along yet others.

This leads to a variety of possible stationary points: global or local maxima, global or local minima, and saddle points, the multidimensional analogue of stationary inflection points for real functions of one real variable. The Hessian matrix is the matrix of all the second order partial derivatives; it is used to investigate the stationary points of the function, which is important for mathematical optimization.
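A minimal Python sketch of how the Hessian is used at a stationary point, assuming finite-difference approximations of the second partial derivatives (the helpers `hessian` and `classify_2d` are hypothetical). For two variables, the classical second-derivative test looks at the determinant of the Hessian: negative means a saddle point, positive means an extremum whose type is given by the sign of ∂²f/∂x²; the test says nothing when the determinant vanishes, and it is only meaningful at a stationary point.

```python
from typing import Callable, List, Sequence

Func = Callable[[Sequence[float]], float]

def hessian(f: Func, x: Sequence[float], h: float = 1e-4) -> List[List[float]]:
    """Finite-difference Hessian: H[i][j] approximates the second partial
    derivative of f with respect to x_i and x_j at the point x."""
    n = len(x)

    def shifted(i: int, si: float, j: int, sj: float) -> float:
        p = list(x)
        p[i] += si * h
        p[j] += sj * h
        return f(p)

    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            H[i][j] = (shifted(i, 1, j, 1) - shifted(i, 1, j, -1)
                       - shifted(i, -1, j, 1) + shifted(i, -1, j, -1)) / (4 * h * h)
    return H

def classify_2d(H: List[List[float]], tol: float = 1e-6) -> str:
    """Second-derivative test for a stationary point of a function of (x, y)."""
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    if det < -tol:
        return "saddle point"
    if det > tol:
        return "local minimum" if H[0][0] > 0 else "local maximum"
    return "inconclusive"

def saddle(p: Sequence[float]) -> float:
    return p[0] ** 2 - p[1] ** 2

def bowl(p: Sequence[float]) -> float:
    return p[0] ** 2 + p[1] ** 2

print(classify_2d(hessian(saddle, (0.0, 0.0))))  # saddle point
print(classify_2d(hessian(bowl, (0.0, 0.0))))    # local minimum
```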

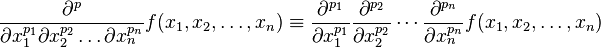

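In general, partial derivatives of higher order p have the form:

$$\frac{\partial^p f}{\partial x_1^{p_1} \, \partial x_2^{p_2} \cdots \partial x_n^{p_n}} = \frac{\partial^{p_1}}{\partial x_1^{p_1}} \frac{\partial^{p_2}}{\partial x_2^{p_2}} \cdots \frac{\partial^{p_n}}{\partial x_n^{p_n}} f(x_1, x_2, \ldots, x_n)$$

where p1, p2, ..., pn are each integers between 0 and p such that p1 + p2 + ... + pn = p, using the definitions of zeroth partial derivatives as identity operators:

$$\frac{\partial^0 f}{\partial x_i^0} = f, \quad i = 1, 2, \ldots, n.$$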

The number of possible partial derivatives increases with p, although some mixed partial derivatives (those with respect to more than one variable) are superfluous, because of the symmetry of second order partial derivatives (which holds, for example, when the derivatives involved are continuous). This reduces the number of partial derivatives to calculate for some p.

Multivariable differentiability

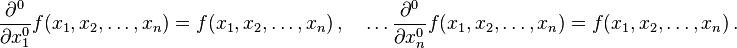

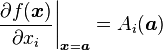

A function f(x) is differentiable at a point a if there is an n-tuple of numbers dependent on a in general, A(a) = (A1(a), A2(a), ..., An(a)), so that:[3]

$$f(\mathbf{x}) = f(\mathbf{a}) + \sum_{i=1}^{n} A_i(\mathbf{a})(x_i - a_i) + \alpha(\mathbf{x})\,|\mathbf{x} - \mathbf{a}|$$

where α → 0 as |x − a| → 0. This means that if f is differentiable at a point a, then f is continuous at x = a, although the converse is not true: continuity in the domain does not imply differentiability in the domain. If f is differentiable at a, then the first order partial derivatives exist at a and

$$\left.\frac{\partial f(\mathbf{x})}{\partial x_i}\right|_{\mathbf{x} = \mathbf{a}} = A_i(\mathbf{a})$$

for i = 1, 2, ..., n, which can be found from the definitions of the individual partial derivatives, so the partial derivatives of f exist.

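Assuming an n-dimensional analogue of a rectangular Cartesian coordinate system, these partial derivatives can be used to form a vectorial linear differential operator ∇ (known as "nabla" or "del"), which applied to f gives the gradient of f in this coordinate system:

$$\nabla = \left(\frac{\partial}{\partial x_1}, \frac{\partial}{\partial x_2}, \ldots, \frac{\partial}{\partial x_n}\right), \qquad \nabla f = \left(\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \ldots, \frac{\partial f}{\partial x_n}\right).$$

The operator ∇ is used extensively in vector calculus, because it is useful for constructing other differential operators and compactly formulating theorems in vector calculus.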

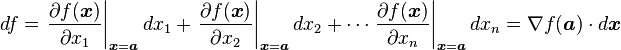

Then substituting the gradient ∇f (evaluated at x = a) with a slight rearrangement gives:

$$f(\mathbf{x}) - f(\mathbf{a}) = \nabla f(\mathbf{a}) \cdot (\mathbf{x} - \mathbf{a}) + \alpha\,|\mathbf{x} - \mathbf{a}|$$

where · denotes the dot product. Neglecting the remainder term α|x − a|, this equation gives the best linear approximation of the function f at points x within a neighborhood of a. For infinitesimal changes in f and x as x → a:

$$df = \nabla f(\mathbf{a}) \cdot d\mathbf{x} = \sum_{i=1}^{n} \left.\frac{\partial f}{\partial x_i}\right|_{\mathbf{x} = \mathbf{a}} dx_i$$

which is defined as the total differential, or simply differential, of f at a. This expression corresponds to the total infinitesimal change of f, obtained by adding all the infinitesimal changes of f in all the xi directions. Also, df can be construed as a covector with basis vectors as the infinitesimals dxi in each direction and partial derivatives of f as the components.
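The meaning of α in the differentiability condition can be checked numerically: the error of the linear approximation, divided by |x − a|, should go to zero as x approaches a. The Python sketch below assumes the illustrative function f(x, y) = eˣ sin y, whose exact gradient is (eˣ sin y, eˣ cos y); the helper `relative_remainder` is hypothetical and returns |α| for a displacement d = x − a.

```python
import math

def f(x: float, y: float) -> float:
    # Illustrative smooth function (an assumption, not from the text).
    return math.exp(x) * math.sin(y)

def grad_f(x: float, y: float) -> tuple[float, float]:
    # Exact partial derivatives of f.
    return math.exp(x) * math.sin(y), math.exp(x) * math.cos(y)

def relative_remainder(a: tuple[float, float], d: tuple[float, float]) -> float:
    """|f(a + d) - f(a) - grad f(a) . d| / |d|, i.e. |alpha| in the text."""
    gx, gy = grad_f(*a)
    linear = gx * d[0] + gy * d[1]
    remainder = f(a[0] + d[0], a[1] + d[1]) - f(*a) - linear
    return abs(remainder) / math.hypot(d[0], d[1])

a = (0.0, 1.0)
for s in (1e-1, 1e-2, 1e-3, 1e-4):
    print(s, relative_remainder(a, (s, -2 * s)))  # shrinks roughly like s
```

For a smooth f the remainder is of second order in |x − a|, which is why |α| shrinks roughly in proportion to the step here.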

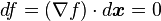

Geometrically, ∇f is perpendicular to the level sets of f, given by f(x) = c, which for some constant c describes an (n − 1)-dimensional hypersurface (at points where ∇f ≠ 0). The differential of a constant is zero:

$$df = \nabla f \cdot d\mathbf{x} = 0$$

in which dx is an infinitesimal change in x in the hypersurface f(x) = c, and since the dot product of ∇f and dx is zero, this means ∇f is perpendicular to dx.

In arbitrary curvilinear coordinate systems in n dimensions, the explicit expression for the gradient would not be so simple - there would be scale factors in terms of the metric tensor for that coordinate system. For the above case used throughout this article, the metric is just the Kronecker delta and the scale factors are all 1.

Differentiability classes

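If all first order partial derivatives evaluated at a point a in the domain:

$$\left.\frac{\partial f(\mathbf{x})}{\partial x_1}\right|_{\mathbf{x} = \mathbf{a}}, \ \left.\frac{\partial f(\mathbf{x})}{\partial x_2}\right|_{\mathbf{x} = \mathbf{a}}, \ \ldots, \ \left.\frac{\partial f(\mathbf{x})}{\partial x_n}\right|_{\mathbf{x} = \mathbf{a}}$$

exist and are continuous for all a in the domain, f has differentiability class C¹. In general, if all order p partial derivatives evaluated at a point a:

$$\left.\frac{\partial^p f(\mathbf{x})}{\partial x_1^{p_1} \, \partial x_2^{p_2} \cdots \partial x_n^{p_n}}\right|_{\mathbf{x} = \mathbf{a}}$$

exist and are continuous, where p1, p2, ..., pn, and p are as above, for all a in the domain, then f is differentiable to order p throughout the domain and has differentiability class Cᵖ.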

If f is of differentiability class C∞, f has continuous partial derivatives of all orders and is called smooth. If f is an analytic function, that is, it equals its Taylor series in a neighborhood of any point in the domain, the notation Cω denotes this differentiability class.

Multiple integration

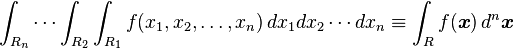

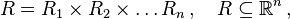

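Definite integration can be extended to multiple integration over the several real variables with the notation

$$\int_{R_n} \cdots \int_{R_2} \int_{R_1} f(x_1, x_2, \ldots, x_n) \, dx_1 \, dx_2 \cdots dx_n \equiv \int_R f(\mathbf{x}) \, d^n\mathbf{x}$$

where each region R1, R2, ..., Rn is a subset of or all of the real line:

$$R_1 \subseteq \mathbb{R}, \quad R_2 \subseteq \mathbb{R}, \quad \ldots, \quad R_n \subseteq \mathbb{R},$$

and their Cartesian product gives the region to integrate over as a single set:

$$R = R_1 \times R_2 \times \cdots \times R_n, \quad R \subseteq \mathbb{R}^n,$$

an n-dimensional hypervolume. When evaluated, a definite integral is a real number if the integral converges in the region R of integration (the result of a definite integral may diverge to infinity for a given region; in such cases the integral remains ill-defined). The variables are treated as "dummy" or "bound" variables which are substituted for numbers in the process of integration.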

The integral of a real-valued function of a real variable y = f(x) with respect to x has geometric interpretation as the area bounded by the curve y = f(x) and the x-axis. Multiple integrals extend the dimensionality of this concept: assuming an n-dimensional analogue of a rectangular Cartesian coordinate system, the above definite integral has the geometric interpretation as the (n + 1)-dimensional hypervolume bounded by the graph of f and the x1, x2, ..., xn axes, which may be positive, negative, or zero, depending on the function being integrated (if the integral is convergent).

While bounded hypervolume is a useful insight, the more important idea of definite integrals is that they represent total quantities within space. This has significance in applied mathematics and physics: if f is some scalar density field and x are the position vector coordinates, i.e. f is some scalar quantity per unit n-dimensional hypervolume, then integrating over the region R gives the total amount of the quantity in R. The more formal notions of hypervolume are the subject of measure theory. Above we used the Lebesgue measure; see Lebesgue integration for more on this topic.
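To make the "total amount of a density" reading concrete, here is a minimal Python sketch of a double integral over a rectangle R = [x0, x1] × [y0, y1], approximated with the midpoint rule. This is one simple quadrature scheme chosen for illustration, not the definition of the integral; `midpoint_double_integral` and the density ρ(x, y) = xy are hypothetical. Over [0, 1] × [0, 2] the exact total is (1/2)(2) = 1.

```python
from typing import Callable

def midpoint_double_integral(f: Callable[[float, float], float],
                             x0: float, x1: float, y0: float, y1: float,
                             nx: int = 200, ny: int = 200) -> float:
    """Approximate the integral of f over R = [x0, x1] x [y0, y1] by the
    midpoint rule on an nx-by-ny grid of sub-rectangles."""
    dx = (x1 - x0) / nx
    dy = (y1 - y0) / ny
    total = 0.0
    for i in range(nx):
        x = x0 + (i + 0.5) * dx
        for j in range(ny):
            y = y0 + (j + 0.5) * dy
            total += f(x, y)
    return total * dx * dy

def density(x: float, y: float) -> float:
    # Hypothetical density field (quantity per unit area).
    return x * y

total_amount = midpoint_double_integral(density, 0.0, 1.0, 0.0, 2.0)
print(total_amount)  # close to the exact value 1
```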

Theorems

With the definitions of multiple integration and partial derivatives, key theorems can be formulated, including the fundamental theorem of calculus in several real variables (namely Stokes' theorem), integration by parts in several real variables, and Taylor's theorem for multivariable functions. Evaluating a mixture of integrals and partial derivatives can be done by using the theorem on differentiation under the integral sign.

Vector calculus

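One can collect a number of functions each of several real variables, say

$$y_1 = f_1(x_1, x_2, \ldots, x_n), \quad y_2 = f_2(x_1, x_2, \ldots, x_n), \quad \ldots, \quad y_m = f_m(x_1, x_2, \ldots, x_n)$$

into an m-tuple, or sometimes as a column vector or row vector, respectively:

$$(y_1, y_2, \ldots, y_m) = \bigl(f_1(x_1, \ldots, x_n), f_2(x_1, \ldots, x_n), \ldots, f_m(x_1, \ldots, x_n)\bigr)$$

$$\begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_m \end{pmatrix} = \begin{pmatrix} f_1(x_1, \ldots, x_n) \\ f_2(x_1, \ldots, x_n) \\ \vdots \\ f_m(x_1, \ldots, x_n) \end{pmatrix}, \qquad \begin{pmatrix} y_1 & y_2 & \cdots & y_m \end{pmatrix} = \begin{pmatrix} f_1(x_1, \ldots, x_n) & f_2(x_1, \ldots, x_n) & \cdots & f_m(x_1, \ldots, x_n) \end{pmatrix}$$

all treated on the same footing as an m-component vector field, and use whichever form is convenient. All the above notations have a common compact notation y = f(x). The calculus of such vector fields is vector calculus. For more on the treatment of row vectors and column vectors of multivariable functions, see matrix calculus.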

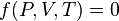

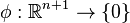

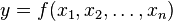

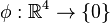

Implicit functions

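A real-valued implicit function of several real variables is not written in the form "y = f(...)". Instead, it is given by an equation: a function ϕ of n + 1 variables maps the space ℝⁿ⁺¹ to ℝ, and only the points that it sends to the zero element of ℝ (just the ordinary zero 0) are considered:

$$\phi : \mathbb{R}^{n+1} \to \mathbb{R}$$

and

$$\phi(x_1, x_2, \ldots, x_n, y) = 0$$

is an equation in all the variables. Implicit functions are a more general way to represent functions, since if

$$y = f(x_1, x_2, \ldots, x_n)$$

then we can always define

$$\phi(x_1, x_2, \ldots, x_n, y) = y - f(x_1, x_2, \ldots, x_n) = 0,$$

but the converse is not always possible, i.e. not all implicit functions have an explicit form.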

For example, using interval notation, let

$$\phi : X \to \mathbb{R}, \quad \phi(x, y, z) = \left(\frac{x}{a}\right)^2 + \left(\frac{y}{b}\right)^2 + \left(\frac{z}{c}\right)^2 - 1 = 0,$$

$$X = [-a, a] \times [-b, b] \times [-c, c] = \{(x, y, z) \in \mathbb{R}^3 : -a \le x \le a,\ -b \le y \le b,\ -c \le z \le c\}.$$

Choosing a 3-dimensional (3D) Cartesian coordinate system, this equation describes the surface of a 3D ellipsoid centered at the origin (x, y, z) = (0, 0, 0) with constant semi-axes a, b, c along the positive x, y and z axes respectively. In the case a = b = c = r, we have a sphere of radius r centered at the origin. Other quadric surfaces which can be described similarly include the hyperboloid and paraboloid; more generally, so can many other surfaces in 3D Euclidean space. The above example can be solved for x, y or z; however it is much tidier to write it in an implicit form.

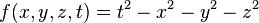

For a more sophisticated example:

for non-zero real constants A, B, C, ω, this function is well-defined for all (t, x, y, z), but it cannot be solved explicitly for these variables and written as "t = ", "x = ", etc.

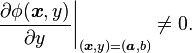

The implicit function theorem for more than two real variables deals with the continuity and differentiability of the function, as follows.[4] Let ϕ(x1, x2, ..., xn, y) be a continuous function with continuous first order partial derivatives, and let ϕ evaluated at a point (a, b) = (a1, a2, ..., an, b) be zero:

$$\phi(\mathbf{a}, b) = 0,$$

and let the first partial derivative of ϕ with respect to y evaluated at (a, b) be non-zero:

$$\left.\frac{\partial \phi(\mathbf{x}, y)}{\partial y}\right|_{(\mathbf{x}, y) = (\mathbf{a}, b)} \neq 0.$$

Then, there is an interval [y1, y2] containing b, and a region R containing a, such that for every x in R there is exactly one value of y in [y1, y2] satisfying ϕ(x, y) = 0, and y is a continuous function of x so that ϕ(x, y(x)) = 0. The total differentials of the functions are:

$$dy = \sum_{i=1}^{n} \frac{\partial y}{\partial x_i} \, dx_i, \qquad d\phi = \sum_{i=1}^{n} \frac{\partial \phi}{\partial x_i} \, dx_i + \frac{\partial \phi}{\partial y} \, dy.$$

Since ϕ(x, y(x)) = 0 identically, dϕ = 0. Substituting dy into the latter differential and equating coefficients of the differentials gives the first order partial derivatives of y with respect to xi in terms of the derivatives of the original function, each as a solution of the linear equation

$$\frac{\partial \phi}{\partial x_i} + \frac{\partial \phi}{\partial y} \frac{\partial y}{\partial x_i} = 0$$

for i = 1, 2, ..., n.
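The linear equation above gives ∂y/∂xi = −(∂ϕ/∂xi)/(∂ϕ/∂y) wherever ∂ϕ/∂y ≠ 0. The Python sketch below checks this on the ellipsoid ϕ(x, y, z) = (x/a)² + (y/b)² + (z/c)² − 1, with z playing the role of the dependent variable y of the theorem and illustrative semi-axes a, b, c = 3, 2, 1. The implicit formula is compared with a central-difference derivative of the explicit upper half z = c√(1 − (x/a)² − (y/b)²).

```python
import math

a, b, c = 3.0, 2.0, 1.0   # semi-axes of the ellipsoid (illustrative values)

def phi(x: float, y: float, z: float) -> float:
    """Implicit equation of the ellipsoid: phi = 0 on the surface."""
    return (x / a) ** 2 + (y / b) ** 2 + (z / c) ** 2 - 1.0

def z_explicit(x: float, y: float) -> float:
    """Upper half of the ellipsoid, solved explicitly for z."""
    return c * math.sqrt(1.0 - (x / a) ** 2 - (y / b) ** 2)

def dz_dx_implicit(x: float, y: float, z: float) -> float:
    """dz/dx = -(dphi/dx) / (dphi/dz), valid where dphi/dz = 2z/c^2 != 0."""
    dphi_dx = 2.0 * x / a ** 2
    dphi_dz = 2.0 * z / c ** 2
    return -dphi_dx / dphi_dz

x, y = 1.0, 0.5
z = z_explicit(x, y)
h = 1e-6
dz_dx_numeric = (z_explicit(x + h, y) - z_explicit(x - h, y)) / (2 * h)
print(phi(x, y, z))                            # approximately 0
print(dz_dx_implicit(x, y, z), dz_dx_numeric)  # agree to several digits
```

Note that the implicit formula needs only ϕ and a point on the surface, not the explicit solution; the explicit z is used here purely as a cross-check.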

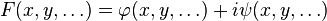

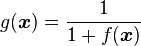

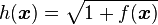

Complex-valued function of several real variables

A complex-valued function of several real variables may be defined by relaxing, in the definition of the real-valued functions, the restriction of the codomain to the real numbers, and allowing complex values.

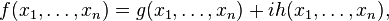

If f(x1, ..., xn) is such a complex valued function, it may be decomposed as

$$f(x_1, \ldots, x_n) = g(x_1, \ldots, x_n) + i\,h(x_1, \ldots, x_n)$$

where g and h are real-valued functions. In other words, the study of the complex valued functions reduces easily to the study of the pairs of real valued functions.

This reduction works for the general properties. However, for an explicitly given function, such as:

the computation of the real and the imaginary part may be difficult.
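Even when closed forms for the parts are hard to find, g and h can always be evaluated pointwise by taking the real and imaginary parts of f numerically. The Python sketch below uses the illustrative function f(x, y) = exp((x + iy)²), chosen because its parts are known in closed form: from (x + iy)² = x² − y² + 2ixy and Euler's formula, g = e^{x²−y²} cos 2xy and h = e^{x²−y²} sin 2xy.

```python
import cmath
import math

def f(x: float, y: float) -> complex:
    """Illustrative complex-valued function of two real variables:
    f(x, y) = exp((x + i y)^2)."""
    return cmath.exp(complex(x, y) ** 2)

def g(x: float, y: float) -> float:
    return f(x, y).real   # real part, evaluated numerically

def h(x: float, y: float) -> float:
    return f(x, y).imag   # imaginary part, evaluated numerically

# Closed forms for comparison.
def g_closed(x: float, y: float) -> float:
    return math.exp(x * x - y * y) * math.cos(2 * x * y)

def h_closed(x: float, y: float) -> float:
    return math.exp(x * x - y * y) * math.sin(2 * x * y)

for x, y in [(0.3, 0.8), (-1.0, 0.5), (1.2, -0.4)]:
    print(g(x, y), g_closed(x, y), h(x, y), h_closed(x, y))
```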

Applications

Multivariable functions of real variables arise inevitably in engineering and physics, because observable physical quantities are real numbers (with associated units and dimensions), and any one physical quantity will generally depend on a number of other quantities.

When creating a mathematical model of a physical system, definitions of quantities, scientific principles, and mathematical theorems are applied to set up equations in terms of the physical quantities characterizing the system. At the simplest level, for problems in a restricted application, these would be algebraic equations or implicit functions; for more general and complicated problems with a broad scope of application, partial differential equations occur, and many non-linear partial differential equations have been formulated for a variety of situations. The solutions to the equations are real or complex valued functions of many real variables.

Examples of real-valued functions of several real variables

Examples in continuum mechanics include the local mass density ρ of a mass distribution, a scalar field which depends on the spatial position coordinates (here Cartesian to exemplify), r = (x, y, z), and time t:

$$\rho = \rho(\mathbf{r}, t) = \rho(x, y, z, t)$$

Similarly for electric charge density for electrically charged objects, and numerous other scalar potential fields.

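Another example is the velocity field, a vector field, which has components of velocity v = (vx, vy, vz) that are each multivariable functions of spatial coordinates and time similarly:

$$\mathbf{v}(\mathbf{r}, t) = \mathbf{v}(x, y, z, t) = [v_x(x, y, z, t),\ v_y(x, y, z, t),\ v_z(x, y, z, t)]$$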

Similarly for other physical vector fields such as electric fields and magnetic fields, and vector potential fields.

Another important example is the equation of state in thermodynamics, an equation relating pressure P, temperature T, and volume V of a fluid; in general it has an implicit form:

$$f(P, V, T) = 0$$

The simplest example is the ideal gas law:

$$f(P, V, T) = PV - nRT = 0$$

where n is the number of moles, constant for a fixed amount of substance, and R the gas constant. Much more complicated equations of state have been empirically derived, but they all have the above implicit form.
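Because the equation of state is implicit, it can be solved for whichever variable is unknown. The Python sketch below writes the ideal gas law as ϕ(P, V, T) = PV − nRT, solves it for P and for T, and checks that ϕ vanishes at the result. The helper names, the sample state (1 mol at 273.15 K in 0.0224 m³), and the truncated value R = 8.314462618 J/(mol·K) are illustrative choices.

```python
R = 8.314462618  # molar gas constant in J/(mol K), truncated value

def phi(P: float, V: float, T: float, n: float) -> float:
    """Implicit equation of state for an ideal gas: PV - nRT = 0."""
    return P * V - n * R * T

def pressure(V: float, T: float, n: float) -> float:
    """Solve phi = 0 for P."""
    return n * R * T / V

def temperature(P: float, V: float, n: float) -> float:
    """Solve phi = 0 for T."""
    return P * V / (n * R)

n, T, V = 1.0, 273.15, 0.0224   # 1 mol at 273.15 K in 0.0224 m^3
P = pressure(V, T, n)
print(P)                        # roughly 1.01e5 Pa
print(phi(P, V, T, n))          # approximately 0
print(temperature(P, V, n))     # recovers 273.15 up to rounding
```

For an empirical equation of state that cannot be rearranged by hand, the same idea would call for a numerical root finder instead of the closed-form solutions used here.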

Real-valued functions of several real variables appear pervasively in economics. In the underpinnings of consumer theory, utility is expressed as a function of the amounts of various goods consumed, each amount being an argument of the utility function. The result of maximizing utility is a set of demand functions, each expressing the amount demanded of a particular good as a function of the prices of the various goods and of income or wealth. In producer theory, a firm is usually assumed to maximize profit as a function of the quantities of various goods produced and of the quantities of various factors of production employed. The result of the optimization is a set of demand functions for the various factors of production and a set of supply functions for the various products; each of these functions has as its arguments the prices of the goods and of the factors of production.

Examples of complex-valued functions of several real variables

Some "physical quantities" may be actually complex valued - such as complex impedance, complex permittivity, complex permeability, and complex refractive index. These are also functions of real variables, such as frequency or time, as well as temperature.

In two-dimensional fluid mechanics, specifically in the theory of the potential flows used to describe fluid motion in 2D, the complex potential

$$F(x, y, \ldots) = \varphi(x, y, \ldots) + i\,\psi(x, y, \ldots)$$

is a complex valued function of the two spatial coordinates x and y, and other real variables associated with the system. The real part φ is the velocity potential and the imaginary part ψ is the stream function.

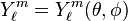

The spherical harmonics occur in physics and engineering as the solution to Laplace's equation, as well as the eigenfunctions of the z-component angular momentum operator, which are complex-valued functions of real-valued spherical polar angles:

$$Y_\ell^m = Y_\ell^m(\theta, \phi)$$

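In quantum mechanics, the wavefunction is necessarily complex-valued, but is a function of real spatial coordinates (or momentum components), as well as time t:

$$\Psi = \Psi(\mathbf{r}, t) = \Psi(x, y, z, t), \qquad \Phi = \Phi(\mathbf{p}, t) = \Phi(p_x, p_y, p_z, t)$$

where the two are related by a Fourier transform.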

See also

References

- R. Courant. Differential and Integral Calculus 2. Wiley Classics Library. pp. 46–47. ISBN 0-471-60840-8.

- R. Courant. Differential and Integral Calculus 2. Wiley Classics Library. p. 70. ISBN 0-471-60840-8.

- W. Fulks (1978). Advanced calculus. John Wiley & Sons. pp. 300–302. ISBN 0-471-02195-4.

- R. Courant. Differential and Integral Calculus 2. Wiley Classics Library. pp. 117–118. ISBN 0-471-60840-8.

- F. Ayres, E. Mendelson (2009). Calculus. Schaum's outline series (5th ed.). McGraw Hill. ISBN 978-0-07-150861-2.

- R. Wrede, M. R. Spiegel (2010). Advanced calculus. Schaum's outline series (3rd ed.). McGraw Hill. ISBN 978-0-07-162366-7.

- W. F. Hughes, J. A. Brighton (1999). Fluid Dynamics. Schaum's outline series (3rd ed.). McGraw Hill. p. 160. ISBN 978-0-07-031118-3.

- R. Penrose (2005). The Road to Reality. Vintage books. ISBN 978-00994-40680.

- S. Dineen (2001). Multivariate Calculus and Geometry. Springer Undergraduate Mathematics Series (2nd ed.). Springer. ISBN 185-233-472-X.

- N. Bourbaki (2004). Functions of a Real Variable: Elementary Theory. Springer. ISBN 354-065-340-6.

- M. A. Moskowitz, F. Paliogiannis (2011). Functions of Several Real Variables. World Scientific. ISBN 981-429-927-8.

- W. Fleming (1977). Functions of Several Variables. Undergraduate Texts in Mathematics (2nd ed.). Springer. ISBN 0-387-902-066.

External links


![f(x,y) = e^{xy}[\sin 3(x-y) - \cos 2(x+y)]](../I/m/551a3f79dc68f28132184e3784219752.png)

![f(x,y) = \zeta(\alpha(x,y),\beta(x,y),\gamma(x,y)) = \zeta(\alpha,\beta,\gamma) = e^\alpha[\sin (3\beta) - \cos (2\gamma)] \,.](../I/m/0a0af45126236d44f28f66a799666da7.png)

![X = [-a,a] \times [-b,b] \times [-c,c] = \{ (x,y,z) \in \mathbb{R}^3 \,:\, -a\leq x\leq a, -b\leq y\leq b, -c\leq z\leq c \}\,.](../I/m/effd691a8355d905bacf775c80e0e826.png)

![z(x,y,\alpha,a,q) = \frac{q}{2\pi}\left[\ln(x+iy- ae^{i\alpha}) - \ln(x+iy + ae^{-i\alpha})\right]](../I/m/4c24e675c8e1e90010931b0409a8e017.png)

![\mathbf{v} (\mathbf{r},t) = \mathbf{v}(x,y,z,t) = [v_x(x,y,z,t), v_y(x,y,z,t), v_z(x,y,z,t)]](../I/m/defc6495722781b7217896b119c9e0d5.png)