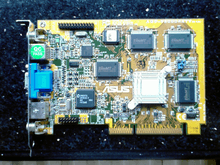

RIVA 128

| Release date | 1997 |
|---|---|
| Codename | NV3 |
| Cards | |
| High-end | RIVA 128, ZX |
| Rendering support | |
| Direct3D | Direct3D 5.0 |

Released in late 1997 by Nvidia, the RIVA 128, or "NV3", was one of the first consumer graphics processing units to integrate 3D acceleration in addition to traditional 2D and video acceleration. Its name is an acronym for Real-time Interactive Video and Animation accelerator.[1]

Following the less successful "NV1" accelerator, RIVA 128 was the first product to gain Nvidia widespread recognition. It was also a major change in technological direction for Nvidia.

Architecture

Nvidia's "NV1" chip had been designed for a fundamentally different type of rendering technology, called quadratic texture mapping, a technique not supported by Direct3D. The RIVA 128 was instead designed to accelerate Direct3D to the utmost extent possible. It was built to render within the Direct3D 5 and OpenGL API specifications. The graphics accelerator consists of 3.5 million transistors built on a 350 nm fabrication process and is clocked at 100 MHz.[1] RIVA 128 has a single pixel pipeline capable of 1 pixel per clock when sampling one texture. It is specified to output pixels at a rate of 100 million per second and 25-pixel triangles at 1.5 million per second.[1] There is 12 KiB of on-chip memory used for pixel and vertex caches.[1] The chip was limited to a 16-bit (Highcolor) pixel format when performing 3D acceleration and a 16-bit Z-buffer.
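The quoted throughput figures follow from simple arithmetic on the numbers in this section. The sketch below works them through; the constant and function names are illustrative and are not taken from Nvidia's documentation.

```python
# Figures quoted in the text; all names here are illustrative only.
CORE_CLOCK_HZ = 100_000_000       # 100 MHz core clock
PIXELS_PER_CLOCK = 1              # single pipeline, one texture sampled
TRIANGLES_PER_SECOND = 1_500_000  # quoted rate for 25-pixel triangles
PIXELS_PER_TRIANGLE = 25


def peak_fill_rate(clock_hz: int, pixels_per_clock: int) -> int:
    """Peak pixel output per second: clock rate times pixels per clock."""
    return clock_hz * pixels_per_clock


def triangle_bound_pixel_rate(triangles_per_second: int, pixels_per_triangle: int) -> int:
    """Pixels drawn per second when running at the quoted triangle rate."""
    return triangles_per_second * pixels_per_triangle


peak = peak_fill_rate(CORE_CLOCK_HZ, PIXELS_PER_CLOCK)
small_triangles = triangle_bound_pixel_rate(TRIANGLES_PER_SECOND, PIXELS_PER_TRIANGLE)

print(peak)             # 100000000
print(small_triangles)  # 37500000
```

On these figures, a scene made entirely of 25-pixel triangles would draw 37.5 million pixels per second, well under the 100 million peak, which suggests that small triangles are bound by triangle throughput rather than by pixel throughput.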

The 2D accelerator engine within RIVA 128 is 128 bits wide and also operates at 100 MHz. In this "fast and wide" configuration, as Nvidia referred to it, RIVA 128 performed admirably for GUI acceleration compared to competitors.[2] A 32-bit hardware VESA-compliant SVGA/VGA core was implemented as well. Video acceleration aboard the chip is optimized for MPEG-2 but lacks full acceleration of that standard. Final picture output is routed through an integrated 206 MHz RAMDAC.[1] RIVA 128 had the advantage of being a combination 2D/3D graphics chip, unlike Voodoo Graphics. This meant that the computer did not require a separate 2D card for output outside of 3D applications. It also allowed 3D rendering within a window. The ability to build a system with just one graphics card, and still have it be feature-complete for the time, made RIVA 128 a lower-cost high-performance solution.

Nvidia equipped RIVA 128 with 4 MiB of SGRAM, a new memory technology for the time, clocked at 100 MHz and connected to the graphics processor via a 128-bit memory bus.[1] This provides memory bandwidth of 1.60 gigabytes per second. The memory was used in a unified memory architecture that shared the whole RAM pool with both framebuffer and texture storage. The main benefit of this, over a split design such as that on Voodoo Graphics and Voodoo², was support for 3D resolutions of 800×600 and 960×720, higher than Voodoo's 640×480.[3]
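The bandwidth figure and the resolution ceiling can both be checked with a little arithmetic. The sketch below assumes a frame buffer layout that the source does not spell out: two 16-bit color buffers (front and back) plus one 16-bit Z-buffer, all sharing the 4 MiB pool with textures. The function names are hypothetical.

```python
MIB = 1024 * 1024


def memory_bandwidth_bytes_per_s(bus_width_bits: int, clock_hz: int) -> int:
    """Peak bandwidth: bytes moved per clock times clocks per second."""
    return (bus_width_bits // 8) * clock_hz


def texture_budget_bytes(width: int, height: int, total_bytes: int = 4 * MIB) -> int:
    """Bytes left for textures after the frame buffers are allocated.

    Assumption (not from the source): two 16-bit color buffers
    (front and back) plus one 16-bit Z-buffer.
    """
    bytes_per_buffer = width * height * 2  # 16 bits per pixel
    return total_bytes - 3 * bytes_per_buffer


print(memory_bandwidth_bytes_per_s(128, 100_000_000))  # 1600000000
for w, h in [(640, 480), (800, 600), (960, 720), (1024, 768)]:
    # A negative budget means the buffers alone exceed the memory pool.
    print(w, h, texture_budget_bytes(w, h))
```

Under this assumed layout, 960×720 leaves only 47,104 bytes free in local memory, and 1024×768 does not fit at all, which is consistent with 960×720 being the highest 3D resolution listed.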

RIVA 128 was one of the early AGP 2X parts, giving it some more marketing headroom by being on the forefront of interface technology. The graphics processor was built around Intel's AGP specification targeting the Intel 440LX chipset for the Pentium II. Nvidia designed the RIVA 128 with a maximum memory capacity of 4 MiB because, at the time, this was the cost-optimal approach for a consumer 3D accelerator.[4] This was the case partly because of the chip's capability to store textures in off-screen system RAM in both PCI and AGP configurations.[4]

In early 1998, Nvidia released a refreshed version called RIVA 128 ZX. This refreshed design of NV3 increased memory support to 8 MiB and increased RAMDAC frequency to 250 MHz. These additions allowed RIVA 128 ZX to support higher resolutions and refresh rates.[5] The next major chip from Nvidia would be the RIVA TNT.
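The link between RAMDAC frequency and achievable refresh rate can be estimated roughly. The sketch below assumes that a display mode needs a pixel clock of about width × height × refresh rate × a blanking factor, and it uses an assumed blanking factor of 1.4 that does not come from the source; real limits depend on the exact monitor timings.

```python
def max_refresh_hz(ramdac_hz: float, width: int, height: int,
                   blanking_factor: float = 1.4) -> float:
    """Rough upper bound on refresh rate for a given RAMDAC pixel clock.

    blanking_factor is an assumed allowance for horizontal and vertical
    blanking intervals; it is not a figure from the source.
    """
    return ramdac_hz / (width * height * blanking_factor)


for label, clock in [("RIVA 128 (206 MHz)", 206e6), ("RIVA 128 ZX (250 MHz)", 250e6)]:
    estimate = max_refresh_hz(clock, 1600, 1200)
    print(f"{label}: about {estimate:.0f} Hz at 1600x1200")
```

Whatever blanking factor is assumed, the estimate scales linearly with RAMDAC frequency, so the ZX's 250 MHz part allows roughly 21% higher refresh rates than the original 206 MHz part at any given resolution.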

Image Quality

At the time of RIVA 128's release, 3Dfx Voodoo Graphics had firmly established itself as the 3D hardware benchmark against which all newcomers were compared. Voodoo was the first 3D game accelerator to offer exceptional performance and quality in emerging PC 3D gaming. RIVA 128 was scorned for its lower quality rendering (compared to Voodoo) and rendering errors.

With initial drivers, RIVA 128 used per-polygon mipmapping instead of the much higher quality, but more demanding, per-pixel variety. This caused the different texture detail levels to "pop" into place as the player moved through a game and approached each polygon, instead of allowing a seamless, gradual per-pixel transition. Nvidia eventually released drivers which allowed a per-pixel mode. Another issue with the card's texturing was its use of automated mipmap generation. While this improves visual quality and performance in games without mipmaps, it also caused unforeseen problems because it forced games to render in a way that they were not programmed for.
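The difference between the two mipmapping modes can be illustrated with a small sketch. It assumes the common rule that the mip level is the base-2 logarithm of the number of texels covered by one screen pixel; for the per-polygon case it assumes a single level chosen from the polygon's mean footprint. The source does not describe how the hardware or drivers actually chose levels, so both rules and all names here are illustrative.

```python
import math


def mip_level(texels_per_pixel: float, max_level: int = 8) -> int:
    """Nearest mip level for a given texel footprint (level 0 is full detail)."""
    if texels_per_pixel <= 1.0:
        return 0
    return min(max_level, math.floor(math.log2(texels_per_pixel) + 0.5))


def per_pixel_levels(footprints: list[float]) -> list[int]:
    """Each pixel picks its own level from its own footprint."""
    return [mip_level(f) for f in footprints]


def per_polygon_levels(footprints: list[float]) -> list[int]:
    """One level for the whole polygon (assumed: from the mean footprint)."""
    level = mip_level(sum(footprints) / len(footprints))
    return [level] * len(footprints)


# Footprints along a floor polygon receding from the viewer.
far_view = [1.0, 2.0, 4.0, 8.0]
# The same polygon after the viewer moves closer (footprints halve).
near_view = [f / 2 for f in far_view]

print(per_pixel_levels(far_view))     # [0, 1, 2, 3]
print(per_polygon_levels(far_view))   # [2, 2, 2, 2]
print(per_polygon_levels(near_view))  # [1, 1, 1, 1]
```

In the per-polygon case the whole surface switches from level 2 to level 1 at once as the viewer approaches, which is the "pop" described above; in the per-pixel case the levels vary across the surface, so the change is spread out.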

NV3's bilinear filtering was actually "sharper" than that of 3Dfx Voodoo Graphics. But, while it didn't blur textures as much as Voodoo, it did instead add some light noise to textures, because of a lower-fidelity filtering algorithm. There were also problems with noticeable seams between polygons.

While initial drivers did present these image quality problems, later drivers offered image quality arguably matching that of Voodoo Graphics. In addition, because RIVA 128 can render at resolutions higher than 640×480, the card can offer quality superior to that of Voodoo Graphics, as shown in the above Quake II screenshot. The final drivers released for RIVA 128 support per-pixel mipmapping, full-scene anti-aliasing (super-sampling), and a number of options to fine-tune features in order to optimize quality and performance.
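Super-sampling in general means rendering more samples than there are output pixels and averaging them down. The sketch below shows the idea with a 2×2 box filter on a small grayscale image; the sample count and filter are assumptions chosen for illustration, since the source does not say what pattern the RIVA 128 drivers used.

```python
def downsample_2x2(image: list[list[float]]) -> list[list[float]]:
    """Average each 2x2 block of a grayscale image given as a list of rows."""
    height, width = len(image), len(image[0])
    result = []
    for y in range(0, height - 1, 2):
        row = []
        for x in range(0, width - 1, 2):
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4)
        result.append(row)
    return result


# A hard black/white edge rendered at twice the output resolution.
high_res = [
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [0, 0, 0, 255],
    [0, 0, 255, 255],
]

print(downsample_2x2(high_res))  # [[63.75, 255.0], [0.0, 191.25]]
```

Output pixels that straddle the edge end up with intermediate gray values, which softens the stair-step pattern. The cost is that four times as many pixels must be rendered, so the technique trades fill rate for quality.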

Drivers and APIs

Drivers were, for a significant portion of the card's life, rather rough. Not only were the aforementioned Direct3D issues apparent, but the card lacked good OpenGL support.[2] With RIVA 128, Nvidia began their quest for top-quality OpenGL support, eventually resulting in the board being a capable OpenGL performer. One major disadvantage for Nvidia was that many games during RIVA 128's lifetime used 3Dfx's proprietary Glide API. Legally, only 3Dfx cards could use 3Dfx's Glide API.

Like the competing ATI Rage Pro, RIVA 128 was never able to accelerate the popular Unreal Engine in Direct3D mode due to missing hardware features. It was possible to use the engine's OpenGL renderer instead, but OpenGL support in the original Unreal Engine was quite slow and buggy. Performance in Quake III Arena, a game using an engine more advanced than Unreal Engine 1, was better due to the engine having been designed for OpenGL.

Nvidia's final RIVA 128 drivers for Windows 9x include a full OpenGL driver. However, for this driver to function, Windows must be set with a desktop color depth of 16-bit.

A driver for RIVA 128 is also included in Windows 2000 and XP, but it lacks 3D support. A beta driver with OpenGL support was once leaked by Nvidia but was later canceled, and there is no Windows 2000 driver for RIVA 128 on Nvidia's driver site today. Neither the beta driver nor the drivers that come with Windows 2000/XP support Direct3D.

Performance

At the time, RIVA 128 was one of the first combination 2D/3D cards that could rival Voodoo Graphics. RIVA 128's 2D capability was seen as impressive for its time and was competitive with even high-end 2D-only graphics cards in both quality and performance.[2][3]

Competing chipsets

- Matrox m3D, Mystique 220

- 3Dfx Voodoo Graphics, Voodoo²

- ATI Rage series (Pro was the most recent at the time)

- S3 ViRGE, Savage 3D

- Rendition Vérité V1000 & V2x00

- PowerVR Series 1

References

- [1] RIVA 128 Brochure, Nvidia, accessed October 9, 2007.

- [2] STB VELOCITY 128 REVIEW (PCI), Rage's Hardware, February 7, 1998.

- [3] Review AGP Graphic Cards, Tom's Hardware, October 27, 1997.

- [4] RIVA 128/ZX/TNT FAQ, http://developer.nvidia.com/object/RIVA_128_FAQ.html, Nvidia, accessed October 9, 2007.

- [5] Covey, Alf. STB Velocity 128 vs STB Velocity 128zx What is the difference?, STB Technical Support, June 3, 1998.
