Rice distribution

|

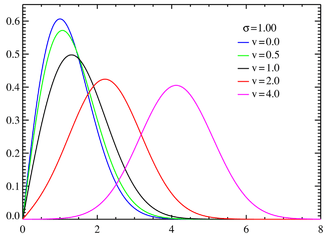

Probability density function

| |

|

Cumulative distribution function

| |

| Parameters |

ν ≥ 0 — distance between the reference point and the center of the bivariate distribution, σ ≥ 0 — scale |

|---|---|

| Support | x ∈ [0, +∞) |

| |

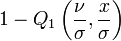

| CDF |

|

| Mean |

|

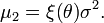

| Variance |

|

| Skewness | (complicated) |

| Ex. kurtosis | (complicated) |

In probability theory, the Rice distribution or Rician distribution is the probability distribution of the magnitude of a circular bivariate normal random variable with potentially non-zero mean. It was named after Stephen O. Rice.

Characterization

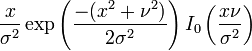

The probability density function is

where I0(z) is the modified Bessel function of the first kind with order zero.

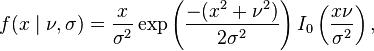

The characteristic function is:[1][2]

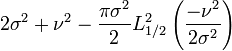

where  is one of Horn's confluent hypergeometric functions with two variables and convergent for all finite values of

is one of Horn's confluent hypergeometric functions with two variables and convergent for all finite values of  and

and  . It is given by:[3][4]

. It is given by:[3][4]

where

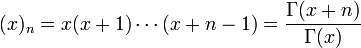

is the rising factorial.

Properties

Moments

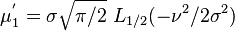

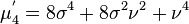

The first few raw moments are:

and, in general, the raw moments are given by

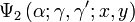

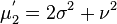

Here Lq(x) denotes a Laguerre polynomial:

where  is the confluent hypergeometric function of the first kind. When k is even, the raw moments become simple polynomials in σ and ν, as in the examples above.

is the confluent hypergeometric function of the first kind. When k is even, the raw moments become simple polynomials in σ and ν, as in the examples above.

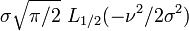

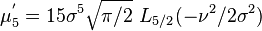

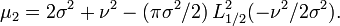

For the case q = 1/2:

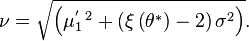

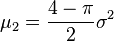

The second central moment, the variance, is

Note that  indicates the square of the Laguerre polynomial

indicates the square of the Laguerre polynomial  , not the generalized Laguerre polynomial

, not the generalized Laguerre polynomial

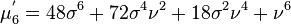

Differential equation

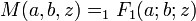

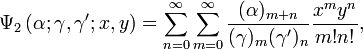

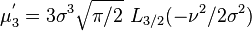

The pdf of the Rice distribution is a solution of the following differential equation:

Related distributions

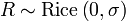

has a Rice distribution if

has a Rice distribution if  where

where  and

and  are statistically independent normal random variables and

are statistically independent normal random variables and  is any real number.

is any real number.

- Another case where

comes from the following steps:

comes from the following steps:

- 1. Generate

having a Poisson distribution with parameter (also mean, for a Poisson)

having a Poisson distribution with parameter (also mean, for a Poisson)

- 2. Generate

having a chi-squared distribution with 2P + 2 degrees of freedom.

having a chi-squared distribution with 2P + 2 degrees of freedom.

- 3. Set

- If

then

then  has a noncentral chi-squared distribution with two degrees of freedom and noncentrality parameter

has a noncentral chi-squared distribution with two degrees of freedom and noncentrality parameter  .

. - If

then

then  has a noncentral chi distribution with two degrees of freedom and noncentrality parameter

has a noncentral chi distribution with two degrees of freedom and noncentrality parameter  .

. - If

then

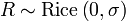

then  , i.e., for the special case of the Rice distribution given by ν = 0, the distribution becomes the Rayleigh distribution, for which the variance is

, i.e., for the special case of the Rice distribution given by ν = 0, the distribution becomes the Rayleigh distribution, for which the variance is  .

. - If

then

then  has an exponential distribution.[5]

has an exponential distribution.[5]

Limiting cases

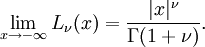

For large values of the argument, the Laguerre polynomial becomes[6]

It is seen that as ν becomes large or σ becomes small the mean becomes ν and the variance becomes σ2.

Parameter estimation (the Koay inversion technique)

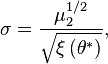

There are three different methods for estimating the parameters of the Rice distribution, (1) method of moments,[7][8][9][10] (2) method of maximum likelihood,[7][8][9] and (3) method of least squares. In the first two methods the interest is in estimating the parameters of the distribution, ν and σ, from a sample of data. This can be done using the method of moments, e.g., the sample mean and the sample standard deviation. The sample mean is an estimate of μ1' and the sample standard deviation is an estimate of μ21/2.

The following is an efficient method, known as the "Koay inversion technique".[11] for solving the estimating equations, based on the sample mean and the sample standard deviation, simultaneously . This inversion technique is also known as the fixed point formula of SNR. Earlier works[7][12] on the method of moments usually use a root-finding method to solve the problem, which is not efficient.

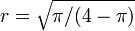

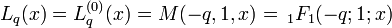

First, the ratio of the sample mean to the sample standard deviation is defined as r, i.e.,  . The fixed point formula of SNR is expressed as

. The fixed point formula of SNR is expressed as

where  is the ratio of the parameters, i.e.,

is the ratio of the parameters, i.e.,  , and

, and  is given by:

is given by:

where  and

and  are modified Bessel functions of the first kind.

are modified Bessel functions of the first kind.

Note that  is a scaling factor of

is a scaling factor of  and is related to

and is related to  by:

by:

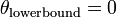

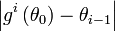

To find the fixed point,  , of

, of  , an initial solution is selected,

, an initial solution is selected,  , that is greater than the lower bound, which is

, that is greater than the lower bound, which is  and occurs when

and occurs when  [11] (Notice that this is the

[11] (Notice that this is the  of a Rayleigh distribution). This provides a starting point for the iteration, which uses functional composition, and this continues until

of a Rayleigh distribution). This provides a starting point for the iteration, which uses functional composition, and this continues until  is less than some small positive value. Here,

is less than some small positive value. Here,  denotes the composition of the same function,

denotes the composition of the same function,  ,

,  times. In practice, we associate the final

times. In practice, we associate the final  for some integer

for some integer  as the fixed point,

as the fixed point,  , i.e.,

, i.e.,  .

.

Once the fixed point is found, the estimates  and

and  are found through the scaling function,

are found through the scaling function,  , as follows:

, as follows:

and

To speed up the iteration even more, one can use the Newton's method of root-finding.[11] This particular approach is highly efficient.

Applications

- The Euclidean norm of a bivariate normally distributed random vector.

- Rician fading

- Effect of sighting error on target shooting.[13]

See also

- Rayleigh distribution

- Stephen O. Rice (1907–1986)

Notes

- ↑ Liu 2007 (in one of Horn's confluent hypergeometric functions with two variables).

- ↑ Annamalai 2000 (in a sum of infinite series).

- ↑ Erdelyi 1953.

- ↑ Srivastava 1985.

- ↑ Richards, M.A., Rice Distribution for RCS, Georgia Institute of Technology (Sep 2006)

- ↑ Abramowitz and Stegun (1968) §13.5.1

- 1 2 3 Talukdar et al. 1991

- 1 2 Bonny et al. 1996

- 1 2 Sijbers et al. 1998

- ↑ den Dekker and Sijbers 2014

- 1 2 3 Koay et al. 2006 (known as the SNR fixed point formula).

- ↑ Abdi 2001

- ↑ "Ballistipedia". Retrieved 4 May 2014.

References

- Abramowitz, M. and Stegun, I. A. (ed.), Handbook of Mathematical Functions, National Bureau of Standards, 1964; reprinted Dover Publications, 1965. ISBN 0-486-61272-4

- Rice, S. O., Mathematical Analysis of Random Noise. Bell System Technical Journal 24 (1945) 46–156.

- I. Soltani Bozchalooi and Ming Liang (20 November 2007). "A smoothness index-guided approach to wavelet parameter selection in signal de-noising and fault detection". Journal of Sound and Vibration 308 (1–2): 253–254. doi:10.1016/j.jsv.2007.07.038.

- Liu, X. and Hanzo, L., A Unified Exact BER Performance Analysis of Asynchronous DS-CDMA Systems Using BPSK Modulation over Fading Channels, IEEE Transactions on Wireless Communications, Volume 6, Issue 10, October 2007, Pages 3504–3509.

- Annamalai, A., Tellambura, C. and Bhargava, V. K., Equal-Gain Diversity Receiver Performance in Wireless Channels, IEEE Transactions on Communications,Volume 48, October 2000, Pages 1732–1745.

- Erdelyi, A., Magnus, W., Oberhettinger, F. and Tricomi, F. G., Higher Transcendental Functions, Volume 1. McGraw-Hill Book Company Inc., 1953.

- Srivastava, H. M. and Karlsson, P. W., Multiple Gaussian Hypergeometric Series. Ellis Horwood Ltd., 1985.

- Sijbers J., den Dekker A. J., Scheunders P. and Van Dyck D., "Maximum Likelihood estimation of Rician distribution parameters", IEEE Transactions on Medical Imaging, Vol. 17, Nr. 3, p. 357–361, (1998)

- den Dekker, A.J., and Sijbers, J (December 2014). "Data distributions in magnetic resonance images: a review". Physica Medica 30 (7): 725–741. doi:10.1016/j.ejmp.2014.05.002.

- Koay, C.G. and Basser, P. J., Analytically exact correction scheme for signal extraction from noisy magnitude MR signals, Journal of Magnetic Resonance, Volume 179, Issue = 2, p. 317–322, (2006)

- Abdi, A., Tepedelenlioglu, C., Kaveh, M., and Giannakis, G. On the estimation of the K parameter for the Rice fading distribution, IEEE Communications Letters, Volume 5, Number 3, March 2001, Pages 92–94.

- Talukdar, K.K., and Lawing, William D. (March 1991). "Estimation of the parameters of the Rice distribution". Journal of the Acoustical Society of America 89 (3): 1193–1197. doi:10.1121/1.400532.

- Bonny,J.M., Renou, J.P., and Zanca, M. (November 1996). "Optimal Measurement of Magnitude and Phase from MR Data". Journal of Magnetic Resonance, Series B 113 (2): 136–144. doi:10.1006/jmrb.1996.0166.

External links

- MATLAB code for Rice/Rician distribution (PDF, mean and variance, and generating random samples)

![\begin{align}

&\chi_X(t\mid\nu,\sigma) \\

& \quad = \exp \left( -\frac{\nu^2}{2\sigma^2} \right) \left[

\Psi_2 \left( 1; 1, \frac{1}{2}; \frac{\nu^2}{2\sigma^2}, -\frac{1}{2} \sigma^2 t^2 \right) \right. \\[8pt]

& \left. {} \qquad + i \sqrt{2} \sigma t

\Psi_2 \left( \frac{3}{2}; 1, \frac{3}{2}; \frac{\nu^2}{2\sigma^2}, -\frac{1}{2} \sigma^2 t^2 \right) \right],

\end{align}](../I/m/fdc8965962275f13b003548bdb8aca79.png)

![\begin{align}

L_{1/2}(x) &=\,_1F_1\left( -\frac{1}{2};1;x\right) \\

&= e^{x/2} \left[\left(1-x\right)I_0\left(\frac{-x}{2}\right) -xI_1\left(\frac{-x}{2}\right) \right].

\end{align}](../I/m/6cb203976080f0189ebb813b6cb8f570.png)

![\left\{\begin{array}{l}

\sigma ^4 x^2 f''(x)+\left(2\sigma^2 x^3-\sigma^4 x\right)

f'(x)+f(x) \left(\sigma ^4-v^2 x^2+x^4\right)=0 \\[10pt]

f(1)=\frac{\exp\left(-\frac{v^2+1}{2\sigma^2}\right) I_0\left(\frac{v}{\sigma^2}\right)}{\sigma^2} \\[10pt]

f'(1)=\frac{\exp\left(-\frac{v^2+1}{2 \sigma ^2}\right)

\left(\left(\sigma^2-1\right) I_0\left(\frac{v}{\sigma ^2}\right)+v

I_1\left(\frac{v}{\sigma^2}\right)\right)}{\sigma^4}

\end{array}\right\}](../I/m/6d31c1dd0ac654d286e429cb856ec6e1.png)

![g(\theta) = \sqrt{ \xi{(\theta)} \left[ 1+r^2\right] - 2},](../I/m/0f0f1943f3ddf223877fdeb1722ec3e0.png)

![\xi{\left(\theta\right)} = 2 + \theta^2 - \frac{\pi}{8} \exp{(-\theta^2/2)}\left[ (2+\theta^2) I_0 (\theta^2/4) + \theta^2 I_1(\theta^{2}/4)\right]^2,](../I/m/aaab0a3fdeff7718ef3e31603dcc4e6a.png)