Probability mass function

In probability theory and statistics, a probability mass function (pmf) is a function that gives the probability that a discrete random variable is exactly equal to some value.[1] The probability mass function is often the primary means of defining a discrete probability distribution, and such functions exist for either scalar or multivariate random variables whose domain is discrete.

A probability mass function differs from a probability density function (pdf) in that the latter is associated with continuous rather than discrete random variables; the values of the latter are not probabilities as such: a pdf must be integrated over an interval to yield a probability.[2]

Formal definition

Suppose that X: S → A (A ⊆ ℝ) is a discrete random variable defined on a sample space S. Then the probability mass function fX: A → [0, 1] for X is defined as[3][4]

$$f_X(x) = \Pr(X = x) = \Pr(\{s \in S : X(s) = x\}).$$

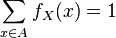

Thinking of probability as mass helps to avoid mistakes, since physical mass is conserved, as is the total probability over all hypothetical outcomes x:

$$\sum_{x \in A} f_X(x) = 1.$$

When there is a natural order among the hypotheses x, it may be convenient to assign numerical values to them (or n-tuples in the case of a discrete multivariate random variable) and to consider also values not in the image of X. That is, fX may be defined for all real numbers, with fX(x) = 0 for all x ∉ X(S), as shown in the figure.
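The definition can be made concrete with a small sketch. The setting is an assumption chosen for illustration and does not come from the cited sources: the sample space is the 36 equally likely outcomes of two fair dice, and X is their sum. The names `sample_space`, `X`, `pmf`, and `image` are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Assumed sample space S: ordered outcomes of two fair six-sided dice,
# each outcome having probability 1/36.
sample_space = list(product(range(1, 7), repeat=2))
prob_of_outcome = Fraction(1, len(sample_space))

def X(s):
    """Random variable X: S -> A, here the sum of the two dice."""
    return s[0] + s[1]

def pmf(x):
    """f_X(x) = Pr({s in S : X(s) = x}); zero for x outside the image X(S)."""
    return sum((prob_of_outcome for s in sample_space if X(s) == x), Fraction(0))

# The image X(S), i.e. the values that X actually takes.
image = sorted({X(s) for s in sample_space})

print(pmf(7))                      # 1/6
print(pmf(13))                     # 0, since 13 is not in X(S)
print(sum(pmf(x) for x in image))  # 1, total probability is conserved
```

Exact rational arithmetic (`Fraction`) is used so that the normalization check is an equality rather than a floating-point approximation.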

Since the image of X is countable, the probability mass function fX(x) is zero for all but a countable number of values of x. The discontinuity of probability mass functions is related to the fact that the cumulative distribution function of a discrete random variable is also discontinuous. Where the cumulative distribution function is differentiable, its derivative is zero, just as the probability mass function is zero at all such points.
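The relationship between the probability mass function and the cumulative distribution function can be sketched numerically. The example assumes a fair six-sided die; the names `pmf`, `cdf`, and `jump` are hypothetical. It suggests that the cumulative distribution function is a step function whose jump at each point equals the probability mass there.

```python
from fractions import Fraction

# Assumed example: PMF of a fair six-sided die, stored as {value: probability}.
pmf = {k: Fraction(1, 6) for k in range(1, 7)}

def cdf(x):
    """F_X(x) = Pr(X <= x): the sum of the PMF over all values not exceeding x."""
    return sum((p for v, p in pmf.items() if v <= x), Fraction(0))

def jump(x):
    """Size of the jump of F_X at x, i.e. F_X(x) minus its left limit at x.

    For a finitely supported distribution the left limit equals the sum of
    the PMF over values strictly less than x.
    """
    left_limit = sum((p for v, p in pmf.items() if v < x), Fraction(0))
    return cdf(x) - left_limit

print(cdf(3))     # 1/2
print(cdf(3.5))   # 1/2, the CDF is flat between support points
print(jump(3))    # 1/6, equal to the probability mass at 3
print(jump(3.5))  # 0, no mass and no discontinuity at 3.5
```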

Measure theoretic formulation

A probability mass function of a discrete random variable X can be seen as a special case of two more general measure theoretic constructions: the distribution of X and the probability density function of X with respect to the counting measure. We make this more precise below.

Suppose that  is a probability space

and that

is a probability space

and that  is a measurable space whose underlying σ-algebra is discrete, so in particular contains singleton sets of B. In this setting,

a random variable

is a measurable space whose underlying σ-algebra is discrete, so in particular contains singleton sets of B. In this setting,

a random variable  is discrete provided its image is a countable set.

The pushforward measure

is discrete provided its image is a countable set.

The pushforward measure  ---called a distribution of X in this context---is a probability measure

on B whose restriction to singleton sets induces a probability mass function

---called a distribution of X in this context---is a probability measure

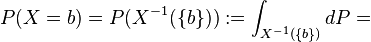

on B whose restriction to singleton sets induces a probability mass function  since

since ](../I/m/ce24f73ae0683c1e79b8764f87f291a4.png) for each b in B.

for each b in B.
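On a finite probability space the pushforward construction reduces to summing probabilities over preimages. The sketch below assumes a four-point space with made-up probabilities and a made-up map X into {0, 1, 2}; `P`, `X`, `preimage`, `pushforward`, and `pmf` are hypothetical names used only for illustration.

```python
from fractions import Fraction

# Assumed probability space: four outcomes with the probabilities below
# (every subset of the outcome set is taken to be measurable).
P = {"a": Fraction(1, 2), "b": Fraction(1, 4), "c": Fraction(1, 8), "d": Fraction(1, 8)}

# Assumed random variable X mapping outcomes into B = {0, 1, 2}.
X = {"a": 0, "b": 1, "c": 1, "d": 2}

def preimage(E):
    """All outcomes that X maps into the subset E of B."""
    return {a for a in P if X[a] in E}

def pushforward(E):
    """Pushforward measure of E: the probability of the preimage of E."""
    return sum((P[a] for a in preimage(E)), Fraction(0))

def pmf(b):
    """Restriction of the pushforward measure to the singleton {b}."""
    return pushforward({b})

print(pmf(1))                  # 3/8, from outcomes "b" and "c"
print(pushforward({0, 2}))     # 5/8
print(pushforward({0, 1, 2}))  # 1, the pushforward is a probability measure
```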

Now suppose that  is a measure space equipped with the counting measure μ. The probability density function f of X with respect to the counting measure, if it exists,

is the Radon-Nikodym derivative of the pushforward measure of X (with respect to the counting measure), so

is a measure space equipped with the counting measure μ. The probability density function f of X with respect to the counting measure, if it exists,

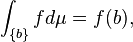

is the Radon-Nikodym derivative of the pushforward measure of X (with respect to the counting measure), so  and f is a function from B to the non-negative reals. As a consequence, for any b in B we have

and f is a function from B to the non-negative reals. As a consequence, for any b in B we have

demonstrating that f is in fact a probability mass function.
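Integration against the counting measure is summation, which is why the density with respect to that measure coincides with the probability mass function. The sketch below illustrates this on a finite set, assuming hypothetical values for f; `integrate` and `distribution` are illustrative names, not library functions.

```python
from fractions import Fraction

# Assumed density f on B = {0, 1, 2} (hypothetical values for illustration).
f = {0: Fraction(1, 2), 1: Fraction(3, 8), 2: Fraction(1, 8)}

def integrate(g, E):
    """Integral of g over the finite set E with respect to the counting
    measure, which is the sum of g(b) over b in E."""
    return sum((g(b) for b in E), Fraction(0))

def distribution(E):
    """Probability of the event {X in E}, recovered by integrating f over E."""
    return integrate(lambda b: f.get(b, Fraction(0)), E)

print(integrate(lambda b: 1, {0, 1, 2}))  # 3, the counting measure of the set
print(distribution({1}))                  # 3/8, equal to f(1)
print(distribution({0, 2}))               # 5/8
print(distribution(set(f)))               # 1
```

Integrating over a singleton returns the value of f at that point, mirroring the chain of equalities in the text.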

Examples

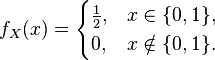

Suppose that S is the sample space of all outcomes of a single toss of a fair coin, and X is the random variable defined on S assigning 0 to "tails" and 1 to "heads". Since the coin is fair, the probability mass function is

$$f_X(x) = \begin{cases} \frac{1}{2}, & x \in \{0, 1\}, \\ 0, & x \notin \{0, 1\}. \end{cases}$$

This is the Bernoulli distribution with parameter 1/2, a special case of the binomial distribution.
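The coin example and its relation to the binomial distribution can be checked with a short sketch. The function names `bernoulli_pmf` and `binomial_pmf` are hypothetical; the binomial formula used is the standard one, with a single trial (n = 1) giving the Bernoulli case.

```python
from fractions import Fraction
from math import comb

def bernoulli_pmf(x, p=Fraction(1, 2)):
    """PMF of a coin toss: p at x = 1 (heads), 1 - p at x = 0 (tails), else 0."""
    if x == 1:
        return p
    if x == 0:
        return 1 - p
    return Fraction(0)

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    if not (isinstance(k, int) and 0 <= k <= n):
        return Fraction(0)
    return comb(n, k) * p**k * (1 - p) ** (n - k)

half = Fraction(1, 2)
print(bernoulli_pmf(0), bernoulli_pmf(1))  # 1/2 1/2
print(bernoulli_pmf(2))                    # 0
# With a single trial the binomial PMF agrees with the Bernoulli PMF.
print(all(bernoulli_pmf(x) == binomial_pmf(x, 1, half) for x in (0, 1)))  # True
```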

An example of a multivariate discrete distribution, and of its probability mass function, is provided by the multinomial distribution.
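As an illustration of a multivariate probability mass function, the sketch below evaluates the standard multinomial formula, n! / (x1! ... xk!) multiplied by p1^x1 ... pk^xk. The category probabilities (1/2, 1/3, 1/6) and the function name `multinomial_pmf` are assumptions made for the example.

```python
from fractions import Fraction
from itertools import product
from math import factorial

def multinomial_pmf(counts, probs):
    """Probability of observing counts[i] outcomes in category i
    over n = sum(counts) independent trials."""
    n = sum(counts)
    coefficient = factorial(n)
    for c in counts:
        coefficient //= factorial(c)  # exact: each partial quotient is an integer
    result = Fraction(coefficient)
    for c, p in zip(counts, probs):
        result *= Fraction(p) ** c
    return result

# Assumed category probabilities; they must sum to 1.
probs = (Fraction(1, 2), Fraction(1, 3), Fraction(1, 6))

print(multinomial_pmf((1, 1, 1), probs))  # 1/6

# Summing over every count vector with three trials gives total probability 1.
total = sum(
    multinomial_pmf(c, probs)
    for c in product(range(4), repeat=3)
    if sum(c) == 3
)
print(total)  # 1
```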

References

1. Stewart, William J. (2011). Probability, Markov Chains, Queues, and Simulation: The Mathematical Basis of Performance Modeling. Princeton University Press. p. 105. ISBN 978-1-4008-3281-1.

2. Probability Function at MathWorld.

3. Kumar, Dinesh (2006). Reliability & Six Sigma. Birkhäuser. p. 22. ISBN 978-0-387-30255-3.

4. Rao, S.S. (1996). Engineering optimization: theory and practice. John Wiley & Sons. p. 717. ISBN 978-0-471-55034-1.

Further reading

- Johnson, N.L., Kotz, S., Kemp A. (1993) Univariate Discrete Distributions (2nd Edition). Wiley. ISBN 0-471-54897-9 (p 36)
