Poisson summation formula

In mathematics, the Poisson summation formula is an equation that relates the Fourier series coefficients of the periodic summation of a function to values of the function's continuous Fourier transform. Consequently, the periodic summation of a function is completely defined by discrete samples of the original function's Fourier transform. And conversely, the periodic summation of a function's Fourier transform is completely defined by discrete samples of the original function. The Poisson summation formula was discovered by Siméon Denis Poisson and is sometimes called Poisson resummation.

Forms of the equation

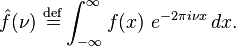

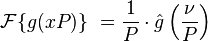

For appropriate functions  the Poisson summation formula may be stated as:

the Poisson summation formula may be stated as:

-

where

where  is the Fourier transform[note 1] of

is the Fourier transform[note 1] of  ; that is

; that is

(Eq.1)

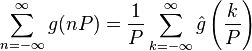
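Eq.1 can be checked numerically. The following is a minimal sketch, assuming the Gaussian test function $f(x) = e^{-\pi a x^2}$, whose transform under the convention above is $\hat f(\nu) = a^{-1/2} e^{-\pi \nu^2 / a}$. The function name `poisson_sides` and the parameter `a` are illustrative choices, not part of the formula.

```python
import math

def poisson_sides(a, terms=50):
    """Return (sum_n f(n), sum_k f_hat(k)) for f(x) = exp(-pi * a * x^2).

    Assumes the convention f_hat(nu) = integral of f(x) exp(-i 2 pi nu x) dx,
    which gives f_hat(nu) = a**-0.5 * exp(-pi * nu^2 / a).
    Both sums are truncated to |n|, |k| <= terms.
    """
    indices = range(-terms, terms + 1)
    lhs = sum(math.exp(-math.pi * a * n * n) for n in indices)
    rhs = sum(math.exp(-math.pi * k * k / a) for k in indices) / math.sqrt(a)
    return lhs, rhs

lhs, rhs = poisson_sides(a=0.5)
print(lhs, rhs)  # the two truncated sums should agree to rounding error
```

Because both Gaussians decay very quickly, the truncation at 50 terms is far more than this particular example needs.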

With the substitution,  and the Fourier transform property,

and the Fourier transform property,  (for P > 0), Eq.1 becomes:

(for P > 0), Eq.1 becomes:

-

(Eq.2)

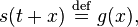

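With another definition, $s(t + x) \stackrel{\text{def}}{=} g(x)$, and the transform property $\mathcal{F}\{s(t + x)\} = \hat s(\nu)\cdot e^{i 2\pi \nu t}$, Eq.2 becomes a periodic summation (with period P) and its equivalent Fourier series:

$$\underbrace{\sum_{n=-\infty}^{\infty} s(t + nP)}_{s_P(t)} = \sum_{k=-\infty}^{\infty} \underbrace{\frac{1}{P}\cdot \hat s\left(\frac{k}{P}\right)}_{S[k]}\ e^{i 2\pi \frac{k}{P} t}. \qquad \text{(Eq.3)}$$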
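The identity between the periodic summation and its Fourier series can be illustrated numerically. This is a sketch under the assumption that $s(t) = e^{-\pi t^2}$, so that $\hat s(\nu) = e^{-\pi \nu^2}$. The helper names `periodic_sum` and `fourier_series`, and the values of `P` and `t`, are hypothetical.

```python
import cmath
import math

def periodic_sum(s, t, P, terms=50):
    """Left-hand side of Eq.3: sum of s(t + n*P) over |n| <= terms."""
    return sum(s(t + n * P) for n in range(-terms, terms + 1))

def fourier_series(s_hat, t, P, terms=50):
    """Right-hand side of Eq.3: sum of S[k] * exp(i 2 pi k t / P)."""
    total = 0j
    for k in range(-terms, terms + 1):
        coefficient = s_hat(k / P) / P  # S[k] = (1/P) * s_hat(k/P)
        total += coefficient * cmath.exp(2j * math.pi * k * t / P)
    return total

def s(t):
    return math.exp(-math.pi * t * t)

def s_hat(nu):
    return math.exp(-math.pi * nu * nu)

P, t = 1.5, 0.4
print(periodic_sum(s, t, P), fourier_series(s_hat, t, P))
```

Evaluating `periodic_sum` at `t` and at `t + P` should give the same value, which reflects the period-P structure of the left-hand side.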

Similarly, the periodic summation of a function's Fourier transform has this Fourier series equivalent:

-

(Eq.4)

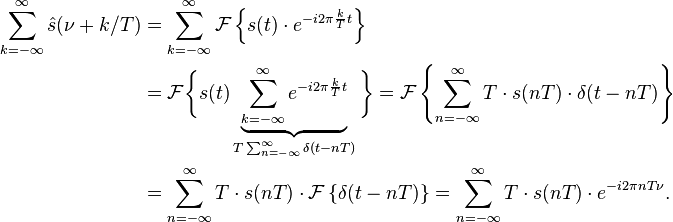

where T represents the time interval at which a function s(t) is sampled, and 1/T is the rate of samples/sec.

Distributional formulation

These equations can be interpreted in the language of distributions (Córdoba 1988; Hörmander 1983, §7.2) for a function  whose derivatives are all rapidly decreasing (see Schwartz function). Using the Dirac comb distribution and its Fourier series:

whose derivatives are all rapidly decreasing (see Schwartz function). Using the Dirac comb distribution and its Fourier series:

-

(Eq.7)

In other words, the periodization of a Dirac delta  ,

resulting in a Dirac comb, corresponds to the discretization of its spectrum which is constantly one.

Hence, this again is a Dirac comb but with reciprocal increments.

,

resulting in a Dirac comb, corresponds to the discretization of its spectrum which is constantly one.

Hence, this again is a Dirac comb but with reciprocal increments.

Eq.1 readily follows:

Similarly:

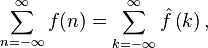

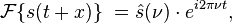

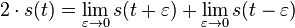

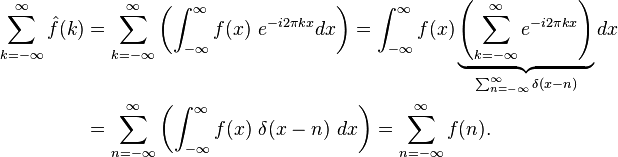

Derivation

We can also prove that Eq.3 holds in the sense that if $s(t) \in L^1(\mathbb{R})$, then the right-hand side is the (possibly divergent) Fourier series of the left-hand side. This proof may be found in either (Pinsky 2002) or (Zygmund 1968). It follows from the dominated convergence theorem that $s_P(t)$ exists and is finite for almost every t, and furthermore that $s_P$ is integrable on the interval [0,P]. The right-hand side of Eq.3 has the form of a Fourier series, so it is sufficient to show that the Fourier series coefficients of $s_P(t)$ are $\frac{1}{P}\hat s\left(\frac{k}{P}\right)$. Proceeding from the definition of the Fourier coefficients we have:

$$\begin{align}
S[k]\ &\stackrel{\text{def}}{=}\ \frac{1}{P}\int_0^{P} s_P(t)\cdot e^{-i 2\pi \frac{k}{P} t}\, dt\\
&=\ \frac{1}{P}\int_0^{P} \left(\sum_{n=-\infty}^{\infty} s(t + nP)\right) \cdot e^{-i 2\pi\frac{k}{P} t}\, dt\\
&=\ \frac{1}{P} \sum_{n=-\infty}^{\infty} \int_0^{P} s(t + nP)\cdot e^{-i 2\pi\frac{k}{P} t}\, dt,
\end{align}$$

where the interchange of summation with integration is once again justified by dominated convergence. With a change of variables (τ = t + nP) this becomes:

$$\begin{align}
S[k] = \frac{1}{P} \sum_{n=-\infty}^{\infty} \int_{nP}^{nP + P} s(\tau) \ e^{-i 2\pi \frac{k}{P} \tau} \ \underbrace{e^{i 2\pi k n}}_{1}\,d\tau
\ =\ \frac{1}{P} \int_{-\infty}^{\infty} s(\tau) \ e^{-i 2\pi \frac{k}{P} \tau}\, d\tau = \frac{1}{P}\cdot \hat s\left(\frac{k}{P}\right)
\end{align}$$

QED.
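The conclusion of the derivation, $S[k] = \frac{1}{P}\hat s(k/P)$, can be checked by integrating the periodized function numerically. This is a sketch only, assuming $s(t) = e^{-\pi t^2}$ (so $\hat s(\nu) = e^{-\pi\nu^2}$) and a midpoint quadrature rule. The names `s_P` and `fourier_coefficient` and the sample counts are illustrative.

```python
import cmath
import math

def s(t):
    return math.exp(-math.pi * t * t)

def s_hat(nu):
    return math.exp(-math.pi * nu * nu)

def s_P(t, P, terms=30):
    """Periodic summation of s with period P, truncated to |n| <= terms."""
    return sum(s(t + n * P) for n in range(-terms, terms + 1))

def fourier_coefficient(k, P, samples=256):
    """Approximate S[k] = (1/P) * integral over [0, P] of s_P(t) exp(-i 2 pi k t / P) dt.

    Uses the midpoint rule on `samples` equal subintervals of [0, P].
    """
    h = P / samples
    total = 0j
    for j in range(samples):
        t = (j + 0.5) * h
        total += s_P(t, P) * cmath.exp(-2j * math.pi * k * t / P)
    return total * h / P

P = 2.0
for k in range(-2, 3):
    print(k, fourier_coefficient(k, P), s_hat(k / P) / P)
```

The midpoint rule is chosen here because it is very accurate for smooth periodic integrands. Any standard quadrature rule could be substituted.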

Applicability

Eq.3 holds provided s(t) is a continuous integrable function which satisfies

$$|s(t)| + |\hat s(t)| \le C\,(1+|t|)^{-1-\delta}$$

for some C, δ > 0 and every t (Grafakos 2004; Stein & Weiss 1971). Note that such s(t) is uniformly continuous; this, together with the decay assumption on s, shows that the series defining $s_P$ converges uniformly to a continuous function. Eq.3 holds in the strong sense that both sides converge uniformly and absolutely to the same limit (Stein & Weiss 1971).

Eq.3 holds in a pointwise sense under the strictly weaker assumption that s has bounded variation and

$$2\cdot s(t) = \lim_{\varepsilon\to 0^{+}} s(t+\varepsilon) + \lim_{\varepsilon\to 0^{+}} s(t-\varepsilon)$$

(Zygmund 1968).

The Fourier series on the right-hand side of Eq.3 is then understood as a (conditionally convergent) limit of symmetric partial sums.

As shown above, Eq.3 holds under the much less restrictive assumption that s(t) is in $L^1(\mathbb{R})$, but then it is necessary to interpret it in the sense that the right-hand side is the (possibly divergent) Fourier series of $s_P(t)$ (Zygmund 1968). In this case, one may extend the region where equality holds by considering summability methods such as Cesàro summability. When convergence is interpreted in this way, Eq.2 holds under the less restrictive conditions that g(x) is integrable and 0 is a point of continuity of $g_P(x)$. However, Eq.2 may fail to hold even when both $g$ and $\hat g$ are integrable and continuous, and the sums converge absolutely (Katznelson 1976).

Applications

Method of images

In partial differential equations, the Poisson summation formula provides a rigorous justification for the fundamental solution of the heat equation with absorbing rectangular boundary by the method of images. Here the heat kernel on R2 is known, and that of a rectangle is determined by taking the periodization. The Poisson summation formula similarly provides a connection between Fourier analysis on Euclidean spaces and on the tori of the corresponding dimensions (Grafakos 2004). In one dimension, the resulting solution is called a theta function.

Sampling

In the statistical study of time-series, if $f$ is a function of time, then looking only at its values at equally spaced points of time is called "sampling." In applications, typically the function $f$ is band-limited, meaning that there is some cutoff frequency $f_o$ such that the Fourier transform is zero for frequencies exceeding the cutoff: $\hat f(\nu) = 0$ for $|\nu| > f_o$. For band-limited functions, choosing the sampling rate $2 f_o$ guarantees that no information is lost: since $\hat f$ can be reconstructed from these sampled values, then, by Fourier inversion, so can $f$. This leads to the Nyquist–Shannon sampling theorem (Pinsky 2002).
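The recovery of the transform from samples can be sketched with Eq.4. The example assumes the band-limited signal $s(t) = \left(\frac{\sin \pi t}{\pi t}\right)^2$, whose transform is the triangle $\hat s(\nu) = \max(0, 1 - |\nu|)$ with cutoff 1, sampled at interval T = 0.5 (rate 2). The function names and the truncation length are hypothetical choices.

```python
import cmath
import math

def s(t):
    """Band-limited test signal (sin(pi t) / (pi t))^2 with cutoff frequency 1."""
    if t == 0.0:
        return 1.0
    x = math.pi * t
    return (math.sin(x) / x) ** 2

def s_hat_exact(nu):
    """Fourier transform of s: the triangle max(0, 1 - |nu|)."""
    return max(0.0, 1.0 - abs(nu))

def s_hat_from_samples(nu, T, terms=5000):
    """Right-hand side of Eq.4, truncated to |n| <= terms.

    Equals the periodic summation of s_hat with period 1/T.
    """
    total = 0j
    for n in range(-terms, terms + 1):
        total += T * s(n * T) * cmath.exp(-2j * math.pi * n * T * nu)
    return total

for nu in (0.0, 0.25, 0.7):
    print(nu, s_hat_from_samples(nu, T=0.5).real, s_hat_exact(nu))
```

With T = 0.5 the shifted copies of the triangle do not overlap, so the samples reproduce $\hat s$ on $|\nu| \le 1$ up to truncation error. With the slower rate T = 1 the copies overlap and add up to the constant 1, so `s_hat_from_samples(0.5, T=1.0)` no longer matches `s_hat_exact(0.5)`. This is aliasing.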

Ewald summation

Computationally, the Poisson summation formula is useful because a slowly converging summation in real space can typically be converted into a quickly converging equivalent summation in Fourier space. (A broad function in real space becomes a narrow function in Fourier space and vice versa.) This is the essential idea behind Ewald summation.
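The difference in convergence speed can be seen on a small example. This sketch assumes the function $f(x) = 1/(1+x^2)$, which is not taken from the text above; its transform is $\hat f(\nu) = \pi e^{-2\pi|\nu|}$, and both sides of Eq.1 sum to $\pi \coth \pi$. It is not an implementation of Ewald summation itself, only of the underlying trade-off.

```python
import math

def real_space_sum(terms):
    """Partial sum of f(n) = 1 / (1 + n^2) over |n| <= terms (slow, tail ~ 2/terms)."""
    return sum(1.0 / (1.0 + n * n) for n in range(-terms, terms + 1))

def fourier_space_sum(terms):
    """Partial sum of f_hat(k) = pi * exp(-2 pi |k|) over |k| <= terms (fast)."""
    return sum(math.pi * math.exp(-2.0 * math.pi * abs(k))
               for k in range(-terms, terms + 1))

exact = math.pi / math.tanh(math.pi)  # closed form pi * coth(pi)

print("real space, 1000 terms per side, error:", abs(real_space_sum(1000) - exact))
print("Fourier space, 3 terms per side, error:", abs(fourier_space_sum(3) - exact))
```

The real-space terms decay only like $1/n^2$, while the Fourier-space terms decay exponentially, so a handful of Fourier terms outperforms a thousand real-space terms.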

Lattice points in a sphere

The Poisson summation formula may be used to derive Landau's asymptotic formula for the number of lattice points in a large Euclidean sphere. It can also be used to show that if an integrable function $f$ and $\hat f$ both have compact support, then $f = 0$ (Pinsky 2002).
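The quantity that such an asymptotic formula describes can be computed directly in small cases. The sketch below counts integer points in a disc (dimension 2, integer radius) and compares the count with the area $\pi R^2$, which is the leading term. Landau's formula is not stated in this section, so the error term is only printed, not compared with any bound. The function name is hypothetical.

```python
import math

def lattice_points_in_disc(radius):
    """Count integer points (x, y) with x^2 + y^2 <= radius^2, for integer radius."""
    count = 0
    for x in range(-radius, radius + 1):
        # Largest y >= 0 with y^2 <= radius^2 - x^2; the column holds 2*y_max + 1 points.
        y_max = math.isqrt(radius * radius - x * x)
        count += 2 * y_max + 1
    return count

for radius in (1, 2, 10, 100):
    n = lattice_points_in_disc(radius)
    print(radius, n, n - math.pi * radius ** 2)
```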

Number theory

In number theory, Poisson summation can also be used to derive a variety of functional equations including the functional equation for the Riemann zeta function.[1]

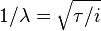

One important such use of Poisson summation concerns theta functions: periodic summations of Gaussians . Put  , for

, for  a complex number in the upper half plane, and define the theta function:

a complex number in the upper half plane, and define the theta function:

The relation between  and

and  turns out to be important for number theory, since this kind of relation is one of the defining properties of a modular form. By choosing

turns out to be important for number theory, since this kind of relation is one of the defining properties of a modular form. By choosing  in the second version of the Poisson summation formula (with

in the second version of the Poisson summation formula (with  ), and using the fact that

), and using the fact that  , one gets immediately

, one gets immediately

by putting  .

.
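The transformation law of the theta function, $\theta(-1/\tau) = \sqrt{\tau/i}\ \theta(\tau)$, can be tested numerically at a sample point. This is a sketch assuming the definition $\theta(\tau) = \sum_n e^{i\pi\tau n^2}$ and the principal branch of the square root. The value of `tau` and the truncation length are arbitrary illustrative choices.

```python
import cmath
import math

def theta(tau, terms=15):
    """Truncated theta series: sum over |n| <= terms of exp(i pi tau n^2)."""
    if tau.imag <= 0:
        raise ValueError("tau must lie in the upper half plane")
    exponent = 1j * math.pi * tau
    return sum(cmath.exp(exponent * n * n) for n in range(-terms, terms + 1))

tau = complex(0.3, 1.2)
lhs = theta(-1 / tau)
rhs = cmath.sqrt(tau / 1j) * theta(tau)  # tau / i has positive real part here
print(lhs, rhs)
```

Since $\tau/i$ has positive real part whenever $\tau$ is in the upper half plane, the principal branch returned by `cmath.sqrt` is the appropriate one.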

It follows from this that $\theta^8$ has a simple transformation property under $\tau \mapsto -1/\tau$, and this can be used to prove Jacobi's formula for the number of different ways to express an integer as the sum of eight perfect squares.
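Jacobi's eight-square formula can be compared with a direct count. The sketch below uses the classical closed form $r_8(n) = 16 \sum_{d \mid n} (-1)^{n+d} d^3$, which is not written out in the text above, and checks it against the coefficients of the eighth power of the series $\sum_n q^{n^2}$. Representations that differ in order or sign are counted separately. The function names are hypothetical.

```python
def squares_representations(count, limit):
    """Return r where r[n] is the number of ways to write n as an ordered sum
    of `count` squares of integers (order and signs distinguished), n <= limit.
    """
    # Coefficients of sum_n q^(n^2): 1 at exponent 0 and 2 at each positive square.
    base = [0] * (limit + 1)
    base[0] = 1
    m = 1
    while m * m <= limit:
        base[m * m] = 2
        m += 1
    result = [1] + [0] * limit  # the constant series 1
    for _ in range(count):
        product = [0] * (limit + 1)
        for i, a in enumerate(result):
            if a == 0:
                continue
            for j in range(limit + 1 - i):
                if base[j]:
                    product[i + j] += a * base[j]
        result = product
    return result

def jacobi_eight_squares(n):
    """Closed form 16 * sum over divisors d of n of (-1)^(n + d) * d^3."""
    return 16 * sum((-1) ** (n + d) * d ** 3
                    for d in range(1, n + 1) if n % d == 0)

r8 = squares_representations(8, 30)
print(all(r8[n] == jacobi_eight_squares(n) for n in range(1, 31)))
```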

Generalizations

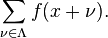

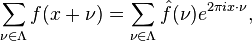

The Poisson summation formula holds in Euclidean space of arbitrary dimension. Let Λ be the lattice in Rd consisting of points with integer coordinates; Λ is the character group, or Pontryagin dual, of Rd. For a function ƒ in L1(Rd), consider the series given by summing the translates of ƒ by elements of Λ:

Theorem For ƒ in L1(Rd), the above series converges pointwise almost everywhere, and thus defines a periodic function Pƒ on Λ. Pƒ lies in L1(Λ) with ||Pƒ||1 ≤ ||ƒ||1. Moreover, for all ν in Λ, Pƒ̂(ν) (Fourier transform on Λ) equals ƒ̂(ν) (Fourier transform on Rd).

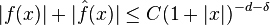

When ƒ is in addition continuous, and both ƒ and ƒ̂ decay sufficiently fast at infinity, then one can "invert" the domain back to Rd and make a stronger statement. More precisely, if

for some C, δ > 0, then

(Stein & Weiss 1971, VII §2)

(Stein & Weiss 1971, VII §2)

where both series converge absolutely and uniformly on Λ. When d = 1 and x = 0, this gives the formula given in the first section above.
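The multidimensional identity $\sum_{\nu} f(x+\nu) = \sum_{\nu} \hat f(\nu)\, e^{i 2\pi x\cdot\nu}$ can be checked in dimension d = 2 on the integer lattice. This is a sketch assuming the Gaussian $f(x) = e^{-\pi a |x|^2}$, whose transform in two dimensions is $\hat f(\xi) = a^{-1} e^{-\pi |\xi|^2 / a}$. The names, the value of `a`, and the truncation radius are illustrative.

```python
import cmath
import math
from itertools import product

A = 0.7  # width parameter of the Gaussian (an arbitrary choice)

def f(p):
    return math.exp(-math.pi * A * (p[0] ** 2 + p[1] ** 2))

def f_hat(q):
    # In d dimensions the prefactor is A**(-d/2); here d = 2.
    return math.exp(-math.pi * (q[0] ** 2 + q[1] ** 2) / A) / A

def periodized(x, radius=8):
    """Sum of f(x + nu) over integer points nu in a square of half-width `radius`."""
    return sum(f((x[0] + n0, x[1] + n1))
               for n0, n1 in product(range(-radius, radius + 1), repeat=2))

def fourier_side(x, radius=8):
    """Sum of f_hat(nu) * exp(i 2 pi x . nu) over the same truncated lattice."""
    total = 0j
    for k0, k1 in product(range(-radius, radius + 1), repeat=2):
        total += f_hat((k0, k1)) * cmath.exp(2j * math.pi * (x[0] * k0 + x[1] * k1))
    return total

x = (0.2, -0.35)
print(periodized(x), fourier_side(x))
```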

More generally, a version of the statement holds if Λ is replaced by a more general lattice in Rd. The dual lattice Λ′ can be defined as a subset of the dual vector space or alternatively by Pontryagin duality. Then the statement is that the sum of delta-functions at each point of Λ, and at each point of Λ′, are again Fourier transforms as distributions, subject to correct normalization.

This is applied in the theory of theta functions, and is a possible method in geometry of numbers. In fact, in more recent work on counting lattice points in regions it is routinely used: summing the indicator function of a region D over lattice points is exactly the question, so that the left-hand side of the summation formula is what is sought and the right-hand side is something that can be attacked by mathematical analysis.

Selberg trace formula

Further generalisation to locally compact abelian groups is required in number theory. In non-commutative harmonic analysis, the idea is taken even further in the Selberg trace formula, but takes on a much deeper character.

A series of mathematicians applying harmonic analysis to number theory, most notably Martin Eichler, Atle Selberg, Robert Langlands, and James Arthur, have generalised the Poisson summation formula to the Fourier transform on non-commutative locally compact reductive algebraic groups $G$ with a discrete subgroup $\Gamma$ such that $G/\Gamma$ has finite volume. For example, $G$ can be the real points of $SL_n$ and $\Gamma$ can be the integral points of $SL_n$. In this setting, $G$ plays the role of the real number line in the classical version of Poisson summation, and $\Gamma$ plays the role of the integers $n$ that appear in the sum. The generalised version of Poisson summation is called the Selberg trace formula, and has played a role in proving many cases of Artin's conjecture and in Wiles's proof of Fermat's Last Theorem. The sum of the values $\hat f(k)$ in Eq.1 becomes a sum over irreducible unitary representations of $G$, and is called "the spectral side," while the sum of the values $f(n)$ becomes a sum over conjugacy classes of $\Gamma$, and is called "the geometric side."

The Poisson summation formula is the archetype for vast developments in harmonic analysis and number theory.

Notes

References

- [1] Edwards, H. M. (1974), Riemann's Zeta Function, Academic Press, pp. 209–211, ISBN 0-486-41740-9.

Further reading

- Benedetto, J.J.; Zimmermann, G. (1997), "Sampling multipliers and the Poisson summation formula", J. Fourier Ana. App. 3 (5).

- Córdoba, A. (1988), "La formule sommatoire de Poisson", C.R. Acad. Sci. Paris, Series I 306: 373–376.

- Gasquet, Claude; Witomski, Patrick (1999), Fourier Analysis and Applications, Springer, pp. 344–352, ISBN 0-387-98485-2.

- Grafakos, Loukas (2004), Classical and Modern Fourier Analysis, Pearson Education, Inc., pp. 253–257, ISBN 0-13-035399-X.

- Higgins, J.R. (1985), "Five short stories about the cardinal series", Bull. Amer. Math. Soc. 12 (1): 45–89, doi:10.1090/S0273-0979-1985-15293-0.

- Hörmander, L. (1983), The analysis of linear partial differential operators I, Grundl. Math. Wissenschaft. 256, Springer, ISBN 3-540-12104-8, MR 0717035.

- Katznelson, Yitzhak (1976), An introduction to harmonic analysis (Second corrected ed.), New York: Dover Publications, Inc, ISBN 0-486-63331-4

- Pinsky, M. (2002), Introduction to Fourier Analysis and Wavelets, Brooks Cole.

- Stein, Elias; Weiss, Guido (1971), Introduction to Fourier Analysis on Euclidean Spaces, Princeton, N.J.: Princeton University Press, ISBN 978-0-691-08078-9.

- Zygmund, Antoni (1968), Trigonometric series (2nd ed.), Cambridge University Press (published 1988), ISBN 978-0-521-35885-9.
