Non-negative least squares

| Part of a series on Statistics |

| Regression analysis |

|---|

|

| Models |

| Estimation |

| Background |

|

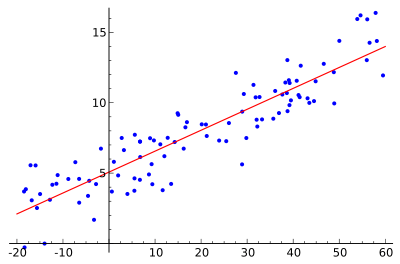

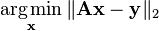

In mathematical optimization, the problem of non-negative least squares (NNLS) is a constrained version of the least squares problem where the coefficients are not allowed to become negative. That is, given a matrix A and a (column) vector of response variables y, the goal is to find[1]

subject to x ≥ 0.

subject to x ≥ 0.

Here, x ≥ 0 means that each component of the vector x should be non-negative and ‖·‖₂ denotes the Euclidean norm.

Non-negative least squares problems turn up as subproblems in matrix decomposition, e.g. in algorithms for PARAFAC[2] and non-negative matrix/tensor factorization.[3][4] The latter can be considered a generalization of NNLS.[1]

Another generalization of NNLS is bounded-variable least squares (BLVS), with simultaneous upper and lower bounds αᵢ ≤ xᵢ ≤ βᵢ.[5]:291[6]

Quadratic programming version

The NNLS problem is equivalent to a quadratic programming problem

,

,

where Q = AᵀA and c = −Aᵀ y. This problem is convex as Q is positive semidefinite and the non-negativity constraints form a convex feasible set.[7]

Algorithms

The first widely used algorithm for solving this problem is an active set method published by Lawson and Hanson in their 1974 book Solving Least Squares Problems.[5]:291 In pseudocode, this algorithm looks as follows:[1][2]

- Inputs:

- a real-valued matrix A of dimension m × n

- a real-valued vector y of dimension m

- a real value t, the tolerance for the stopping criterion

- Initialize:

- Set P = ∅

- Set R = {1, ..., n}

- Set x to an all-zero vector of dimension n

- Set w = Aᵀ(y − Ax)

- Main loop: while R ≠ ∅ and max(w) > t,

- Let j be the index of max(w) in w

- Add j to P

- Remove j from R

- Let AP be A restricted to the variables included in P

- Let SP = ((AP)ᵀ AP)-1 (AP)ᵀy

- While min(SP ≤ 0):

- Let α = min(xi/xi - si) for i in P where si ≤ 0

- Set x to x + α(s - x)

- Move to R all indices j in P such that xj = 0

- Set sP = ((AP)ᵀ AP)-1 (AP)ᵀy

- Set sR to zero

- Set x to s

- Set w to Aᵀ(y − Ax)

This algorithm takes a finite number of steps to reach a solution and smoothly improves its candidate solution as it goes (so it can find good approximate solutions when cut off at a reasonable number of iterations), but is very slow in practice, owing largely to the computation of the pseudoinverse ((Aᴾ)ᵀ Aᴾ)⁻¹.[1] Variants of this algorithm are available in Matlab as the routine lsqnonneg[1] and in SciPy as optimize.nnls.[8]

Many improved algorithms have been suggested since 1974.[1] Fast NNLS (FNNLS) is an optimized version of the Lawson—Hanson algorithm.[2] Other algorithms include variants of Landweber's gradient descent method[9] and coordinate-wise optimization based on the quadratic programming problem above.[7]

See also

References

- 1 2 3 4 5 6 Chen, Donghui; Plemmons, Robert J. (2009). Nonnegativity constraints in numerical analysis. Symposium on the Birth of Numerical Analysis. CiteSeerX: 10

.1 ..1 .157 .9203 - 1 2 3 Bro, Rasmus; De Jong, Sijmen (1997). "A fast non-negativity-constrained least squares algorithm". Journal of Chemometrics 11 (5): 393. doi:10.1002/(SICI)1099-128X(199709/10)11:5<393::AID-CEM483>3.0.CO;2-L.

- ↑ Lin, Chih-Jen (2007). "Projected Gradient Methods for Nonnegative Matrix Factorization" (PDF). Neural Computation 19 (10): 2756–2779. doi:10.1162/neco.2007.19.10.2756. PMID 17716011.

- ↑ Boutsidis, Christos; Drineas, Petros (2009). "Random projections for the nonnegative least-squares problem". Linear Algebra and its Applications 431 (5–7): 760–771. doi:10.1016/j.laa.2009.03.026.

- 1 2 Lawson, Charles L.; Hanson, Richard J. (1995). Solving Least Squares Problems. SIAM.

- ↑ Stark, Philip B.; Parker, Robert L. (1995). "Bounded-variable least-squares: an algorithm and applications" (PDF). Computational Statistics 10: 129–129.

- 1 2 Franc, Vojtěch; Hlaváč, Václav; Navara, Mirko (2005). "Computer Analysis of Images and Patterns". Lecture Notes in Computer Science 3691. p. 407. doi:10.1007/11556121_50. ISBN 978-3-540-28969-2.

|chapter=ignored (help) - ↑ "scipy.optimize.nnls". SciPy v0.13.0 Reference Guide. Retrieved 25 January 2014.

- ↑ Johansson, B. R.; Elfving, T.; Kozlov, V.; Censor, Y.; Forssén, P. E.; Granlund, G. S. (2006). "The application of an oblique-projected Landweber method to a model of supervised learning". Mathematical and Computer Modelling 43 (7–8): 892. doi:10.1016/j.mcm.2005.12.010.