Resultant

In mathematics, the resultant of two polynomials is a polynomial expression of their coefficients, which is equal to zero if and only if the polynomials have a common root (possibly in a field extension), or, equivalently, a common factor (over their field of coefficients). In some older texts, the resultant is also called the eliminant.[1]

The resultant is widely used in number theory, either directly or through the discriminant, which is essentially the resultant of a polynomial and its derivative. The resultant of two polynomials with rational or polynomial coefficients may be computed efficiently on a computer. It is a basic tool of computer algebra, and is a built-in function of most computer algebra systems. It is used, among other things, for cylindrical algebraic decomposition, integration of rational functions and drawing of curves defined by a bivariate polynomial equation.

The resultant of n homogeneous polynomials in n variables or multivariate resultant, sometimes called Macaulay's resultant, is a generalization of the usual resultant introduced by Macaulay. It is, with Gröbner bases, one of the main tools of effective elimination theory (elimination theory on computers).

Definition

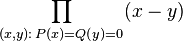

For univariate monic polynomials P and Q over a field k, the resultant res(P,Q) is a polynomial function of their coefficients. It is defined as the product

$$\operatorname{res}(P,Q) = \prod_{(x,y):\,P(x)=0,\,Q(y)=0} (x-y)$$

of the differences of their roots in an algebraic closure of k; in the case of multiple roots, the factors are repeated according to their multiplicities. It follows that the number of factors is always the product of the degrees of P and Q. For non-monic polynomials with leading coefficients p and q, respectively, the above product is multiplied by $p^{\deg Q} q^{\deg P}$.

See the section on computation below, for a proof that res(P,Q) is a polynomial function of their coefficients.
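As a small worked illustration of the definition (the polynomials are chosen here only as an example, and each difference is taken as a root of P minus a root of Q), take P with roots 1 and 2 and Q with root 3:

```latex
P = X^2 - 3X + 2 = (X-1)(X-2), \qquad Q = X - 3,
\qquad
\operatorname{res}(P,Q) = (1-3)(2-3) = 2 .

% Non-monic case: leading coefficients p = 2 and q = 3
\operatorname{res}(2P,\,3Q) = 2^{\deg Q}\, 3^{\deg P}\, \operatorname{res}(P,Q)
                            = 2^{1}\cdot 3^{2}\cdot 2 = 36 .
```

The number of factors in the first product is 2 = deg P · deg Q, as stated above.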

Properties

- The resultant is zero if and only if the two polynomials have a common root in an algebraically closed field containing the coefficients.

- Since the resultant is a polynomial with integer coefficients in terms of the coefficients of P and Q, it follows that:

  - The resultant is well defined for polynomials over any commutative ring.

  - If h is a homomorphism of the ring of the coefficients into another commutative ring, which preserves the degrees of P and Q, then the resultant of the image by h of P and Q is the image by h of the resultant of P and Q.

- If P and Q are two polynomials over a commutative ring R, then there exist two polynomials A and B in the variable X over R such that AP + BQ = res(P, Q) (with the right hand side being interpreted as a constant polynomial). This result is a kind of substitute for Bézout's identity for polynomials over arbitrary commutative rings, where the usual version of Bézout's identity doesn't generally hold.

- The resultant of two polynomials with coefficients in an integral domain is zero if and only if they have a common divisor of positive degree.

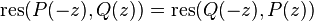

- $\operatorname{res}(P,Q) = (-1)^{\deg P \cdot \deg Q}\,\operatorname{res}(Q,P)$

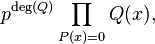

- res(PR,Q)=res(P,Q)res(R,Q)

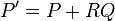

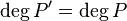

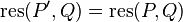

- If $P' = P + RQ$ and $\deg P' = \deg P$, then $\operatorname{res}(P,Q) = \operatorname{res}(P',Q)$.

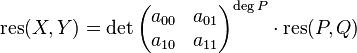

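- If X, Y, P, Q have the same degree and $X = a_{00}P + a_{01}Q$, $Y = a_{10}P + a_{11}Q$, then $\operatorname{res}(X,Y) = \det\begin{pmatrix} a_{00} & a_{01} \\ a_{10} & a_{11} \end{pmatrix}^{\deg P} \operatorname{res}(P,Q)$.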
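Several of the properties above can be checked by hand on a small example. The polynomials below are chosen only for illustration; the computation uses the convention that the resultant is the product of the differences (root of P) − (root of Q), multiplied by the appropriate powers of the leading coefficients.

```latex
P = X^2 + 1, \qquad Q = X - 2 \quad (\text{over } \mathbb{Z}),
\qquad
\operatorname{res}(P,Q) = (i-2)(-i-2) = 5 .

% Bezout-type identity A P + B Q = res(P,Q) with A = 1 and B = -(X+2):
1\cdot(X^2+1) \;-\; (X+2)(X-2) \;=\; 5 .

% Symmetry: deg P * deg Q = 2 is even, so res(Q,P) = res(P,Q):
\operatorname{res}(Q,P) = P(2) = 5 .

% Reduction modulo 5 (a ring homomorphism preserving the degrees):
% the resultant becomes 0, and indeed 2 is a common root in F_5,
% since 2^2 + 1 = 5 \equiv 0 \pmod 5 .
```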

Computation

Since the resultant depends polynomially (with integer coefficients) on the roots of P and Q, and it is invariant with respect to permutations of each set of roots, it must be possible to calculate it using an (integer) polynomial formula on the coefficients of P and Q. See elementary symmetric polynomial for details.

More concretely, the resultant is the determinant of the Sylvester matrix (and of the Bézout matrix) associated to P and Q. This is the standard definition of the resultant over a commutative ring.
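The determinant formulation can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names are chosen here, polynomials are assumed to be lists of integer or rational coefficients with the highest degree first and a non-zero leading coefficient, and the determinant is computed by exact Gaussian elimination over the rationals.

```python
from fractions import Fraction


def sylvester_matrix(p, q):
    """Sylvester matrix of p and q (coefficient lists, highest degree first)."""
    m, n = len(p) - 1, len(q) - 1           # degrees of p and q
    size = m + n
    rows = []
    for i in range(n):                       # n shifted copies of p
        rows.append([0] * i + list(p) + [0] * (size - m - 1 - i))
    for i in range(m):                       # m shifted copies of q
        rows.append([0] * i + list(q) + [0] * (size - n - 1 - i))
    return rows


def determinant(matrix):
    """Exact determinant by Gaussian elimination with rational arithmetic."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        # Find a row with a non-zero entry in this column to use as pivot.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det                       # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def resultant(p, q):
    """Resultant as the determinant of the Sylvester matrix."""
    return determinant(sylvester_matrix(p, q))


# x^2 - 3x + 2 has roots 1 and 2; x - 3 has root 3: (1-3)(2-3) = 2
print(resultant([1, -3, 2], [1, -3]))   # 2
# x^2 - 1 and x - 1 share the root 1, so the resultant vanishes
print(resultant([1, 0, -1], [1, -1]))   # 0
```

Gaussian elimination over the rationals is used only to keep the sketch short; it is not the fraction-free approach discussed later in this section.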

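The above definition of the resultant can be rewritten as

$$\operatorname{res}(P,Q) = p^{\deg Q} \prod_{P(x)=0} Q(x),$$

where p is the leading coefficient of P (so the factor $p^{\deg Q}$ is 1 when P is monic) and the roots x of P are taken with their multiplicities, so it can be expressed polynomially in terms of the coefficients of Q for each fixed P. By the symmetry of the defining formula, the resultant is also a polynomial in the coefficients of P for each fixed Q. It follows that the resultant is a polynomial in the coefficients of P and Q jointly.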

This expression remains unchanged if Q is replaced by the remainder Q mod P of the Euclidean division of Q by P. If we set P' = P mod Q, then this idea can be continued by swapping the roles of P' and Q. However, P' has a set of roots different from that of P. This can be resolved by writing res(P',Q) as a determinant again, where P' has leading zero coefficients. This determinant can now be simplified by iterative expansion with respect to the column in which only the leading coefficient q of Q appears: $\operatorname{res}(P,Q) = \pm\, q^{\deg P-\deg P'} \operatorname{res}(P',Q)$, where the sign depends only on the degrees of the polynomials and on the sign convention used for the resultant. Continuing this procedure yields a variant of Euclid's algorithm.

This procedure needs a number of arithmetic operations on the coefficients that is of the order of the product of the degrees. However, when the coefficients are integers, rational numbers or polynomials, these arithmetic operations imply a number of GCD computations of coefficients which is of the same order, and this makes the algorithm inefficient.
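The Euclidean-style procedure described above can be sketched as follows. This is an illustrative version under stated assumptions, not a tuned implementation: coefficients are rationals, polynomials are coefficient lists with the highest degree first and a non-zero leading coefficient, the function names are chosen here, and the signs follow the convention in which the resultant is the product of the differences (root of P) − (root of Q). With that convention, reducing P modulo Q contributes the sign $(-1)^{(\deg P - \deg P')\deg Q}$, and swapping the arguments contributes $(-1)^{\deg P \deg Q}$.

```python
from fractions import Fraction


def remainder(a, b):
    """Remainder of the Euclidean division of a by b (highest degree first)."""
    a = list(a)
    while len(a) >= len(b):
        factor = a[0] / b[0]
        for i in range(len(b)):
            a[i] -= factor * b[i]
        a = a[1:]                      # the leading term has been cancelled
    while a and a[0] == 0:             # strip remaining leading zeros
        a = a[1:]
    return a                           # empty list means the zero polynomial


def resultant(p, q):
    """Resultant of two non-zero polynomials via repeated division."""
    a = [Fraction(c) for c in p]
    b = [Fraction(c) for c in q]
    result = Fraction(1)
    while True:
        m, n = len(a) - 1, len(b) - 1
        if m < n:
            # res(A, B) = (-1)^(deg A * deg B) res(B, A)
            a, b = b, a
            if (m * n) % 2:
                result = -result
            continue
        if n == 0:
            # The resultant with a non-zero constant b is b^(deg A).
            return result * b[0] ** m
        r = remainder(a, b)
        if not r:
            return Fraction(0)         # b divides a: common factor of positive degree
        k = len(r) - 1
        # res(A, B) = (-1)^((m - k) n) * lc(B)^(m - k) * res(A mod B, B)
        if ((m - k) * n) % 2:
            result = -result
        result *= b[0] ** (m - k)
        a = r


print(resultant([1, -3, 2], [1, -3]))   # 2
print(resultant([2, -2], [3, -6]))      # -6
```

Because every division introduces fractions, the intermediate coefficients can grow quickly, which is the inefficiency mentioned above.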

The subresultant pseudo-remainder sequences were introduced to solve this problem and avoid any fraction and any GCD computation of coefficients. A more efficient algorithm is obtained by using the good behavior of the resultant under a ring homomorphism of the coefficients: to compute a resultant of two polynomials with integer coefficients, one computes their resultants modulo sufficiently many prime numbers and then reconstructs the result with the Chinese remainder theorem.
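The modular approach can be sketched as below for integer polynomials. Several choices here are assumptions made for the illustration and are not taken from the text: the function names, the use of Hadamard's inequality on the Sylvester matrix to decide how many primes are "sufficiently many", the naive prime search, and the sign convention (product of the differences (root of P) − (root of Q)). Primes dividing a leading coefficient are skipped so that reduction preserves the degrees. The modular inverse `pow(x, -1, p)` needs Python 3.8 or later.

```python
from math import isqrt


def is_prime(n):
    """Naive primality test, adequate for the small primes used here."""
    return n >= 2 and all(n % d for d in range(2, isqrt(n) + 1))


def remainder_mod(a, b, p):
    """Remainder of a by b over the field of integers modulo the prime p."""
    a = list(a)
    inv = pow(b[0], -1, p)
    while len(a) >= len(b):
        factor = a[0] * inv % p
        for i in range(len(b)):
            a[i] = (a[i] - factor * b[i]) % p
        a = a[1:]
    while a and a[0] == 0:
        a = a[1:]
    return a


def resultant_mod(f, g, p):
    """Resultant modulo p; assumes the leading coefficients are non-zero mod p."""
    a, b = [c % p for c in f], [c % p for c in g]
    result = 1
    while True:
        m, n = len(a) - 1, len(b) - 1
        if m < n:
            a, b = b, a
            if (m * n) % 2:
                result = -result % p
            continue
        if n == 0:
            return result * pow(b[0], m, p) % p
        r = remainder_mod(a, b, p)
        if not r:
            return 0
        k = len(r) - 1
        if ((m - k) * n) % 2:
            result = -result % p
        result = result * pow(b[0], m - k, p) % p
        a = r


def resultant_crt(f, g):
    """Integer resultant reconstructed from modular images (illustrative)."""
    m, n = len(f) - 1, len(g) - 1
    # Hadamard bound: |res| <= ||f||_2^n * ||g||_2^m
    bound = isqrt(sum(c * c for c in f) ** n * sum(c * c for c in g) ** m) + 1
    modulus, value, p = 1, 0, 2
    while modulus <= 2 * bound:
        if is_prime(p) and f[0] % p and g[0] % p:
            r = resultant_mod(f, g, p)
            # Chinese remainder step: lift value (mod modulus) to mod modulus*p
            t = (r - value) * pow(modulus, -1, p) % p
            value += modulus * t
            modulus *= p
        p += 1
    # Map the representative into the symmetric range around zero.
    return value if value <= modulus // 2 else value - modulus


print(resultant_crt([2, -2], [3, -6]))    # -6
print(resultant_crt([1, 0, 1], [1, -2]))  # 5
```

Practical implementations typically use word-sized primes and faster modular arithmetic; the small primes here only keep the example easy to follow.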

Applications

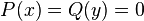

- If x and y are algebraic numbers such that $P(x) = Q(y) = 0$ (with degree of Q = n), we see that $z = x + y$ is a root of the resultant (in x) of $P(x)$ and $Q(z - x)$ and that $t = xy$ is a root of the resultant of $P(x)$ and $x^n Q(t/x)$; combined with the fact that $1/y$ is a root of $X^n Q(1/X)$, this shows that the set of algebraic numbers is a field.

- The discriminant of a polynomial is, up to sign, the quotient by its leading coefficient of the resultant of the polynomial and its derivative; for a polynomial of degree n, the sign is $(-1)^{n(n-1)/2}$.

- Resultants can be used in algebraic geometry to determine intersections. For example, let $f(x,y)=0$ and $g(x,y)=0$ define algebraic curves in $\mathbb{A}^2_k$. If $f$ and $g$ are viewed as polynomials in $x$ with coefficients in $k[y]$, then the resultant of $f$ and $g$ is a polynomial in $y$ whose roots are the $y$-coordinates of the intersection of the curves and of the common asymptotes parallel to the $x$ axis.

- In computer algebra, the resultant is a tool that can be used to analyze modular images of the greatest common divisor of integer polynomials where the coefficients are taken modulo some prime number $p$. The resultant of two polynomials is frequently computed in the Lazard–Rioboo–Trager method of finding the integral of a ratio of polynomials.

- In wavelet theory, the resultant is closely related to the determinant of the transfer matrix of a refinable function.
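The link between the discriminant and the resultant listed above can be checked on the general quadratic. The computation below is a worked sketch that assumes the Sylvester-determinant form of the resultant and the usual sign convention $\operatorname{disc}(P) = (-1)^{n(n-1)/2}\operatorname{res}(P,P')/a$ for a polynomial of degree n with leading coefficient a:

```latex
P = aX^2 + bX + c, \qquad P' = 2aX + b,

\operatorname{res}(P,P')
  = \det\begin{pmatrix} a & b & c \\ 2a & b & 0 \\ 0 & 2a & b \end{pmatrix}
  = ab^2 - 2ab^2 + 4a^2c
  = -a\,(b^2 - 4ac),

\operatorname{disc}(P)
  = (-1)^{2(2-1)/2}\,\frac{\operatorname{res}(P,P')}{a}
  = b^2 - 4ac .
```

This recovers the familiar discriminant of a quadratic, including its sign.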

Generalizations and related concepts

The resultant is sometimes defined for two homogeneous polynomials in two variables, in which case it vanishes when the polynomials have a common non-zero solution, or equivalently when they have a common zero on the projective line. More generally, the multipolynomial resultant,[2] multivariate resultant or Macaulay's resultant of n homogeneous polynomials in n variables is a polynomial in their coefficients that vanishes when they have a common non-zero solution, or equivalently when the n hypersurfaces corresponding to them have a common zero in (n–1)-dimensional projective space. The multivariate resultant is, with Gröbner bases, one of the main tools of effective elimination theory (elimination theory on computers).

Notes

- [1] Salmon 1885, lesson VIII, p. 66.

- [2] Cox, David; Little, John; O'Shea, Donal (2005), Using Algebraic Geometry, Springer Science+Business Media, ISBN 978-0387207339, Chapter 3. Resultants

References

- Gelfand, I. M.; Kapranov, M.M.; Zelevinsky, A.V. (1994), Discriminants, resultants, and multidimensional determinants, Boston: Birkhäuser, ISBN 978-0-8176-3660-9

- Macaulay, F. S. (1902), "Some Formulæ in Elimination", Proc. London Math. Soc. 35: 3–27, doi:10.1112/plms/s1-35.1.3

- Salmon, George (1885) [1859], Lessons introductory to the modern higher algebra (4th ed.), Dublin, Hodges, Figgis, and Co., ISBN 978-0-8284-0150-0
