Master theorem

In the analysis of algorithms, the master theorem provides a solution in asymptotic terms (using Big O notation) for recurrence relations of types that occur in the analysis of many divide and conquer algorithms. It was popularized by the canonical algorithms textbook Introduction to Algorithms by Cormen, Leiserson, Rivest, and Stein, in which it is both introduced and proved. Not all recurrence relations can be solved with the use of the master theorem; its generalizations include the Akra–Bazzi method.

Introduction

Consider a problem that can be solved using a recursive algorithm such as the following:

    procedure T(n : size of problem) defined as:
        if n < 1 then exit
        do work of amount f(n)
        repeat a times
            T(n/b)
        end repeat
    end procedure

In the above algorithm we are dividing the problem into a number of subproblems recursively, each subproblem being of size n/b. This can be visualized as building a call tree with each node of the tree as an instance of one recursive call and its child nodes being instances of subsequent calls. In the above example, each node would have a number of child nodes. Each node does an amount of work that corresponds to the size of the sub problem n passed to that instance of the recursive call and given by  . The total amount of work done by the entire tree is the sum of the work performed by all the nodes in the tree.

. The total amount of work done by the entire tree is the sum of the work performed by all the nodes in the tree.

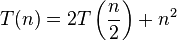

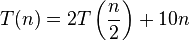

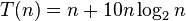

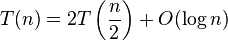

Algorithms such as above can be represented as a recurrence relation  . This recursive relation can be successively substituted into itself and expanded to obtain expression for total amount of work done.[1]

. This recursive relation can be successively substituted into itself and expanded to obtain expression for total amount of work done.[1]

The master theorem gives the running time of such a recursive algorithm in Θ-notation directly, without expanding the recurrence relation above.
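As a rough illustration of the call-tree picture, the sketch below sums the work done at every node for a recurrence of the form T(n) = a T(n/b) + f(n). The function name `total_work`, the use of integer division for n/b, and the rule of doing no work once n < 1 (mirroring the pseudocode) are assumptions made for this example, not part of the theorem.

```python
def total_work(n, a, b, f):
    """Sum f over every node of the call tree of T(n) = a*T(n/b) + f(n).

    Assumes integer sizes with n // b as the subproblem size and no work
    once the size drops below 1, mirroring the pseudocode above.
    """
    if n < 1:
        return 0
    # All a children have the same size, so one recursive call is enough.
    return f(n) + a * total_work(n // b, a, b, f)


# Merge-sort-like recurrence: two half-size subproblems, linear work per call.
for n in (2**4, 2**8, 2**12):
    print(n, total_work(n, 2, 2, lambda m: m))
```

For n a power of two this particular recurrence sums to n(log2 n + 1), which is the Θ(n log n) behaviour that case 2 of the theorem predicts without any expansion.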

Generic form

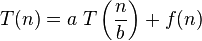

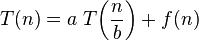

The master theorem concerns recurrence relations of the form:

where

where

In the application to the analysis of a recursive algorithm, the constants and function take on the following significance:

- n is the size of the problem.

- a is the number of subproblems in the recursion.

- n/b is the size of each subproblem. (Here it is assumed that all subproblems are essentially the same size.)

- f(n) is the cost of the work done outside the recursive calls, which includes the cost of dividing the problem and the cost of merging the solutions to the subproblems.

It is possible to determine an asymptotically tight bound in these three cases:

Case 1

Generic form

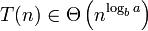

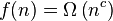

If  where

where  (using big O notation)

(using big O notation)

then:

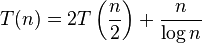

Example

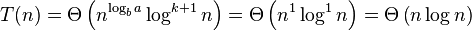

As one can see from the formula above:

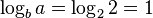

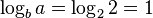

, so

, so , where

, where

Next, we see if we satisfy the case 1 condition:

.

.

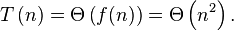

It follows from the first case of the master theorem that

(indeed, the exact solution of the recurrence relation is  , assuming

, assuming  ).

).
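For the recurrence T(n) = 8T(n/2) + 1000n² with T(1) = 1, the closed form 1001n³ − 1000n² can be checked directly against the definition. The following sketch assumes n is a power of two; the helper names are made up for this illustration.

```python
def t_recursive(n):
    """Evaluate T(n) = 8*T(n/2) + 1000*n^2 with T(1) = 1, n a power of two."""
    if n == 1:
        return 1
    return 8 * t_recursive(n // 2) + 1000 * n * n


def t_closed_form(n):
    """Candidate exact solution 1001*n^3 - 1000*n^2."""
    return 1001 * n**3 - 1000 * n**2


# Compare both definitions on n = 1, 2, 4, ..., 1024.
for k in range(11):
    n = 2**k
    assert t_recursive(n) == t_closed_form(n)
print("closed form matches for n = 1, 2, 4, ..., 1024")
```

The cubic term dominates the closed form, in line with the Θ(n³) bound from case 1.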

Case 2

Generic form

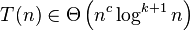

If it is true, for some constant k ≥ 0, that:

where

where

then:

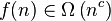

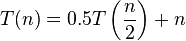

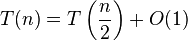

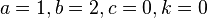

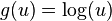

Example

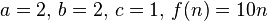

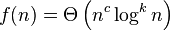

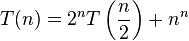

As we can see in the formula above the variables get the following values:

where

where

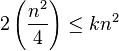

Next, we see if we satisfy the case 2 condition:

, and therefore, yes,

, and therefore, yes,

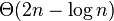

So it follows from the second case of the master theorem:

Thus the given recurrence relation T(n) was in Θ(n log n).

(This result is confirmed by the exact solution of the recurrence relation, which is  , assuming

, assuming  .)

.)

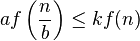
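For readers who want to see where such a closed form comes from, the recurrence T(n) = 2T(n/2) + 10n can be unrolled by repeated substitution. The derivation below is a sketch that assumes n is a power of two and T(1) = 1; the index j counts how many times the recurrence has been substituted into itself.

```latex
\begin{aligned}
T(n) &= 2\,T\!\left(\tfrac{n}{2}\right) + 10n \\
     &= 4\,T\!\left(\tfrac{n}{4}\right) + 10n + 10n \\
     &= 2^{j}\,T\!\left(\tfrac{n}{2^{j}}\right) + 10\,j\,n \\
     &= n\,T(1) + 10\,n\log_2 n \qquad (j = \log_2 n) \\
     &= n + 10\,n\log_2 n = \Theta(n \log n)
\end{aligned}
```

Every level of the call tree contributes the same 10n, and there are log₂ n such levels, which is where the extra logarithmic factor in case 2 comes from.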

Case 3

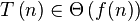

Generic form

If it is true that:

where

where

and if it is also true that:

for some constant

for some constant  and sufficiently large

and sufficiently large  (often called the regularity condition)

(often called the regularity condition)

then:

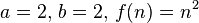

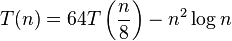

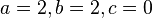

Example

As we can see in the formula above the variables get the following values:

, where

, where

Next, we see if we satisfy the case 3 condition:

, and therefore, yes,

, and therefore, yes,

The regularity condition also holds:

, choosing

, choosing

So it follows from the third case of the master theorem:

Thus the given recurrence relation T(n) was in Θ(n2), that complies with the f (n) of the original formula.

(This result is confirmed by the exact solution of the recurrence relation, which is  , assuming

, assuming  .)

.)

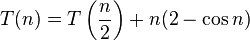
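The dominance of the root in case 3 can be made concrete by summing the work level by level for T(n) = 2T(n/2) + n². The derivation below is a sketch assuming n is a power of two and T(1) = 1; level j of the call tree has 2^j nodes, each working on a subproblem of size n/2^j.

```latex
\begin{aligned}
T(n) &= \sum_{j=0}^{\log_2 n - 1} 2^{j}\left(\frac{n}{2^{j}}\right)^{2} + n\,T(1) \\
     &= n^{2}\sum_{j=0}^{\log_2 n - 1} \frac{1}{2^{j}} + n \\
     &= 2n^{2}\left(1 - \frac{1}{n}\right) + n \\
     &= 2n^{2} - n = \Theta(n^{2})
\end{aligned}
```

The per-level work shrinks geometrically, so the total is within a constant factor of the work f(n) = n² done at the root.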

Inadmissible equations

The following equations cannot be solved using the master theorem:[2]

- a is not a constant; the number of subproblems should be fixed

- non-polynomial difference between f(n) and

(see below)

(see below)

- non-polynomial difference between f(n) and

- a<1 cannot have less than one sub problem

- f(n) which is the combination time is not positive

- case 3 but regularity violation.

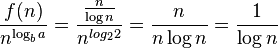

In the second inadmissible example above, the difference between  and

and  can be expressed with the ratio

can be expressed with the ratio  . It is clear that

. It is clear that  for any constant

for any constant  . Therefore, the difference is not polynomial and the Master Theorem does not apply.

. Therefore, the difference is not polynomial and the Master Theorem does not apply.
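The regularity violation named in the last list item can also be probed numerically. The sketch below assumes the recurrence in question is T(n) = T(n/2) + n(2 − cos n), so that a = 1, b = 2 and f(n) = n(2 − cos n); the function names are hypothetical and chosen for this example only.

```python
import math


def f(n):
    """Driving function of the assumed example T(n) = T(n/2) + n*(2 - cos n)."""
    return n * (2 - math.cos(n))


def regularity_ratio(n, a=1, b=2):
    """Return a*f(n/b) / f(n); regularity needs this to stay <= some k < 1."""
    return a * f(n / b) / f(n)


# At n = 2*pi*m with m odd, cos(n) = 1 and cos(n/2) = -1, so the ratio is 3/2.
for m in (1, 101, 10001):
    n = 2 * math.pi * m
    print(m, round(regularity_ratio(n), 6))
```

Since the ratio reaches 3/2 for arbitrarily large n, no constant k < 1 can satisfy a f(n/b) ≤ k f(n) for all sufficiently large n, even though f(n) = Ω(n) grows polynomially faster than n^(log_b a) = 1.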

Application to common algorithms

| Algorithm | Recurrence Relationship | Run time | Comment |

|---|---|---|---|

| Binary search |  |

|

Apply Master theorem case  , where , where  [3] [3] |

| Binary tree traversal |  |

|

Apply Master theorem case  where where  [3] [3] |

| Optimal Sorted Matrix Search |  |

|

Apply the Akra–Bazzi theorem for  and and  to get to get  |

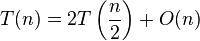

| Merge Sort |  |

|

Apply Master theorem case  , where , where  |
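The case analysis used in the table can be mechanized when f(n) has the form Θ(n^c log^k n) with constants c ≥ 0 and k ≥ 0. The function below is a sketch under that assumption; its name, its return format, and the floating-point tolerance are choices made for this example. For driving functions of this shape the regularity condition of case 3 holds automatically (a f(n/b) ≤ (a/b^c) f(n) with a/b^c < 1), so it is not checked separately.

```python
import math


def master_theorem(a, b, c, k=0):
    """Classify T(n) = a*T(n/b) + Theta(n^c * log^k n).

    Returns (case number, description of the Theta bound).
    Assumes a >= 1, b > 1, c >= 0 and k >= 0.
    """
    if a < 1 or b <= 1 or c < 0 or k < 0:
        raise ValueError("requires a >= 1, b > 1, c >= 0, k >= 0")
    crit = math.log(a) / math.log(b)  # critical exponent log_b(a)
    if math.isclose(c, crit, abs_tol=1e-12):
        # Case 2: f(n) matches n^(log_b a) up to log factors.
        return 2, f"Theta(n^{c:g} * log^{k + 1:g} n)"
    if c < crit:
        # Case 1: the leaves of the call tree dominate.
        return 1, f"Theta(n^{crit:g})"
    # Case 3: the root dominates, so T(n) = Theta(f(n)).
    return 3, f"Theta(n^{c:g} * log^{k:g} n)"


print(master_theorem(1, 2, 0))  # binary search
print(master_theorem(2, 2, 0))  # binary tree traversal
print(master_theorem(2, 2, 1))  # merge sort
```

Comparing c with log_b a through `math.isclose` is a pragmatic choice: the logarithm is computed in floating point, so an exact equality test could misclassify a case 2 recurrence.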

Notes

- [1] Duke University, "Big-Oh for Recursive Functions: Recurrence Relations", http://www.cs.duke.edu/~ola/ap/recurrence.html

- [2] Massachusetts Institute of Technology (MIT), "Master Theorem: Practice Problems and Solutions", http://www.csail.mit.edu/~thies/6.046-web/master.pdf

- [3] Dartmouth College, http://www.math.dartmouth.edu/archive/m19w03/public_html/Section5-2.pdf

References

- Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. Introduction to Algorithms, Second Edition. MIT Press and McGraw–Hill, 2001. ISBN 0-262-03293-7. Sections 4.3 (The master method) and 4.4 (Proof of the master theorem), pp. 73–90.

- Michael T. Goodrich and Roberto Tamassia. Algorithm Design: Foundation, Analysis, and Internet Examples. Wiley, 2002. ISBN 0-471-38365-1. The master theorem (including the version of Case 2 included here, which is stronger than the one from CLRS) is on pp. 268–270.