Jacobi's formula

In matrix calculus, Jacobi's formula expresses the derivative of the determinant of a matrix A in terms of the adjugate of A and the derivative of A.[1] If A is a differentiable map from the real numbers to n × n matrices,

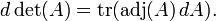

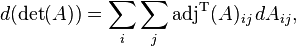

Equivalently, if dA stands for the differential of A, the formula is

It is named after the mathematician C.G.J. Jacobi.

Derivation

We first prove a preliminary lemma:

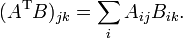

Lemma. Let A and B be a pair of square matrices of the same dimension n. Then

Proof. The product AB of the pair of matrices has components

Replacing the matrix A by its transpose AT is equivalent to permuting the indices of its components:

The result follows by taking the trace of both sides:

Theorem. (Jacobi's formula) For any differentiable map A from the real numbers to n × n matrices,

Proof. Laplace's formula for the determinant of a matrix A can be stated as

Notice that the summation is performed over some arbitrary row i of the matrix.

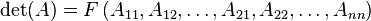

The determinant of A can be considered to be a function of the elements of A:

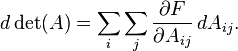

so that, by the chain rule, its differential is

This summation is performed over all n×n elements of the matrix.

To find ∂F/∂Aij consider that on the right hand side of Laplace's formula, the index i can be chosen at will. (In order to optimize calculations: Any other choice would eventually yield the same result, but it could be much harder). In particular, it can be chosen to match the first index of ∂ / ∂Aij:

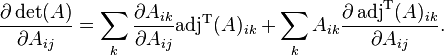

Thus, by the product rule,

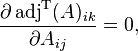

Now, if an element of a matrix Aij and a cofactor adjT(A)ik of element Aik lie on the same row (or column), then the cofactor will not be a function of Aij, because the cofactor of Aik is expressed in terms of elements not in its own row (nor column). Thus,

so

All the elements of A are independent of each other, i.e.

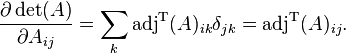

where δ is the Kronecker delta, so

Therefore,

and applying the Lemma yields

Corollary

For any invertible matrix A, the inverse A−1 is related to the adjugate by A−1 = (det A)−1 adj A. It follows that if A(t) is invertible for all t, then

which can be alternatively written as

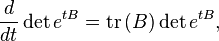

Furthermore, taking A(t ) = exp(tB ) in the first equation, we obtain

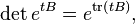

solved by

a useful relation connecting the trace to the determinant of the associated matrix exponential.

Notes

- ↑ Magnus & Neudecker (1999), Part Three, Section 8.3

References

- Magnus, Jan R.; Neudecker, Heinz (1999), Matrix Differential Calculus with Applications in Statistics and Econometrics, Wiley, ISBN 0-471-98633-X

- Bellmann, Richard (1987), Introduction to Matrix Analysis, SIAM, ISBN 0898713994