Jackknife resampling

In statistics, the jackknife is a resampling technique that is especially useful for estimating the variance and bias of an estimator. It predates other common resampling methods such as the bootstrap. The jackknife estimate of a parameter is found by systematically leaving out each observation from a dataset, calculating the estimate on the remaining data, and then averaging these calculations. Given a sample of size $n$, the jackknife estimate is found by aggregating the $n$ estimates, each computed from a subsample of size $n-1$.

The jackknife technique was developed by Maurice Quenouille (1949, 1956). John Tukey (1958) expanded on the technique and proposed the name "jackknife" since, like a Boy Scout's jackknife, it is a "rough and ready" tool that can solve a variety of problems even though specific problems may be more efficiently solved with a purpose-designed tool.[1]

The jackknife is a linear approximation of the bootstrap.[1]

Estimation

The jackknife estimate of a previously unknown parameter (say $\theta$) is found by re-estimating it on each subsample that omits the $i$th observation. This gives $n$ leave-one-out estimates $\hat{\theta}_{(i)}$, for $i = 1, \ldots, n$.[2]
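A small worked example may make the leave-one-out step concrete. It assumes, purely for illustration, the sample $x = (2, 4, 6, 8)$ with the sample mean as the estimator. Writing $\hat{\theta}_{(i)}$ for the mean with the $i$th value left out, each estimate is the mean of the remaining three values, and $\hat{\theta}_{(\cdot)}$ is their average:

```latex
\begin{aligned}
\hat{\theta}_{(1)} &= \tfrac{4+6+8}{3} = 6, &
\hat{\theta}_{(2)} &= \tfrac{2+6+8}{3} = \tfrac{16}{3}, \\
\hat{\theta}_{(3)} &= \tfrac{2+4+8}{3} = \tfrac{14}{3}, &
\hat{\theta}_{(4)} &= \tfrac{2+4+6}{3} = 4, \\
\hat{\theta}_{(\cdot)} &= \tfrac{1}{4}\left(6 + \tfrac{16}{3} + \tfrac{14}{3} + 4\right) = 5.
\end{aligned}
```

For the mean, the average of the leave-one-out estimates always equals the full-sample mean (here 5). For other estimators the two generally differ, and that difference is what the bias estimate below exploits.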

Variance estimation

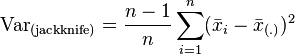

An estimate of the variance of an estimator can be calculated using the jackknife technique:

$$\widehat{\operatorname{Var}}_{\text{jack}} = \frac{n-1}{n} \sum_{i=1}^{n} \left(\hat{\theta}_{(i)} - \hat{\theta}_{(\cdot)}\right)^2$$

where $\hat{\theta}_{(i)}$ is the parameter estimate based on leaving out the $i$th observation, and $\hat{\theta}_{(\cdot)} = \frac{1}{n}\sum_{i=1}^{n} \hat{\theta}_{(i)}$ is the mean of these leave-one-out estimates over all of the subsamples.[3]
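The variance formula can be sketched in Python as follows. This is a minimal illustration, not a reference implementation. The helper names `leave_one_out` and `jackknife_variance` are hypothetical, and the estimator is assumed to be any function that maps a list of numbers to a single number (here the standard-library `statistics.mean`).

```python
import math
from statistics import mean, variance
from typing import Callable, List, Sequence

# Assumed estimator type: any function from a list of floats to a float.
Estimator = Callable[[List[float]], float]


def leave_one_out(data: Sequence[float], estimator: Estimator) -> List[float]:
    """Hypothetical helper: the n estimates, each with observation i removed."""
    values = list(data)
    return [estimator(values[:i] + values[i + 1:]) for i in range(len(values))]


def jackknife_variance(data: Sequence[float], estimator: Estimator) -> float:
    """Jackknife variance: (n - 1) / n * sum((theta_i - theta_dot) ** 2)."""
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    estimates = leave_one_out(data, estimator)
    theta_dot = sum(estimates) / n  # mean of the leave-one-out estimates
    return (n - 1) / n * sum((t - theta_dot) ** 2 for t in estimates)


sample = [2.0, 4.0, 6.0, 8.0]
jk = jackknife_variance(sample, mean)
print(jk)  # approximately 5/3
# For the sample mean, the jackknife variance should match s^2 / n.
print(math.isclose(jk, variance(sample) / len(sample)))  # True
```

For the sample mean, the jackknife variance reduces algebraically to $s^2/n$, the usual estimate of the variance of the mean, which is what the final check compares against. The sketch recomputes the estimator $n$ times. That is cheap for simple statistics but can matter when the estimator itself is expensive.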

Bias estimation and correction

The jackknife technique can be used to estimate the bias of an estimator calculated over the entire sample. Let $\hat{\theta}$ be the estimate of the parameter of interest computed from all $n$ observations. Here,

$$\hat{\theta}_{(\cdot)} = \frac{1}{n} \sum_{i=1}^{n} \hat{\theta}_{(i)}$$

where $\hat{\theta}_{(i)}$ is the estimate of the parameter of interest based on the sample with the $i$th observation omitted (the jackknife, or leave-one-out, estimate), and $\hat{\theta}_{(\cdot)}$ is the mean of these jackknife estimates.

The parameter bias estimate is

$$\widehat{\operatorname{Bias}}\left(\hat{\theta}\right) = (n-1)\left(\hat{\theta}_{(\cdot)} - \hat{\theta}\right)$$

and subtracting it from $\hat{\theta}$ gives the bias-corrected jackknife estimate

$$\hat{\theta}_{\text{jack}} = \hat{\theta} - \widehat{\operatorname{Bias}}\left(\hat{\theta}\right) = n\hat{\theta} - (n-1)\hat{\theta}_{(\cdot)}.$$

This removes the bias exactly in the special case where the bias has the form $a/n$ for some constant $a$, and in other cases reduces a bias of order $O(n^{-1})$ to order $O(n^{-2})$.[1]

This provides an estimated correction of bias due to the estimation method. The jackknife does not correct for a biased sample.
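A minimal Python sketch of the bias estimate and the bias-corrected estimate follows. The function names are hypothetical. The example estimator is an assumption chosen for illustration: the plug-in variance `statistics.pvariance`, which divides by $n$ and therefore has a bias of exactly $-\sigma^2/n$.

```python
import math
from statistics import mean, pvariance, variance
from typing import Callable, List, Sequence

# Assumed estimator type: any function from a list of floats to a float.
Estimator = Callable[[List[float]], float]


def leave_one_out(data: Sequence[float], estimator: Estimator) -> List[float]:
    """Hypothetical helper: the n estimates, each with observation i removed."""
    values = list(data)
    return [estimator(values[:i] + values[i + 1:]) for i in range(len(values))]


def jackknife_bias(data: Sequence[float], estimator: Estimator) -> float:
    """Bias estimate: (n - 1) * (theta_dot - theta_hat)."""
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    theta_hat = estimator(list(data))                   # full-sample estimate
    theta_dot = mean(leave_one_out(data, estimator))  # mean of leave-one-out estimates
    return (n - 1) * (theta_dot - theta_hat)


def jackknife_corrected(data: Sequence[float], estimator: Estimator) -> float:
    """Bias-corrected estimate: theta_hat - bias = n * theta_hat - (n - 1) * theta_dot."""
    return estimator(list(data)) - jackknife_bias(data, estimator)


sample = [2.0, 4.0, 6.0, 8.0]
print(jackknife_bias(sample, pvariance))  # approximately -5/3
corrected = jackknife_corrected(sample, pvariance)
# The correction should recover the unbiased sample variance (divides by n - 1).
print(math.isclose(corrected, variance(sample)))  # True
```

Because the bias of the plug-in variance is exactly of order $1/n$, the jackknife correction recovers the unbiased sample variance (`statistics.variance`, which divides by $n-1$). For the sample mean, which is already unbiased, the estimated bias is zero and the corrected estimate equals the original one.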

Notes

- [1] Cameron & Trivedi 2005, p. 375.

- [2] Efron 1982, p. 2.

- [3] Efron 1982, p. 14.

References

- Cameron, Adrian; Trivedi, Pravin K. (2005). Microeconometrics: Methods and Applications. Cambridge; New York: Cambridge University Press. ISBN 9780521848053.

- Efron, B.; Stein, C. (May 1981). "The Jackknife Estimate of Variance". The Annals of Statistics 9 (3): 586–596. doi:10.1214/aos/1176345462. JSTOR 2240822.

- Efron, Bradley (1982). The jackknife, the bootstrap, and other resampling plans. Philadelphia, Pa: Society for Industrial and Applied Mathematics. ISBN 9781611970319.

- Quenouille, M. H. (September 1949). "Problems in Plane Sampling". The Annals of Mathematical Statistics 20 (3): 355–375. doi:10.1214/aoms/1177729989. JSTOR 2236533.

- Quenouille, M. H. (1956). "Notes on Bias in Estimation". Biometrika 43 (3–4): 353–360. doi:10.1093/biomet/43.3-4.353. JSTOR 2332914.

- Tukey, J. W. (1958). "Bias and confidence in not quite large samples". The Annals of Mathematical Statistics 29: 614–623. doi:10.1214/aoms/1177706647.
