Interval arithmetic

Interval arithmetic, interval mathematics, interval analysis, or interval computation, is a method developed by mathematicians since the 1950s and 1960s, as an approach to putting bounds on rounding errors and measurement errors in mathematical computation and thus developing numerical methods that yield reliable results. Very simply put, it represents each value as a range of possibilities. For example, instead of estimating the height of someone using standard arithmetic as 2.0 metres, using interval arithmetic we might be certain that that person is somewhere between 1.97 and 2.03 metres.

This concept is suitable for a variety of purposes. The most common use is to keep track of and handle rounding errors directly during a calculation, and to handle uncertainties in the knowledge of the exact values of physical and technical parameters. The latter often arise from measurement errors and tolerances for components or due to limits on computational accuracy. Interval arithmetic also helps find reliable and guaranteed solutions to equations and optimization problems.

Mathematically, instead of working with an uncertain real $x$, we work with the two ends of the interval $[a,b]$ that contains $x$. In interval arithmetic, any variable $x$ lies between $a$ and $b$, or could be one of them. A function $f$, when applied to $x$, is also uncertain. In interval arithmetic, $f$ produces an interval $[c,d]$ which contains all the possible values of $f(x)$ for all $x \in [a,b]$.

Introduction

The main focus of interval arithmetic is the simplest way to calculate upper and lower endpoints for the range of values of a function in one or more variables. These endpoints are not necessarily the supremum or infimum, since the precise calculation of those values can be difficult or impossible.

Treatment is typically limited to real intervals, so quantities of the form

$$[a,b] = \{x \in \mathbb{R} \mid a \le x \le b\},$$

where $a = -\infty$ and $b = \infty$ are allowed; with one of them infinite we would have an unbounded interval, while with both infinite we would have the extended real number line.

As with traditional calculations with real numbers, simple arithmetic operations and functions on elementary intervals must first be defined.[1] More complicated functions can be calculated from these basic elements.[1]

Example

Take as an example the calculation of body mass index (BMI). The BMI is the body weight in kilograms divided by the square of height in metres. Measuring the mass with bathroom scales may have an accuracy of one kilogram. We will not see intermediate values, such as 79.6 kg or 80.3 kg, but only information rounded to the nearest whole number. It is unlikely that when the scale reads 80 kg, someone really weighs exactly 80.0 kg. In normal rounding to the nearest value, the scale showing 80 kg indicates a weight between 79.5 kg and 80.5 kg. The relevant range is that of all real numbers that are greater than or equal to 79.5, while less than or equal to 80.5, or in other words the interval $[79.5, 80.5]$.

For a man who weighs 80 kg and is 1.80 m tall, the BMI is about 24.7. With a weight of 79.5 kg and the same height the value is 24.5, while 80.5 kilograms gives almost 24.9. So the actual BMI is in the range $[24.5, 24.9]$. The error in this case does not affect the conclusion (normal weight), but this is not always the case. For example, weight fluctuates in the course of a day, so that the BMI can vary between 24 (normal weight) and 25 (overweight). Without detailed analysis, it is not always possible to rule out that an error is large enough to have a significant influence.

Interval arithmetic states the range of possible outcomes explicitly. Simply put, results are no longer stated as numbers, but as intervals which represent imprecise values. The width of an interval plays a role similar to that of an error bar, expressing the extent of uncertainty. Simple operations, such as the basic arithmetic operations and trigonometric functions, enable the calculation of outer limits of intervals.

Simple arithmetic

Returning to the earlier BMI example, in determining the body mass index, height and body weight both affect the result. For height, measurements are usually in round centimetres: a recorded measurement of 1.80 metres actually means a height somewhere between 1.795 m and 1.805 m. This uncertainty must be combined with the fluctuation range in weight between 79.5 kg and 80.5 kg. The BMI is defined as the weight in kilograms divided by the square of height in metres. Using either 79.5 kg and 1.795 m or 80.5 kg and 1.805 m gives approximately 24.7. But the person in question may only be 1.795 m tall, with a weight of 80.5 kilograms, or 1.805 m and 79.5 kilograms: all combinations of all possible intermediate values must be considered. Using the interval arithmetic methods described below, the BMI lies in the interval

$$\frac{[79.5, 80.5]}{[1.795, 1.805]^2} = [24.4, 24.98].$$

An operation $\langle\mathrm{op}\rangle$, such as addition or multiplication, on two intervals is defined by

$$[x_1, x_2] \,\langle\mathrm{op}\rangle\, [y_1, y_2] = \{\, x \,\langle\mathrm{op}\rangle\, y \mid x \in [x_1, x_2] \text{ and } y \in [y_1, y_2] \,\}.$$

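For the four basic arithmetic operations this can become

$$[x_1, x_2] \,\langle\mathrm{op}\rangle\, [y_1, y_2] = \left[\min\{x_1 \langle\mathrm{op}\rangle y_1,\ x_1 \langle\mathrm{op}\rangle y_2,\ x_2 \langle\mathrm{op}\rangle y_1,\ x_2 \langle\mathrm{op}\rangle y_2\},\ \max\{x_1 \langle\mathrm{op}\rangle y_1,\ x_1 \langle\mathrm{op}\rangle y_2,\ x_2 \langle\mathrm{op}\rangle y_1,\ x_2 \langle\mathrm{op}\rangle y_2\}\right],$$

provided that $x \,\langle\mathrm{op}\rangle\, y$ is allowed for all $x \in [x_1, x_2]$ and $y \in [y_1, y_2]$.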

For practical applications this can be simplified further:

- Addition: $[x_1, x_2] + [y_1, y_2] = [x_1 + y_1, x_2 + y_2]$
- Subtraction: $[x_1, x_2] - [y_1, y_2] = [x_1 - y_2, x_2 - y_1]$
- Multiplication: $[x_1, x_2] \cdot [y_1, y_2] = [\min(x_1 y_1, x_1 y_2, x_2 y_1, x_2 y_2), \max(x_1 y_1, x_1 y_2, x_2 y_1, x_2 y_2)]$
- Division: $[x_1, x_2] / [y_1, y_2] = [x_1, x_2] \cdot (1/[y_1, y_2])$, where $1/[y_1, y_2] = [1/y_2, 1/y_1]$ if $0 \notin [y_1, y_2]$.
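The four rules above can be sketched in Python and applied to the BMI example, with weight $[79.5, 80.5]$ kg and height $[1.795, 1.805]$ m. The `Interval` class is a hypothetical illustration, not a particular library; it uses ordinary round-to-nearest floats instead of outward rounding and only supports divisors that exclude zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi]; hypothetical helper without outward rounding."""
    lo: float
    hi: float

    def __post_init__(self) -> None:
        if self.lo > self.hi:
            raise ValueError("lower endpoint must not exceed upper endpoint")

    def __add__(self, other: "Interval") -> "Interval":
        # [x1, x2] + [y1, y2] = [x1 + y1, x2 + y2]
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other: "Interval") -> "Interval":
        # [x1, x2] - [y1, y2] = [x1 - y2, x2 - y1]
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other: "Interval") -> "Interval":
        # Minimum and maximum over the four endpoint products.
        p = (self.lo * other.lo, self.lo * other.hi,
             self.hi * other.lo, self.hi * other.hi)
        return Interval(min(p), max(p))

    def reciprocal(self) -> "Interval":
        # 1/[y1, y2] = [1/y2, 1/y1], valid only if 0 is not in [y1, y2].
        if self.lo <= 0 <= self.hi:
            raise ZeroDivisionError("divisor interval contains zero")
        return Interval(1 / self.hi, 1 / self.lo)

    def __truediv__(self, other: "Interval") -> "Interval":
        # [x1, x2] / [y1, y2] = [x1, x2] * (1/[y1, y2])
        return self * other.reciprocal()


weight = Interval(79.5, 80.5)    # kg, scale reading 80 kg
height = Interval(1.795, 1.805)  # m, recorded as 1.80 m
bmi = weight / (height * height)
print(bmi)
```

The printed bounds are roughly 24.40 and 24.98, in line with the interval stated for the BMI example.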

For division by an interval including zero, first define

- $1/[y_1, 0] = [-\infty, 1/y_1]$ and $1/[0, y_2] = [1/y_2, \infty]$.

For $y_1 < 0 < y_2$, we get $1/[y_1, y_2] = [-\infty, 1/y_1] \cup [1/y_2, \infty]$, which as a single interval gives $1/[y_1, y_2] = [-\infty, \infty]$; this loses the useful information that the open interval $(1/y_1, 1/y_2)$ is excluded. So it is common to work with $[-\infty, 1/y_1]$ and $[1/y_2, \infty]$ as separate intervals.

Because several such divisions may occur in an interval arithmetic calculation, it is sometimes useful to do the calculation with so-called multi-intervals of the form $\textstyle\bigcup_{i=1}^{l} [x_{i1}, x_{i2}]$. The corresponding multi-interval arithmetic maintains a set of disjoint intervals and merges intervals that overlap.[2]
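The division rules above can be sketched so that the reciprocal is returned as a list of disjoint pieces, in the spirit of multi-interval arithmetic, instead of being collapsed to $[-\infty, \infty]$. The helper names `reciprocal` and `unite` are hypothetical, intervals are plain `(lo, hi)` tuples, and there is no outward rounding. Returning an empty list for $1/[0,0]$ (no nonzero $y$ exists) is an assumption of this sketch.

```python
import math

Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def reciprocal(y: Interval) -> list[Interval]:
    """1/[y1, y2] as a list of disjoint intervals (a multi-interval)."""
    y1, y2 = y
    if y1 > 0 or y2 < 0:        # zero excluded: ordinary rule [1/y2, 1/y1]
        return [(1 / y2, 1 / y1)]
    if y1 == 0 and y2 == 0:     # {1/y : y in [0, 0], y != 0} is empty
        return []
    pieces: list[Interval] = []
    if y1 < 0:                  # 1/[y1, 0] = [-inf, 1/y1]
        pieces.append((-math.inf, 1 / y1))
    if y2 > 0:                  # 1/[0, y2] = [1/y2, inf]
        pieces.append((1 / y2, math.inf))
    return pieces


def unite(intervals: list[Interval]) -> list[Interval]:
    """Merge overlapping intervals into a sorted, disjoint list."""
    merged: list[Interval] = []
    for lo, hi in sorted(intervals):
        if merged and lo <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], hi))
        else:
            merged.append((lo, hi))
    return merged


print(reciprocal((-4, 4)))              # [(-inf, -0.25), (0.25, inf)]
print(unite([(0, 2), (5, 6), (1, 3)]))  # [(0, 3), (5, 6)]
```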

Since a real number $r$ can be interpreted as the interval $[r,r]$, intervals and real numbers can be freely and easily combined.

With the help of these definitions, it is already possible to calculate the range of simple functions, such as $f(a,b,x) = a \cdot x + b$. If, for example, $a = [1,2]$, $b = [5,7]$ and $x = [2,3]$, then

$$f(a,b,x) = ([1,2] \cdot [2,3]) + [5,7] = [1 \cdot 2, 2 \cdot 3] + [5,7] = [7,13].$$

Interpreting this as a function $f(a,b,x)$ of the variable $x$ with interval parameters $a$ and $b$, it is then possible to find the roots of this function. We have

$$f([1,2],[5,7],x) = ([1,2] \cdot x) + [5,7] = 0 \;\Leftrightarrow\; [1,2] \cdot x = [-7,-5] \;\Leftrightarrow\; x = \frac{[-7,-5]}{[1,2]},$$

so the possible zeros are in the interval $[-7, -2.5]$.
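Both calculations for $f(a,b,x) = a \cdot x + b$ with $a = [1,2]$, $b = [5,7]$ and $x = [2,3]$ can be checked with a few tuple-based helpers. This is a minimal sketch: the helper names are hypothetical, no outward rounding is done, and the zero enclosure relies on $0 \notin [1,2]$, so that every root is $x = -b/a$ for some $a \in [1,2]$, $b \in [5,7]$.

```python
Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def add(x: Interval, y: Interval) -> Interval:
    return (x[0] + y[0], x[1] + y[1])


def neg(x: Interval) -> Interval:
    return (-x[1], -x[0])


def mul(x: Interval, y: Interval) -> Interval:
    p = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return (min(p), max(p))


def div(x: Interval, y: Interval) -> Interval:
    if y[0] <= 0 <= y[1]:
        raise ZeroDivisionError("divisor interval contains zero")
    return mul(x, (1 / y[1], 1 / y[0]))


a, b, x = (1, 2), (5, 7), (2, 3)
print(add(mul(a, x), b))  # range of a*x + b: (7, 13)
# Any x with a*x + b = 0 for some a in [1, 2], b in [5, 7] lies in -b / a.
print(div(neg(b), a))     # (-7.0, -2.5)
```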

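As in the above example, the multiplication of intervals often only requires two multiplications. In fact,

$$[x_1, x_2] \cdot [y_1, y_2] = [x_1 \cdot y_1, x_2 \cdot y_2], \quad \text{if } x_1, y_1 \geq 0.$$

The multiplication of such non-negative intervals can be pictured as the area of a rectangle whose edge lengths vary within the two intervals. The result interval covers all possible areas, from the smallest to the largest.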

The same applies when one of the two intervals is non-positive and the other non-negative. Generally, multiplication can produce results as wide as $[-\infty, \infty]$. Such results also occur, for example, in a division if the numerator and denominator both contain zero.

Notation

To make the notation of intervals more compact in formulae, brackets can be used.

So we can use $[x] \equiv [x_1, x_2]$ to represent an interval. For the set of all finite intervals, we can use

$$[\mathbb{R}] := \{\, [x_1, x_2] \mid x_1 \le x_2 \text{ and } x_1, x_2 \in \mathbb{R} \,\}$$

as an abbreviation. For a vector of intervals $\big([x]_1, \ldots, [x]_n\big) \in [\mathbb{R}]^n$ we can also use a bold font: $[\mathbf{x}]$.

Note that in such a compact notation, $[x]$ should not be confused with a so-called improper or single-point interval $[x_1, x_1]$, whose lower and upper limits coincide.

Elementary functions

Interval methods can also be applied to functions that do not just use simple arithmetic. For these, other basic functions must also be extended to intervals, using already known monotonicity properties.

For monotonic functions in one variable, the range of values is also easy to compute. If $f$ is monotonically rising or falling in the interval $[x_1, x_2]$, then for all values $y_1, y_2 \in [x_1, x_2]$ such that $y_1 \le y_2$, one of the following inequalities applies:

$$f(y_1) \le f(y_2) \quad \text{(rising)}, \qquad \text{or} \qquad f(y_1) \ge f(y_2) \quad \text{(falling)}.$$

The range corresponding to the interval $[y_1, y_2] \subseteq [x_1, x_2]$ can be calculated by applying the function to the endpoints $y_1$ and $y_2$:

$$f([y_1, y_2]) = \left[\min\{f(y_1), f(y_2)\},\ \max\{f(y_1), f(y_2)\}\right].$$

From this, the following basic interval functions can easily be defined:

- Exponential function: $a^{[x_1, x_2]} = [a^{x_1}, a^{x_2}]$, for $a > 1$,
- Logarithm: $\log_a\big([x_1, x_2]\big) = [\log_a x_1, \log_a x_2]$, for positive intervals $[x_1, x_2]$ and $a > 1$,
- Odd powers: $[x_1, x_2]^n = [x_1^n, x_2^n]$, for odd $n \in \mathbb{N}$.
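The endpoint rule $f([y_1, y_2]) = [\min\{f(y_1), f(y_2)\}, \max\{f(y_1), f(y_2)\}]$ can be sketched as one generic helper, applied here to the exponential, a logarithm, an odd power and a falling function. `monotone_image` is a hypothetical name; it trusts the caller that $f$ is monotonic on the interval (this is not checked) and does no outward rounding.

```python
import math
from typing import Callable

Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def monotone_image(f: Callable[[float], float], x: Interval) -> Interval:
    """Range of f over [x1, x2], assuming f is monotonic there."""
    a, b = f(x[0]), f(x[1])
    # min/max handles both rising and falling functions.
    return (min(a, b), max(a, b))


print(monotone_image(math.exp, (0.0, 1.0)))                # e^x, base e > 1
print(monotone_image(math.log10, (1.0, 100.0)))            # (0.0, 2.0)
print(monotone_image(lambda t: t ** 3, (-2.0, 3.0)))       # (-8.0, 27.0)
print(monotone_image(lambda t: math.exp(-t), (0.0, 1.0)))  # falling: endpoints swap
```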

For even powers, the range of values being considered is important, and needs to be dealt with before doing any multiplication.

For example, $x^n$ for $x \in [-1,1]$ should produce the interval $[0,1]$ when $n = 2, 4, 6, \ldots$. But if $[-1,1]^n$ is taken by applying interval multiplication of the form $[-1,1] \cdot \ldots \cdot [-1,1]$, then the result will appear to be $[-1,1]$, wider than necessary.

Instead, consider the function $x^n$ as a monotonically decreasing function for $x < 0$ and a monotonically increasing function for $x > 0$. So for even $n \in \mathbb{N}$:

- $[x_1, x_2]^n = [x_1^n, x_2^n]$, if $x_1 \geq 0$,
- $[x_1, x_2]^n = [x_2^n, x_1^n]$, if $x_2 < 0$,
- $[x_1, x_2]^n = [0, \max\{x_1^n, x_2^n\}]$, otherwise.
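The difference between the monotonicity-based power rules and naive repeated multiplication can be sketched as follows. `power` and `repeated_product` are hypothetical helper names; intervals are `(lo, hi)` tuples, $n$ is a positive integer, and there is no outward rounding.

```python
Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def mul(x: Interval, y: Interval) -> Interval:
    p = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return (min(p), max(p))


def power(x: Interval, n: int) -> Interval:
    """[x1, x2]**n for integer n >= 1, using the monotonicity rules."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    x1, x2 = x
    if n % 2 == 1 or x1 >= 0:           # odd n, or x^n increasing on [x1, x2]
        return (x1 ** n, x2 ** n)
    if x2 < 0:                          # even n, x^n decreasing on [x1, x2]
        return (x2 ** n, x1 ** n)
    return (0, max(x1 ** n, x2 ** n))   # interval straddles 0: minimum is 0


def repeated_product(x: Interval, n: int) -> Interval:
    """Naive x * x * ... * x, treating each factor as independent."""
    result = x
    for _ in range(n - 1):
        result = mul(result, x)
    return result


print(power((-1, 1), 2))             # (0, 1)
print(repeated_product((-1, 1), 2))  # (-1, 1): wider than necessary
```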

More generally, one can say that for piecewise monotonic functions it is sufficient to consider the endpoints $x_1$, $x_2$ of the interval $[x_1, x_2]$, together with the so-called critical points within the interval, being those points where the monotonicity of the function changes direction.

For the sine and cosine functions, the critical points are at $\left(\tfrac{1}{2} + n\right)\pi$ or $n\pi$ for all $n \in \mathbb{Z}$, respectively. Only up to five points matter, as the resulting interval will be $[-1,1]$ if the interval includes at least two extrema. For sine and cosine, only the endpoints need full evaluation, as the critical points lead to easily pre-calculated values, namely $-1$ and $+1$.
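For the sine, the endpoint-plus-critical-point approach can be sketched as below: evaluate the two endpoints, then replace the bounds by $+1$ or $-1$ whenever a maximum $\tfrac{\pi}{2} + 2k\pi$ or a minimum $-\tfrac{\pi}{2} + 2k\pi$ lies inside the interval. `interval_sin` is a hypothetical name. The sketch does no outward rounding, so near a critical point a floating-point comparison may misclassify it; a rigorous implementation would round outward.

```python
import math


def interval_sin(lo: float, hi: float) -> tuple[float, float]:
    """Range of sin over [lo, hi] from the endpoints plus critical points inside."""
    if lo > hi:
        raise ValueError("empty interval")
    low, high = sorted((math.sin(lo), math.sin(hi)))
    two_pi = 2 * math.pi
    # Maxima (+1) at pi/2 + 2k*pi: take the first one that is >= lo.
    k = math.ceil((lo - math.pi / 2) / two_pi)
    if math.pi / 2 + k * two_pi <= hi:
        high = 1.0
    # Minima (-1) at -pi/2 + 2k*pi: take the first one that is >= lo.
    k = math.ceil((lo + math.pi / 2) / two_pi)
    if -math.pi / 2 + k * two_pi <= hi:
        low = -1.0
    return (low, high)


print(interval_sin(0.0, math.pi))      # (0.0, 1.0)
print(interval_sin(0.0, 2 * math.pi))  # (-1.0, 1.0)
print(interval_sin(-2.0, -1.0))        # minimum -1 at -pi/2, maximum sin(-1)
```

Cosine can be handled the same way, with maxima at $2k\pi$ and minima at $(2k+1)\pi$.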

Interval extensions of general functions

In general, it may not be easy to find such a simple description of the output interval for many functions. But it may still be possible to extend functions to interval arithmetic.

If $f : \mathbb{R}^n \to \mathbb{R}$ is a function from a real vector to a real number, then $[f] : [\mathbb{R}]^n \to [\mathbb{R}]$ is called an interval extension of $f$ if

$$[f]([\mathbf{x}]) \supseteq \{\, f(\mathbf{y}) \mid \mathbf{y} \in [\mathbf{x}] \,\}.$$

This definition of the interval extension does not give a precise result. For example, both $[f]([x_1, x_2]) = [e^{x_1}, e^{x_2}]$ and $[g]([x_1, x_2]) = [-\infty, \infty]$ are allowable extensions of the exponential function. Extensions as tight as possible are desirable, taking into account the relative costs of calculation and imprecision; in this case, $[f]$ should be chosen, as it gives the tightest possible result.

The natural interval extension is achieved by combining the function rule $f(x_1, \ldots, x_n)$ with the equivalents of the basic arithmetic and elementary functions.

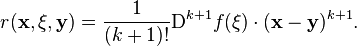

For a $(k+1)$ times differentiable function $f$, the Taylor interval extension (of degree $k$) is defined by

$$[f]([\mathbf{x}]) := f(\mathbf{y}) + \sum_{i=1}^{k} \frac{1}{i!} \mathrm{D}^i f(\mathbf{y}) \cdot ([\mathbf{x}] - \mathbf{y})^i + [r]([\mathbf{x}], [\mathbf{x}], \mathbf{y}),$$

for some $\mathbf{y} \in [\mathbf{x}]$, where $\mathrm{D}^i f(\mathbf{y})$ is the $i$-th order differential of $f$ at the point $\mathbf{y}$ and $[r]$ is an interval extension of the Taylor remainder

$$r(\mathbf{x}, \boldsymbol{\xi}, \mathbf{y}) = \frac{1}{(k+1)!} \mathrm{D}^{k+1} f(\boldsymbol{\xi}) \cdot (\mathbf{x} - \mathbf{y})^{k+1}.$$

The vector $\boldsymbol{\xi}$ lies between $\mathbf{x}$ and $\mathbf{y}$ with $\mathbf{x}, \mathbf{y} \in [\mathbf{x}]$, so $\boldsymbol{\xi}$ is also contained in $[\mathbf{x}]$.

Usually one chooses $\mathbf{y}$ to be the midpoint of the interval and uses the natural interval extension to assess the remainder.

The special case of the Taylor interval extension of degree $k = 0$ is also referred to as the mean value form.

For an interval extension of the Jacobian $[J_f](\mathbf{[x]})$ we get

$$[f]([\mathbf{x}]) := f(\mathbf{y}) + [J_f](\mathbf{[x]}) \cdot ([\mathbf{x}] - \mathbf{y}).$$

In this way, a nonlinear function is enclosed by an expression that is linear in $[\mathbf{x}] - \mathbf{y}$.
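To compare the natural interval extension with the mean value form, the sketch below uses $f(x) = x(1-x)$ on $[0.4, 0.6]$, an example chosen here for illustration rather than taken from the text, with $\mathbf{y} = 0.5$ and $[f'] = 1 - 2[x]$. The exact range is $[0.24, 0.25]$. Helper names are hypothetical, and no outward rounding is done.

```python
Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def add(x: Interval, y: Interval) -> Interval:
    return (x[0] + y[0], x[1] + y[1])


def sub(x: Interval, y: Interval) -> Interval:
    return (x[0] - y[1], x[1] - y[0])


def mul(x: Interval, y: Interval) -> Interval:
    p = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return (min(p), max(p))


def point(c: float) -> Interval:
    return (c, c)


X = (0.4, 0.6)
# Natural extension of f(x) = x * (1 - x): x occurs twice, treated independently.
natural = mul(X, sub(point(1.0), X))
# Mean value form f(y) + [f']([x]) * ([x] - y), with f'(x) = 1 - 2x, y = midpoint.
y = 0.5
deriv = sub(point(1.0), mul(point(2.0), X))
mean_value = add(point(y * (1 - y)), mul(deriv, sub(X, point(y))))
print(natural)     # about (0.16, 0.36)
print(mean_value)  # about (0.23, 0.27)
```

Here the mean value form is considerably tighter, because the derivative enclosure is small on a narrow interval; for wide intervals the natural extension can be the better choice.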

Complex interval arithmetic

An interval can also be defined as a locus of points at a given distance from the centre, and this definition can be extended from real numbers to complex numbers.[3] As is the case with computing with real numbers, computing with complex numbers involves uncertain data. So, given that an interval number is a real closed interval and a complex number is an ordered pair of real numbers, there is no reason to limit the application of interval arithmetic to the measure of uncertainties in computations with real numbers.[4] Interval arithmetic can thus be extended, via complex interval numbers, to determine regions of uncertainty in computing with complex numbers.[4]

The basic algebraic operations for real interval numbers (real closed intervals) can be extended to complex numbers. It is therefore not surprising that complex interval arithmetic is similar to, but not the same as, ordinary complex arithmetic.[4] It can be shown that, as is the case with real interval arithmetic, there is no distributivity between addition and multiplication of complex interval numbers except for certain special cases, and inverse elements do not always exist for complex interval numbers.[4] Two other useful properties of ordinary complex arithmetic fail to hold in complex interval arithmetic: the additive and multiplicative properties of ordinary complex conjugates do not hold for complex interval conjugates.[4]

Interval arithmetic can be extended, in an analogous manner, to other multidimensional number systems such as quaternions and octonions, but at the expense of sacrificing other useful properties of ordinary arithmetic.[4]

Interval methods

The methods of classical numerical analysis cannot be transferred one-to-one into interval-valued algorithms, as dependencies between numerical values are usually not taken into account.

Rounded interval arithmetic

In order to work effectively in a real-life implementation, intervals must be compatible with floating-point computing. The earlier operations were based on exact arithmetic, but in general fast numerical solution methods may not be available. For example, the range of values of the function $f(x, y) = x + y$ for $x \in [0.1, 0.8]$ and $y \in [0.06, 0.08]$ is $[0.16, 0.88]$. Where the same calculation is done with single-digit precision, the result would normally be $[0.2, 0.9]$. But $[0.2, 0.9] \not\supseteq [0.16, 0.88]$, so this approach would contradict the basic principles of interval arithmetic, as a part of the range of $f([0.1, 0.8], [0.06, 0.08])$ would be lost.

Instead, it is the outward rounded solution $[0.1, 0.9]$ which is used.

The standard IEEE 754 for binary floating-point arithmetic also sets out procedures for the implementation of rounding. An IEEE 754 compliant system allows programmers to round to the nearest floating point number; alternatives are rounding towards 0 (truncating), rounding toward positive infinity (i.e. up), or rounding towards negative infinity (i.e. down).

The required outward rounding for interval arithmetic can thus be achieved by changing the rounding settings of the processor when calculating the upper limit (up) and the lower limit (down). Alternatively, an appropriate small interval $[\varepsilon_1, \varepsilon_2]$ can be added.
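Python's built-in floats do not expose the processor's rounding mode, so the sketch below reproduces the single-digit example with the standard `decimal` module instead, whose contexts support directed rounding (`ROUND_FLOOR`, `ROUND_CEILING`). This swaps hardware floating point for decimal arithmetic purely for illustration; `add_nearest` and `add_outward` are hypothetical names.

```python
from decimal import Context, Decimal, ROUND_CEILING, ROUND_FLOOR, ROUND_HALF_EVEN


def add_nearest(x, y, prec):
    """Interval sum rounded to nearest at both ends: NOT a valid enclosure."""
    ctx = Context(prec=prec, rounding=ROUND_HALF_EVEN)
    return (ctx.add(x[0], y[0]), ctx.add(x[1], y[1]))


def add_outward(x, y, prec):
    """Interval sum with the lower end rounded down and the upper end rounded up."""
    down = Context(prec=prec, rounding=ROUND_FLOOR)
    up = Context(prec=prec, rounding=ROUND_CEILING)
    return (down.add(x[0], y[0]), up.add(x[1], y[1]))


X = (Decimal("0.1"), Decimal("0.8"))
Y = (Decimal("0.06"), Decimal("0.08"))
print(add_nearest(X, Y, 1))  # (Decimal('0.2'), Decimal('0.9')): misses [0.16, 0.2)
print(add_outward(X, Y, 1))  # (Decimal('0.1'), Decimal('0.9')): contains [0.16, 0.88]
```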

Dependency problem

The so-called dependency problem is a major obstacle to the application of interval arithmetic. Although interval methods can determine the range of elementary arithmetic operations and functions very accurately, this is not always true with more complicated functions. If an interval occurs several times in a calculation using parameters, and each occurrence is taken independently, then this can lead to an unwanted expansion of the resulting intervals.

As an illustration, take the function $f$ defined by $f(x) = x^2 + x$. The values of this function over the interval $[-1, 1]$ are really $[-1/4, 2]$. As the natural interval extension, it is calculated as

$$[-1, 1]^2 + [-1, 1] = [0, 1] + [-1, 1] = [-1, 2],$$

which is slightly larger; we have instead calculated the infimum and supremum of the function $h(x, y) = x^2 + y$ over $x, y \in [-1, 1]$.

There is a better expression of $f$ in which the variable $x$ only appears once, namely by rewriting $f(x) = x^2 + x$ through completing the square:

$$f(x) = \left(x + \frac{1}{2}\right)^2 - \frac{1}{4}.$$

So the suitable interval calculation is

$$\left([-1, 1] + \frac{1}{2}\right)^2 - \frac{1}{4} = \left[-\frac{1}{2}, \frac{3}{2}\right]^2 - \frac{1}{4} = \left[0, \frac{9}{4}\right] - \frac{1}{4} = \left[-\frac{1}{4}, 2\right]$$

and gives the correct values.

In general, it can be shown that the exact range of values can be achieved if each variable appears only once and if $f$ is continuous inside the box. However, not every function can be rewritten this way.
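The dependency problem for $f(x) = x^2 + x$ on $[-1, 1]$ can be reproduced with a short sketch: the natural extension treats the two occurrences of $x$ independently, while the completed-square form $\left(x + \tfrac{1}{2}\right)^2 - \tfrac{1}{4}$ uses $x$ once. Helper names are hypothetical, and there is no outward rounding.

```python
Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def add(x: Interval, y: Interval) -> Interval:
    return (x[0] + y[0], x[1] + y[1])


def square(x: Interval) -> Interval:
    # Even-power rule: the minimum is 0 when the interval straddles 0.
    lo, hi = x
    if lo >= 0:
        return (lo * lo, hi * hi)
    if hi < 0:
        return (hi * hi, lo * lo)
    return (0.0, max(lo * lo, hi * hi))


X = (-1.0, 1.0)
# Natural extension of x^2 + x: x occurs twice.
naive = add(square(X), X)
# Completed square (x + 1/2)^2 - 1/4: x occurs only once.
rewritten = add(square(add(X, (0.5, 0.5))), (-0.25, -0.25))
print(naive)      # (-1.0, 2.0)
print(rewritten)  # (-0.25, 2.0)
```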

The overestimation of the value range caused by the dependency problem can go as far as covering a large range, preventing more meaningful conclusions.

An additional increase in the range stems from solution sets that do not take the form of an interval vector. The solution set of the linear system

$$x = p, \qquad y = p$$

for $p \in [-1,1]$ is precisely the line between the points $(-1,-1)$ and $(1,1)$.

At best, interval methods enclose it in the square $[-1,1] \times [-1,1]$, which contains the real solution but is much larger than it (this is known as the wrapping effect).

Linear interval systems

A linear interval system consists of a matrix interval extension $[\mathbf{A}] \in [\mathbb{R}]^{n\times m}$ and an interval vector $[\mathbf{b}] \in [\mathbb{R}]^{n}$. We want the smallest cuboid $[\mathbf{x}] \in [\mathbb{R}]^{m}$ containing all vectors $\mathbf{x} \in \mathbb{R}^m$ for which there is a pair $(\mathbf{A}, \mathbf{b})$ with $\mathbf{A} \in [\mathbf{A}]$ and $\mathbf{b} \in [\mathbf{b}]$ satisfying

$$\mathbf{A} \cdot \mathbf{x} = \mathbf{b}.$$

For square (quadratic) systems, in other words for $n = m$, such an interval vector $[\mathbf{x}]$, which covers all possible solutions, can be found simply with the interval Gauss method. This replaces the numerical operations of Gaussian elimination with their interval versions. However, since this method uses the interval entities $[\mathbf{A}]$ and $[\mathbf{b}]$ repeatedly in the calculation, it can produce poor results for some problems. Hence the result of the interval-valued Gauss method only provides a first rough estimate: although it contains the entire solution set, it also has a large area outside it.

A rough solution $[\mathbf{x}]$ can often be improved by an interval version of the Gauss–Seidel method.

The motivation for this is that the $i$-th row of the interval extension of the linear equation

$$\begin{pmatrix} [a_{11}] & \cdots & [a_{1n}] \\ \vdots & \ddots & \vdots \\ [a_{n1}] & \cdots & [a_{nn}] \end{pmatrix} \cdot \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix} = \begin{pmatrix} [b_1] \\ \vdots \\ [b_n] \end{pmatrix}$$

can be solved for the variable $x_i$ if the division $1/[a_{ii}]$ is allowed. It is therefore simultaneously

$$x_i \in [x_i] \quad \text{and} \quad x_i \in \frac{[b_i] - \sum\limits_{k \neq i} [a_{ik}] \cdot [x_k]}{[a_{ii}]}.$$

So we can now replace $[x_i]$ by

$$[x_i] \cap \frac{[b_i] - \sum\limits_{k \neq i} [a_{ik}] \cdot [x_k]}{[a_{ii}]},$$

and so update the vector $[\mathbf{x}]$ element by element.
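One sweep of the interval Gauss–Seidel update can be sketched as follows, on a hypothetical $2 \times 2$ system with $[a_{ii}] = [4,5]$, $[a_{ik}] = [-1,1]$ for $i \neq k$, $[b_i] = [3,4]$ and the rough starting box $[-10,10]^2$. Function names are hypothetical, and the sketch assumes $0 \notin [a_{ii}]$ and uses no outward rounding. For this system, repeated sweeps should shrink each component toward $[1/3, 4/3]$, which is a fixed point of the update.

```python
from typing import Optional

Interval = tuple[float, float]  # (lo, hi); hypothetical representation


def sub(x: Interval, y: Interval) -> Interval:
    return (x[0] - y[1], x[1] - y[0])


def mul(x: Interval, y: Interval) -> Interval:
    p = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return (min(p), max(p))


def div(x: Interval, y: Interval) -> Interval:
    if y[0] <= 0 <= y[1]:
        raise ZeroDivisionError("diagonal entry [a_ii] contains zero")
    return mul(x, (1 / y[1], 1 / y[0]))


def intersect(x: Interval, y: Interval) -> Optional[Interval]:
    lo, hi = max(x[0], y[0]), min(x[1], y[1])
    return (lo, hi) if lo <= hi else None


def gauss_seidel_sweep(A, b, x):
    """Replace each [x_i] by [x_i] ∩ ([b_i] - sum_{k != i} [a_ik][x_k]) / [a_ii]."""
    x = list(x)
    for i in range(len(x)):
        acc = b[i]
        for k in range(len(x)):
            if k != i:
                acc = sub(acc, mul(A[i][k], x[k]))
        new = intersect(x[i], div(acc, A[i][i]))
        if new is None:
            return None  # empty intersection: no solution lies in the box
        x[i] = new       # the updated component is used immediately
    return x


A = [[(4.0, 5.0), (-1.0, 1.0)],
     [(-1.0, 1.0), (4.0, 5.0)]]
b = [(3.0, 4.0), (3.0, 4.0)]
x = [(-10.0, 10.0), (-10.0, 10.0)]  # rough starting enclosure
for _ in range(60):
    x = gauss_seidel_sweep(A, b, x)
print(x)  # both components close to (1/3, 4/3)
```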

Since the procedure is more efficient for a diagonally dominant matrix, instead of the system $[\mathbf{A}] \cdot \mathbf{x} = [\mathbf{b}]$ one can often try multiplying it by an appropriate rational matrix $\mathbf{M}$, with the resulting matrix equation

$$(\mathbf{M} \cdot [\mathbf{A}]) \cdot \mathbf{x} = \mathbf{M} \cdot [\mathbf{b}]$$

left to solve. If one chooses, for example, $\mathbf{M} = \mathbf{A}^{-1}$ for the central matrix $\mathbf{A} \in [\mathbf{A}]$, then $\mathbf{M} \cdot [\mathbf{A}]$ is an outer extension of the identity matrix.

These methods only work well if the widths of the intervals occurring are sufficiently small. For wider intervals, it can be useful to reduce the interval linear system to a finite (albeit large) number of equivalent real linear systems. If all the matrices $\mathbf{A} \in [\mathbf{A}]$ are invertible, it is sufficient to consider all possible combinations (upper and lower) of the endpoints occurring in the intervals. The resulting problems can be solved using conventional numerical methods. Interval arithmetic is still used to determine rounding errors.

This is only suitable for systems of smaller dimension, since with a fully occupied $n \times n$ matrix, $2^{n^2}$ real matrices need to be inverted, with $2^n$ vectors for the right-hand side. This approach was developed by Jiri Rohn and is still being developed.[5]

Interval Newton method

An interval variant of Newton's method for finding the zeros in an interval vector $[\mathbf{x}]$ can be derived from the mean value form.[6] For an unknown vector $\mathbf{z} \in [\mathbf{x}]$, applying the mean value form at a point $\mathbf{y} \in [\mathbf{x}]$ gives

$$f(\mathbf{z}) \in f(\mathbf{y}) + [J_f](\mathbf{[x]}) \cdot (\mathbf{z} - \mathbf{y}).$$

A zero $\mathbf{z}$, that is $f(\mathbf{z}) = 0$, must thus satisfy

$$f(\mathbf{y}) + [J_f](\mathbf{[x]}) \cdot (\mathbf{z} - \mathbf{y}) = 0.$$

This is equivalent to

$$\mathbf{z} \in \mathbf{y} - [J_f](\mathbf{[x]})^{-1} \cdot f(\mathbf{y}).$$

An outer estimate of $[J_f](\mathbf{[x]})^{-1} \cdot f(\mathbf{y})$ can be determined using linear methods.

In each step of the interval Newton method, an approximate starting value $[\mathbf{x}] \in [\mathbb{R}]^n$ is replaced by $[\mathbf{x}] \cap \left(\mathbf{y} - [J_f](\mathbf{[x]})^{-1} \cdot f(\mathbf{y})\right)$, and so the result can be improved iteratively. In contrast to traditional methods, the interval method approaches the result by enclosing the zeros. This guarantees that the result contains all the zeros in the initial range. Conversely, if a Newton step produces the empty set, this proves that $f$ has no zeros in the initial range $[\mathbf{x}]$.

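The method converges on all zeros in the starting region. If the derivative enclosure contains zero, the Newton step involves division by an interval containing zero; this extended division can separate distinct zeros, though the separation may not be complete, in which case the method can be complemented by the bisection method.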

As an example, consider the function $f(x) = x^2 - 2$, the starting range $[x] = [-2,2]$, and the point $y = 0$. We then have $J_f(x) = 2x$, so $[J_f]([x]) = 2\cdot[-2,2]$, and the first Newton step gives

$$[-2,2]\cap \left(0 - \frac{1}{2\cdot[-2,2]} (0-2)\right) = [-2,2]\cap \Big([{-\infty}, {-0.5}]\cup [{0.5}, {\infty}] \Big) = [{-2}, {-0.5}] \cup [{0.5}, {2}].$$

Further Newton steps are applied separately to $x\in [{-2}, {-0.5}]$ and $[{0.5}, {2}]$. These converge to arbitrarily small intervals around $-\sqrt{2}$ and $+\sqrt{2}$.
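The one-dimensional example can be reproduced with a short Python sketch. It assumes the midpoint of the current interval as the point $y$, uses extended division when the derivative enclosure contains zero, and falls back to bisection when a Newton step shrinks an interval by less than half. All names (`newton_step`, `enclose_zeros`, `tol`, `max_steps`) are illustrative, and the sketch ignores outward rounding, so it is not a rigorous verification tool.

```python
import math

INF = math.inf


def f(x):
    """Example function from the text: f(x) = x**2 - 2."""
    return x * x - 2.0


def df_enclosure(lo, hi):
    """Interval extension of f'(x) = 2x over [lo, hi]."""
    return 2.0 * lo, 2.0 * hi


def ext_div(c, d_lo, d_hi):
    """Extended division of a real c by [d_lo, d_hi]; returns a list of intervals."""
    if d_lo > 0 or d_hi < 0:                 # 0 not in the divisor: ordinary division
        q1, q2 = c / d_lo, c / d_hi
        return [(min(q1, q2), max(q1, q2))]
    if c == 0:                               # 0 / [.., 0, ..] may be any real number
        return [(-INF, INF)]
    if d_lo == 0 and d_hi == 0:              # c / [0, 0] with c != 0 has no quotient
        return []
    parts = []
    if c > 0:
        if d_lo < 0:
            parts.append((-INF, c / d_lo))
        if d_hi > 0:
            parts.append((c / d_hi, INF))
    else:
        if d_hi > 0:
            parts.append((-INF, c / d_hi))
        if d_lo < 0:
            parts.append((c / d_lo, INF))
    return parts


def newton_step(lo, hi):
    """Return [lo, hi] intersected with m - f(m) / [f']([lo, hi]), m the midpoint."""
    m = 0.5 * (lo + hi)
    fm = f(m)
    result = []
    for q_lo, q_hi in ext_div(fm, *df_enclosure(lo, hi)):
        n_lo, n_hi = m - q_hi, m - q_lo      # subtracting an interval swaps endpoints
        a, b = max(lo, n_lo), min(hi, n_hi)
        if a <= b:                           # keep only non-empty intersections
            result.append((a, b))
    return result


def enclose_zeros(lo, hi, tol=1e-10, max_steps=10_000):
    """Return narrow intervals that should contain every zero of f in [lo, hi]."""
    stack, found = [(lo, hi)], []
    for _ in range(max_steps):
        if not stack:
            break
        a, b = stack.pop()
        for p_lo, p_hi in newton_step(a, b):
            if p_hi - p_lo <= tol:
                found.append((p_lo, p_hi))
            elif p_hi - p_lo > 0.5 * (b - a):  # little progress: bisect instead
                mid = 0.5 * (p_lo + p_hi)
                stack += [(p_lo, mid), (mid, p_hi)]
            else:
                stack.append((p_lo, p_hi))
    return sorted(found)
```

Here `newton_step(-2.0, 2.0)` yields the two pieces $[-2,-0.5]$ and $[0.5,2]$ of the first step above, and `enclose_zeros(2.0, 3.0)` returns an empty list, because the first Newton step on $[2,3]$ already produces the empty set, showing (up to the neglected rounding) that $f$ has no zero there.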

The interval Newton method can also be used with thick functions such as $g(x) = x^2 - [2,3]$, whose values are intervals in any case. The result then consists of intervals containing $\left[-\sqrt{3},-\sqrt{2} \right] \cup \left[\sqrt{2},\sqrt{3} \right]$.

Bisection and covers

The various interval methods deliver conservative results because dependencies between the different interval extensions are not taken into account. However, the dependency problem becomes less significant for narrower intervals.

If an interval vector $[\mathbf{x}]$ is covered by smaller boxes $[\mathbf{x}_1], \dots , [\mathbf{x}_k]$ so that $\textstyle [\mathbf{x}] = \bigcup_{i=1}^k [\mathbf{x}_i]$, then the range of values satisfies $\textstyle f([\mathbf{x}]) = \bigcup_{i=1}^k f([\mathbf{x}_i])$. Consequently, for the interval extensions described above,

$$[f]([\mathbf{x}]) \supseteq \bigcup_{i=1}^k [f]([\mathbf{x}_i])$$

holds. Since $[f]([\mathbf{x}])$ is often a genuine superset of the right-hand side, this usually leads to an improved estimate.

Such a cover can be generated by the bisection method: thick elements $[x_{i1}, x_{i2}]$ of the interval vector $[\mathbf{x}] = ([x_{11}, x_{12}], \dots, [x_{n1}, x_{n2}])$ are split at the centre into the two intervals $[x_{i1}, (x_{i1}+x_{i2})/2]$ and $[(x_{i1}+x_{i2})/2, x_{i2}]$. If the result is still not suitable, further gradual subdivision is possible. Note that a cover of $2^r$ intervals results from $r$ divisions of vector elements, substantially increasing the computation costs.

With very wide intervals, it can be helpful to split all intervals into several subintervals with a constant (and smaller) width, a method known as mincing. This then avoids the calculations for intermediate bisection steps. Both methods are only suitable for problems of low dimension.
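The effect of a cover on the dependency problem can be seen in a small Python sketch. It assumes the illustrative function $f(x) = x^2 + x$ on $[-1,1]$, whose true range is $[-\tfrac14, 2]$, evaluated naively as $[x]\cdot[x] + [x]$; the hypothetical helper `minced_range` splits the domain into $k$ equal-width pieces, as in mincing, and takes the hull of the piecewise results. Floating-point rounding is ignored.

```python
def mul(x, y):
    """Interval product: min and max over the four endpoint products."""
    p = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return min(p), max(p)


def add(x, y):
    """Interval sum: [a, b] + [c, d] = [a + c, b + d]."""
    return x[0] + y[0], x[1] + y[1]


def f_naive(x):
    """Natural interval extension of f(x) = x*x + x; x occurs twice (dependency problem)."""
    return add(mul(x, x), x)


def minced_range(f_ext, lo, hi, k):
    """Cover [lo, hi] by k equal-width subintervals and return the hull of f_ext on them."""
    edges = [lo + (hi - lo) * i / k for i in range(k + 1)]
    edges[-1] = hi                      # guard against a rounding gap at the right end
    pieces = [f_ext((edges[i], edges[i + 1])) for i in range(k)]
    return min(p[0] for p in pieces), max(p[1] for p in pieces)
```

With $k = 1, 2, 4$ the enclosures are $[-2,2]$, $[-1,2]$ and $[-0.75,2]$; for $k = 1000$ the lower bound is about $-0.252$, approaching the true minimum $-\tfrac14$ from below while remaining a valid enclosure.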

Application

Interval arithmetic can be used in various areas (such as set inversion, motion planning, set estimation or stability analysis) to treat estimates for which no exact numerical values can be stated.[7]

Rounding error analysis

Interval arithmetic is used with error analysis to control the rounding errors arising from each calculation. The advantage of interval arithmetic is that after each operation there is an interval which reliably includes the true result. The distance between the interval endpoints directly bounds the rounding errors accumulated in the calculation so far:

- Error $= \mathrm{abs}(a-b)$ for a given interval $[a,b]$.

Interval analysis complements rather than replaces traditional methods for error reduction, such as pivoting.
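A minimal Python sketch of this idea, assuming Python 3.9 or later for `math.nextafter`: each endpoint is computed with the default round-to-nearest and then moved one floating-point step outward, a conservative stand-in for true directed rounding. The class `Interval` and its `error` method are illustrative names, not a library API.

```python
import math


def down(x):
    """Largest float below x: a safe lower bound after round-to-nearest."""
    return math.nextafter(x, -math.inf)


def up(x):
    """Smallest float above x: a safe upper bound after round-to-nearest."""
    return math.nextafter(x, math.inf)


class Interval:
    def __init__(self, lo, hi):
        self.lo, self.hi = lo, hi

    def __add__(self, other):
        # Each endpoint sum is rounded to nearest, then pushed one step outward,
        # so the exact sum of any two enclosed reals stays enclosed.
        return Interval(down(self.lo + other.lo), up(self.hi + other.hi))

    def __contains__(self, x):
        return self.lo <= x <= self.hi

    def error(self):
        """Width of [a, b], i.e. abs(a - b): a bound on the accumulated error."""
        return self.hi - self.lo


tenth = Interval(down(0.1), up(0.1))  # encloses the real 0.1, which no float equals
total, naive = Interval(0.0, 0.0), 0.0
for _ in range(10):
    total = total + tenth
    naive += 0.1                      # ordinary floating-point accumulation
```

The plain sum `naive` ends at `0.9999999999999999`, while `1.0 in total` is `True` and `total.error()` is a width below $10^{-14}$, which bounds the rounding error of the whole summation.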

Tolerance analysis

Parameters for which no exact figures can be allocated often arise during the simulation of technical and physical processes. The production process of technical components allows certain tolerances, so some parameters fluctuate within intervals. In addition, many fundamental constants are not known precisely.[2]

If the behavior of such a system affected by tolerances satisfies, for example, $f(\mathbf{x}, \mathbf{p}) = 0$ for $\mathbf{p} \in [\mathbf{p}]$ and unknown $\mathbf{x}$, then the set of possible solutions

$$\{\mathbf{x}\,|\, \exists \mathbf{p} \in [\mathbf{p}], f(\mathbf{x}, \mathbf{p})= 0\}$$

can be enclosed by interval methods. This provides an alternative to traditional propagation of error analysis. Unlike point methods, such as Monte Carlo simulation, interval arithmetic methodology ensures that no part of the solution area can be overlooked. However, the result is always a worst-case analysis for the distribution of error, as other probability-based distributions are not considered.
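As an illustration, consider a hypothetical toleranced model $p_1 x + p_2 = 0$ with $p_1 \in [1,2]$ and $p_2 \in [5,7]$. Its solution set is $\{-p_2/p_1\}$, and because each parameter occurs only once, a single interval evaluation gives it exactly. The Python sketch (ignoring rounding) compares this with a Monte Carlo sample, whose range can only lie inside the interval enclosure.

```python
import random


def neg(x):
    """Interval negation: -[a, b] = [-b, -a]."""
    return -x[1], -x[0]


def div(x, y):
    """Interval quotient x / y for a divisor interval y that excludes 0."""
    if y[0] <= 0 <= y[1]:
        raise ZeroDivisionError("divisor interval contains 0")
    q = (x[0] / y[0], x[0] / y[1], x[1] / y[0], x[1] / y[1])
    return min(q), max(q)


# Hypothetical toleranced model p1 * x + p2 = 0 with p1 in [1, 2], p2 in [5, 7].
P1, P2 = (1.0, 2.0), (5.0, 7.0)

# Solving for x gives x = -p2 / p1; each parameter occurs once,
# so this single interval evaluation is the exact solution set.
enclosure = div(neg(P2), P1)

# Point method for comparison: Monte Carlo samples of the parameters.
rng = random.Random(0)
samples = [-rng.uniform(*P2) / rng.uniform(*P1) for _ in range(1000)]
mc_range = (min(samples), max(samples))
```

Here `enclosure` is `(-7.0, -2.5)`. The sampled range typically stays strictly inside it, because random samples rarely hit the extreme parameter combinations $(p_1, p_2) = (1, 7)$ and $(2, 5)$.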

Fuzzy interval arithmetic

Interval arithmetic can also be used with membership functions for fuzzy quantities as they are used in fuzzy logic. Apart from the strict statements $x\in [x]$ and $x \not\in [x]$, intermediate values are also possible, to which real numbers $\mu \in [0,1]$ are assigned. $\mu = 1$ corresponds to definite membership, while $\mu = 0$ is non-membership. A distribution function assigns uncertainty, which can be understood as a further interval.

For fuzzy arithmetic[8] only a finite number of discrete membership levels $\mu_i \in [0,1]$ are considered. The form of such a distribution for an indistinct value can then be represented by a sequence of intervals

$$\left[x^{(1)}\right] \supset \left[x^{(2)}\right] \supset \cdots \supset \left[x^{(k)} \right].$$

The interval $[x^{(i)}]$ corresponds exactly to the fluctuation range for the level $\mu_i$.

The appropriate distribution for a function $f(x_1, \ldots, x_n)$ of indistinct values $x_1, \ldots, x_n$ with the corresponding sequences

$$\left[x_1^{(1)} \right] \supset \cdots \supset \left[x_1^{(k)} \right], \quad \ldots, \quad \left[x_n^{(1)} \right] \supset \cdots \supset \left[x_n^{(k)} \right]$$

can be approximated by the sequence

$$\left[y^{(1)}\right] \supset \cdots \supset \left[y^{(k)}\right].$$

The values $\left[y^{(i)}\right]$ are given by $\left[y^{(i)}\right] = f \left( \left[x_{1}^{(i)}\right], \ldots, \left[x_{n}^{(i)}\right]\right)$ and can be calculated by interval methods. The value $\left[y^{(1)}\right]$ corresponds to the result of an interval calculation.
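A minimal Python sketch of this level-by-level scheme, assuming triangular membership functions and the three levels $\mu \in \{0, 0.5, 1\}$ (both are illustrative choices): each fuzzy input is reduced to nested intervals, and the interval function is applied separately on every level. With $f(x_1, x_2) = x_1 x_2$ each variable occurs once, so no level suffers from dependency overestimation.

```python
def tri_cut(a, m, b, mu):
    """Level-mu interval of a triangular fuzzy number with support [a, b] and peak m."""
    return a + mu * (m - a), b - mu * (b - m)


def mul(x, y):
    """Interval product: min and max of the four endpoint products."""
    p = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return min(p), max(p)


# Assumed membership levels mu_1 < mu_2 < mu_3; lower levels give wider intervals,
# so each input becomes a nested sequence [x^(1)] > [x^(2)] > [x^(3)].
LEVELS = (0.0, 0.5, 1.0)
x1 = [tri_cut(1.0, 2.0, 3.0, mu) for mu in LEVELS]   # "about 2"
x2 = [tri_cut(2.0, 3.0, 4.0, mu) for mu in LEVELS]   # "about 3"

# [y^(i)] = f([x1^(i)], [x2^(i)]) level by level, here with f(x1, x2) = x1 * x2.
y = [mul(a, b) for a, b in zip(x1, x2)]
```

The result `y` is `[(2.0, 12.0), (3.75, 8.75), (6.0, 6.0)]`; its first entry $[2, 12] = [1,3]\cdot[2,4]$ is exactly the ordinary interval calculation on the supports.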

History

Interval arithmetic is not a completely new phenomenon in mathematics; it has appeared several times under different names in the course of history. For example Archimedes calculated lower and upper bounds 223/71 < π < 22/7 in the 3rd century BC. Actual calculation with intervals has neither been as popular as other numerical techniques, nor been completely forgotten.

Rules for calculating with intervals and other subsets of the real numbers were published in a 1931 work by Rosalind Cicely Young, a doctoral candidate at the University of Cambridge. Arithmetic work on range numbers to improve the reliability of digital systems was then published in a 1951 textbook on linear algebra by Paul Dwyer (University of Michigan); intervals were used to measure rounding errors associated with floating-point numbers. A comprehensive paper on interval algebra in numerical analysis was published by Sunaga (1958).[9]

The birth of modern interval arithmetic was marked by the appearance of the book Interval Analysis by Ramon E. Moore in 1966.[10][11] He had the idea in spring 1958, and a year later he published an article about computer interval arithmetic.[12] Its merit was that, starting with a simple principle, it provided a general method for automated error analysis, not just for errors resulting from rounding.

Independently in 1956, Mieczyslaw Warmus suggested formulae for calculations with intervals,[13] though Moore found the first non-trivial applications.

In the following twenty years, German research groups around Götz Alefeld[14] and Ulrich Kulisch[1] at the University of Karlsruhe, and later also at the Bergische University of Wuppertal, carried out pioneering work. For example, Karl Nickel explored more effective implementations, while improved containment procedures for the solution set of systems of equations were due to Arnold Neumaier among others. In the 1960s, Eldon R. Hansen dealt with interval extensions for linear equations and then provided crucial contributions to global optimisation, including what is now known as Hansen's method, perhaps the most widely used interval algorithm.[6] Classical methods in this area often have difficulty determining the largest (or smallest) global value, since they can only find a local optimum and cannot find better values; Helmut Ratschek and Jon George Rokne extended branch and bound methods, which until then had only been applied to integer values, to continuous values by using intervals.

In 1988, Rudolf Lohner developed Fortran-based software for reliable solutions of initial value problems for ordinary differential equations.[15]

The journal Reliable Computing (originally Interval Computations) has been published since the 1990s, dedicated to the reliability of computer-aided computations. As lead editor, R. Baker Kearfott, in addition to his work on global optimisation, has contributed significantly to the unification of notation and terminology used in interval arithmetic (Web: Kearfott).

In recent years, work by the COPRIN working group of INRIA in Sophia Antipolis in France has concentrated in particular on the estimation of preimages of parameterised functions and on robust control theory (Web: INRIA).

Implementations

There are many software packages that permit the development of numerical applications using interval arithmetic.[16] These are usually provided in the form of program libraries. There are also C++ and Fortran compilers that handle interval data types and suitable operations as a language extension, so interval arithmetic is supported directly.

Since 1967, Extensions for Scientific Computation (XSC) have been developed at the University of Karlsruhe for various programming languages, such as C++, Fortran and Pascal.[17] The first platform was a Zuse Z 23, for which a new interval data type with appropriate elementary operators was made available. There followed in 1976 Pascal-SC, a Pascal variant on a Zilog Z80, which made it possible to create fast, complicated routines for automated result verification. Then came the Fortran 77-based ACRITH XSC for the System/370 architecture, which was later delivered by IBM. Starting from 1991 one could produce code for C compilers with Pascal-XSC; a year later the C++ class library C-XSC became available on many different computer systems. In 1997 all XSC variants were made available under the GNU General Public License. At the beginning of 2000, C-XSC 2.0 was released under the leadership of the working group for scientific computation at the Bergische University of Wuppertal, in order to conform to the improved C++ standard.

Another C++ class library, Profil/BIAS (Programmer's Runtime Optimized Fast Interval Library, Basic Interval Arithmetic), was created in 1993 at the Hamburg University of Technology and made the usual interval operations more user-friendly. It emphasized the efficient use of hardware, portability and independence from a particular representation of intervals.

The Boost collection of C++ libraries contains a template class for intervals. Its authors are aiming to have interval arithmetic in the standard C++ language.[18]

Gaol[19] is another C++ interval arithmetic library that is unique in that it offers the relational interval operators used in interval constraint programming.

The Frink programming language has an implementation of interval arithmetic which can handle arbitrary-precision numbers. Programs written in Frink can use intervals without rewriting or recompilation.

In addition, computer algebra systems such as Mathematica, Maple and MuPAD can handle intervals. There is a Matlab extension, Intlab, which builds on BLAS routines, as well as the toolbox b4m, which provides a Profil/BIAS interface.[20] Moreover, the software Euler Math Toolbox includes interval arithmetic.

A library for the functional language OCaml was written in assembly language and C.[21]

IEEE Std 1788-2015 – IEEE standard for interval arithmetic

A standard for interval arithmetic was approved in June 2015.[22] Two reference implementations are freely available,[23] developed by members of the standard's working group: the libieeep1788[24] library for C++, and the interval package[25] for GNU Octave.

A minimalistic subset of the standard, intended to be easier to implement and to speed up the production of implementations, is currently under development.[26]

Conferences and workshops

Several international conferences and workshops take place every year. The main conference is probably SCAN (International Symposium on Scientific Computing, Computer Arithmetic, and Verified Numerical Computation), but there are also SWIM (Small Workshop on Interval Methods), PPAM (International Conference on Parallel Processing and Applied Mathematics) and REC (International Workshop on Reliable Engineering Computing).

See also

- Affine arithmetic

- Automatic differentiation

- Multigrid method

- Monte-Carlo simulation

- Interval finite element

- Fuzzy number

- Significant figures

References

- [1] Kulisch, Ulrich (1989). Wissenschaftliches Rechnen mit Ergebnisverifikation. Eine Einführung (in German). Wiesbaden: Vieweg-Verlag. ISBN 3-528-08943-1.

- [2] Dreyer, Alexander (2003). Interval Analysis of Analog Circuits with Component Tolerances. Aachen, Germany: Shaker Verlag. ISBN 3-8322-4555-3.

- [3] Petković, Miodrag; Petković, Ljiljana (1998). Complex Interval Arithmetic and its Applications. Wiley-VCH. ISBN 978-3-527-40134-5.

- [4] Dawood, Hend (2011). Theories of Interval Arithmetic: Mathematical Foundations and Applications. Saarbrücken: LAP LAMBERT Academic Publishing. ISBN 978-3-8465-0154-2.

- [5] Rohn, Jiri. List of publications.

- [6] Walster, G. William; Hansen, Eldon Robert (2004). Global Optimization using Interval Analysis (2nd ed.). New York: Marcel Dekker. ISBN 0-8247-4059-9.

- [7] Jaulin, Luc; Kieffer, Michel; Didrit, Olivier; Walter, Eric (2001). Applied Interval Analysis. Berlin: Springer. ISBN 1-85233-219-0.

- [8] Hanss, Michael. Application of Fuzzy Arithmetic to Quantifying the Effects of Uncertain Model Parameters. University of Stuttgart.

- [9] Sunaga, T. (1958). "Theory of interval algebra and its application to numerical analysis". RAAG Memoirs 2, pp. 29-46.

- [10] Moore, R. E. (1966). Interval Analysis. Englewood Cliffs, New Jersey: Prentice-Hall. ISBN 0-13-476853-1.

- [11] Cloud, Michael J.; Moore, Ramon E.; Kearfott, R. Baker (2009). Introduction to Interval Analysis. Philadelphia: Society for Industrial and Applied Mathematics. ISBN 0-89871-669-1.

- [12] Hansen, E. R. (August 13, 2001). "Publications Related to Early Interval Work of R. E. Moore". University of Louisiana. Retrieved 2015-06-29.

- [13] Warmus, M. Precursory papers on interval analysis. Archived April 18, 2008 at the Wayback Machine.

- [14] Alefeld, Götz; Herzberger, Jürgen. Einführung in die Intervallrechnung. Reihe Informatik (in German) 12. Mannheim, Wien, Zürich: B.I.-Wissenschaftsverlag. ISBN 3-411-01466-0.

- [15] Lohner, Rudolf. Bounds for ordinary differential equations (in German).

- [16] Kreinovich, Vladik. Software for Interval Computations. University of Texas at El Paso.

- [17] History of XSC-Languages.

- [18] A Proposal to add Interval Arithmetic to the C++ Standard Library.

- [19] Gaol is Not Just Another Interval Arithmetic Library.

- [20] INTerval LABoratory and b4m.

- [21] Alliot, Jean-Marc; Gotteland, Jean-Baptiste; Vanaret, Charlie; Durand, Nicolas; Gianazza, David (2012). Implementing an interval computation library for OCaml on x86/amd64 architectures. 17th ACM SIGPLAN International Conference on Functional Programming.

- [22] IEEE Standard for Interval Arithmetic.

- [23] Revol, Nathalie (2015). The (near-)future IEEE 1788 standard for interval arithmetic. 8th Small Workshop on Interval Methods. Slides (PDF).

- [24] C++ implementation of the preliminary IEEE P1788 standard for interval arithmetic.

- [25] GNU Octave interval package.

- [26] IEEE Project P1788.1.

Further reading

- Hayes, Brian (November–December 2003). "A Lucid Interval" (PDF). American Scientist (Sigma Xi) 91 (6): 484–488. doi:10.1511/2003.6.484.

External links

- Introductory Film (mpeg) of the COPRIN teams of INRIA, Sophia Antipolis

- Bibliography of R. Baker Kearfott, University of Louisiana at Lafayette

- Interval Methods from Arnold Neumaier, University of Vienna

- INTLAB, Institute for Reliable Computing, Hamburg University of Technology
