Perturbation theory

Perturbation theory comprises mathematical methods for finding an approximate solution to a problem, by starting from the exact solution of a related, simpler problem. A critical feature of the technique is a middle step that breaks the problem into "solvable" and "perturbation" parts.[1] Perturbation theory is applicable if the problem at hand cannot be solved exactly, but can be formulated by adding a "small" term to the mathematical description of the exactly solvable problem.

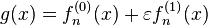

Perturbation theory leads to an expression for the desired solution in terms of a formal power series in some "small" parameter – known as a perturbation series – that quantifies the deviation from the exactly solvable problem. The leading term in this power series is the solution of the exactly solvable problem, while further terms describe the deviation in the solution, due to the deviation from the initial problem. Formally, we have for the approximation to the full solution A, a series in the small parameter (here called ε), like the following:

$$A = A_0 + \varepsilon A_1 + \varepsilon^2 A_2 + \cdots$$

In this example, A0 would be the known solution to the exactly solvable initial problem and A1, A2, ... represent the higher-order terms which may be found iteratively by some systematic procedure. For small ε these higher-order terms in the series become successively smaller.

An approximate "perturbation solution" is obtained by truncating the series, usually by keeping only the first two terms, the initial solution and the "first-order" perturbation correction:

$$A \approx A_0 + \varepsilon A_1.$$
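As a concrete illustration of truncating such a series, the following minimal sketch uses an assumed toy problem that is not taken from the text: the positive root of x² + εx − 1 = 0. At ε = 0 the root is A0 = 1, and expanding the exact root (−ε + √(ε² + 4))/2 in powers of ε gives A1 = −1/2 and A2 = 1/8. The function names are hypothetical.

```python
import math

def exact_root(eps):
    """Positive root of x**2 + eps*x - 1 = 0 (assumed toy problem)."""
    return (-eps + math.sqrt(eps * eps + 4.0)) / 2.0

# Series coefficients A0, A1, A2 for this toy problem.
COEFFS = (1.0, -0.5, 0.125)

def truncated_series(eps, order):
    """Sum A0 + eps*A1 + ... + eps**order * A_order."""
    return sum(c * eps**k for k, c in enumerate(COEFFS[: order + 1]))

for eps in (0.1, 0.01):
    for order in (0, 1, 2):
        err = abs(exact_root(eps) - truncated_series(eps, order))
        print(f"eps={eps:<5} order={order} error={err:.2e}")
```

For small ε the error shrinks each time another term is kept, which is the behaviour the truncated perturbation solution relies on.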

General description

Perturbation theory is closely related to methods used in numerical analysis. The earliest use of what would now be called perturbation theory was to deal with the otherwise unsolvable mathematical problems of celestial mechanics: for example the orbit of the Moon, which moves noticeably differently from a simple Keplerian ellipse because of the competing gravitation of the Earth and the Sun.

Perturbation methods start with a simplified form of the original problem, which is simple enough to be solved exactly. In celestial mechanics, this is usually a Keplerian ellipse. Under non-relativistic gravity, an ellipse is exactly correct when there are only two gravitating bodies (say, the Earth and the Moon) but not quite correct when there are three or more objects (say, the Earth, Moon, Sun, and the rest of the solar system) and not quite correct when the gravitational interaction is stated using formulas from general relativity.

The solved, but simplified problem is then “perturbed” to make the conditions that the perturbed solution actually satisfies closer to the real problem, such as including the gravitational attraction of a third body (the Sun). The "conditions" are a formula (or several) that represent reality, often something arising from a physical law like Newton’s second law, the force-acceleration equation,

$$\mathbf{F} = m\mathbf{a}.$$

In the case of the example, the force F is calculated based on the number of gravitationally relevant bodies; the acceleration a is obtained, using calculus, from the path of the Moon in its orbit. Both of these come in two forms: approximate values for force and acceleration, which result from simplifications, and hypothetical exact values for force and acceleration, which would require the complete answer to calculate.

The slight changes that result from accommodating the perturbation, which themselves may have been simplified yet again, are used as corrections to the approximate solution. Because of simplifications introduced along every step of the way, the corrections are never perfect, and the conditions met by the corrected solution do not perfectly match the equation demanded by reality. However, even only one cycle of corrections often provides an excellent approximate answer to what the real solution should be.

There is no requirement to stop at only one cycle of corrections. A partially corrected solution can be re-used as the new starting point for yet another cycle of perturbations and corrections. In principle, cycles of finding increasingly better corrections could go on indefinitely. In practice, one typically stops at one or two cycles of corrections. The usual difficulty with the method is that the corrections progressively make the new solutions very much more complicated, so each cycle is much more difficult to manage than the previous cycle of corrections. Isaac Newton is reported to have said, regarding the problem of the Moon's orbit, that "It causeth my head to ache."[2]

This general procedure is a widely used mathematical tool in advanced sciences and engineering: start with a simplified problem and gradually add corrections that make the formula that the corrected problem matches closer and closer to the formula that represents reality. It is the natural extension to mathematical functions of the "guess, check, and fix" method used by older civilisations to compute certain numbers, such as square roots.
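The text does not say which historical square-root procedure is meant. One commonly cited candidate is the Babylonian (Heron's) method, sketched below as an assumption: guess a value, check how far its square is from the target, and fix the guess by averaging it with the target divided by the guess. The function name and tolerances are hypothetical.

```python
def babylonian_sqrt(s, guess=1.0, tol=1e-12, max_iter=50):
    """Approximate sqrt(s) for s > 0 by repeated guess, check, and fix."""
    x = guess
    for _ in range(max_iter):
        if abs(x * x - s) <= tol * s:   # check: is the square close enough?
            break
        x = 0.5 * (x + s / x)           # fix: average x with s/x
    return x

print(babylonian_sqrt(2.0))
```

Each pass plays the role of one cycle of corrections: the previous answer is reused as the starting point for the next, better one.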

Examples

Examples for the "mathematical description" are: an algebraic equation, a differential equation (e.g., the equations of motion in celestial mechanics or a wave equation), a free energy (in statistical mechanics), a Hamiltonian operator (in quantum mechanics).

Examples for the kind of solution to be found perturbatively: the solution of the equation (e.g., the trajectory of a particle), the statistical average of some physical quantity (e.g., average magnetization), the ground state energy of a quantum mechanical problem.

Examples for the exactly solvable problems to start with: linear equations, including linear equations of motion (harmonic oscillator, linear wave equation), statistical or quantum-mechanical systems of non-interacting particles (or in general, Hamiltonians or free energies containing only terms quadratic in all degrees of freedom).

Examples of "perturbations" to deal with: nonlinear contributions to the equations of motion, interactions between particles, terms of higher powers in the Hamiltonian or free energy.

For physical problems involving interactions between particles, the terms of the perturbation series may be displayed (and manipulated) using Feynman diagrams.

History

Perturbation theory was first devised to solve otherwise intractable problems in the calculation of the motions of planets in the solar system. The gradually increasing accuracy of astronomical observations led to increasing demands on the accuracy of solutions to Newton's gravitational equations, which led several notable 18th- and 19th-century mathematicians to extend and generalize the methods of perturbation theory. These well-developed perturbation methods were adopted and adapted to solve new problems arising during the development of quantum mechanics in 20th-century atomic and subatomic physics.

Beginnings in the study of planetary motion

Since the planets are very remote from each other, and since their mass is small as compared to the mass of the Sun, the gravitational forces between the planets can be neglected, and the planetary motion is considered, to a first approximation, as taking place along Kepler's orbits, which are defined by the equations of the two-body problem, the two bodies being the planet and the Sun.[3]

As astronomical data came to be known with much greater accuracy, it became necessary to consider how the motion of a planet around the Sun is affected by other planets. This was the origin of the three-body problem; thus, in studying the system Moon–Earth–Sun the mass ratio between the Moon and the Earth was chosen as the small parameter. Lagrange and Laplace were the first to advance the view that the constants which describe the motion of a planet around the Sun are "perturbed", as it were, by the motion of other planets and vary as a function of time; hence the name "perturbation theory".[3]

Perturbation theory was investigated by the classical scholars Laplace, Poisson and Gauss, as a result of which the computations could be performed with very high accuracy. The discovery of the planet Neptune in 1846, based on Urbain Le Verrier's analysis of the deviations in the motion of the planet Uranus (he sent the predicted coordinates to Johann Gottfried Galle, who successfully observed Neptune through his telescope), represented a triumph of perturbation theory.[3]

Rise of understanding of chaotic systems

The development of basic perturbation theory for differential equations was fairly complete by the middle of the 19th century. It was at that time that Charles-Eugène Delaunay was studying the perturbative expansion for the Earth-Moon-Sun system, and discovered the so-called "problem of small denominators". Here, the denominator appearing in the n-th term of the perturbative expansion could become arbitrarily small, causing the n-th correction to be as large as or larger than the first-order correction.

At the turn of the 20th century, this problem led Henri Poincaré to make one of the first deductions of the existence of chaos, and what is poetically called the “butterfly effect”: that even a very small perturbation can ultimately have a very large effect on non-dissipative or “friction-free” dynamic systems.

A partial resolution of the small-divisor problem was given by the statement of the KAM theorem in 1954. Developed by Andrey Kolmogorov, Vladimir Arnold and Jürgen Moser, this theorem stated the conditions under which a system of partial differential equations will have only mildly chaotic behaviour under small perturbations.

Application to new problems in 20th century physics

Perturbation theory saw a particularly dramatic expansion and evolution with the arrival of quantum mechanics. Although perturbation theory was used in the semi-classical theory of the Bohr atom, the calculations were monstrously complicated, and subject to somewhat ambiguous interpretation. The discovery of Heisenberg's matrix mechanics allowed a vast simplification of the application of perturbation theory. Notable examples are the Stark effect and the Zeeman effect, which have a simple enough theory to be included in standard undergraduate textbooks in quantum mechanics. Other early applications include the fine structure and the hyperfine structure in the hydrogen atom.

In modern times, perturbation theory underlies much of quantum chemistry and quantum field theory. In chemistry, perturbation theory was used to obtain the first solutions for the helium atom.

In the middle of the 20th century, Richard Feynman realized that the perturbative expansion could be given a dramatic and beautiful graphical representation in terms of what are now called Feynman diagrams. Although originally applied only in quantum field theory, such diagrams now find increasing use in any area where perturbative expansions are studied.

Search for better methods for quantum mechanics

In the late 20th century, broad dissatisfaction with perturbation theory in the quantum physics community, including not only the difficulty of going beyond second order in the expansion but also questions about whether the perturbative expansion is even convergent, led to a strong interest in non-perturbative analysis, that is, the study of exactly solvable models.

Much of the theoretical work in non-perturbative analysis goes under the name of quantum groups and non-commutative geometry. The prototypical model is the Korteweg–de Vries equation, a highly non-linear equation for which the interesting solutions, the solitons, cannot be reached by perturbation theory, even if the perturbations were carried out to infinite order.

Perturbation orders

The standard exposition of perturbation theory is given in terms of the order to which the perturbation is carried out: first-order perturbation theory or second-order perturbation theory, and whether the perturbed states are degenerate, which requires singular perturbation. In the singular case extra care must be taken, and the theory is slightly more elaborate.

First-order, non-singular perturbation theory

This section develops, in simple terms,[4] the general theory for the perturbative solution to a differential equation to the first order. To keep the exposition simple, a crucial assumption is made: that the solutions to the unperturbed system are not degenerate, so that the perturbation series can be inverted. There are ways of dealing with the degenerate (or singular) case; these require extra care.

Suppose one wants to solve a differential equation of the form

$$D g(x) = \lambda g(x),$$

where D is some specific differential operator, and λ is an eigenvalue. Many problems involving ordinary or partial differential equations can be cast in this form.

It is presumed that the differential operator can be written in the form

$$D = D^{(0)} + \varepsilon D^{(1)},$$

where ε is presumed to be small, and that, furthermore, the complete set of solutions for $D^{(0)}$ is known.

That is, one has a set of solutions $f_n^{(0)}(x)$, labelled by some arbitrary index n, such that

$$D^{(0)} f_n^{(0)}(x) = \lambda_n^{(0)} f_n^{(0)}(x).$$

Furthermore, one assumes that the set of solutions $\{f_n^{(0)}(x)\}$ form an orthonormal set,

$$\int f_m^{(0)}(x)\, f_n^{(0)}(x)\,dx = \delta_{mn},$$

with δmn the Kronecker delta function.

To zeroth order, one expects that the solutions g(x) are then somehow "close" to one of the unperturbed solutions $f_n^{(0)}(x)$. That is,

$$g(x) = f_n^{(0)}(x) + \mathcal{O}(\varepsilon)$$

and

$$\lambda = \lambda_n^{(0)} + \mathcal{O}(\varepsilon),$$

where $\mathcal{O}$ denotes the relative size, in big-O notation, of the perturbation.

To solve this problem, one assumes that the solution g(x) can be written as a linear combination of the $f_m^{(0)}(x)$,

$$g(x) = \sum_m c_m f_m^{(0)}(x),$$

with all of the constants $c_m = \mathcal{O}(\varepsilon)$ except for n, where $c_n = \mathcal{O}(1)$.

Substituting this last expansion into the differential equation, taking the inner product of the result with $f_n^{(0)}(x)$, and making use of orthogonality, one obtains

$$c_n \lambda_n^{(0)} + \varepsilon \sum_m c_m \int f_n^{(0)}(x)\, D^{(1)} f_m^{(0)}(x)\,dx = \lambda c_n.$$

This can be trivially rewritten as a simple linear algebra problem of finding the eigenvalue of a matrix,

$$\sum_m A_{nm} c_m = \lambda c_n,$$

where the matrix elements Anm are given by

$$A_{nm} = \delta_{nm} \lambda_n^{(0)} + \varepsilon \int f_n^{(0)}(x)\, D^{(1)} f_m^{(0)}(x)\,dx.$$

Rather than solving this full matrix equation, one notes that, of all the cm in the linear equation, only one, namely cn, is not small. Thus, to the first order in ε, the linear equation may be solved trivially as

$$\lambda = \lambda_n^{(0)} + \varepsilon \int f_n^{(0)}(x)\, D^{(1)} f_n^{(0)}(x)\,dx,$$

since all of the other terms in the linear equation are of order $\mathcal{O}(\varepsilon^2)$. The above gives the solution of the eigenvalue to first order in perturbation theory.

The function g(x) to first order is obtained through similar reasoning. Substituting

$$g(x) = f_n^{(0)}(x) + \varepsilon f_n^{(1)}(x),$$

so that

$$\left(D^{(0)} + \varepsilon D^{(1)}\right)\left(f_n^{(0)}(x) + \varepsilon f_n^{(1)}(x)\right) = \left(\lambda_n^{(0)} + \varepsilon \lambda_n^{(1)}\right)\left(f_n^{(0)}(x) + \varepsilon f_n^{(1)}(x)\right),$$

with $\varepsilon \lambda_n^{(1)}$ the first-order eigenvalue shift found above, gives an equation for $f_n^{(1)}(x)$.

It may be solved integrating with the partition of unity

$$\delta(x - y) = \sum_m f_m^{(0)}(x)\, f_m^{(0)}(y)$$

to give

$$f_n^{(1)}(x) = \sum_{m \neq n} \frac{f_m^{(0)}(x)}{\lambda_n^{(0)} - \lambda_m^{(0)}} \int f_m^{(0)}(y)\, D^{(1)} f_n^{(0)}(y)\,dy,$$

which finally gives the exact solution to the perturbed differential equation to first order in the perturbation ε.
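The first-order results can be checked on a finite-dimensional analogue. The sketch below is an illustration under stated assumptions, not part of the derivation: the differential operator is replaced by a 2 × 2 real symmetric matrix D0 + ε·D1, with D0 diagonal and non-degenerate, and the numerical values of `LAM0` and `D1` are invented. The first-order eigenvalue is the unperturbed eigenvalue plus ε times the diagonal element of D1, and the first-order admixture of the other state is ε times the off-diagonal element divided by the eigenvalue difference.

```python
import math

# Assumed toy data: D0 is diagonal with distinct eigenvalues, D1 is symmetric.
LAM0 = (1.0, 3.0)
D1 = ((0.5, 0.2),
      (0.2, -0.3))

def exact_lower_eigenpair(eps):
    """Lower eigenvalue of D0 + eps*D1 and the ratio c_1/c_0 of its eigenvector.

    Requires eps != 0 so that the off-diagonal element is nonzero.
    """
    a = LAM0[0] + eps * D1[0][0]
    b = eps * D1[0][1]
    c = LAM0[1] + eps * D1[1][1]
    lam = 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)
    return lam, (lam - a) / b

def first_order_lower_eigenpair(eps):
    """First-order estimates for the state that starts at LAM0[0]."""
    lam = LAM0[0] + eps * D1[0][0]               # eigenvalue shift
    mix = eps * D1[1][0] / (LAM0[0] - LAM0[1])   # admixture of the other state
    return lam, mix

for eps in (0.1, 0.01):
    print(eps, exact_lower_eigenpair(eps), first_order_lower_eigenpair(eps))
```

The discrepancy between the two pairs shrinks roughly like ε², as expected for a first-order approximation.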

Several observations may be made about the form of this solution. First, the sum over functions with differences of eigenvalues in the denominator evokes the resolvent in Fredholm theory. This is no accident; the resolvent acts essentially as a kind of Green's function or propagator, passing the perturbation along. Higher-order perturbations resemble this form, with an additional sum over a resolvent appearing at each order.

The form of this solution also illustrates the idea behind the small-divisor problem. If, for whatever reason, two eigenvalues are close, so that the difference $\lambda_n^{(0)} - \lambda_m^{(0)}$ between two unperturbed eigenvalues becomes small, the corresponding term in the above sum will become disproportionately large. In particular, if this happens in higher-order terms, the higher-order perturbation may become as large as or larger in magnitude than the first-order perturbation. Such a situation calls into question the validity of utilizing a perturbative analysis to begin with, which can be understood to be a fairly catastrophic situation; it is frequently encountered in chaotic dynamical systems, and requires the development of techniques other than perturbation theory to solve the problem.

Curiously, the situation is not at all bad if two or more eigenvalues are exactly equal. This case is referred to as singular or degenerate perturbation theory, addressed below. The degeneracy of eigenvalues indicates that the unperturbed system has some sort of symmetry, and that the generators of that symmetry commute with the unperturbed differential operator. Typically, the perturbing term does not possess the symmetry, and so the full solutions do not, either; one says that the perturbation lifts or breaks the degeneracy. In this case, the perturbation can still be performed, as in the following sections; however, care must be taken to work in a basis for the unperturbed states chosen so that these map one-to-one to the perturbed states, rather than being a mixture.

Perturbation theory of degenerate states

One may note that a problem occurs in the above first-order perturbation theory when two or more eigenfunctions of the unperturbed system correspond to the same eigenvalue, i.e. when the eigenvalue equation becomes

$$D^{(0)} f_{n,i}^{(0)}(x) = \lambda_n^{(0)} f_{n,i}^{(0)}(x),$$

and the index i labels several states with the same eigenvalue $\lambda_n^{(0)}$ (here $D^{(0)}$ denotes the unperturbed operator and $\varepsilon D^{(1)}$ the perturbation). The expression for the eigenfunctions, which has eigenvalue (energy) differences in the denominators, becomes infinite. In that case, degenerate perturbation theory must be applied.

The degeneracy must first be removed before higher-order perturbation theory can be applied. First, consider the eigenfunction which is a linear combination of eigenfunctions with the same eigenvalue only,

$$g(x) = \sum_k c_{n,k} f_{n,k}^{(0)}(x),$$

which, again from the orthogonality of $f_{n,k}^{(0)}$, leads to the following equation,

$$c_{n,i} \lambda_n^{(0)} + \varepsilon \sum_k c_{n,k} \int f_{n,i}^{(0)}(x)\, D^{(1)} f_{n,k}^{(0)}(x)\,dx = \lambda c_{n,i},$$

for each n and each i labelling a degenerate state.

As for the majority of low quantum numbers n, i changes over a small range of integers, so often the latter equation can be solved analytically as an at most 4 × 4 matrix equation. Once the degeneracy is removed, the first and any higher order of the above perturbation theory may be applied using the new eigenfunctions.
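The small matrix problem described above can be sketched for the simplest case of a two-fold degenerate level. In the following illustration, the shared eigenvalue `LAM0` and the 2 × 2 symmetric matrix `V` (the perturbing operator restricted to the degenerate subspace) are assumed values chosen for the example. To first order, the degenerate level splits into the unperturbed eigenvalue plus ε times each eigenvalue of `V`.

```python
import math

LAM0 = 2.0                      # shared unperturbed eigenvalue (assumed)
V = ((0.3, 0.4),
     (0.4, -0.3))               # perturbation within the degenerate subspace (assumed)

def symmetric_2x2_eigenvalues(m):
    """Eigenvalues (ascending) of a real symmetric 2x2 matrix."""
    (a, b), (_, c) = m
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    return mean - radius, mean + radius

def split_levels(eps):
    """First-order energies of the two states that emerge from the degenerate level."""
    lo, hi = symmetric_2x2_eigenvalues(V)
    return LAM0 + eps * lo, LAM0 + eps * hi

print(split_levels(0.1))
```

The eigenvectors of `V` give the combinations of the degenerate states that should be used as the new zeroth-order basis; coupling to states outside the degenerate subspace only enters at higher order.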

An example of second-order singular perturbation theory

Consider the following equation for the unknown variable x:

$$x = 1 + \varepsilon x^5.$$

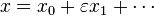

For the initial problem with ε = 0, the solution is x0 = 1. For small ε the lowest-order approximation may be found by inserting the ansatz

$$x = x_0 + \varepsilon x_1 + \cdots$$

into the equation and demanding the equation to be fulfilled up to terms that involve powers of ε higher than the first. This yields x1 = 1. In the same way, the higher orders may be found. However, even in this simple example it may be observed that for (arbitrarily) small positive ε there are four other solutions to the equation (with very large magnitude). The reason we don't find these solutions in the above perturbation method is that these solutions diverge when ε → 0 while the ansatz assumes regular behavior in this limit.

The four additional solutions can be found using the methods of singular perturbation theory. In this case this works as follows. Since the four solutions diverge at ε = 0, it makes sense to rescale x. We put

$$x = y\,\varepsilon^{-\nu}$$

such that in terms of y the solutions stay finite. This means that we need to choose the exponent ν to match the rate at which the solutions diverge. In terms of y the equation reads:

$$\varepsilon^{-\nu} y = 1 + \varepsilon^{1 - 5\nu} y^5.$$

The 'right' value for ν is obtained when the exponent of ε in the prefactor of the term proportional to y is equal to the exponent of ε in the prefactor of the term proportional to $y^5$, i.e. when ν = 1/4. This is called 'significant degeneration'. If we choose ν larger, then the four solutions will collapse to zero in terms of y and they will become degenerate with the solution we found above. If we choose ν smaller, then the four solutions will still diverge to infinity.

Putting ν = 1/4 in the above equation yields:

$$y = \varepsilon^{1/4} + y^5.$$

This equation can be solved using ordinary perturbation theory in the same way as the regular expansion for x was obtained. Since the expansion parameter is now $\varepsilon^{1/4}$ we put:

$$y = y_0 + \varepsilon^{1/4} y_1 + \varepsilon^{1/2} y_2 + \cdots$$

There are five solutions for y0: {0, ±1, ±i}. We must disregard the solution y = 0 since it corresponds to the original regular solution which appears to be at zero for ε = 0, because in the limit ε → 0 we are rescaling by an infinite amount. The next term is y1 = −1/4. In terms of x the four solutions are thus given as:

$$x = \varepsilon^{-1/4}\left[y_0 - \tfrac{1}{4}\varepsilon^{1/4} + \cdots\right]$$

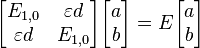
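The regular and singular expansions in this example can be compared with numerically refined roots. The sketch below assumes the equation under discussion is x = 1 + εx⁵, the form consistent with x0 = 1, x1 = 1 and ν = 1/4 quoted above. It starts Newton's method from the perturbative estimates 1 + ε and ε^(−1/4)·y0 − 1/4, and the function names are hypothetical.

```python
def f(x, eps):
    """Residual of the assumed equation x = 1 + eps*x**5."""
    return eps * x**5 - x + 1.0

def newton(x, eps, steps=50):
    """Refine a root estimate of eps*x**5 - x + 1 = 0 by Newton's method."""
    for _ in range(steps):
        x = x - f(x, eps) / (5.0 * eps * x**4 - 1.0)
    return x

def perturbative_guesses(eps):
    """Regular root 1 + eps, then the four singular roots eps**-0.25 * y0 - 1/4."""
    scale = eps ** -0.25
    return [1.0 + eps] + [scale * y0 - 0.25 for y0 in (1, -1, 1j, -1j)]

EPS = 1e-4
GUESSES = perturbative_guesses(EPS)
ROOTS = [newton(complex(g), EPS) for g in GUESSES]

for g, r in zip(GUESSES, ROOTS):
    print(f"guess {g:.4f} -> root {r:.4f}")
```

All five estimates land close to a distinct root, and the four large roots grow like ε^(−1/4) as ε shrinks, which is why the regular ansatz cannot reach them.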

Example of degenerate perturbation theory – Stark effect in resonant rotating wave

Let us consider a hydrogen atom rotating with a constant angular frequency ω in an electric field. The Hamiltonian is given by:

$$H = H_0 + \varepsilon x,$$

where the unperturbed Hamiltonian is

$$H_0 = -\frac{1}{2}\nabla^2 - \frac{1}{r} - \omega L_z,$$

and Lz is the operator for the z-component of angular momentum: Lz = −i∂/∂φ. The perturbation εx can be seen as the strength of the applied electric field multiplied by one of the space coordinates. (This calculation is in atomic units, so that every quantity is dimensionless.)

The eigenvalues of H0 are

$$E_{n,m} = -\frac{1}{2n^2} - m\omega.$$

For the lowest energy eigenstates of hydrogen, $|1\rangle \equiv |n=1, m=0\rangle$ and $|2\rangle \equiv |n=2, m=1\rangle$, in the resonance E2,1 − E1,0 = 0 their energies are therefore equal, E1,0 = E2,1 = −1/2, while the eigenstates are different.

The eigenvalue equation for the Hamiltonian, written for the amplitudes a1 and a2 of the states $|1\rangle$ and $|2\rangle$, takes the form

$$a_1 E_{1,0} + \varepsilon a_2 d = E a_1,$$

$$a_2 E_{2,1} + \varepsilon a_1 d = E a_2,$$

where

$$d = \langle 1 | x | 2 \rangle,$$

which leads to a quadratic equation that can be readily solved,

$$\left(E - E_{1,0}\right)^2 = \varepsilon^2 d^2,$$

with the solution

$$E = E_{1,0} \pm \varepsilon d, \qquad |\pm\rangle = \frac{1}{\sqrt{2}}\left(|1\rangle \pm |2\rangle\right).$$

These states are the Stark states in the rotating frame; they are Trojan (higher eigenvalue) and anti-Trojan wavepackets.

Some modern applications and limitations

Both regular and singular perturbation theory are frequently used in physics and engineering. Regular perturbation theory may only be used to find those solutions of a problem that evolve smoothly out of the initial solution when changing the parameter (that are "adiabatically connected" to the initial solution).

A well-known example from physics where regular perturbation theory fails is in fluid dynamics when one treats the viscosity as a small parameter. Close to a boundary, the fluid velocity goes to zero, even for very small viscosity (the no-slip condition). For zero viscosity, it is not possible to impose this boundary condition and a regular perturbative expansion amounts to an expansion about an unrealistic physical solution. Singular perturbation theory can, however, be applied here and this amounts to 'zooming in' at the boundaries (using the method of matched asymptotic expansions).

Perturbation theory can fail when the system can transition to a different "phase" of matter, with a qualitatively different behaviour, that cannot be modelled by the physical formulas put into the perturbation theory (e.g., a solid crystal melting into a liquid). In some cases, this failure manifests itself by divergent behavior of the perturbation series. Such divergent series can sometimes be resummed using techniques such as Borel resummation.

Perturbation techniques can also be used to find approximate solutions to non-linear differential equations. Examples of techniques used to find approximate solutions to these types of problems are the Lindstedt–Poincaré technique and the method of multiple time scales.
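As an illustration of what such techniques deliver, the following sketch uses an assumed example that is not taken from the text: the undamped Duffing oscillator x″ + x + εx³ = 0 released from rest at amplitude a. The first-order Lindstedt–Poincaré result for its angular frequency is 1 + (3/8)εa². The code compares this with a frequency measured from a direct Runge–Kutta integration; the function names and step size are hypothetical choices.

```python
import math

def duffing_frequency(eps, amplitude, dt=1e-3):
    """Angular frequency of x'' + x + eps*x**3 = 0 with x(0)=amplitude>0, x'(0)=0.

    Integrates with classical RK4 until x first crosses zero, which by
    symmetry happens after a quarter period.
    """
    def accel(x):
        return -x - eps * x**3

    x, v, t = amplitude, 0.0, 0.0
    while True:
        k1x, k1v = v, accel(x)
        k2x, k2v = v + 0.5 * dt * k1v, accel(x + 0.5 * dt * k1x)
        k3x, k3v = v + 0.5 * dt * k2v, accel(x + 0.5 * dt * k2x)
        k4x, k4v = v + dt * k3v, accel(x + dt * k3x)
        x_new = x + dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6.0
        v_new = v + dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
        if x_new <= 0.0:
            # Linear interpolation for the zero-crossing time.
            t_cross = t + dt * x / (x - x_new)
            return 2.0 * math.pi / (4.0 * t_cross)
        x, v, t = x_new, v_new, t + dt

def lindstedt_poincare_frequency(eps, amplitude):
    """First-order Lindstedt-Poincare estimate: 1 + (3/8)*eps*amplitude**2."""
    return 1.0 + 0.375 * eps * amplitude**2

print(duffing_frequency(0.1, 1.0), lindstedt_poincare_frequency(0.1, 1.0))
```

The first-order estimate captures most of the frequency shift away from the linear value 1; the remaining gap is of second order in ε.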

There is absolutely no guarantee that perturbative methods result in a convergent solution. In fact, asymptotic series are the norm.
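A classical illustration of an asymptotic but divergent series, offered here as an assumed example rather than one drawn from the text, is the integral of exp(−t)/(1 + εt) over t from 0 to infinity. Its formal expansion in ε has coefficients (−1)^k k!, so the series diverges for every nonzero ε, yet its first few partial sums approximate the integral well. The sketch below compares partial sums with a Simpson-rule estimate of the integral; the cutoff and grid size are hypothetical choices.

```python
import math

def stieltjes_integral(eps, upper=60.0, n=60000):
    """Composite Simpson estimate of the integral of exp(-t)/(1+eps*t) on [0, upper].

    n must be even; the tail beyond `upper` is negligible because of exp(-t).
    """
    h = upper / n
    g = lambda t: math.exp(-t) / (1.0 + eps * t)
    total = g(0.0) + g(upper)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(k * h)
    return total * h / 3.0

def partial_sum(eps, order):
    """Sum of (-1)**k * k! * eps**k for k = 0..order."""
    return sum((-1) ** k * math.factorial(k) * eps**k for k in range(order + 1))

EPS = 0.1
REFERENCE = stieltjes_integral(EPS)
ERRORS = {order: abs(partial_sum(EPS, order) - REFERENCE) for order in (2, 9, 30)}
print(ERRORS)
```

The error first decreases as terms are added and then grows without bound, so the best accuracy is obtained by stopping near the smallest term rather than by summing to high order.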

Perturbation theory in chemistry

Many of the ab initio quantum chemistry methods use perturbation theory directly or are closely related to it. Implicit perturbation theory[5] works with the complete Hamiltonian from the very beginning and never specifies a perturbation operator as such. Møller–Plesset perturbation theory uses the difference between the Hartree–Fock Hamiltonian and the exact non-relativistic Hamiltonian as the perturbation. The zero-order energy is the sum of orbital energies. The first-order energy is the Hartree–Fock energy, and electron correlation is included at second order or higher. Calculations to second, third or fourth order are very common and the code is included in most ab initio quantum chemistry programs. A related but more accurate method is the coupled cluster method.

See also

- Cosmological perturbation theory

- Dynamic nuclear polarisation

- Alternative approach to perturbation theory[6]

- Eigenvalue perturbation

- Interval FEM

- Orders of approximation

- Structural stability

- Lyapunov stability

References

- William E. Wiesel (2010). Modern Astrodynamics. Ohio: Aphelion Press. p. 107. ISBN 978-145378-1470.

- Cropper, William H. (2004), Great Physicists: The Life and Times of Leading Physicists from Galileo to Hawking, Oxford University Press, p. 34, ISBN 978-0-19-517324-6.

- Perturbation theory. N. N. Bogolyubov, jr. (originator), Encyclopedia of Mathematics. URL: http://www.encyclopediaofmath.org/index.php?title=Perturbation_theory&oldid=11676

- Sakurai, J.J., and Napolitano, J. (1964, 2011). Modern quantum mechanics (2nd ed.), Addison Wesley. ISBN 978-0-8053-8291-4. Chapter 5.

- King, Matcha (1976). "Theory of the Chemical Bond". JACS 98 (12): 3415–3420. doi:10.1021/ja00428a004.

- Martínez-Carranza, J.; Soto-Eguibar, F.; Moya-Cessa, H. (2012). "Alternative analysis to perturbation theory in quantum mechanics". The European Physical Journal D 66. doi:10.1140/epjd/e2011-20654-5.

External links

- Introduction to regular perturbation theory by Eric Vanden-Eijnden (PDF)

- Perturbation Method of Multiple Scales