Hensel's lemma

In mathematics, Hensel's lemma, also known as Hensel's lifting lemma, named after Kurt Hensel, is a result in modular arithmetic, stating that if a polynomial equation has a simple root modulo a prime number p, then this root corresponds to a unique root of the same equation modulo any higher power of p, which can be found by iteratively "lifting" the solution modulo successive powers of p. More generally it is used as a generic name for analogues for complete commutative rings (including p-adic fields in particular) of the Newton method for solving equations. Since p-adic analysis is in some ways simpler than real analysis, there are relatively neat criteria guaranteeing a root of a polynomial.

Statement

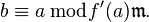

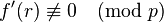

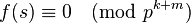

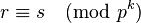

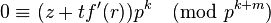

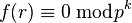

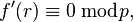

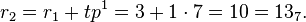

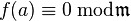

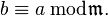

Let  be a polynomial with integer (or p-adic integer) coefficients, and let m,k be positive integers such that m ≤ k. If r is an integer such that

be a polynomial with integer (or p-adic integer) coefficients, and let m,k be positive integers such that m ≤ k. If r is an integer such that

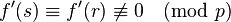

and

and

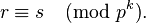

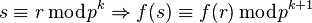

then there exists an integer s such that

and

and

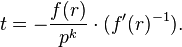

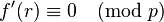

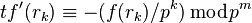

Furthermore, this s is unique modulo pk+m, and can be computed explicitly as

where

where

In this formula for t, the division by pk denotes ordinary integer division (where the remainder will be 0), while negation, multiplication, and multiplicative inversion  are performed in

are performed in  .

.

As an aside, if  , then 0, 1, or several s may exist (see Hensel Lifting below).

, then 0, 1, or several s may exist (see Hensel Lifting below).

Derivation

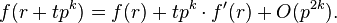

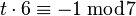

The lemma derives from considering the Taylor expansion of f around r. From  , we see that s has to be of the form s = r + tpk for some integer t. Expanding

, we see that s has to be of the form s = r + tpk for some integer t. Expanding  gives

gives

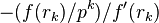

Reducing both sides modulo pk+m, we see that for  to hold, we need

to hold, we need

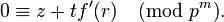

where the O(p2k) terms vanish because k+m ≤ 2k. Then we note that  for some integer z since r is a root of f mod pk, so

for some integer z since r is a root of f mod pk, so

,

,

which is to say

Then substituting back f(r)/pk for z and solving for t in  gives the explicit formula for t mentioned above. The assumption that

gives the explicit formula for t mentioned above. The assumption that  is not divisible by p ensures that

is not divisible by p ensures that  has an inverse mod

has an inverse mod  which is necessarily unique. Hence a solution for t exists uniquely modulo

which is necessarily unique. Hence a solution for t exists uniquely modulo  , and s exists uniquely modulo

, and s exists uniquely modulo  .

.

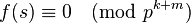

Hensel Lifting

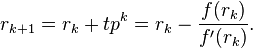

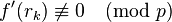

Using the lemma, one can "lift" (i.e. add multiples of the next power of p) a root r of the polynomial f mod pk to a new root s mod pk+1 such that r ≡ s mod pk (by taking m=1; taking larger m follows by induction). In fact, a root mod pk+1 is also a root mod pk, so the roots mod pk+1 are precisely the liftings of roots mod pk. The new root s is congruent to r mod p, so the new root also satisfies  . So the lifting can be repeated, and starting from a solution rk of

. So the lifting can be repeated, and starting from a solution rk of  we can derive a sequence of solutions rk+1, rk+2, ... of the same congruence for successively higher powers of p, provided

we can derive a sequence of solutions rk+1, rk+2, ... of the same congruence for successively higher powers of p, provided  for the initial root rk. This also shows that f has the same number of roots mod pk as mod pk+1, mod p k+2, or any other higher power of p, provided the roots of f mod pk are all simple.

for the initial root rk. This also shows that f has the same number of roots mod pk as mod pk+1, mod p k+2, or any other higher power of p, provided the roots of f mod pk are all simple.

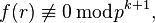

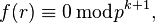

What happens to this process if r is not a simple root mod p? If we have a root mod pk at which the derivative mod p is 0, then there is not a unique lifting of a root mod pk to a root mod pk+1: either there is no lifting to a root mod pk+1 or there are multiple choices:

- if

and

and  then

then  .

.

- if

That is,  for all integers t.

Therefore if

for all integers t.

Therefore if  then there is no lifting of r to a root of f(x) mod pk+1, while if

then there is no lifting of r to a root of f(x) mod pk+1, while if  then every lifting of r to modulus pk+1 is a root of f(x) mod pk+1.

then every lifting of r to modulus pk+1 is a root of f(x) mod pk+1.

To see the difficulty that can arise in concrete examples, take p = 2, f(x) = x2 + 1, and r = 1. Then f(1) ≡ 0 mod 2 and f'(1) ≡ 0 mod 2. We have f(1) = 2 ≠ 0 mod 4 which means that no lifting of 1 to modulus 4 is a root of f(x) mod 4.

On the other hand, if we take f(x) = x2 − 17 then, as before, 1 is a root of f(x) mod 2 and the derivative is 0 mod 2. But since f(1) is 0 mod 4, then we can lift our solution to modulo 4 and both 1 and 3 are solutions. The derivative is still 0 mod 2, so a priori we don't know whether we can lift them to modulo 8, but in fact we can, since f(1) is 0 mod 8 and f(3) is 0 mod 8, giving solutions at 1, 3, 5, and 7 mod 8. Since of these only f(1) and f(7) are 0 mod 16 we can lift only 1 and 7 to modulo 16, giving 1, 7, 9, and 15 mod 16. Of these, only 7 and 9 give f(x)=0 mod 32, so these can be raised giving 7, 9, 23, and 25 mod 32. It turns out that (for this example f(x)) for every positive integer k there are four liftings of 1 mod 2 to a root of f(x) mod 2k.

Hensel's Lemma for p-adic Numbers

In the p-adic numbers, where we can make sense of rational numbers modulo powers of p as long as the denominator is not a multiple of p, the recursion from rk (roots mod pk) to rk+1 (roots mod pk+1) can be expressed in a much more intuitive way. Instead of choosing t to be an(y) integer which solves the congruence

, let t be the rational number

, let t be the rational number  (the pk here is not really a denominator since f(rk) is divisible by pk). Then set

(the pk here is not really a denominator since f(rk) is divisible by pk). Then set

This fraction may not be an integer, but it is a p-adic integer, and the sequence of numbers rk converges in the p-adic integers to a root of f(x) = 0. Moreover, the displayed recursive formula for the (new) number rk+1 in terms of rk is precisely Newton's method for finding roots to equations in the real numbers.

By working directly in the p-adics and using the p-adic absolute value, there is a version of Hensel's lemma which can be applied even if we start with a solution of f(a) ≡ 0 mod p such that f'(a) ≡ 0 mod p. We just need to make sure the number f'(a) is not exactly 0. This more general version is as follows: if there is an integer a which satisfies |f(a)|p < |f′(a)|p2, then there is a unique p-adic integer b such f(b) = 0 and |b-a|p < |f'(a)|p. The construction of b amounts to showing that the recursion from Newton's method with initial value a converges in the p-adics and we let b be the limit. The uniqueness of b as a root fitting the condition |b-a|p < |f'(a)|p needs additional work.

The statement of Hensel's lemma given above (taking  ) is a special case of this more general version, since the conditions that f(a) ≡ 0 mod p and f'(a) ≠ 0 mod p say that |f(a)|p < 1 and |f'(a)|p = 1.

) is a special case of this more general version, since the conditions that f(a) ≡ 0 mod p and f'(a) ≠ 0 mod p say that |f(a)|p < 1 and |f'(a)|p = 1.

Examples

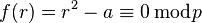

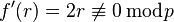

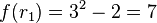

Suppose that p is an odd prime number and a is a quadratic residue modulo p that is nonzero mod p. Then Hensel's lemma implies that a has a square root in the ring of p-adic integers Zp. Indeed, let f(x)=x2-a. Its derivative is 2x, so if r is a square root of a mod p we have

-

and

and  ,

,

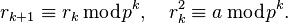

where the second condition depends on p not being 2. The basic version of Hensel's lemma tells us that starting from r1= r we can recursively construct a sequence of integers { rk } such that

This sequence converges to some p-adic integer b and b2=a. In fact, b is the unique square root of a in Zp congruent to r1 modulo p. Conversely, if a is a perfect square in Zp and it is not divisible by p then it is a nonzero quadratic residue mod p. Note that the quadratic reciprocity law allows one to easily test whether a is a nonzero quadratic residue mod p, thus we get a practical way to determine which p-adic numbers (for p odd) have a p-adic square root, and it can be extended to cover the case p=2 using the more general version of Hensel's lemma (an example with 2-adic square roots of 17 is given later).

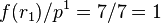

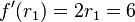

To make the discussion above more explicit, let us find a "square root of 2" (the solution to  ) in the 7-adic integers. Modulo 7 one solution is 3 (we could also take 4), so we set

) in the 7-adic integers. Modulo 7 one solution is 3 (we could also take 4), so we set  . Hensel's lemma then allows us to find

. Hensel's lemma then allows us to find  as follows:

as follows:

that is,

that is,

And sure enough,  . (If we had used the Newton method recursion directly in the 7-adics, then r2 = r1 - f(r1)/f'(r1) = 3 - 7/6 = 11/6, and 11/6 ≡ 10 mod 72.)

. (If we had used the Newton method recursion directly in the 7-adics, then r2 = r1 - f(r1)/f'(r1) = 3 - 7/6 = 11/6, and 11/6 ≡ 10 mod 72.)

We can continue and find  . Each time we carry out the calculation (that is, for each successive value of k), one more base 7 digit is added for the next higher power of 7. In the 7-adic integers this sequence converges, and the limit is a square root of 2 in Z7 which has initial 7-adic expansion

. Each time we carry out the calculation (that is, for each successive value of k), one more base 7 digit is added for the next higher power of 7. In the 7-adic integers this sequence converges, and the limit is a square root of 2 in Z7 which has initial 7-adic expansion

If we started with the initial choice  then Hensel's lemma would produce a square root of 2 in Z7 which is congruent to 4 (mod 7) instead of 3 (mod 7) and in fact this second square root would be the negative of the first square root (which is consistent with 4 = -3 mod 7).

then Hensel's lemma would produce a square root of 2 in Z7 which is congruent to 4 (mod 7) instead of 3 (mod 7) and in fact this second square root would be the negative of the first square root (which is consistent with 4 = -3 mod 7).

As an example where the original version of Hensel's lemma is not valid but the more general one is, let f(x) = x2 - 17 and a = 1. Then f(a) = -16 and f'(a) = 2, so |f(a)|2 < |f′(a)|22, which implies there is a unique 2-adic integer b satisfying b2 = 17 and |b- a|2 < |f'(a)|2 = 1/2, i.e., b ≡ 1 mod 4. There are two square roots of 17 in the 2-adic integers, differing by a sign, and although they are congruent mod 2 they are not congruent mod 4. This is consistent with the general version of Hensel's lemma only giving us a unique 2-adic square root of 17 that is congruent to 1 mod 4 rather than mod 2. If we had started with the initial approximate root a = 3 then we could apply the more general Hensel's lemma again to find a unique 2-adic square root of 17 which is congruent to 3 mod 4. This is the other 2-adic square root of 17.

In terms of lifting roots of x2 - 17 from one modulus 2k to the next 2k+1, the lifts starting with the root 1 mod 2 are as follows:

- 1 mod 2 --> 1, 3 mod 4

- 1 mod 4 --> 1, 5 mod 8 and 3 mod 4 ---> 3, 7 mod 8

- 1 mod 8 --> 1, 9 mod 16 and 7 mod 8 ---> 7, 15 mod 16, while 3 mod 8 and 5 mod 8 don't lift to roots mod 16

- 9 mod 16 --> 9, 25 mod 32 and 7 mod 16 --> 7, 23 mod 16, while 1 mod 16 and 15 mod 16 don't lift to roots mod 32.

For every k at least 3, there are four roots of x2 - 17 mod 2k, but if we look at their 2-adic expansions we can see that in pairs they are converging to just two 2-adic limits. For instance, the four roots mod 32 break up into two pairs of roots which each look the same mod 16:

- 9 = 1 + 23 and 25 = 1 + 23 + 24, 7 = 1 + 2 + 22 and 23 = 1 + 2 + 22 + 24.

The 2-adic square roots of 17 have expansions

- 1 + 23 + 25 + 26 + 27 + 29 + 210 + ..., 1 + 2 + 22 + 24 + 28 + 211...

Another example where we can use the more general version of Hensel's lemma but not the basic version is a proof that any 3-adic integer c ≡ 1 mod 9 is a cube in Z3. Let f(x) = x3 - c and take initial approximation a = 1. The basic Hensel's lemma cannot be used to find roots of f(x) since f'(r) ≡ 0 mod 3 for every r. To apply the general version of Hensel's lemma we want |f(1)|3 < |f'(1)|32, which means c ≡ 1 mod 27. That is, if c ≡ 1 mod 27 then the general Hensel's lemma tells us f(x) has a 3-adic root, so c is a 3-adic cube. However, we wanted to have this result under the weaker condition that c ≡ 1 mod 9. If c ≡ 1 mod 9 then c ≡ 1, 10, or 19 mod 27. We can apply the general Hensel's lemma three times depending on the value of c mod 27: if c ≡ 1 mod 27 then use a = 1, if c ≡ 10 mod 27 then use a = 4 (since 4 is a root of f(x) mod 27), and if c ≡ 19 mod 27 then use a = 7. (It is not true that every c ≡ 1 mod 3 is a 3-adic cube, e.g., 4 is not a 3-adic cube since it is not a cube mod 9.)

In a similar way, after some preliminary work Hensel's lemma can be used to show that for any odd prime number p, any p-adic integer c which is 1 mod p2 is a p-th power in Zp. (This is false when p is 2.)

Generalizations

Suppose A is a commutative ring, complete with respect to an ideal  , and let

, and let ![f(x) \in A[x]](../I/m/d66301cbedf7f93d6de603223425624d.png) be a polynomial with coefficients in A. Then if a ∈ A is an "approximate root" of f in the sense that it satisfies

be a polynomial with coefficients in A. Then if a ∈ A is an "approximate root" of f in the sense that it satisfies

then there is an exact root b ∈ A of f "close to" a; that is,

and

Further, if f ′(a) is not a zero-divisor then b is unique.

As a special case, if  and f ′(a) is a unit in A then there is a unique solution to f(b) = 0 in A such that

and f ′(a) is a unit in A then there is a unique solution to f(b) = 0 in A such that

This result can be generalized to several variables as follows:

Theorem: Let A be a commutative ring that is complete with respect to an ideal m ⊂ A and fi(x) ∈ A[x1, …, xn] for i = 1,...,n be a system of n polynomials in n variables over A. Let f = (f1,...,fn), viewed as a mapping from An to An, and let Jf(x) be the Jacobian matrix of f. Suppose some a = (a1, …, an) ∈ An is an approximate solution to f = 0 in the sense that

- fi(a) ≡ 0 mod (det Jf(a))2m

for 1 ≤ i ≤ n. Then there is some b = (b1, …, bn) in An satisfying f(b) = 0, i.e.,

- fi(b) = 0 for all i,

and furthermore this solution is "close" to a in the sense that

- bi ≡ ai mod Jf(a)m

for 1 ≤ i ≤ n.

As a special case, if fi(a) ≡ 0 mod m for all i and det Jf(a) is a unit in A then there is a solution to f(b) = 0 with bi ≡ ai mod m for all i.

When n = 1, a = a is an element of A and Jf(a) = Jf(a) is f ′(a). The hypotheses of this multivariable Hensel's lemma reduce to the ones which were stated in the one-variable Hensel's lemma.

Related concepts

Completeness of a ring is not a necessary condition for the ring to have the Henselian property: Goro Azumaya in 1950 defined a commutative local ring satisfying the Henselian property for the maximal ideal m to be a Henselian ring.

Masayoshi Nagata proved in the 1950s that for any commutative local ring A with maximal ideal m there always exists a smallest ring Ah containing A such that Ah is Henselian with respect to mAh. This Ah is called the Henselization of A. If A is noetherian, Ah will also be noetherian, and Ah is manifestly algebraic as it is constructed as a limit of étale neighbourhoods. This means that Ah is usually much smaller than the completion  while still retaining the Henselian property and remaining in the same category.

See also

References

- Eisenbud, David (1995), Commutative algebra, Graduate Texts in Mathematics 150, Berlin, New York: Springer-Verlag, ISBN 978-0-387-94269-8, MR 1322960

- Milne, J. G. (1980), Étale cohomology, Princeton University Press, ISBN 978-0-691-08238-7