Grayscale


In photography and computing, a grayscale or greyscale digital image is an image in which the value of each pixel is a single sample, that is, it carries only intensity information. Images of this sort, also known as black-and-white, are composed exclusively of shades of gray, varying from black at the weakest intensity to white at the strongest.[1]

Grayscale images are distinct from one-bit bi-tonal black-and-white images, which in the context of computer imaging are images with only two colors, black and white (also called bilevel or binary images). Grayscale images have many shades of gray in between.

Grayscale images are often the result of measuring the intensity of light at each pixel in a single band of the electromagnetic spectrum (e.g. infrared, visible light, ultraviolet), and in such cases they are monochromatic proper when only a single frequency is captured. They can also be synthesized from a full-color image; see the section on converting color to grayscale.

Numerical representations

The intensity of a pixel is expressed within a given range between a minimum and a maximum, inclusive. This range is represented in an abstract way as a range from 0 (total absence, black) to 1 (total presence, white), with any fractional values in between. This notation is used in academic papers, but it does not define what "black" or "white" is in terms of colorimetry.

Another convention is to employ percentages, so the scale is then from 0% to 100%. This is used for a more intuitive approach, but if only integer values are used, the range encompasses a total of only 101 intensities, which is insufficient to represent a broad gradient of grays. Percentage notation is also used in printing to denote how much ink is employed in halftoning, but there the scale is reversed: 0% is the paper white (no ink) and 100% is solid black (full ink).
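As a minimal sketch, assuming intensities are given on the abstract 0-to-1 scale, the percentage and printing-ink conventions relate as follows. The helper names are hypothetical.

```python
def intensity_to_percent(v: float) -> float:
    """Map an abstract intensity in [0, 1] (0 = black, 1 = white) to a 0-100% scale."""
    if not 0.0 <= v <= 1.0:
        raise ValueError("intensity must be in [0, 1]")
    return 100.0 * v


def intensity_to_ink_percent(v: float) -> float:
    """Map intensity to halftone ink coverage, where the scale is reversed:
    0% is bare paper (white) and 100% is solid ink (black)."""
    return 100.0 - intensity_to_percent(v)


# Integer percentages allow only 101 distinct levels: 0, 1, ..., 100.
integer_percent_levels = len(range(0, 101))

print(intensity_to_percent(0.25))      # 25.0
print(intensity_to_ink_percent(0.25))  # 75.0
print(integer_percent_levels)          # 101
```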

In computing, although grayscale values can be computed using rational numbers, image pixels are stored in binary, quantized form. Some early grayscale monitors could only show up to sixteen (4-bit) different shades, but today grayscale images (such as photographs) intended for visual display (both on screen and printed) are commonly stored with 8 bits per sampled pixel, which allows 256 different intensities (i.e., shades of gray) to be recorded, typically on a non-linear scale. The precision provided by this format is barely sufficient to avoid visible banding artifacts, but it is very convenient for programming because a single pixel then occupies a single byte.
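The sketch below shows how an intensity in [0, 1] might be quantized to an n-bit code and how the number of available shades grows with bit depth. It assumes the input is already on the (typically non-linear) scale in which it will be stored; the function name `quantize` is illustrative, not taken from any library.

```python
def quantize(v: float, bits: int) -> int:
    """Quantize an intensity in [0, 1] to an unsigned integer code with `bits` bits.
    0 maps to 0 (black) and 1 maps to 2**bits - 1 (white)."""
    if not 0.0 <= v <= 1.0:
        raise ValueError("intensity must be in [0, 1]")
    max_code = (1 << bits) - 1
    # Round half up; valid here because v * max_code is never negative.
    return int(v * max_code + 0.5)


for bits in (4, 8):
    # An n-bit code has 2**n distinct shades.
    print(bits, "bits ->", 1 << bits, "shades; mid-gray code", quantize(0.5, bits))
# 4 bits -> 16 shades; mid-gray code 8
# 8 bits -> 256 shades; mid-gray code 128
```

With 8 bits, adjacent codes differ by 1/255 of the full range, which is why smooth gradients sit close to the threshold of visible banding.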

Technical uses (e.g. in medical imaging or remote sensing applications) often require more levels, to make full use of the sensor accuracy (typically 10 or 12 bits per sample) and to guard against roundoff errors in computations. Sixteen bits per sample (65,536 levels) is a convenient choice for such uses, as computers manage 16-bit words efficiently. The TIFF and PNG image file formats (among others) support 16-bit grayscale natively, although browsers and many imaging programs tend to ignore the low-order 8 bits of each pixel.
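Ignoring the low-order 8 bits amounts to a right shift by 8, so every run of 256 consecutive 16-bit levels collapses onto a single 8-bit level. A minimal sketch, with a hypothetical helper name:

```python
def to_8bit_truncate(code16: int) -> int:
    """Reduce a 16-bit gray code (0..65535) to 8 bits by discarding the low-order byte."""
    if not 0 <= code16 <= 0xFFFF:
        raise ValueError("expected a 16-bit value")
    return code16 >> 8


# Two distinct 16-bit levels that collapse to the same 8-bit value:
print(to_8bit_truncate(0x1200), to_8bit_truncate(0x12FF))  # 18 18
print(to_8bit_truncate(65535))                              # 255
```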

No matter what pixel depth is used, the binary representations assume that 0 is black and the maximum value (255 at 8 bpp, 65,535 at 16 bpp, etc.) is white, unless otherwise noted.
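Under this convention, a code can be normalized to [0, 1] by dividing by the maximum value for its depth. Likewise, an 8-bit code can be widened to 16 bits by multiplying by 257, which keeps 0 at black and maps 255 exactly to 65,535. A sketch with hypothetical helper names:

```python
def to_unit(code: int, bits: int) -> float:
    """Interpret an n-bit gray code as intensity in [0, 1]: 0 is black, 2**bits - 1 is white."""
    return code / ((1 << bits) - 1)


def widen_8_to_16(code8: int) -> int:
    """Rescale an 8-bit code to 16 bits so that white stays white (255 * 257 == 65535).
    Multiplying by 257 is equivalent to repeating the byte: (code8 << 8) | code8."""
    return code8 * 257


print(to_unit(255, 8), to_unit(65535, 16))     # 1.0 1.0
print(widen_8_to_16(255), widen_8_to_16(128))  # 65535 32896
```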

Converting color to grayscale

Conversion of a color image to grayscale is not unique; different weightings of the color channels effectively reproduce the effect of shooting black-and-white film with different-colored photographic filters on the camera.
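To illustrate the non-uniqueness, the sketch below reduces one color to gray with three different sets of channel weights. The weightings, including the single-channel "filters", are hypothetical and purely illustrative. They ignore gamma and are not the colorimetric method described next.

```python
def weighted_gray(rgb, weights):
    """Collapse an (R, G, B) triple in [0, 1] to one gray value using per-channel weights."""
    r, g, b = rgb
    wr, wg, wb = weights
    return wr * r + wg * g + wb * b


# Hypothetical weightings, loosely analogous to shooting through different filters.
EQUAL = (1 / 3, 1 / 3, 1 / 3)
RED_FILTER = (1.0, 0.0, 0.0)   # only the red channel contributes
BLUE_FILTER = (0.0, 0.0, 1.0)  # only the blue channel contributes

sky = (0.2, 0.4, 0.9)  # a bluish color
for name, w in [("equal", EQUAL), ("red", RED_FILTER), ("blue", BLUE_FILTER)]:
    print(name, round(weighted_gray(sky, w), 3))
# equal 0.5
# red 0.2
# blue 0.9
```

The same sky becomes dark gray under the red weighting and light gray under the blue one, much as a red filter darkens a blue sky on black-and-white film.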

Colorimetric (luminance-preserving) conversion to grayscale

A common strategy is to use the principles of photometry or, more broadly, colorimetry to match the luminance of the grayscale image to the luminance of the original color image.[2][3] This also ensures that both images will have the same absolute luminance, as can be measured in its SI unit of candelas per square meter, in any given area of the image, given equal whitepoints. In addition, matching luminance provides matching perceptual lightness measures, such as L* (as in the 1976 CIE Lab color space), which is determined by the luminance Y (as in the CIE 1931 XYZ color space).

To convert a color from a colorspace based on an RGB color model to a grayscale representation of its luminance, weighted sums must be calculated in a linear RGB space, that is, after the gamma compression has first been undone via gamma expansion.[4]

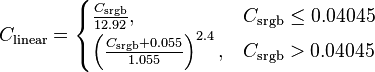

For the sRGB color space, gamma expansion is defined as

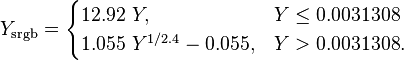

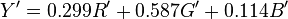

where $C_\mathrm{srgb}$ represents any of the three gamma-compressed sRGB primaries ($R_\mathrm{srgb}$, $G_\mathrm{srgb}$, and $B_\mathrm{srgb}$, each in the range [0, 1]) and $C_\mathrm{linear}$ is the corresponding linear-intensity value ($R$, $G$, and $B$, also in the range [0, 1]). Then, luminance is calculated as a weighted sum of the three linear-intensity values. The sRGB color space is defined in terms of the CIE 1931 linear luminance Y, which is given by

$$
Y = 0.2126\,R + 0.7152\,G + 0.0722\,B.
$$ [5]
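The gamma expansion and the luminance weighting can be sketched in Python as follows, assuming each sRGB component is a float already scaled to [0, 1]. The function names are illustrative.

```python
def srgb_to_linear(c: float) -> float:
    """sRGB gamma expansion: gamma-compressed component in [0, 1] -> linear intensity in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(r_srgb: float, g_srgb: float, b_srgb: float) -> float:
    """CIE 1931 linear luminance Y of an sRGB color, weighted in linear light."""
    r, g, b = (srgb_to_linear(c) for c in (r_srgb, g_srgb, b_srgb))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


print(relative_luminance(1.0, 1.0, 1.0))  # white: approximately 1.0
print(relative_luminance(0.0, 1.0, 0.0))  # pure green: 0.7152
print(srgb_to_linear(0.5))                # mid-scale sRGB is only about 0.214 in linear light
```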

The coefficients represent the measured intensity perception of typical trichromat humans, depending on the primaries being used; in particular, human vision is most sensitive to green and least sensitive to blue. To encode grayscale intensity in linear RGB, each of the three primaries can be set to equal the calculated linear luminance Y (replacing R, G, B by Y, Y, Y to get this linear grayscale). Linear luminance typically needs to be gamma compressed to get back to a conventional non-linear representation. For sRGB, each of its three primaries is then set to the same gamma-compressed $Y_\mathrm{srgb}$, given by the inverse of the gamma expansion above as

$$
Y_\mathrm{srgb} =
\begin{cases}
12.92\,Y, & Y \le 0.0031308 \\[1ex]
1.055\,Y^{1/2.4} - 0.055, & Y > 0.0031308
\end{cases}
$$

In practice, because the three sRGB components are then equal, it is only necessary to store these values once in sRGB-compatible image formats that support a single-channel representation. Web browsers and other software that recognize sRGB images will typically produce the same rendering for such a grayscale image as they would for an sRGB image having the same values in all three color channels.
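Putting the steps together, the following end-to-end sketch expands each component, takes the weighted sum in linear light, re-compresses the result, and keeps a single channel per pixel. It assumes pixels are 8-bit sRGB tuples stored as nested lists; the function names are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """sRGB gamma expansion (component in [0, 1])."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(y: float) -> float:
    """sRGB gamma compression, the inverse of srgb_to_linear."""
    if y <= 0.0031308:
        return 12.92 * y
    return 1.055 * y ** (1 / 2.4) - 0.055


def gray_srgb(rgb: tuple) -> float:
    """Luminance-preserving gray for one sRGB pixel (components in [0, 1])."""
    r, g, b = (srgb_to_linear(c) for c in rgb)
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b  # linear luminance Y
    return linear_to_srgb(y)                 # gamma-compressed Y_srgb


def to_grayscale_8bit(image):
    """Convert rows of 8-bit (R, G, B) tuples to rows of single 8-bit gray values.
    One channel suffices because R = G = B = Y_srgb for every output pixel."""
    return [
        [round(255 * gray_srgb(tuple(c / 255 for c in px))) for px in row]
        for row in image
    ]


image = [[(255, 255, 255), (0, 0, 0)],
         [(128, 128, 128), (0, 255, 0)]]
print(to_grayscale_8bit(image))  # [[255, 0], [128, 220]]
```

Neutral grays pass through unchanged, while pure green maps to a fairly light gray because of its large luminance weight.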

Luma coding in video systems

For images in color spaces such as Y'UV and its relatives, which are used in standard color TV and video systems such as PAL, SECAM, and NTSC, a nonlinear luma component (Y') is calculated directly from gamma-compressed primary intensities as a weighted sum, which can be calculated quickly without the gamma expansion and compression used in colorimetric grayscale calculations. In the Y'UV and Y'IQ models used by PAL and NTSC, the Rec. 601 luma (Y') component is computed as

$$
Y' = 0.299\,R' + 0.587\,G' + 0.114\,B'
$$

where we use the prime to distinguish these gamma-compressed values from the linear R, G, B, and Y discussed above. The ITU-R BT.709 standard used for HDTV developed by the ATSC uses different color coefficients, computing the luma component as

$$
Y' = 0.2126\,R' + 0.7152\,G' + 0.0722\,B'.
$$

Although these are numerically the same coefficients used in sRGB above, the effect is different because here they are being applied directly to gamma-compressed values.
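A sketch of luma computed directly on gamma-compressed components, using the Rec. 601 and BT.709 coefficients given above. The inputs are assumed to be R', G', B' values in [0, 1], and the function name is hypothetical. No gamma expansion or compression takes place.

```python
REC601 = (0.299, 0.587, 0.114)
REC709 = (0.2126, 0.7152, 0.0722)


def luma(rgb_prime, coeffs=REC601):
    """Y' as a weighted sum of gamma-compressed R', G', B' (no linearization)."""
    r, g, b = rgb_prime
    kr, kg, kb = coeffs
    return kr * r + kg * g + kb * b


green = (0.0, 1.0, 0.0)
print(luma(green, REC601))  # 0.587
print(luma(green, REC709))  # 0.7152
```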

Normally these colorspaces are transformed back to R'G'B' before rendering for viewing. To the extent that enough precision remains, they can then be rendered accurately.

But if the luma component by itself is instead used directly as a grayscale representation of the color image, luminance is not preserved: two colors can have the same luma Y' but different CIE linear luminance Y (and thus different nonlinear $Y_\mathrm{srgb}$ as defined above) and therefore appear darker or lighter to a typical human than the original color. Similarly, two colors having the same luminance Y (and thus the same $Y_\mathrm{srgb}$) will in general have different luma by either of the Y' luma definitions above.[6]
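The mismatch shows up numerically. The sketch below assumes sRGB-encoded components can stand in for R'G'B' and uses the BT.709 luma weights. It compares pure green with a neutral gray chosen to have the same luma; their luminance-preserving gray values differ noticeably. Function names are hypothetical.

```python
REC709 = (0.2126, 0.7152, 0.0722)


def srgb_to_linear(c):
    """sRGB gamma expansion (component in [0, 1])."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(y):
    """sRGB gamma compression (linear value in [0, 1])."""
    return 12.92 * y if y <= 0.0031308 else 1.055 * y ** (1 / 2.4) - 0.055


def weighted(rgb, coeffs=REC709):
    return sum(k * c for k, c in zip(coeffs, rgb))


def luma_709(rgb_srgb):
    """Luma Y': weights applied directly to gamma-compressed components."""
    return weighted(rgb_srgb)


def colorimetric_gray(rgb_srgb):
    """Y_srgb: expand, weight in linear light, then re-compress."""
    return linear_to_srgb(weighted([srgb_to_linear(c) for c in rgb_srgb]))


green = (0.0, 1.0, 0.0)
gray = (0.7152, 0.7152, 0.7152)  # neutral gray with the same luma as pure green

print(luma_709(green), luma_709(gray))                    # both about 0.7152
print(colorimetric_gray(green), colorimetric_gray(gray))  # about 0.862 vs 0.7152
```

Rendering both colors by their shared luma would therefore show the green darker than its luminance warrants.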

Grayscale as single channels of multichannel color images

Color images are often built of several stacked color channels, each of them representing value levels of the given channel. For example, RGB images are composed of three independent channels for red, green and blue primary color components; CMYK images have four channels for cyan, magenta, yellow and black ink plates, etc.
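Channel splitting can be sketched with plain nested lists. This assumes an image is a list of rows of 8-bit (R, G, B) tuples; each resulting channel is itself a grayscale image. The representation and function name are assumptions for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Channel = List[List[int]]


def split_channels(image: List[List[Pixel]]) -> Tuple[Channel, Channel, Channel]:
    """Split an RGB image into three single-channel grayscale images (R, G, B)."""
    return tuple([[px[i] for px in row] for row in image] for i in range(3))


image = [[(255, 0, 0), (0, 128, 255)],
         [(10, 20, 30), (200, 200, 200)]]
red, green, blue = split_channels(image)
print(red)    # [[255, 0], [10, 200]]
print(green)  # [[0, 128], [20, 200]]
print(blue)   # [[0, 255], [30, 200]]
```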

Here is an example of color channel splitting of a full RGB color image. The column at left shows the isolated color channels in natural colors, while the column at right shows their grayscale equivalents:

The reverse is also possible: a full-color image can be built from its separate grayscale channels. By mangling channels, using offsets, rotating and other manipulations, artistic effects can be achieved instead of accurately reproducing the original image.
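Conversely, the sketch below rebuilds an RGB image from three same-sized grayscale channels. It also shows one hypothetical manipulation, rotating which channel feeds which primary, of the kind that yields artistic rather than faithful results. Images are assumed to be nested lists of 8-bit values.

```python
def merge_channels(r, g, b):
    """Rebuild an RGB image (rows of (R, G, B) tuples) from three grayscale channels."""
    return [
        [(rv, gv, bv) for rv, gv, bv in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(r, g, b)
    ]


def rotate_channels(image):
    """Hypothetical artistic effect: red feeds green, green feeds blue, blue feeds red."""
    return [[(b, r, g) for (r, g, b) in row] for row in image]


r = [[255, 0]]
g = [[0, 255]]
b = [[0, 0]]
image = merge_channels(r, g, b)
print(image)                   # [[(255, 0, 0), (0, 255, 0)]]
print(rotate_channels(image))  # [[(0, 255, 0), (0, 0, 255)]]
```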

See also

- Channel (digital image)

- Halftone

- Duotone

- False-color

- Sepia tone

- Cyanotype

- Morphological image processing

- Mezzotint

- List of monochrome and RGB palettes – Monochrome palettes section

- List of software palettes – Color gradient palettes and false color palettes sections

- Achromatopsia, total color blindness, in which vision is limited to a grayscale.

- Zone System

References

- Stephen Johnson (2006). Stephen Johnson on Digital Photography. O'Reilly. ISBN 0-596-52370-X.

- Charles A. Poynton, "Rehabilitation of gamma." Photonics West '98 Electronic Imaging. International Society for Optics and Photonics, 1998.

- Charles Poynton, "Constant Luminance".

- Bruce Lindbloom, "RGB Working Space Information" (retrieved 2013-10-02).

- Michael Stokes, Matthew Anderson, Srinivasan Chandrasekar, and Ricardo Motta, "A Standard Default Color Space for the Internet - sRGB" (see the matrix at the end of Part 2).

- Charles Poynton, "The magnitude of nonconstant luminance errors", in Charles Poynton, A Technical Introduction to Digital Video. New York: John Wiley & Sons, 1996.
