Formal concept analysis

In information science, formal concept analysis is a principled way of deriving a concept hierarchy or formal ontology from a collection of objects and their properties. Each concept in the hierarchy represents the set of objects sharing the same values for a certain set of properties; and each sub-concept in the hierarchy contains a subset of the objects in the concepts above it. The term was introduced by Rudolf Wille in 1984, and builds on applied lattice and order theory that was developed by Garrett Birkhoff and others in the 1930s.

Formal concept analysis finds practical application in fields including data mining, text mining, machine learning, knowledge management, semantic web, software development, chemistry and biology.

Overview and history

The original motivation of formal concept analysis was the concrete representation of complete lattices and their properties by means of formal contexts, data tables that represent binary relations between objects and attributes. In this theory, a formal concept is defined to be a pair consisting of a set of objects (the "extent") and a set of attributes (the "intent") such that the extent consists of all objects that share the given attributes, and the intent consists of all attributes shared by the given objects. In this way, formal concept analysis formalizes the notions of extension and intension.

Formal concepts may be partially ordered by the subset relation between their sets of objects, or equivalently by the superset relation between their sets of attributes. This ordering results in a graded system of sub- and superconcepts, a concept hierarchy, which can be displayed as a line diagram. The family of these concepts obeys the mathematical axioms defining a lattice, and is called more formally a concept lattice. In French this is called a treillis de Galois (Galois lattice) because the relation between the sets of objects and attributes is a Galois connection.

The theory in its present form goes back to the Technische Universität Darmstadt research group led by Rudolf Wille, Bernhard Ganter and Peter Burmeister, where formal concept analysis originated in the early 1980s. The mathematical basis, however, was already created by Garrett Birkhoff in the 1930s as part of general lattice theory. Before the work of the Darmstadt group, there were already approaches to the same idea in various French research groups, but the Technische Universität Darmstadt normalised and popularised the field in computer science research circles. Philosophical foundations of formal concept analysis refer in particular to Charles S. Peirce and the educationalist Hartmut von Hentig.

Motivation and philosophical background

In his article Restructuring Lattice Theory (1982), which initiated formal concept analysis as a mathematical discipline, Wille starts from a discontent with the lattice theory of the time and with pure mathematics in general: the production of theoretical results, often achieved by "elaborate mental gymnastics", was impressive, but the connections between neighbouring domains, and even between parts of a single theory, were getting weaker.

Restructuring lattice theory is an attempt to reinvigorate connections with our general culture by interpreting the theory as concretely as possible, and in this way to promote better communication between lattice theorists and potential users of lattice theory.[1]

This aim traces back to Hartmut von Hentig, who in 1972 pleaded for restructuring the sciences with a view to better teaching, in order to make them mutually accessible and, more generally, open to criticism even without specialized knowledge.[2] Hence, by its origins, formal concept analysis aims at interdisciplinarity and democratic control of research.[3]

It corrects the starting point that lattice theory took during the development of formal logic in the 19th century. At that time, and later in model theory, a concept understood as a unary predicate had been reduced to its extent. Now the philosophy of concepts should again become less abstract by also considering the intent. Hence, formal concept analysis is oriented towards the categories of extension and intension from linguistics and classical conceptual logic.[4]

Formal concept analysis aims at the clarity of concepts according to Charles S. Peirce's pragmatic maxim, by unfolding observable, elementary properties of the subsumed objects.[3] In his late philosophy, Peirce assumed that logical thinking aims at perceiving reality through the triad of concept, judgement and conclusion. Mathematics is an abstraction of logic; it develops patterns of possible realities and may therefore support rational communication. Against this background, Wille defines:

The aim and meaning of Formal Concept Analysis as mathematical theory of concepts and concept hierarchies is to support the rational communication of humans by mathematically developing appropriate conceptual structures which can be logically activated.[5]

Contexts and concepts

A formal context in FCA is a triple K = (G, M, I), where G is a set of objects, M is a set of attributes, and the binary relation I ⊆ G × M, called the incidence, shows which objects possess which attributes.[6] Formally, it can be regarded as a bipartite graph I ⊆ G × M. The predicate gIm states that object g has attribute m. For subsets of objects A ⊆ G and of attributes B ⊆ M, the Galois operators are defined as follows:

A' = {m ∈ M | ∀ g ∈ A (gIm)},

B' = {g ∈ G | ∀ m ∈ B (gIm)}.

The operator ″ (applying the operator ′ twice) is a closure operator as it is:

- idempotent: A″″ = A″,

- monotonic: A1 ⊆ A2 → A1″ ⊆ A2″,

- extensive: A ⊆ A″.

A set of objects A ⊆ G such that A″ = A is called closed. Analogously, ″ is a closure operator on subsets of the attribute set M, and a set of attributes B ⊆ M with B″ = B is also called closed.

A pair (A,B) is called a formal concept of a context K if:

- A ⊆ G,

- B ⊆ M,

- A' = B,

- B' = A.

Intuitively, a formal concept of a context K = (G,M,I) is a pair (A,B) with A ⊆ G and B ⊆ M such that:

- every object in A has every attribute in B,

- for every object in G that is not in A, there is an attribute in B that the object does not have,

- for every attribute in M that is not in B, there is an object in A that does not have that attribute.

The sets A and B are closed and are called the extent and the intent of the formal concept (A,B), respectively. For a set of objects A, the set of their common attributes A′ describes the similarity of the objects in A, while the closed set A″ is a cluster of similar objects with the set of common attributes A′.[7]

A context may be described as a table with the objects corresponding to the rows of the table, the attributes corresponding to the columns of the table, and a Boolean value (in the example, represented graphically as a checkmark) in cell (x, y) whenever object x has attribute y.

A concept, in this representation, forms a maximal subarray (not necessarily contiguous) such that all cells within the subarray are checked. For instance, in the example table below, the concept describing the odd prime numbers forms a 3 × 2 subarray (rows 3, 5 and 7; columns odd and prime) in which all cells are checked.[8]

Example

| | composite | even | odd | prime | square |
|---|---|---|---|---|---|
| 1 | | | ✓ | | ✓ |
| 2 | | ✓ | | ✓ | |
| 3 | | | ✓ | ✓ | |
| 4 | ✓ | ✓ | | | ✓ |
| 5 | | | ✓ | ✓ | |
| 6 | ✓ | ✓ | | | |
| 7 | | | ✓ | ✓ | |
| 8 | ✓ | ✓ | | | |
| 9 | ✓ | | ✓ | | ✓ |
| 10 | ✓ | ✓ | | | |

Consider G = {1,2,3,4,5,6,7,8,9,10}, and M = {composite, even, odd, prime, square}. The smallest concept including number 3 is the one with objects {3,5,7} and attributes {odd, prime}, for 3 has both of those attributes and {3,5,7} is the set of objects having that set of attributes. The largest concept involving the attribute of being square is the one with objects {1,4,9} and attributes {square}, for 1, 4 and 9 are all the square numbers and all three of them have that set of attributes. It can readily be seen that both of these example concepts satisfy the formal definitions above.
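The derivation operators and the concept test can be checked mechanically on this example. The following Python snippet is a minimal sketch, assuming the context is stored as a dictionary from each number to its set of attributes; the names `CONTEXT`, `common_attributes`, `common_objects` and `is_concept` are illustrative and do not come from any FCA library.

```python
# The numbers context from the example table, encoded (as an illustrative
# choice) as a dictionary from each object to its set of attributes.
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}
ATTRIBUTES = {"composite", "even", "odd", "prime", "square"}


def common_attributes(objects):
    """A' : the attributes shared by every object in `objects` (all of M if empty)."""
    shared = set(ATTRIBUTES)
    for g in objects:
        shared &= CONTEXT[g]
    return shared


def common_objects(attributes):
    """B' : the objects that have every attribute in `attributes`."""
    return {g for g, attrs in CONTEXT.items() if set(attributes) <= attrs}


def is_concept(extent, intent):
    """(A, B) is a formal concept iff A' = B and B' = A."""
    return (common_attributes(extent) == set(intent)
            and common_objects(intent) == set(extent))


print(common_objects(common_attributes({3})))   # the closure {3}'' = {3, 5, 7}
print(is_concept({3, 5, 7}, {"odd", "prime"}))  # True
print(is_concept({1, 4, 9}, {"square"}))        # True
print(is_concept({3, 5}, {"odd", "prime"}))     # False, since {odd, prime}' = {3, 5, 7}
```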

Concept lattice of a context

The concepts (Gi, Mi) defined above can be partially ordered by inclusion: if (Gi, Mi) and (Gj, Mj) are concepts, we define a partial order ≤ by saying that (Gi, Mi) ≤ (Gj, Mj) whenever Gi ⊆ Gj. Equivalently, (Gi, Mi) ≤ (Gj, Mj) whenever Mj ⊆ Mi.

Every pair of concepts in this partial order has a unique greatest lower bound (meet). The greatest lower bound of (Gi, Mi) and (Gj, Mj) is the concept with objects Gi ∩ Gj; it has as its attributes the union of Mi, Mj, and any additional attributes held by all objects in Gi ∩ Gj. Symmetrically, every pair of concepts in this partial order has a unique least upper bound (join). The least upper bound of (Gi, Mi) and (Gj, Mj) is the concept with attributes Mi ∩ Mj; it has as its objects the union of Gi, Gj, and any additional objects that have all attributes in Mi ∩ Mj.
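A minimal Python sketch of the order, meet and join on the numbers example follows, assuming concepts are represented as pairs of frozensets (extent, intent); all names are hypothetical. The meet intersects the extents and derives the intent; the join intersects the intents and derives the extent.

```python
# Numbers context from the example table (illustrative encoding).
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}
ATTRIBUTES = frozenset({"composite", "even", "odd", "prime", "square"})


def intent_of(objects):
    """A' : attributes common to all given objects."""
    return frozenset(m for m in ATTRIBUTES if all(m in CONTEXT[g] for g in objects))


def extent_of(attributes):
    """B' : objects having all given attributes."""
    return frozenset(g for g in CONTEXT if attributes <= CONTEXT[g])


def leq(c1, c2):
    """(A1, B1) <= (A2, B2) iff A1 is a subset of A2 (equivalently B2 of B1)."""
    return c1[0] <= c2[0]


def meet(c1, c2):
    """Greatest lower bound: extent A1 & A2, intent (A1 & A2)'."""
    extent = c1[0] & c2[0]
    return extent, intent_of(extent)


def join(c1, c2):
    """Least upper bound: intent B1 & B2, extent (B1 & B2)'."""
    intent = c1[1] & c2[1]
    return extent_of(intent), intent


odd = (frozenset({1, 3, 5, 7, 9}), frozenset({"odd"}))
square = (frozenset({1, 4, 9}), frozenset({"square"}))
odd_primes = (frozenset({3, 5, 7}), frozenset({"odd", "prime"}))
even_primes = (frozenset({2}), frozenset({"even", "prime"}))

print(meet(odd, square))              # extent {1, 9}, intent {odd, square}
print(join(odd_primes, even_primes))  # extent {2, 3, 5, 7}, intent {prime}
```

A single application of ′ suffices on each side because the intersection of two extents (or of two intents) is itself closed.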

These meet and join operations satisfy the axioms defining a lattice. In fact, by considering infinite meets and joins, analogously to the binary meets and joins defined above, one sees that this is a complete lattice. It may be viewed as the Dedekind–MacNeille completion of a partially ordered set of height two in which the elements of the partial order are the objects of G and the attributes of M and in which two elements x and y satisfy x ≤ y exactly when x is an object that has attribute y.

Any finite lattice may be generated as the concept lattice for some context. Let L be a finite lattice and form a context in which the objects and the attributes both correspond to elements of L. In this context, let object x have attribute y exactly when x and y are ordered as x ≤ y in the lattice. Then the concept lattice of this context is isomorphic to L itself.[9] This construction may be interpreted as forming the Dedekind–MacNeille completion of L, which is known to produce an isomorphic lattice from any finite lattice.
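The construction can be tried on a small finite lattice. In this sketch the lattice L is taken, purely as an example, to be the divisors of 12 ordered by divisibility, and concepts are found by brute force, closing every subset of attributes; this is practical only for very small contexts, and the function names are hypothetical.

```python
from itertools import combinations

# Example finite lattice (an illustrative choice): the divisors of 12 under divisibility.
L = [1, 2, 3, 4, 6, 12]


def has(x, y):
    """Incidence of the context (L, L, I): object x has attribute y iff x <= y, i.e. x divides y."""
    return y % x == 0


def intent_of(objects):
    return frozenset(y for y in L if all(has(x, y) for x in objects))


def extent_of(attributes):
    return frozenset(x for x in L if all(has(x, y) for y in attributes))


def all_concepts():
    """Every intent is B'' for some B, so closing all attribute subsets finds every concept."""
    concepts = set()
    for size in range(len(L) + 1):
        for attrs in combinations(L, size):
            extent = extent_of(attrs)
            concepts.add((extent, intent_of(extent)))
    return concepts


concepts = all_concepts()
print(len(concepts))  # 6, one concept per element of L
```

Each of the six concepts has the form ({x | x ≤ z}, {y | z ≤ y}) for exactly one element z of L, which gives the isomorphism with L.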

Example

The full set of concepts for objects and attributes from the above example is shown in the illustration. It includes a concept for each of the original attributes: composite, square, even, odd and prime. Additionally, it includes concepts for even composite numbers, composite square numbers (that is, all square numbers except 1), even composite squares, odd squares, odd composite squares, even primes, and odd primes.
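The concepts in the illustration can also be listed by brute force. The Python sketch below, using the same hypothetical dictionary encoding of the table, closes each of the 2⁵ attribute subsets and collects the distinct results. Besides the twelve concepts named above, it also finds the top concept (all ten numbers, no common attribute) and the bottom concept (no number, all five attributes), for 14 in total.

```python
from itertools import combinations

# Numbers context from the example table (illustrative encoding).
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}
ATTRIBUTES = sorted({"composite", "even", "odd", "prime", "square"})


def extent_of(attributes):
    """B' : numbers having every attribute in `attributes`."""
    return frozenset(g for g, attrs in CONTEXT.items() if set(attributes) <= attrs)


def intent_of(objects):
    """A' : attributes shared by all numbers in `objects`."""
    return frozenset(m for m in ATTRIBUTES if all(m in CONTEXT[g] for g in objects))


concepts = set()
for size in range(len(ATTRIBUTES) + 1):
    for attrs in combinations(ATTRIBUTES, size):
        extent = extent_of(attrs)
        concepts.add((extent, intent_of(extent)))

# Largest extents first, i.e. roughly from the top of the line diagram downwards.
for extent, intent in sorted(concepts, key=lambda c: (-len(c[0]), sorted(c[0]))):
    print(sorted(extent), sorted(intent))
print(len(concepts))  # 14
```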

Clarified

row-clarified: the context does not have duplicate rows (the map g ↦ g′ is injective)

column-clarified: the context does not have duplicate columns (the map m ↦ m′ is injective)

clarified: row-clarified and column-clarified

Reduced

row-reduced: no context row can be expressed as an intersection of other rows. The lattice of such a context is meet-reduced.

column-reduced: no context column can be expressed as an intersection of other columns. The lattice of such a context is join-reduced.

reduced: row-reduced and column-reduced

A reducible attribute labels a node of the lattice that would be present even without this attribute.
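Clarification and row reduction can be sketched in a few lines of Python. The toy context `TOY` and all function names are hypothetical. For a clarified context, each row is compared with the intersection of all rows that strictly contain it, with the convention that the empty intersection is the full set. Columns are handled by applying the same test to the transposed context.

```python
# Hypothetical toy context (not from the article): object -> set of attributes.
TOY = {
    "a": {"x", "y"},
    "b": {"y", "z"},
    "c": {"y"},
    "d": {"x", "y"},  # same row as "a"; merged by clarification
}


def clarify(context):
    """Group objects with identical rows; each distinct row is kept once."""
    groups = {}
    for obj, row in context.items():
        groups.setdefault(frozenset(row), []).append(obj)
    return groups


def transpose(context):
    """Columns of the context: attribute -> set of objects having it."""
    columns = {}
    for obj, row in context.items():
        for attr in row:
            columns.setdefault(attr, set()).add(obj)
    return columns


def reducible_rows(context, universe):
    """Rows of the clarified context that equal the intersection of all rows
    strictly containing them (the empty intersection is `universe`)."""
    rows = set(clarify(context))
    reducible = set()
    for row in rows:
        meet = frozenset(universe)
        for other in rows:
            if row < other:
                meet &= other
        if meet == row:
            reducible.add(row)
    return reducible


print(reducible_rows(TOY, {"x", "y", "z"}))      # only the row {y} of object c
print(reducible_rows(transpose(TOY), set(TOY)))  # only the column of attribute y
```

In the toy context, object c is reducible because {y} = {x, y} ∩ {y, z}. Attribute y, held by every object, is reducible as well: it only labels the top concept, which would be present without it.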

Concept algebra of a context

Modelling negation in a formal context is somewhat problematic because the complement (G\Gi, M\Mi) of a concept (Gi, Mi) is in general not a concept. However, since the concept lattice is complete, one can consider the meet (Gi, Mi)Δ of all concepts (Gj, Mj) that satisfy G\Gi ⊆ Gj, that is, the smallest concept whose extent contains every object outside Gi; or dually the join (Gi, Mi)𝛁 of all concepts satisfying M\Mi ⊆ Mj, that is, the largest concept whose intent contains every attribute outside Mi. These two operations are known as weak negation and weak opposition, respectively.

This can be expressed in terms of the derivative functions. The derivative of a set Gi ⊆ G of objects is the set Gi' ⊆ M of all attributes that hold for all objects in Gi. The derivative of a set Mi ⊆ M of attributes is the set Mi' ⊆ G of all objects that have all attributes in Mi. A pair (Gi, Mi) is a concept if and only if Gi' = Mi and Mi' = Gi. Using this function, weak negation can be written as

- (Gi, Mi)Δ = ((G\Gi)'', (G\Gi)'),

and weak opposition can be written as

- (Gi, Mi)𝛁 = ((M\Mi)', (M\Mi)'').

The concept lattice equipped with the two additional operations Δ and 𝛁 is known as the concept algebra of a context. Concept algebras are a generalization of power sets.

Weak negation on a concept lattice L is a weak complementation, i.e. an order-reversing map Δ: L → L that satisfies the axioms xΔΔ ≤ x and (x⋀y) ⋁ (x⋀yΔ) = x. Weak opposition is a dual weak complementation. A (bounded) lattice such as a concept algebra, which is equipped with a weak complementation and a dual weak complementation, is called a weakly dicomplemented lattice. Weakly dicomplemented lattices generalize distributive orthocomplemented lattices, i.e. Boolean algebras.[10][11]
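Both operations can be evaluated directly from the derivation formulas (Gi, Mi)Δ = ((G\Gi)'', (G\Gi)') and (Gi, Mi)𝛁 = ((M\Mi)', (M\Mi)''). The sketch below applies them to the numbers example; the encoding and function names are illustrative assumptions.

```python
# Numbers context from the example table (illustrative encoding).
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}
OBJECTS = frozenset(CONTEXT)
ATTRIBUTES = frozenset({"composite", "even", "odd", "prime", "square"})


def intent_of(objects):
    return frozenset(m for m in ATTRIBUTES if all(m in CONTEXT[g] for g in objects))


def extent_of(attributes):
    return frozenset(g for g in OBJECTS if attributes <= CONTEXT[g])


def weak_negation(concept):
    r"""(A, B)^Δ = ((G \ A)'', (G \ A)'): smallest concept whose extent contains G \ A."""
    extent, _ = concept
    intent = intent_of(OBJECTS - extent)
    return extent_of(intent), intent


def weak_opposition(concept):
    r"""(A, B)^∇ = ((M \ B)', (M \ B)''): largest concept whose intent contains M \ B."""
    _, intent = concept
    extent = extent_of(ATTRIBUTES - intent)
    return extent, intent_of(extent)


even = (frozenset({2, 4, 6, 8, 10}), frozenset({"even"}))
print(weak_negation(even))    # the concept of odd numbers: ({1, 3, 5, 7, 9}, {odd})
print(weak_opposition(even))  # the bottom concept: empty extent, all five attributes
```

In this context, the weak negation of the concept of even numbers is the concept of odd numbers, while its weak opposition is the bottom concept, since no number is at once composite, odd, prime and square.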

Recovering the context from the line diagram

The line diagram of the concept lattice encodes enough information to recover the original context from which it was formed. Each object of the context corresponds to a lattice element, the element with the minimal object set that contains that object, and with an attribute set consisting of all attributes of the object. Symmetrically, each attribute of the context corresponds to a lattice element, the one with the minimal attribute set containing that attribute, and with an object set consisting of all objects with that attribute. We may label the nodes of the line diagram with the objects and attributes they correspond to; with this labeling, object x has attribute y if and only if there exists a monotonic path from x to y in the diagram.[12]
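Reading the incidence back off the lattice can be checked in code. In this sketch, the concept belonging to object g, ({g}'', {g}'), and the concept belonging to attribute m, ({m}', {m}''), are computed from the hypothetical dictionary encoding of the numbers example. The monotonic-path condition is replaced by the equivalent order test between these two concepts.

```python
# Numbers context from the example table (illustrative encoding).
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}
ATTRIBUTES = frozenset({"composite", "even", "odd", "prime", "square"})


def intent_of(objects):
    return frozenset(m for m in ATTRIBUTES if all(m in CONTEXT[g] for g in objects))


def extent_of(attributes):
    return frozenset(g for g in CONTEXT if attributes <= CONTEXT[g])


def object_concept(g):
    """({g}'', {g}'): the concept with the smallest extent containing g."""
    intent = intent_of({g})
    return extent_of(intent), intent


def attribute_concept(m):
    """({m}', {m}''): the concept with the smallest intent containing m."""
    extent = extent_of({m})
    return extent, intent_of(extent)


def has_attribute(g, m):
    """g I m iff the object concept of g lies below the attribute concept of m."""
    return object_concept(g)[0] <= attribute_concept(m)[0]


recovered = {g: {m for m in ATTRIBUTES if has_attribute(g, m)} for g in CONTEXT}
print(recovered == CONTEXT)  # True: every cell of the table is recovered from the order
```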

Implications and association rules with FCA

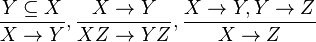

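In FCA, an implication A → B for subsets A, B of the set of attributes M (A, B ⊆ M) holds if A′ ⊆ B′, i.e. every object possessing each attribute from A also has each attribute from B. Implications obey the Armstrong rules:

- reflexivity: A → A,

- augmentation: if A → B, then A ∪ C → B,

- pseudo-transitivity: if A → B and B ∪ C → D, then A ∪ C → D.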
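The implication test A′ ⊆ B′ is a one-line check once the derivation operator is available. The following is a minimal Python sketch on the numbers example; the encoding and names are hypothetical.

```python
# Numbers context from the example table (illustrative encoding).
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}


def extent_of(attributes):
    """B' : numbers having every attribute in `attributes`."""
    return {g for g, attrs in CONTEXT.items() if set(attributes) <= attrs}


def implication_holds(premise, conclusion):
    """A -> B holds iff A' is a subset of B'."""
    return extent_of(premise) <= extent_of(conclusion)


print(implication_holds({"even", "square"}, {"composite"}))  # True: only 4 is even and square
print(implication_holds({"composite", "odd"}, {"square"}))   # True: only 9 is composite and odd
print(implication_holds({"prime"}, {"odd"}))                 # False: 2 is prime but even
```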

FCA Algorithms

Algorithms for generating formal concepts and constructing concept lattices

Kuznetsov and Obiedkov [13] survey many algorithms that have been developed for constructing concept lattices. These algorithms vary in many details, but are in general based on the idea that each edge of the line diagram of the concept lattice connects some concept C to the concept formed by the join of C with a single object. Thus, one can build up the concept lattice one concept at a time, by finding the neighbors in the line diagram of known concepts, starting from the concept with an empty set of objects. The amount of time spent to traverse the entire concept lattice in this way is polynomial in the number of input objects and attributes per generated concept.

Generation of the concept set presents two main problems: how to generate all concepts and how to avoid repetitive generation of the same concept or, at least, to determine whether a concept is generated for the first time. There are several ways to generate a new intent. Some algorithms (in particular, incremental ones) intersect a generated intent with some object intent. Other algorithms compute an intent explicitly by intersecting the intents of all objects of the corresponding extent. There are algorithms that, starting from object intents, create new intents by intersecting already obtained intents. Lastly, one of the algorithms [14] does not use the intersection operation to generate intents. It forms new intents by adding attributes to those already generated and tests a condition on the supports of attribute sets (the support of an attribute set is the number of objects whose intents contain all attributes from this set) to determine whether an attribute set is an intent.

The Close by One algorithm, for instance, generates concepts in the lexicographical order of their extents, assuming that there is a linear order on the set of objects. At each step of the algorithm there is a current object. The generation of a concept is considered canonical if its extent contains no object preceding the current object other than those already in the extent from which it was generated. Close by One uses the described canonicity test, a method for selecting subsets of a set of objects G, and an intermediate structure that helps to compute closures more efficiently using the generated concepts. Its time complexity is O(|G|²|M||L|), and its polynomial delay is O(|G|³|M|), where |G| is the cardinality of the set of objects G, |M| is the number of attributes in M, and |L| is the size of the concept lattice.
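The following Python function is a compact sketch of the Close by One idea described above, not the authors' reference implementation. It works over objects so that extents grow, assumes the objects are linearly ordered as sorted numbers, and omits the intermediate structure for faster closures. It starts from the concept with the smallest extent, repeatedly adds one later object, closes the result, and keeps it only if the closure adds no object preceding the current one. The context encoding and names are hypothetical.

```python
# Numbers context from the example table (illustrative encoding).
CONTEXT = {
    1: {"odd", "square"}, 2: {"even", "prime"}, 3: {"odd", "prime"},
    4: {"composite", "even", "square"}, 5: {"odd", "prime"},
    6: {"composite", "even"}, 7: {"odd", "prime"}, 8: {"composite", "even"},
    9: {"composite", "odd", "square"}, 10: {"composite", "even"},
}
OBJECTS = sorted(CONTEXT)  # the assumed linear order on G
ATTRIBUTES = frozenset({"composite", "even", "odd", "prime", "square"})


def extent_of(intent):
    """B' : objects having every attribute of `intent`."""
    return frozenset(g for g in OBJECTS if intent <= CONTEXT[g])


def close_by_one():
    concepts = []

    def generate(extent, intent, start):
        concepts.append((extent, intent))
        for j in range(start, len(OBJECTS)):
            g = OBJECTS[j]
            if g in extent:
                continue
            # Closure of extent + {g}: its intent is intent & g', its extent that intent's '.
            new_intent = intent & CONTEXT[g]
            new_extent = extent_of(new_intent)
            # Canonicity test: the closure must not add any object preceding g.
            if all((h in new_extent) == (h in extent) for h in OBJECTS[:j]):
                generate(new_extent, new_intent, j + 1)

    # Start from the concept with the smallest extent, ({}'', {}') = (M', M).
    generate(extent_of(ATTRIBUTES), ATTRIBUTES, 0)
    return concepts


concepts = close_by_one()
print(len(concepts))  # 14, each concept of the example generated exactly once
```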

The Chein algorithm [15] represents the objects by extent–intent pairs and generates each new concept intent as the intersection of the intents of two existing concepts. At every iteration step of the Chein algorithm, a new layer of concepts is created by intersecting pairs of concept intents from the current layer, and the new intent is searched for in the new layer. The time complexity of the modified version of the Chein algorithm is O(|G|³|M||L|), while its polynomial delay is O(|G|³|M|).

The algorithm by Bordat [16] uses a tree for fast storage and retrieval of concepts. It uses a technique that requires O(|M|) time to determine whether a concept is generated for the first time without any search: the uniqueness of a concept is tested by intersecting its intent with the content of the cache. The time complexity of Bordat is O(|G||M|²|L|), and the algorithm has a polynomial delay of O(|G||M|³).

The algorithm proposed by Norris [17] is essentially an incremental version of the Close by One algorithm, with time complexity O(|G|²|M||L|).

The algorithm proposed by Godin [18] has the worst-case time complexity quadratic in the number of concepts. This algorithm is based on the use of an efficiently computable hash function f (which is actually the cardinality of an intent) defined on the set of concepts.

The choice of an algorithm for constructing the concept lattice should be based on the properties of the input data. According to the survey [13], the recommendations are as follows: the Godin algorithm should be used for small and sparse contexts; for dense contexts, algorithms based on the canonicity test that are linear in the number of input objects, such as Close by One and Norris, should be used; Bordat performs well on contexts of average density, especially when the diagram graph is to be constructed.

Fast Close by One (FCbO) [19] can be seen as an extended version of Close by One that involves an improved canonicity test, which significantly reduces the number of formal concepts computed multiple times. It also combines depth-first and breadth-first search and employs an additional test, performed before a new formal concept is computed, thus eliminating some unnecessary computations. FCbO performs well on both sparse and dense data of reasonable size. From the point of view of asymptotic worst-case complexity, FCbO has time delay O(|G|³|M|) [20] and asymptotic time complexity O(|G|²|M||L|), because in the worst case FCbO can degenerate into the original Close by One.

In-Close [21] is another Close by One variant that introduces a 'partial-closure' canonicity test to further improve efficiency. In-Close has the same time complexity as FCbO. The latest version of In-Close also incorporates the breadth-and-depth approach of FCbO to produce a 'best of Close by One breed'.[22]

The AddIntent algorithm [23] produces not only the concept set, but also the diagram graph. Being incremental, it relies on the graph constructed from the first objects of the context to integrate the next object into the lattice. Therefore, its use is most appropriate in those applications that require both the concept set and diagram graph, for example, in applications related to information retrieval and document browsing. The best estimate for the algorithm’s upper bound complexity to construct a concept lattice L whose context has a set of objects G, each of which possesses at most max(|g′|) attributes, is O(|L||G|² max(|g′|)). The AddIntent algorithm outperformed a selection of other published algorithms in experimental comparison for most types of contexts and was close to the most efficient algorithm in other cases.

Relationships of FCA to models of knowledge representation and processing

FCA, biclustering and multidimensional clustering

There are several types of biclusters (co-clusters) known in the literature: [24] biclusters of equal values, similar values, coherent values, the commonality of them being the existence of inclusion-maximal set of objects described by inclusion-maximal set of attributes with some special pattern of behavior. Clustering objects based on sets of attributes taking similar values dates back to the work of Hartigan [25] and was called biclustering by Mirkin. [26] Attention to biclustering approaches started to grow from the beginning of the 2000s with the growth of the need to analyze similarities in gene expression data [27] and design of recommender systems. [28]

Given an object-attribute numerical data-table (a many-valued context in terms of FCA), the goal of biclustering is to group together some objects having similar values of some attributes. For example, in gene expression data, it is known that genes (objects) may share a common behavior for a subset of biological situations (attributes) only. One should accordingly produce local patterns to characterize biological processes, and these patterns should be allowed to overlap, since a gene may be involved in several processes. The same remark applies to recommender systems, where one is interested in local patterns characterizing groups of users that strongly share almost the same tastes for a subset of items.[28]

A bicluster in a binary object-attribute data-table is a pair (A,B) consisting of an inclusion-maximal set of objects A and an inclusion-maximal set of attributes B such that almost all objects from A have almost all attributes from B and vice versa. Of course, formal concepts can be considered "rigid" biclusters in which all objects have all attributes and vice versa. Hence, it is not surprising that some bicluster definitions coming from practice [29] are just definitions of a formal concept.[30] A bicluster of similar values in a numerical object-attribute data-table is usually defined [31] [32] [33] as a pair consisting of an inclusion-maximal set of objects and an inclusion-maximal set of attributes having similar values for the objects. Such a pair can be represented as an inclusion-maximal rectangle in the numerical table, modulo permutations of rows and columns. In [30], it was shown that biclusters of similar values correspond to triconcepts of a triadic context [34] where the third dimension is given by a scale that represents numerical attribute values by binary attributes. This fact can be generalized to the n-dimensional case, where n-dimensional clusters of similar values in n-dimensional data are represented by (n+1)-dimensional concepts. This reduction allows one to use standard definitions and algorithms from multidimensional concept analysis [33] [35] for computing multidimensional clusters.
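A bicluster of similar values can be tested directly from this definition. In the sketch below, "similar" is assumed to mean that, for each chosen attribute, the values over the chosen objects differ by at most a threshold `THETA`. The table, the threshold and the function names are hypothetical, and biclusters are assumed to be non-empty. Because this similarity condition survives the removal of objects or attributes, inclusion-maximality only has to be checked by trying to add a single object or a single attribute.

```python
# Hypothetical numerical data-table (many-valued context): object -> attribute -> value.
TABLE = {
    "g1": {"m1": 1, "m2": 5},
    "g2": {"m1": 2, "m2": 9},
    "g3": {"m1": 7, "m2": 6},
}
THETA = 1  # assumed similarity threshold
ALL_ATTRIBUTES = {m for row in TABLE.values() for m in row}


def similar(objects, attributes):
    """For every attribute, the values over `objects` lie within THETA of each other."""
    return all(
        max(TABLE[g][m] for g in objects) - min(TABLE[g][m] for g in objects) <= THETA
        for m in attributes
    )


def is_bicluster(objects, attributes):
    """(A, B) has similar values and is inclusion-maximal."""
    if not objects or not attributes:  # assumption: biclusters are non-empty
        return False
    if not similar(objects, attributes):
        return False
    can_add_object = any(
        similar(objects | {g}, attributes) for g in TABLE if g not in objects)
    can_add_attribute = any(
        similar(objects, attributes | {m}) for m in ALL_ATTRIBUTES if m not in attributes)
    return not (can_add_object or can_add_attribute)


print(is_bicluster({"g1", "g2"}, {"m1"}))  # True
print(is_bicluster({"g1"}, {"m1"}))        # False: g2 can still be added
print(is_bicluster({"g1", "g3"}, {"m2"}))  # True
```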

Tools

Many FCA software applications are available today. The main purpose of these tools varies from formal context creation to formal concept mining and generating the concept lattice of a given formal context and the corresponding association rules. Most of these tools are academic and still under active development. A non-exhaustive list of FCA tools can be found on the FCA software website. Most of these tools are open-source applications, such as ConExp, ToscanaJ, Lattice Miner,[36] Coron and FcaBedrock.

See also

- Association rule learning

- Biclustering

- Cluster analysis

- Commonsense reasoning

- Conceptual clustering

- Concept learning

- Concept mining

- Correspondence analysis

- Description logic

- Factor analysis

- Graphical model

- Grounded theory

- Inductive logic programming

- Pattern theory

- Statistical relational learning

Notes

- Rudolf Wille: Restructuring lattice theory: An approach based on hierarchies of concepts. Reprint in: ICFCA '09: Proceedings of the 7th International Conference on Formal Concept Analysis, Berlin, Heidelberg, 2009, p. 314.

- Hartmut von Hentig: Magier oder Magister? Über die Einheit der Wissenschaft im Verständigungsprozeß. Klett 1972 / Suhrkamp 1974. Cited in Karl Erich Wolff: Ordnung, Wille und Begriff, Ernst Schröder Zentrum für Begriffliche Wissensverarbeitung, Darmstadt 2003.

- Johannes Wollbold: Attribute Exploration of Gene Regulatory Processes. PhD thesis, University of Jena 2011, p. 9

- Bernhard Ganter and Rudolf Wille: Formal Concept Analysis: Mathematical Foundations. Springer, Berlin, ISBN 3-540-62771-5, p. 1

- Rudolf Wille: Formal Concept Analysis as Mathematical Theory of Concepts and Concept Hierarchies. In: B. Ganter et al.: Formal Concept Analysis. Foundations and Applications, Springer, 2005, p. 1f.

- Ganter B., Wille R. Formal Concept Analysis: Mathematical Foundations, 1st edn. Springer-Verlag New York, Inc., Secaucus. 1999

- Ignatov D.I., Kuznetsov S.O., Zhukov L.E., Poelmans J. Can triconcepts become triclusters? In: International Journal of General Systems, Vol. 42, No. 6, pp. 572-593, 2013.

- Wolff, section 2.

- Stumme, Theorem 1.

- Wille, Rudolf (2000), "Boolean Concept Logic", in Ganter, B.; Mineau, G. W., ICCS 2000 Conceptual Structures: Logical, Linguistic and Computational Issues, LNAI 1867, Springer, pp. 317–331, ISBN 978-3-540-67859-5.

- Kwuida, Léonard (2004), Dicomplemented Lattices. A contextual generalization of Boolean algebras, Shaker Verlag, ISBN 978-3-8322-3350-1

- Wolff, section 3.

- Kuznetsov S., Obiedkov S. Comparing Performance of Algorithms for Generating Concept Lattices, 14, Journal of Experimental and Theoretical Artificial Intelligence, Taylor & Francis, ISSN 0952–813X print/ISSN 1362–3079 online, pp. 189–216, 2002

- Stumme G., Taouil R., Bastide Y., Pasquier N., Lakhal L. Fast Computation of Concept Lattices Using Data Mining Techniques. Proc. 7th Int. Workshop on Knowledge Representation Meets Databases (KRDB 2000) pp. 129-139, 2000.

- Chein M., Algorithme de recherche des sous-matrices premières d'une matrice, Bull. Math. R.S. Roumanie, No. 13, No. 1, 21-25, 1969

- Bordat J.P. Calcul pratique du treillis de Galois d’une correspondance, Math. Sci. Hum. no. 96, pp. 31–47, 1986

- Norris E.M., An algorithm for computing the maximal rectangles in a binary relation. Rev. Roum. de Math. Pures Appliquées, 23, No. 2, 243-250, 1978

- Godin R., Missaoui R. and Alaoui H. Incremental Concept Formation Algorithms Based on Galois Lattices. Computational Intelligence, 1995

- Krajca P., Outrata J., Vychodil V. Advances in algorithms based on CbO. In: Proceedings of the CLA, pp. 325–337, 2010

- Outrata J., Vychodil V. Fast algorithm for computing fixpoints of Galois connections induced by object-attribute relational data. Information Sciences, Volume 185, Issue 1, 15 February 2012, Pages 114-127, ISSN 0020-0255

- Andrews, Simon (2014). "A Partial-Closure Canonicity Test to Improve the Performance of CbO-Type Algorithms". Lecture Notes in Artificial Intelligence 8577.

- Andrews, Simon (Feb 2015). "A 'Best-of-Breed' Approach for Designing a Fast Algorithm for Computing Fixpoints of Galois Connections". Information Sciences 295. doi:10.1016/j.ins.2014.10.011.

- van der Merwe D., Obiedkov S., Kourie D. AddIntent: A New Incremental Algorithm for Constructing Concept Lattices, in: Proceedings of the 2nd International Conference on Formal Concept Analysis (ICFCA 2004). "Concept Lattices, Proceedings", Lecture Notes in Artificial Intelligence, vol. 2961, pp. 372-385, 2004

- Madeira S., Oliveira A. Biclustering algorithms for biological data analysis: a survey. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 1(1):24-45, 2004.

- Hartigan, J. A. Direct Clustering of a Data Matrix. Journal of the American Statistical Association, 67(337):123-129, 1972.

- Mirkin B. Mathematical classification and clustering. Boston-Dordrecht: Kluwer Academic Publishers, 1996

- Cheng Y., Church G. Biclustering of expression data. In Proc. 8th International Conference on Intelligent Systems for Molecular Biology (ISMB), 2000, pp. 93-103

- Adomavicius G., Tuzhilin A. Toward the next generation of recommender systems: a survey of the state-of-the-art and possible extensions. IEEE Transactions on Knowledge and Data Engineering, 17(6): 734-749, 2005.

- A. Prelic, S. Bleuler, P. Zimmermann, A. Wille, P. Buhlmann, W. Gruissem, L. Hennig, L. Thiele, and E. Zitzler. A Systematic Comparison and Evaluation of Biclustering Methods for Gene Expression Data. Bioinformatics, 22(9):1122-1129, 2006

- Kaytoue M., Kuznetsov S., Macko J., Wagner Meira Jr., Napoli A. Mining Biclusters of Similar Values with Triadic Concept Analysis. CLA: 175-190, 2011

- R. G. Pensa, C. Leschi, J. Besson, J.-F. Boulicaut. Assessment of discretization techniques for relevant pattern discovery from gene expression data. In M. J. Zaki, S. Morishita, and I. Rigoutsos, editors, Proceedings of the 4th ACM SIGKDD Workshop on Data Mining in Bioinformatics (BIOKDD 2004), 24-30, 2004.

- Besson J., Robardet C., Raedt L.D., Boulicaut J.-F. Mining bi-sets in numerical data. In S. Dzeroski and J. Struyf, editors, KDID, LNCS 4747, pp. 11-23. Springer, 2007.

- Cerf L., Besson J., Robardet C., Boulicaut J.-F. Closed patterns meet n-ary relations. TKDD, 3(1), 2009

- Wille R. The basic theorem of triadic concept analysis. Order 12, 149-158, 1995

- Voutsadakis G. Polyadic Concept Analysis. Order, 19 (3), 295-304, 2002

- Boumedjout Lahcen and Leonard Kwuida. Lattice Miner: A Tool for Concept Lattice Construction and Exploration. In Supplementary Proceeding of International Conference on Formal Concept Analysis (ICFCA'10), 2010

References

- Ganter, Bernhard; Stumme, Gerd; Wille, Rudolf, eds. (2005), Formal Concept Analysis: Foundations and Applications, Lecture Notes in Artificial Intelligence, no. 3626, Springer-Verlag, ISBN 3-540-27891-5

- Ganter, Bernhard; Wille, Rudolf (1998), Formal Concept Analysis: Mathematical Foundations, Springer-Verlag, Berlin, ISBN 3-540-62771-5. Translated by C. Franzke.

- Carpineto, Claudio; Romano, Giovanni (2004), Concept Data Analysis: Theory and Applications, Wiley, ISBN 978-0-470-85055-8.

- Wolff, Karl Erich (1994), "A first course in Formal Concept Analysis", in F. Faulbaum, StatSoft '93 (PDF), Gustav Fischer Verlag, pp. 429–438.

- Davey, B.A.; Priestley, H. A. (2002), "3. Formal Concept Analysis", Introduction to Lattices and Order, Cambridge University Press, ISBN 978-0-521-78451-1.

External links

- A Formal Concept Analysis Homepage

- Demo

- 11th International Conference on Formal Concept Analysis. ICFCA 2013 - Dresden, Germany - May 21-24, 2013