Finite element method


The finite element method (FEM) is a numerical technique for finding approximate solutions to boundary value problems for partial differential equations. It is also referred to as finite element analysis (FEA). FEM subdivides a large problem into smaller, simpler parts, called finite elements. The simple equations that model these finite elements are then assembled into a larger system of equations that models the entire problem. FEM then uses variational methods from the calculus of variations to approximate a solution by minimizing an associated error function.

Basic concepts

The subdivision of a whole domain into simpler parts has several advantages:[1]

- Accurate representation of complex geometry

- Inclusion of dissimilar material properties

- Easy representation of the total solution

- Capture of local effects.

A typical application of the method involves (1) dividing the domain of the problem into a collection of subdomains, with each subdomain represented by a set of element equations that approximate the original problem, followed by (2) systematically recombining all sets of element equations into a global system of equations for the final calculation. The global system of equations has known solution techniques, and can be calculated from the initial values of the original problem to obtain a numerical answer.

In the first step above, the element equations are simple equations that locally approximate the original complex equations to be studied, where the original equations are often partial differential equations (PDEs). To explain the approximation in this process, FEM is commonly introduced as a special case of the Galerkin method. The process, in mathematical language, is to construct an integral of the inner product of the residual and the weight functions and set the integral to zero. In simple terms, it is a procedure that minimizes the error of approximation by fitting trial functions into the PDE. The residual is the error caused by the trial functions, and the weight functions are polynomial approximation functions that project the residual. The process eliminates all the spatial derivatives from the PDE, thus approximating the PDE locally with

- a set of algebraic equations for steady state problems,

- a set of ordinary differential equations for transient problems.

These equation sets are the element equations. They are linear if the underlying PDE is linear, and vice versa. Algebraic equation sets that arise in the steady state problems are solved using numerical linear algebra methods, while ordinary differential equation sets that arise in the transient problems are solved by numerical integration using standard techniques such as Euler's method or the Runge-Kutta method.
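As a minimal sketch of the transient case, the following integrates a small system of ordinary differential equations with Euler's method. The model problem is an assumption made for illustration: the heat equation u_t = u_xx on (0, 1) with zero boundary values, discretized by linear elements on a uniform mesh with a lumped (diagonal) mass matrix. The function name `euler_heat` and all parameter values are hypothetical.

```python
import math


def euler_heat(n, dt, steps):
    """Forward Euler for du_j/dt = (u_{j-1} - 2 u_j + u_{j+1}) / h**2.

    This ODE system is what linear elements with a lumped (diagonal) mass
    matrix give for u_t = u_xx on a uniform mesh with u = 0 at both ends.
    """
    h = 1.0 / (n + 1)
    u = [math.sin(math.pi * (j + 1) * h) for j in range(n)]  # initial state
    for _ in range(steps):
        padded = [0.0] + u + [0.0]           # homogeneous boundary values
        u = [
            padded[j + 1]
            + dt * (padded[j] - 2.0 * padded[j + 1] + padded[j + 2]) / h**2
            for j in range(n)
        ]
    return u


u = euler_heat(n=9, dt=0.001, steps=100)      # integrate to t = 0.1
midpoint = u[4]                               # node at x = 0.5
print(midpoint, math.exp(-math.pi**2 * 0.1))  # numerical vs. continuum decay
```

The explicit Euler step is only stable here for time steps of roughly dt <= h**2 / 2, which is one reason implicit schemes are often preferred for such systems in practice.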

In step (2) above, a global system of equations is generated from the element equations through a transformation of coordinates from the subdomains' local nodes to the domain's global nodes. This spatial transformation includes appropriate orientation adjustments as applied in relation to the reference coordinate system. The process is often carried out by FEM software using coordinate data generated from the subdomains.
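The recombination in step (2) can be sketched for a one-dimensional mesh of two-node elements. The connectivity list, the local matrix, and the function name `assemble_global` are illustrative assumptions; a dense matrix is used only to keep the sketch short.

```python
def assemble_global(num_nodes, elements, element_matrices):
    """Scatter-add local element matrices into a dense global matrix.

    elements[e] lists the global node indices of element e;
    element_matrices[e][a][b] couples local nodes a and b of element e.
    """
    K = [[0.0] * num_nodes for _ in range(num_nodes)]
    for nodes, Ke in zip(elements, element_matrices):
        for a, i in enumerate(nodes):        # local index a -> global index i
            for b, j in enumerate(nodes):    # local index b -> global index j
                K[i][j] += Ke[a][b]
    return K


# Hypothetical example: three equal two-node elements on four nodes,
# each with the local matrix of a unit-stiffness spring.
elements = [(0, 1), (1, 2), (2, 3)]
local = [[1.0, -1.0], [-1.0, 1.0]]
K = assemble_global(4, elements, [local] * 3)
for row in K:
    print(row)
```

Nodes shared by two elements receive contributions from both, which is why the interior diagonal entries come out twice as large as the ones at the two ends.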

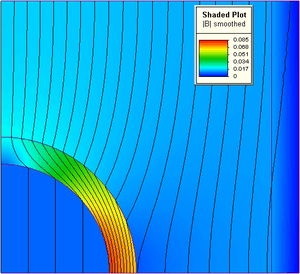

FEM is best understood from its practical application, known as finite element analysis (FEA). FEA as applied in engineering is a computational tool for performing engineering analysis. It includes the use of mesh generation techniques for dividing a complex problem into small elements, as well as the use of a software program that implements a FEM algorithm. In applying FEA, the complex problem is usually a physical system whose underlying physics, such as the Euler-Bernoulli beam equation, the heat equation, or the Navier-Stokes equations, is expressed in either PDEs or integral equations, while the divided small elements of the complex problem represent different areas in the physical system.

FEA is a good choice for analyzing problems over complicated domains (like cars and oil pipelines), when the domain changes (as during a solid state reaction with a moving boundary), when the desired precision varies over the entire domain, or when the solution lacks smoothness. For instance, in a frontal crash simulation it is possible to increase prediction accuracy in "important" areas like the front of the car and reduce it in its rear (thus reducing cost of the simulation). Another example would be in numerical weather prediction, where it is more important to have accurate predictions over developing highly nonlinear phenomena (such as tropical cyclones in the atmosphere, or eddies in the ocean) rather than relatively calm areas.

History

While it is difficult to quote a date of the invention of the finite element method, the method originated from the need to solve complex elasticity and structural analysis problems in civil and aeronautical engineering. Its development can be traced back to the work by A. Hrennikoff[2] and R. Courant.[3] In China, in the late 1950s and early 1960s, based on the computations of dam constructions, K. Feng proposed a systematic numerical method for solving partial differential equations. The method was called the finite difference method based on variation principle, which was another independent invention of the finite element method. Although the approaches used by these pioneers are different, they share one essential characteristic: mesh discretization of a continuous domain into a set of discrete sub-domains, usually called elements.

Hrennikoff's work discretizes the domain by using a lattice analogy, while Courant's approach divides the domain into finite triangular subregions to solve second order elliptic partial differential equations (PDEs) that arise from the problem of torsion of a cylinder. Courant's contribution was evolutionary, drawing on a large body of earlier results for PDEs developed by Rayleigh, Ritz, and Galerkin.

The finite element method obtained its real impetus in the 1960s and 1970s by the developments of J. H. Argyris with co-workers at the University of Stuttgart, R. W. Clough with co-workers at UC Berkeley, O. C. Zienkiewicz with co-workers Ernest Hinton, Bruce Irons[4] and others at the University of Swansea, Philippe G. Ciarlet at the University of Paris 6 and Richard Gallagher with co-workers at Cornell University. Further impetus was provided in these years by available open source finite element software programs. NASA sponsored the original version of NASTRAN, and UC Berkeley made the finite element program SAP IV[5] widely available. In Norway the ship classification society Det Norske Veritas (now DNV GL) developed Sesam in 1969 for use in analysis of ships.[6] A rigorous mathematical basis to the finite element method was provided in 1973 with the publication by Strang and Fix.[7] The method has since been generalized for the numerical modeling of physical systems in a wide variety of engineering disciplines, e.g., electromagnetism, heat transfer, and fluid dynamics.[8][9]

Technical discussion

The structure of finite element methods

Finite element methods are numerical methods for approximating the solutions of mathematical problems that are usually formulated to state precisely some aspect of physical reality.

A finite element method is characterized by a variational formulation, a discretization strategy, one or more solution algorithms and post-processing procedures.

Examples of variational formulation are the Galerkin method, the discontinuous Galerkin method, mixed methods, etc.

A discretization strategy is understood to mean a clearly defined set of procedures that cover (a) the creation of finite element meshes, (b) the definition of basis functions on reference elements (also called shape functions) and (c) the mapping of reference elements onto the elements of the mesh. Examples of discretization strategies are the h-version, p-version, hp-version, x-FEM, isogeometric analysis, etc. Each discretization strategy has certain advantages and disadvantages. A reasonable criterion in selecting a discretization strategy is to realize nearly optimal performance for the broadest set of mathematical models in a particular model class.

There are various numerical solution algorithms that can be classified into two broad categories: direct and iterative solvers. These algorithms are designed to exploit the sparsity of matrices that depend on the choices of variational formulation and discretization strategy.

Postprocessing procedures are designed for the extraction of the data of interest from a finite element solution. In order to meet the requirements of solution verification, postprocessors need to provide for a posteriori error estimation in terms of the quantities of interest. When the errors of approximation are larger than what is considered acceptable then the discretization has to be changed either by an automated adaptive process or by action of the analyst. There are some very efficient postprocessors that provide for the realization of superconvergence.

Illustrative problems P1 and P2

We will illustrate the finite element method using two sample problems from which the general method can be extrapolated. It is assumed that the reader is familiar with calculus and linear algebra.

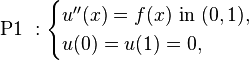

P1 is a one-dimensional problem

$$\text{P1}: \begin{cases} u''(x) = f(x) & \text{in } (0,1), \\ u(0) = u(1) = 0, \end{cases}$$

where $f$ is given, $u$ is an unknown function of $x$, and $u''$ is the second derivative of $u$ with respect to $x$.

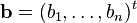

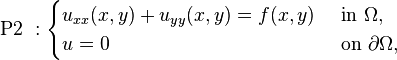

P2 is a two-dimensional problem (Dirichlet problem)

$$\text{P2}: \begin{cases} u_{xx}(x,y) + u_{yy}(x,y) = f(x,y) & \text{in } \Omega, \\ u = 0 & \text{on } \partial\Omega, \end{cases}$$

where $\Omega$ is a connected open region in the $(x,y)$ plane whose boundary $\partial\Omega$ is "nice" (e.g., a smooth manifold or a polygon), and $u_{xx}$ and $u_{yy}$ denote the second derivatives with respect to $x$ and $y$, respectively.

The problem P1 can be solved "directly" by computing antiderivatives. However, this method of solving the boundary value problem (BVP) works only when there is one spatial dimension and does not generalize to higher-dimensional problems or to problems like $u + u'' = f$. For this reason, we will develop the finite element method for P1 and outline its generalization to P2.

Our explanation will proceed in two steps, which mirror two essential steps one must take to solve a boundary value problem (BVP) using the FEM.

- In the first step, one rephrases the original BVP in its weak form. Little to no computation is usually required for this step. The transformation is done by hand on paper.

- The second step is the discretization, where the weak form is discretized in a finite-dimensional space.

After this second step, we have concrete formulae for a large but finite-dimensional linear problem whose solution will approximately solve the original BVP. This finite-dimensional problem is then implemented on a computer.

Weak formulation

The first step is to convert P1 and P2 into their equivalent weak formulations.

The weak form of P1

If $u$ solves P1, then for any smooth function $v$ that satisfies the displacement boundary conditions, i.e. $v = 0$ at $x = 0$ and $x = 1$, we have

$$\int_0^1 f(x)v(x)\,dx = \int_0^1 u''(x)v(x)\,dx. \qquad (1)$$

Conversely, if $u$ with $u(0) = u(1) = 0$ satisfies (1) for every smooth function $v(x)$ then one may show that this $u$ will solve P1. The proof is easier for twice continuously differentiable $u$ (mean value theorem), but may be proved in a distributional sense as well.

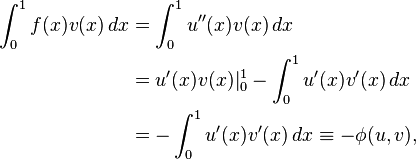

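By using integration by parts on the right-hand-side of (1), we obtain

$$\int_0^1 f(x)v(x)\,dx = \int_0^1 u''(x)v(x)\,dx = u'(x)v(x)\Big|_0^1 - \int_0^1 u'(x)v'(x)\,dx = -\int_0^1 u'(x)v'(x)\,dx = -\phi(u,v), \qquad (2)$$

where $\phi(u,v)$ denotes $\int_0^1 u'(x)v'(x)\,dx$ and we have used the assumption that $v(0) = v(1) = 0$.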
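The integration-by-parts identity behind (2), namely that the integral of u''v over (0, 1) equals minus the integral of u'v' when v vanishes at both endpoints, can be checked numerically. The following is only a sanity check under assumed choices: the pair u(x) = sin(πx), v(x) = x(1 − x) and the helper `midpoint_rule` are illustrative and not part of the derivation.

```python
import math


def midpoint_rule(g, a, b, n=2000):
    """Composite midpoint quadrature of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


# Assumed test pair: u(x) = sin(pi x) and v(x) = x (1 - x); both vanish at 0 and 1.
u_prime = lambda x: math.pi * math.cos(math.pi * x)
u_second = lambda x: -math.pi**2 * math.sin(math.pi * x)
v = lambda x: x * (1.0 - x)
v_prime = lambda x: 1.0 - 2.0 * x

lhs = midpoint_rule(lambda x: u_second(x) * v(x), 0.0, 1.0)        # integral of u'' v
phi = midpoint_rule(lambda x: u_prime(x) * v_prime(x), 0.0, 1.0)   # integral of u' v'
print(lhs, -phi)  # the two numbers should agree up to quadrature error
```

For this particular pair both sides equal −4/π, so the boundary term indeed drops out.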

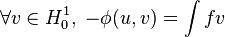

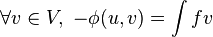

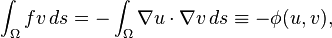

The weak form of P2

If we integrate by parts using a form of Green's identities, we see that if $u$ solves P2, then for any $v$,

$$\int_\Omega f v\,ds = -\int_\Omega \nabla u \cdot \nabla v\,ds = -\phi(u,v),$$

where $\nabla$ denotes the gradient and $\cdot$ denotes the dot product in the two-dimensional plane. Once more $\phi$ can be turned into an inner product on a suitable space $H_0^1(\Omega)$ of "once differentiable" functions of $\Omega$ that are zero on $\partial\Omega$. We have also assumed that $v \in H_0^1(\Omega)$ (see Sobolev spaces). Existence and uniqueness of the solution can also be shown.

A proof outline of existence and uniqueness of the solution

We can loosely think of $H_0^1(0,1)$ to be the absolutely continuous functions of $(0,1)$ that are $0$ at $x = 0$ and $x = 1$ (see Sobolev spaces). Such functions are (weakly) "once differentiable" and it turns out that the symmetric bilinear map $\phi$ then defines an inner product which turns $H_0^1(0,1)$ into a Hilbert space (a detailed proof is nontrivial). On the other hand, the left-hand-side $\int_0^1 f(x)v(x)\,dx$ is also an inner product, this time on the Lp space $L^2(0,1)$. An application of the Riesz representation theorem for Hilbert spaces shows that there is a unique $u$ solving (2) and therefore P1. This solution is a-priori only a member of $H_0^1(0,1)$, but using elliptic regularity, will be smooth if $f$ is.

Discretization

Figure: a function in $H_0^1$, with zero values at the endpoints (blue), and a piecewise linear approximation (red).

P1 and P2 are ready to be discretized, which leads to a common sub-problem (3). The basic idea is to replace the infinite-dimensional linear problem:

- Find $u \in H_0^1$ such that $\forall v \in H_0^1,\; -\phi(u,v) = \int f v$

with a finite-dimensional version:

- (3) Find $u \in V$ such that $\forall v \in V,\; -\phi(u,v) = \int f v$

where $V$ is a finite-dimensional subspace of $H_0^1$. There are many possible choices for $V$ (one possibility leads to the spectral method). However, for the finite element method we take $V$ to be a space of piecewise polynomial functions.

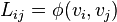

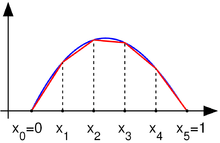

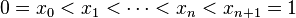

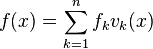

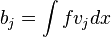

For problem P1

We take the interval $(0,1)$, choose $n$ values of $x$ with $0 = x_0 < x_1 < \cdots < x_n < x_{n+1} = 1$ and we define $V$ by:

$$V = \{ v : [0,1] \to \mathbb{R} \;:\; v \text{ is continuous, } v|_{[x_k, x_{k+1}]} \text{ is linear for } k = 0, \dots, n, \text{ and } v(0) = v(1) = 0 \}$$

where we define $x_0 = 0$ and $x_{n+1} = 1$. Observe that functions in $V$ are not differentiable according to the elementary definition of calculus. Indeed, if $v \in V$ then the derivative is typically not defined at any $x = x_k$, $k = 1, \dots, n$. However, the derivative exists at every other value of $x$ and one can use this derivative for the purpose of integration by parts.
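A member of this space is fully described by its values at the mesh points. The sketch below evaluates such a continuous piecewise linear function and its slope on each interval; the mesh, the nodal values, and the function names are hypothetical choices made for illustration.

```python
from bisect import bisect_right


def evaluate_piecewise_linear(nodes, values, x):
    """Evaluate the continuous piecewise linear function through
    (nodes[k], values[k]) at a point x in [nodes[0], nodes[-1]]."""
    k = min(max(bisect_right(nodes, x) - 1, 0), len(nodes) - 2)
    t = (x - nodes[k]) / (nodes[k + 1] - nodes[k])   # local coordinate in [0, 1]
    return (1.0 - t) * values[k] + t * values[k + 1]


def slope(nodes, values, k):
    """Derivative on the open interval (nodes[k], nodes[k + 1])."""
    return (values[k + 1] - values[k]) / (nodes[k + 1] - nodes[k])


# Hypothetical mesh with three interior nodes; values vanish at both endpoints.
nodes = [0.0, 0.25, 0.5, 0.75, 1.0]
values = [0.0, 1.0, 0.5, 2.0, 0.0]
print(evaluate_piecewise_linear(nodes, values, 0.375))   # halfway between nodes 1 and 2
print(slope(nodes, values, 0), slope(nodes, values, 1))  # the slope jumps at node 1
```

The jump in slope at an interior node is exactly the point where the elementary derivative fails to exist, while the slope on each open interval is well defined.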

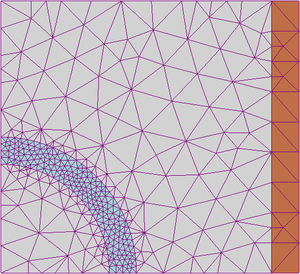

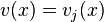

For problem P2

We need $V$ to be a set of functions of $\Omega$. In the figure on the right, we have illustrated a triangulation of a 15 sided polygonal region $\Omega$ in the plane (below), and a piecewise linear function (above, in color) of this polygon which is linear on each triangle of the triangulation; the space $V$ would consist of functions that are linear on each triangle of the chosen triangulation.
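On a single triangle, a function that is linear in x and y is determined by its three vertex values. One common way to evaluate it, sketched here under the assumption of a non-degenerate triangle, is through barycentric coordinates; the function names and the example triangle are hypothetical.

```python
def barycentric(tri, p):
    """Barycentric coordinates of point p with respect to triangle tri."""
    (x1, y1), (x2, y2), (x3, y3) = tri
    det = (x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1)  # twice the signed area
    l2 = ((p[0] - x1) * (y3 - y1) - (x3 - x1) * (p[1] - y1)) / det
    l3 = ((x2 - x1) * (p[1] - y1) - (p[0] - x1) * (y2 - y1)) / det
    return 1.0 - l2 - l3, l2, l3


def evaluate_on_triangle(tri, vertex_values, p):
    """Value at p of the linear function with the given vertex values."""
    return sum(l * v for l, v in zip(barycentric(tri, p), vertex_values))


# Hypothetical example: the vertex values sample the function 1 + 2x + 4y.
tri = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
vertex_values = [1.0, 3.0, 5.0]
print(evaluate_on_triangle(tri, vertex_values, (0.25, 0.5)))
```

Because neighbouring triangles share the vertex values along a common edge, functions built this way are automatically continuous across the triangulation.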

One often reads $V_h$ instead of $V$ in the literature. The reason is that one hopes that as the underlying triangular grid becomes finer and finer, the solution of the discrete problem (3) will in some sense converge to the solution of the original boundary value problem P2. The triangulation is then indexed by a real valued parameter $h > 0$ which one takes to be very small. This parameter will be related to the size of the largest or average triangle in the triangulation. As we refine the triangulation, the space of piecewise linear functions $V$ must also change with $h$, hence the notation $V_h$. Since we do not perform such an analysis, we will not use this notation.

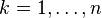

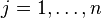

Choosing a basis


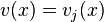

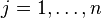

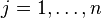

To complete the discretization, we must select a basis of $V$. In the one-dimensional case, for each control point $x_k$ we will choose the piecewise linear function $v_k$ in $V$ whose value is $1$ at $x_k$ and zero at every $x_j$, $j \neq k$, i.e.,

$$v_k(x) = \begin{cases} \dfrac{x - x_{k-1}}{x_k - x_{k-1}} & \text{if } x \in [x_{k-1}, x_k], \\ \dfrac{x_{k+1} - x}{x_{k+1} - x_k} & \text{if } x \in [x_k, x_{k+1}], \\ 0 & \text{otherwise}, \end{cases}$$

for $k = 1, \dots, n$; this basis is a shifted and scaled tent function. For the two-dimensional case, we choose again one basis function $v_k$ per vertex $x_k$ of the triangulation of the planar region $\Omega$. The function $v_k$ is the unique function of $V$ whose value is $1$ at $x_k$ and zero at every $x_j$, $j \neq k$.
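The one-dimensional tent functions are simple to write down in code. This sketch, on a hypothetical non-uniform mesh, checks the defining property that each basis function equals 1 at its own node and 0 at every other node; the function name `hat` is an assumption.

```python
def hat(nodes, k, x):
    """Tent function attached to nodes[k], for k = 1, ..., len(nodes) - 2."""
    left, mid, right = nodes[k - 1], nodes[k], nodes[k + 1]
    if left <= x <= mid:
        return (x - left) / (mid - left)      # rising branch
    if mid < x <= right:
        return (right - x) / (right - mid)    # falling branch
    return 0.0                                # outside the support


nodes = [0.0, 0.2, 0.5, 0.6, 1.0]             # hypothetical mesh, three interior nodes
table = [[hat(nodes, k, xj) for xj in nodes] for k in (1, 2, 3)]
for row in table:
    print(row)                                # one 1.0 per row, at the function's own node
```

A consequence of this property is that the coefficient of each basis function in an expansion is simply the value of the expanded function at the corresponding node.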

Depending on the author, the word "element" in "finite element method" refers either to the triangles in the domain, the piecewise linear basis function, or both. So for instance, an author interested in curved domains might replace the triangles with curved primitives, and so might describe the elements as being curvilinear. On the other hand, some authors replace "piecewise linear" by "piecewise quadratic" or even "piecewise polynomial". The author might then say "higher order element" instead of "higher degree polynomial". The finite element method is not restricted to triangles (or tetrahedra in 3-d, or higher order simplexes in multidimensional spaces), but can be defined on quadrilateral subdomains (hexahedra, prisms, or pyramids in 3-d, and so on). Higher order shapes (curvilinear elements) can be defined with polynomial and even non-polynomial shapes (e.g. ellipse or circle).

Examples of methods that use higher degree piecewise polynomial basis functions are the hp-FEM and spectral FEM.

More advanced implementations (adaptive finite element methods) use error estimation theory to assess the quality of the results and modify the mesh during the solution, aiming to achieve an approximate solution within some bounds from the 'exact' solution of the continuum problem. Mesh adaptivity may use various techniques, the most popular of which are:

- moving nodes (r-adaptivity)

- refining (and unrefining) elements (h-adaptivity)

- changing order of base functions (p-adaptivity)

- combinations of the above (hp-adaptivity).

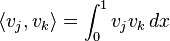

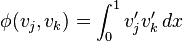

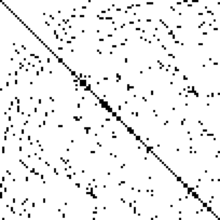

Small support of the basis

Figure: solving a two-dimensional problem in the disk centered at the origin and radius 1, with zero boundary conditions. (a) The triangulation.

The primary advantage of this choice of basis is that the inner products

$$\langle v_j, v_k \rangle = \int_0^1 v_j v_k\,dx$$

and

$$\phi(v_j, v_k) = \int_0^1 v_j' v_k'\,dx$$

will be zero for almost all $j, k$. (The matrix containing $\langle v_j, v_k \rangle$ in the $(j,k)$ location is known as the Gramian matrix.) In the one dimensional case, the support of $v_k$ is the interval $[x_{k-1}, x_{k+1}]$. Hence, the integrands of $\langle v_j, v_k \rangle$ and $\phi(v_j, v_k)$ are identically zero whenever $|j - k| > 1$.

Similarly, in the planar case, if $x_j$ and $x_k$ do not share an edge of the triangulation, then the integrals

$$\int_\Omega v_j v_k\,ds$$

and

$$\int_\Omega \nabla v_j \cdot \nabla v_k\,ds$$

are both zero.
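The sparsity claim for the one-dimensional case can be observed directly by integrating products of tent functions and of their derivatives. The sketch below assumes a uniform mesh with four interior nodes and uses a simple midpoint rule; all names are hypothetical, and the quadrature is only approximate for the Gramian entries.

```python
def hat(nodes, k, x):
    """Tent function: 1 at nodes[k], 0 at every other node."""
    left, mid, right = nodes[k - 1], nodes[k], nodes[k + 1]
    if left <= x <= mid:
        return (x - left) / (mid - left)
    if mid < x <= right:
        return (right - x) / (right - mid)
    return 0.0


def hat_prime(nodes, k, x):
    """Derivative of the tent function away from the nodes (piecewise constant)."""
    left, mid, right = nodes[k - 1], nodes[k], nodes[k + 1]
    if left < x < mid:
        return 1.0 / (mid - left)
    if mid < x < right:
        return -1.0 / (right - mid)
    return 0.0


def integrate(g, nodes, sub=50):
    """Composite midpoint rule applied separately on each mesh interval."""
    total = 0.0
    for a, b in zip(nodes, nodes[1:]):
        step = (b - a) / sub
        total += step * sum(g(a + (i + 0.5) * step) for i in range(sub))
    return total


nodes = [i / 5 for i in range(6)]      # assumed uniform mesh, four interior nodes
idx = range(1, len(nodes) - 1)
gram = [[integrate(lambda x: hat(nodes, j, x) * hat(nodes, k, x), nodes)
         for k in idx] for j in idx]
stiff = [[integrate(lambda x: hat_prime(nodes, j, x) * hat_prime(nodes, k, x), nodes)
          for k in idx] for j in idx]
for row in stiff:
    print([round(entry, 6) for entry in row])   # tridiagonal pattern
```

Only the diagonal and its two neighbours are nonzero in either matrix, because tent functions attached to nodes more than one apart never overlap.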

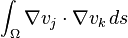

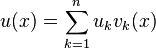

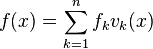

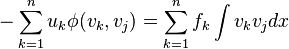

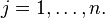

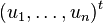

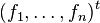

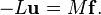

Matrix form of the problem

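If we write $u(x) = \sum_{k=1}^n u_k v_k(x)$ and $f(x) = \sum_{k=1}^n f_k v_k(x)$ then problem (3), taking $v(x) = v_j(x)$ for $j = 1, \dots, n$, becomes

$$-\sum_{k=1}^n u_k \phi(v_k, v_j) = \sum_{k=1}^n f_k \int v_k v_j\,dx \quad \text{for } j = 1, \dots, n. \qquad (4)$$

If we denote by $\mathbf{u}$ and $\mathbf{f}$ the column vectors $(u_1, \dots, u_n)^t$ and $(f_1, \dots, f_n)^t$, and if we let

$$L = (L_{ij})$$

and

$$M = (M_{ij})$$

be matrices whose entries are

$$L_{ij} = \phi(v_i, v_j)$$

and

$$M_{ij} = \int v_i v_j\,dx$$

then we may rephrase (4) as

$$-L\mathbf{u} = M\mathbf{f}. \qquad (5)$$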

It is not necessary to assume $f(x) = \sum_{k=1}^n f_k v_k(x)$. For a general function $f(x)$, problem (3) with $v(x) = v_j(x)$ for $j = 1, \dots, n$ becomes actually simpler, since no matrix $M$ is used,

$$-L\mathbf{u} = \mathbf{b}, \qquad (6)$$

where $\mathbf{b} = (b_1, \dots, b_n)^t$ and $b_j = \int f v_j\,dx$ for $j = 1, \dots, n$.

As we have discussed before, most of the entries of $L$ and $M$ are zero because the basis functions $v_k$ have small support. So we now have to solve a linear system in the unknown $\mathbf{u}$ where most of the entries of the matrix $L$, which we need to invert, are zero.

Such matrices are known as sparse matrices, and there are efficient solvers for such problems (much more efficient than actually inverting the matrix). In addition, $L$ is symmetric and positive definite, so a technique such as the conjugate gradient method is favored. For problems that are not too large, sparse LU decompositions and Cholesky decompositions still work well. For instance, MATLAB's backslash operator (which uses sparse LU, sparse Cholesky, and other factorization methods) can be sufficient for meshes with a hundred thousand vertices.

The matrix $L$ is usually referred to as the stiffness matrix, while the matrix $M$ is dubbed the mass matrix.
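To make the matrix form concrete, here is a minimal sketch that solves the one-dimensional problem u'' = f with u(0) = u(1) = 0 using tent functions, under the assumption that f is constant so the load integrals are exact. It takes the stiffness entries to be the integrals of products of basis-function derivatives and solves the system "minus stiffness matrix times u equals load vector". The Thomas algorithm (a direct tridiagonal solver) stands in for the sparse solvers mentioned above, and the function name `solve_p1` and the mesh are hypothetical.

```python
def solve_p1(nodes, f_const):
    """Solve u'' = f_const on (0, 1), u(0) = u(1) = 0, with linear elements.

    Builds the tridiagonal stiffness matrix and the load vector b, then
    solves (stiffness) u = -b for the interior nodal values.
    """
    n = len(nodes) - 2                                   # interior unknowns
    h = [nodes[k + 1] - nodes[k] for k in range(n + 1)]  # interval lengths
    diag = [1.0 / h[k] + 1.0 / h[k + 1] for k in range(n)]      # diagonal entries
    off = [-1.0 / h[k + 1] for k in range(n - 1)]               # off-diagonal entries
    b = [f_const * (h[k] + h[k + 1]) / 2.0 for k in range(n)]   # exact for constant f
    rhs = [-bk for bk in b]

    # Thomas algorithm: forward elimination, then back substitution.
    d, r = diag[:], rhs[:]
    for k in range(1, n):
        m = off[k - 1] / d[k - 1]
        d[k] -= m * off[k - 1]
        r[k] -= m * r[k - 1]
    u = [0.0] * n
    u[-1] = r[-1] / d[-1]
    for k in range(n - 2, -1, -1):
        u[k] = (r[k] - off[k] * u[k + 1]) / d[k]
    return u


nodes = [0.0, 0.1, 0.3, 0.6, 1.0]          # hypothetical non-uniform mesh
u = solve_p1(nodes, -2.0)                  # exact solution is x (1 - x)
exact = [x * (1.0 - x) for x in nodes[1:-1]]
print(u)
print(exact)
```

For this one-dimensional model problem the computed nodal values coincide with the exact solution up to rounding, a special property that does not carry over to general problems.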

General form of the finite element method

In general, the finite element method is characterized by the following process.

- One chooses a grid for $\Omega$. In the preceding treatment, the grid consisted of triangles, but one can also use squares or curvilinear polygons.

- Then, one chooses basis functions. In our discussion, we used piecewise linear basis functions, but it is also common to use piecewise polynomial basis functions.

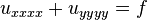

A separate consideration is the smoothness of the basis functions. For second order elliptic boundary value problems, piecewise polynomial basis functions that are merely continuous suffice (i.e., the derivatives are discontinuous). For higher order partial differential equations, one must use smoother basis functions. For instance, for a fourth order problem such as $u_{xxxx} + u_{yyyy} = f$, one may use piecewise quadratic basis functions that are $C^1$.

Another consideration is the relation of the finite-dimensional space $V$ to its infinite-dimensional counterpart, in the examples above $H_0^1$. A conforming element method is one in which the space $V$ is a subspace of the element space for the continuous problem. The example above is such a method. If this condition is not satisfied, we obtain a nonconforming element method, an example of which is the space of piecewise linear functions over the mesh which are continuous at each edge midpoint. Since these functions are in general discontinuous along the edges, this finite-dimensional space is not a subspace of the original $H_0^1$.

Typically, one has an algorithm for taking a given mesh and subdividing it. If the main method for increasing precision is to subdivide the mesh, one has an h-method (h is customarily the diameter of the largest element in the mesh). In this manner, if one shows that the error with a grid $h$ is bounded above by $Ch^p$, for some $C < \infty$ and $p > 0$, then one has an order p method. Under certain hypotheses (for instance, if the domain is convex), a piecewise polynomial of order $d$ method will have an error of order $p = d + 1$.
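The order of an h-method can be estimated empirically by halving h and comparing errors: if the error behaves like a constant times h to the power p, the base-2 logarithm of the ratio of successive errors approaches p. The sketch below uses the error of piecewise linear interpolation of sin(πx) as a stand-in for a finite element error; that substitution, the sampling density, and the function name are assumptions made for illustration.

```python
import math


def interpolation_error(n_intervals, samples_per_interval=20):
    """Max error of piecewise linear interpolation of sin(pi x) on a uniform mesh."""
    h = 1.0 / n_intervals
    worst = 0.0
    for k in range(n_intervals):
        a, b = k * h, (k + 1) * h
        fa, fb = math.sin(math.pi * a), math.sin(math.pi * b)
        for s in range(1, samples_per_interval):
            t = s / samples_per_interval
            x = a + t * h
            worst = max(worst, abs(math.sin(math.pi * x) - ((1 - t) * fa + t * fb)))
    return worst


errors = [interpolation_error(n) for n in (8, 16, 32, 64)]
rates = [math.log(e1 / e2, 2) for e1, e2 in zip(errors, errors[1:])]
print(rates)  # observed orders; expected to approach 2 for linear elements
```

An observed rate near 2 matches the rule of thumb that polynomials of degree 1 give second order accuracy on sufficiently smooth problems.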

If instead of making h smaller, one increases the degree of the polynomials used in the basis function, one has a p-method. If one combines these two refinement types, one obtains an hp-method (hp-FEM). In the hp-FEM, the polynomial degrees can vary from element to element. High order methods with large uniform p are called spectral finite element methods (SFEM). These are not to be confused with spectral methods.

For vector partial differential equations, the basis functions may take values in $\mathbb{R}^n$.

Various types of finite element methods

AEM

The applied element method (AEM) combines features of both FEM and the discrete element method (DEM).

Generalized finite element method

The generalized finite element method (GFEM) uses local spaces consisting of functions, not necessarily polynomials, that reflect the available information on the unknown solution and thus ensure good local approximation. Then a partition of unity is used to “bond” these spaces together to form the approximating subspace. The effectiveness of GFEM has been shown when applied to problems with domains having complicated boundaries, problems with micro-scales, and problems with boundary layers.[10]

Mixed finite element method

hp-FEM

The hp-FEM adaptively combines elements with variable size h and polynomial degree p in order to achieve exceptionally fast, exponential convergence rates.[11]

hpk-FEM

The hpk-FEM adaptively combines elements with variable size h, polynomial degree p of the local approximations, and global differentiability (k-1) of the local approximations in order to achieve the best convergence rates.

XFEM

The extended finite element method (XFEM) is a numerical technique based on the generalized finite element method (GFEM) and the partition of unity method (PUM). It extends the classical finite element method (FEM) approach by enriching the solution space for solutions to differential equations with discontinuous functions. Enriched finite element methods extend, or enrich, the approximation space so that it is able to naturally reproduce the challenging feature associated with the problem of interest: the discontinuity, singularity, boundary layer, etc. It was shown that for some problems, such an embedding of the problem's feature into the approximation space can significantly improve convergence rates and accuracy. Moreover, treating problems with discontinuities with extended finite element methods suppresses the need to mesh and re-mesh the discontinuity surfaces, thus alleviating the computational costs and projection errors associated with conventional finite element methods, which restrict discontinuities to mesh edges.

There exist several research codes implementing this technique to various degrees, including GetFEM++, xfem++, and openxfem++.

XFEM has also been implemented in codes such as Altair Radioss, ASTER, Morfeo, and Abaqus. It is increasingly being adopted by other commercial finite element software, with a few plugins and actual core implementations available (ANSYS, SAMCEF, OOFELIE, etc.).

S-FEM

Spectral element method

Meshfree methods

Discontinuous Galerkin methods

Finite element limit analysis

Stretched grid method

Comparison to the finite difference method

The finite difference method (FDM) is an alternative way of approximating solutions of PDEs. The differences between FEM and FDM are:

- The most attractive feature of the FEM is its ability to handle complicated geometries (and boundaries) with relative ease. While FDM in its basic form is restricted to handle rectangular shapes and simple alterations thereof, the handling of geometries in FEM is theoretically straightforward.

- The most attractive feature of finite differences is that it can be very easy to implement.

- There are several ways one could consider the FDM a special case of the FEM approach. E.g., first order FEM is identical to FDM for Poisson's equation, if the problem is discretized by a regular rectangular mesh with each rectangle divided into two triangles.

- There are reasons to consider the mathematical foundation of the finite element approximation more sound, for instance, because the quality of the approximation between grid points is poor in FDM.

- The quality of a FEM approximation is often higher than in the corresponding FDM approach, but this is extremely problem-dependent and several examples to the contrary can be provided.
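The remark above that first order FEM can coincide with FDM is stated for Poisson's equation on a regular triangulated rectangular mesh. A one-dimensional analogue, offered here only as an illustrative assumption, is easier to verify: on a uniform mesh, an interior row of the linear-element stiffness matrix divided by h (the lumped mass of a tent function) equals the standard central-difference stencil for −u''. The function names are hypothetical.

```python
def fem_stiffness_row(h):
    """Interior row of the linear-element stiffness matrix on a uniform mesh:
    couplings of a tent function with its left neighbour, itself, and its
    right neighbour."""
    return (-1.0 / h, 2.0 / h, -1.0 / h)


def fdm_stencil(h):
    """Central-difference weights approximating -u'' at a grid point."""
    return (-1.0 / h**2, 2.0 / h**2, -1.0 / h**2)


h = 0.25
scaled = tuple(w / h for w in fem_stiffness_row(h))   # divide by lumped mass h
print(scaled, fdm_stencil(h))
```

The two schemes then differ only in how the right-hand side is sampled: FEM integrates f against each tent function, whereas FDM evaluates f at the grid point.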

Generally, FEM is the method of choice in all types of analysis in structural mechanics (i.e. solving for deformation and stresses in solid bodies or dynamics of structures), while computational fluid dynamics (CFD) tends to use FDM or other methods such as the finite volume method (FVM). CFD problems usually require discretization into a large number of cells or grid points (millions and more), so the cost of the solution favors simpler, lower-order approximations within each cell. This is especially true for 'external flow' problems, such as airflow around a car or airplane, or weather simulation.

Application

A variety of specializations under the umbrella of mechanical engineering (such as the aeronautical, biomechanical, and automotive industries) commonly use integrated FEM in the design and development of their products. Several modern FEM packages include specific components such as thermal, electromagnetic, fluid, and structural working environments. In a structural simulation, FEM helps in producing stiffness and strength visualizations and in minimizing weight, materials, and costs.

FEM allows detailed visualization of where structures bend or twist, and indicates the distribution of stresses and displacements. FEM software provides a wide range of simulation options for controlling the complexity of both the modeling and the analysis of a system. Similarly, the required level of accuracy and the associated computational time can be managed together to address most engineering applications. FEM allows entire designs to be constructed, refined, and optimized before the design is manufactured.

This powerful design tool has significantly improved both the standard of engineering designs and the methodology of the design process in many industrial applications.[12] The introduction of FEM has substantially decreased the time to take products from concept to the production line.[12] It is primarily through improved initial prototype designs using FEM that testing and development have been accelerated.[13] In summary, benefits of FEM include increased accuracy, enhanced design and better insight into critical design parameters, virtual prototyping, fewer hardware prototypes, a faster and less expensive design cycle, increased productivity, and increased revenue.[12]

FEA has also been proposed for use in stochastic modelling, to solve probability models numerically.[14][15]

See also

- Applied element method

- Boundary element method

- Computer experiment

- Direct stiffness method

- Discontinuity layout optimization

- Discrete element method

- Finite difference method

- Finite element machine

- Finite element method in structural mechanics

- Finite volume method

- Finite volume method for unsteady flow

- Interval finite element

- Isogeometric analysis

- Lattice Boltzmann methods

- List of finite element software packages

- Movable cellular automaton

- Multidisciplinary design optimization

- Multiphysics

- Patch test

- Rayleigh–Ritz method

- Weakened weak form

References

1. Reddy, J.N. (2006). An Introduction to the Finite Element Method (Third ed.). McGraw-Hill. ISBN 9780071267618.

2. Hrennikoff, Alexander (1941). "Solution of problems of elasticity by the framework method". Journal of Applied Mechanics 8 (4): 169–175.

3. Courant, R. (1943). "Variational methods for the solution of problems of equilibrium and vibrations". Bulletin of the American Mathematical Society 49: 1–23. doi:10.1090/s0002-9904-1943-07818-4.

4. Hinton, Ernest; Irons, Bruce (July 1968). "Least squares smoothing of experimental data using finite elements". Strain 4: 24–27. doi:10.1111/j.1475-1305.1968.tb01368.x.

5. "SAP-IV Software and Manuals". NISEE e-Library, The Earthquake Engineering Online Archive.

6. Paulsen, Gard; Andersen, Håkon With; Collett, John Petter; Stensrud, Iver Tangen (2014). Building Trust, The history of DNV 1864-2014. Lysaker, Norway: Dinamo Forlag A/S. pp. 121, 436. ISBN 978-82-8071-256-1.

7. Strang, Gilbert; Fix, George (1973). An Analysis of The Finite Element Method. Prentice Hall. ISBN 0-13-032946-0.

8. Zienkiewicz, O.C.; Taylor, R.L.; Zhu, J.Z. (2005). The Finite Element Method: Its Basis and Fundamentals (Sixth ed.). Butterworth-Heinemann. ISBN 0750663200.

9. Bathe, K.J. (2006). Finite Element Procedures. Cambridge, MA: Klaus-Jürgen Bathe. ISBN 097900490X.

10. Babuška, Ivo; Banerjee, Uday; Osborn, John E. (June 2004). "Generalized Finite Element Methods: Main Ideas, Results, and Perspective". International Journal of Computational Methods 1 (1): 67–103. doi:10.1142/S0219876204000083.

11. Solin, P.; Segeth, K.; Dolezel, I. (2003). Higher-Order Finite Element Methods. Chapman & Hall/CRC Press.

12. Hastings, J. K.; Juds, M. A.; Brauer, J. R. (April 1985). "Accuracy and Economy of Finite Element Magnetic Analysis". 33rd Annual National Relay Conference.

13. McLaren-Mercedes (2006). "McLaren Mercedes: Feature - Stress to impress". Archived from the original on 2006-10-30. Retrieved 2006-10-03.

14. Peng Long; Wang Jinliang; Zhu Qiding (19 May 1995). "Methods with high accuracy for finite element probability computing". Journal of Computational and Applied Mathematics 59 (2): 181–189. doi:10.1016/0377-0427(94)00027-X.

15. Haldar, Achintya; Mahadevan, Sankaran (2000). Reliability Assessment Using Stochastic Finite Element Analysis. John Wiley & Sons. ISBN 978-0471369615.

Further reading

- G. Allaire and A. Craig: Numerical Analysis and Optimization: An Introduction to Mathematical Modelling and Numerical Simulation.

- K. J. Bathe: Numerical Methods in Finite Element Analysis, Prentice-Hall (1976).

- J. Chaskalovic: Finite Element Methods for Engineering Sciences, Springer Verlag (2008).

- O. C. Zienkiewicz, R. L. Taylor, J. Z. Zhu: The Finite Element Method: Its Basis and Fundamentals, Butterworth-Heinemann (2005).

External links

- IFER Internet Finite Element Resources - Describes and provides access to finite element analysis software via the Internet.

- NAFEMS—The International Association for the Engineering Analysis Community

- Mathematics of the Finite Element Method

- Finite Element Methods for Partial Differential Equations - Lecture notes by Endre Süli
