Familywise error rate

In statistics, the familywise error rate (FWER) is the probability of making one or more false discoveries, or type I errors, among all the hypotheses when performing multiple hypothesis tests.

Definitions

Classification of m hypothesis tests

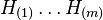

Suppose we have $m$ null hypotheses, denoted by $H_1, H_2, \ldots, H_m$.

Using a statistical test, we reject the null hypothesis if the test is declared significant. We do not reject the null hypothesis if the test is non-significant.

Summing the test results over the $H_i$ gives the following table and related random variables:

| | Null hypothesis is true | Alternative hypothesis is true | Total |
|---|---|---|---|
| Declared significant | $V$ | $S$ | $R$ |
| Declared non-significant | $U$ | $T$ | $m - R$ |
| Total | $m_0$ | $m - m_0$ | $m$ |

- $m_0$ is the number of true null hypotheses, an unknown parameter

- $m - m_0$ is the number of true alternative hypotheses

- $V$ is the number of false positives (Type I error)

- $S$ is the number of true positives

- $T$ is the number of false negatives (Type II error)

- $U$ is the number of true negatives

- $R = V + S$ is the number of rejected null hypotheses

- $R$ is an observable random variable, while $S$, $T$, $U$, and $V$ are unobservable random variables.

The FWER

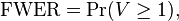

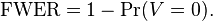

The FWER is the probability of making at least one type I error in the family,

$$\mathrm{FWER} = \Pr(V \ge 1),$$

or equivalently,

$$\mathrm{FWER} = 1 - \Pr(V = 0).$$

Thus, by assuring $\mathrm{FWER} \le \alpha$, the probability of making even one type I error in the family is controlled at level $\alpha$.
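To see why control is needed at all, the sketch below computes the FWER of $m$ uncorrected tests, each run at level $\alpha$. It assumes that all $m$ null hypotheses are true ($m_0 = m$) and that the tests are independent, so each p-value is Uniform(0, 1) and $\Pr(V \ge 1) = 1 - (1 - \alpha)^m$. The function names are illustrative. The exact value is compared with a small Monte Carlo estimate of $\Pr(V \ge 1)$.

```python
import random


def fwer_independent(alpha: float, m: int) -> float:
    """Exact P(V >= 1) for m independent tests at level alpha when all nulls are true."""
    return 1.0 - (1.0 - alpha) ** m


def fwer_monte_carlo(alpha: float, m: int, reps: int = 20_000, seed: int = 0) -> float:
    """Estimate P(V >= 1) by simulation: under a true null a continuous p-value is Uniform(0, 1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        # V = number of true null hypotheses rejected at the unadjusted level alpha
        v = sum(rng.random() <= alpha for _ in range(m))
        hits += v >= 1
    return hits / reps


for m in (1, 5, 10, 20):
    print(m, round(fwer_independent(0.05, m), 4), fwer_monte_carlo(0.05, m))
```

With $\alpha = 0.05$ and ten independent tests, the chance of at least one false discovery is already about 0.40, which is what the procedures below are designed to prevent.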

A procedure controls the FWER in the weak sense if the FWER control at level $\alpha$ is guaranteed only when all null hypotheses are true (i.e. when $m_0 = m$, so the global null hypothesis is true).

A procedure controls the FWER in the strong sense if the FWER control at level $\alpha$ is guaranteed for any configuration of true and non-true null hypotheses (including the global null hypothesis).

Alternative approaches

FWER control exerts more stringent control over false discoveries than false discovery rate (FDR) procedures do. FWER control limits the probability of at least one false discovery, whereas FDR control limits (in a loose sense) the expected proportion of false discoveries. Thus, FDR procedures have greater power at the cost of increased rates of type I errors, i.e., rejecting null hypotheses of no effect when they should not be rejected.[1]

On the other hand, FWER control is less stringent than per-family error rate control, which limits the expected number of errors per family. Because FWER control is concerned only with whether at least one false discovery occurs, unlike per-family error rate control it does not treat multiple simultaneous false discoveries as any worse than one false discovery. The Bonferroni correction is often considered to control only the FWER, but in fact it also controls the per-family error rate.[2]
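The relation between the two error rates can be checked numerically. The per-family error rate is $E[V]$, and since $\Pr(V \ge 1) \le E[V]$ always holds, bounding $E[V]$ by $\alpha$ also bounds the FWER. For Bonferroni, linearity of expectation gives $E[V] \le m_0 \cdot \alpha/m \le \alpha$. The sketch below assumes all $m$ nulls are true and the tests are independent, with illustrative function and parameter names, and estimates both quantities for the Bonferroni threshold $\alpha/m$.

```python
import random


def bonferroni_error_rates(alpha=0.05, m=10, reps=20_000, seed=1):
    """Return (FWER, per-family error rate) estimates for Bonferroni when all m nulls are true."""
    rng = random.Random(seed)
    threshold = alpha / m
    any_error = 0     # counts replications with V >= 1
    total_errors = 0  # accumulates V to estimate E[V]
    for _ in range(reps):
        v = sum(rng.random() <= threshold for _ in range(m))  # p-values ~ Uniform(0, 1)
        any_error += v >= 1
        total_errors += v
    return any_error / reps, total_errors / reps


fwer, pfer = bonferroni_error_rates()
print(f"FWER ~ {fwer:.4f}, per-family error rate ~ {pfer:.4f}")
```

Both estimates come out close to 0.05, with the FWER estimate never above the per-family estimate, because in every replication the indicator of $V \ge 1$ is at most $V$.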

The concept of a family

Within the statistical framework, there are several definitions for the term "family":

- First of all, a distinction must be made between exploratory data analysis and confirmatory data analysis: in exploratory analysis, the family constitutes all inferences made and those that potentially could be made, whereas in confirmatory analysis, the family must include only the inferences of interest specified prior to the study.

- Hochberg & Tamhane (1987) define "family" as "any collection of inferences for which it is meaningful to take into account some combined measure of error".[3]

- According to Cox (1982), a set of inferences should be regarded as a family:

  - To take into account the selection effect due to data dredging

  - To ensure simultaneous correctness of a set of inferences so as to guarantee a correct overall decision

To summarize, a family could best be defined by the potential selective inference that is being faced: A family is the smallest set of items of inference in an analysis, interchangeable about their meaning for the goal of research, from which selection of results for action, presentation or highlighting could be made (Benjamini).

History

Tukey coined the terms "experimentwise error rate" and "per-experiment error rate" for the error rate that the researcher should use as a control level in a multiple hypothesis experiment.

Controlling procedures

The following is a concise review of some of the "old and trusted" solutions that ensure strong level $\alpha$ FWER control, followed by some newer solutions.

The Bonferroni procedure

- Denote by

the p-value for testing

the p-value for testing

- reject

if

if

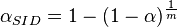
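A minimal sketch of the rule, assuming the p-values arrive as a plain Python list; the function name `bonferroni` is illustrative. Besides the reject decisions it returns the commonly reported adjusted p-values $\min(1, m p_i)$, so that $H_i$ is rejected exactly when its adjusted p-value is at most $\alpha$.

```python
def bonferroni(pvals, alpha=0.05):
    """Reject H_i when p_i <= alpha / m; also return adjusted p-values min(1, m * p_i)."""
    m = len(pvals)
    reject = [p <= alpha / m for p in pvals]
    adjusted = [min(1.0, m * p) for p in pvals]
    return reject, adjusted


reject, adjusted = bonferroni([0.001, 0.008, 0.02, 0.3], alpha=0.05)
print(reject)    # per-test threshold is 0.05 / 4 = 0.0125
print(adjusted)
```

Only the first two hypotheses are rejected here, since 0.02 exceeds the per-test threshold of 0.0125.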

The Šidák procedure

- Testing each hypothesis at level $\alpha_{\mathrm{SID}} = 1 - (1 - \alpha)^{1/m}$ is Šidák's multiple testing procedure.

- This procedure is more powerful than Bonferroni, but the gain is small.

- This procedure can fail to control the FWER when the tests are negatively dependent.
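The size of the gain can be seen by comparing per-test levels. The sketch below, with illustrative function names, computes $\alpha_{\mathrm{SID}}$ next to the Bonferroni level $\alpha/m$. It also checks that, if the $m$ tests are independent and all nulls are true, testing at $\alpha_{\mathrm{SID}}$ gives an FWER of exactly $1 - (1 - \alpha_{\mathrm{SID}})^m = \alpha$. That independence assumption is what the exactness relies on.

```python
def sidak_level(alpha, m):
    """Per-test level 1 - (1 - alpha)**(1/m) used by the Sidak procedure."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


def fwer_if_independent(per_test_level, m):
    """P(at least one rejection) when all m nulls are true and the tests are independent."""
    return 1.0 - (1.0 - per_test_level) ** m


alpha = 0.05
for m in (2, 10, 100):
    s, b = sidak_level(alpha, m), alpha / m
    print(m, f"Sidak {s:.6f}", f"Bonferroni {b:.6f}", f"FWER(Sidak) {fwer_if_independent(s, m):.4f}")
```

For $m = 10$ the Šidák level is about 0.005116 against 0.005 for Bonferroni, which illustrates why the gain in power is small.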

Tukey's procedure

- Tukey's procedure is only applicable for pairwise comparisons.

- It assumes independence of the observations being tested, as well as equal variance across the groups (homoscedasticity).

- The procedure calculates for each pair the studentized range statistic $\frac{Y_A - Y_B}{SE}$, where $Y_A$ is the larger of the two means being compared, $Y_B$ is the smaller, and $SE$ is the standard error of the data in question.

- Tukey's test is essentially a Student's t-test, except that it corrects for the familywise error rate.
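The statistic itself is straightforward to compute. The sketch below assumes equal group sizes $n$ and takes $SE = \sqrt{MS_{\text{within}}/n}$, where $MS_{\text{within}}$ is the pooled within-group variance from a one-way ANOVA, as in the usual Tukey HSD setup. The function name is illustrative. Each $q$ would then be compared with an upper quantile of the studentized range distribution for $k$ groups and $k(n-1)$ degrees of freedom. Recent SciPy versions expose that distribution as `scipy.stats.studentized_range` and the full test as `scipy.stats.tukey_hsd`, but the sketch stays in the standard library and stops at the statistic.

```python
import math
from itertools import combinations


def tukey_q_statistics(groups):
    """Studentized range statistic (Y_A - Y_B) / SE for every pair of groups.

    Assumes equal group sizes; SE = sqrt(MS_within / n) with MS_within the
    pooled within-group variance of a one-way ANOVA.
    """
    sizes = {len(values) for values in groups.values()}
    if len(sizes) != 1:
        raise ValueError("this sketch assumes equal group sizes")
    n = sizes.pop()
    k = len(groups)
    means = {name: sum(values) / n for name, values in groups.items()}
    ss_within = sum(
        (x - means[name]) ** 2 for name, values in groups.items() for x in values
    )
    ms_within = ss_within / (k * (n - 1))  # within-group degrees of freedom: k(n - 1)
    se = math.sqrt(ms_within / n)
    q = {}
    for a, b in combinations(groups, 2):
        y_a, y_b = max(means[a], means[b]), min(means[a], means[b])  # larger, smaller mean
        q[(a, b)] = (y_a - y_b) / se
    return q


groups = {"A": [1.0, 2.0, 3.0], "B": [4.0, 5.0, 6.0], "C": [7.0, 8.0, 9.0]}
for pair, stat in tukey_q_statistics(groups).items():
    print(pair, round(stat, 3))
```

In this toy example every group has variance 1, so $SE = \sqrt{1/3}$ and adjacent groups, whose means differ by 3, get $q = 3\sqrt{3} \approx 5.196$.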

Some newer solutions for strong level $\alpha$ FWER control:

Holm's step-down procedure (1979)

- Start by ordering the p-values (from lowest to highest)

and let the associated hypotheses be

and let the associated hypotheses be

- Let

be the smallest

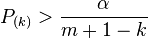

be the smallest  such that

such that

- Reject the null hypotheses

. If

. If  then none of the hypotheses are rejected.

then none of the hypotheses are rejected.

This procedure is uniformly more powerful than the Bonferroni procedure.[4] It controls the familywise error rate for all $m$ hypotheses at level $\alpha$ in the strong sense because it is a closed testing procedure in which each intersection hypothesis is tested using the simple Bonferroni test.
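A minimal Python sketch of the step-down rule, assuming the p-values are given as a plain list. The function name `holm` is illustrative. It walks through the sorted p-values, stops at the first $k$ with $P_{(k)} > \alpha/(m + 1 - k)$, and returns a reject flag for each hypothesis in the original input order.

```python
def holm(pvals, alpha=0.05):
    """Holm's step-down procedure; returns reject flags in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices of P_(1), ..., P_(m)
    reject = [False] * m
    for rank, i in enumerate(order, start=1):
        # First failure of P_(k) <= alpha / (m + 1 - k) stops the procedure.
        if pvals[i] > alpha / (m + 1 - rank):
            break
        reject[i] = True
    return reject


print(holm([0.01, 0.015, 0.03, 0.005]))
print(holm([0.01, 0.04, 0.03, 0.005]))
```

In the first call Holm rejects all four hypotheses, while Bonferroni at $0.05/4 = 0.0125$ would reject only those with p-values 0.005 and 0.01. In the second call the procedure stops at the third-smallest p-value, because 0.03 exceeds 0.05/2.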

Hochberg's step-up procedure (1988)

Hochberg's step-up procedure (1988) is performed using the following steps:[5]

- Start by ordering the p-values (from lowest to highest)

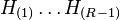

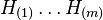

and let the associated hypotheses be

and let the associated hypotheses be

- For a given

, let

, let  be the largest

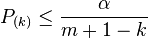

be the largest  such that

such that

- Reject the null hypotheses

Hochberg's procedure is more powerful than Holm's. However, while Holm's is a closed testing procedure (and thus, like Bonferroni, places no restriction on the joint distribution of the test statistics), Hochberg's is based on the Simes test, so its FWER guarantee holds only under non-negative dependence.
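A minimal sketch of the step-up rule, under the same assumptions as a plain-list input and with the illustrative function name `hochberg`. It scans from the largest p-value downwards and stops at the first $k$ satisfying $P_{(k)} \le \alpha/(m - k + 1)$, then rejects $H_{(1)}, \ldots, H_{(R)}$. For the p-values 0.03 and 0.04 at $\alpha = 0.05$, Holm's procedure rejects nothing because $0.03 > 0.025$. Hochberg's procedure rejects both, because $0.04 \le 0.05/1$.

```python
def hochberg(pvals, alpha=0.05):
    """Hochberg's step-up procedure; returns reject flags in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices of P_(1), ..., P_(m)
    r = 0  # R: number of hypotheses to reject
    # Largest k with P_(k) <= alpha / (m - k + 1): scan from k = m down to k = 1.
    for k in range(m, 0, -1):
        if pvals[order[k - 1]] <= alpha / (m - k + 1):
            r = k
            break
    reject = [False] * m
    for i in order[:r]:
        reject[i] = True
    return reject


print(hochberg([0.03, 0.04]))
print(hochberg([0.04, 0.2]))
```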

Dunnett's correction

Charles Dunnett (1955, 1966) described an alternative alpha error adjustment when k groups are compared to the same control group. Now known as Dunnett's test, this method is less conservative than the Bonferroni adjustment.

Scheffé's method

Resampling procedures

The procedures of Bonferroni and Holm control the FWER under any dependence structure of the p-values (or equivalently the individual test statistics). Essentially, this is achieved by accommodating a "worst-case" dependence structure (which is close to independence for most practical purposes). But such an approach is conservative if dependence is actually positive. To give an extreme example, under perfect positive dependence there is effectively only one test, and thus the FWER is uninflated.

Accounting for the dependence structure of the p-values (or of the individual test statistics) produces more powerful procedures. This can be achieved by applying resampling methods, such as bootstrapping and permutation methods. The procedure of Westfall and Young (1993) requires a certain condition that does not always hold in practice (namely, subset pivotality).[6] The procedures of Romano and Wolf (2005a,b) dispense with this condition and are thus more generally valid.[7][8]
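To illustrate how resampling captures dependence, here is a minimal sketch of a single-step maxT permutation adjustment. This is one of the resampling approaches associated with Westfall and Young (1993), not the Romano and Wolf stepdown procedure. The sketch makes several assumptions:

- two groups coded 0/1, whose observations are exchangeable under the complete null;
- the absolute difference in group means as the test statistic for each of the variables;
- illustrative function names and simulated, hypothetical data.

Each adjusted p-value is the share of label permutations, counting the observed labelling, whose largest statistic across all variables is at least the observed statistic for that variable. Taking the maximum jointly over variables carries their correlation into the adjustment. As noted above, the strong FWER guarantee of this approach relies on subset pivotality.

```python
import random


def abs_mean_diffs(data, labels):
    """|mean of group 1 - mean of group 0| for each column (variable) of data."""
    g1 = [row for row, lab in zip(data, labels) if lab == 1]
    g0 = [row for row, lab in zip(data, labels) if lab == 0]
    m = len(data[0])
    return [
        abs(sum(r[j] for r in g1) / len(g1) - sum(r[j] for r in g0) / len(g0))
        for j in range(m)
    ]


def maxt_adjusted_pvalues(data, labels, n_perm=999, seed=0):
    """Single-step maxT adjusted p-values from random permutations of the group labels."""
    rng = random.Random(seed)
    observed = abs_mean_diffs(data, labels)
    exceed = [0] * len(observed)
    perm = list(labels)
    for _ in range(n_perm):
        rng.shuffle(perm)
        # The maximum over all variables reflects their joint dependence structure.
        max_stat = max(abs_mean_diffs(data, perm))
        for j, t in enumerate(observed):
            if max_stat >= t:
                exceed[j] += 1
    # The observed labelling counts as one of the permutations.
    return [(1 + c) / (n_perm + 1) for c in exceed]


# Hypothetical data: 10 subjects per group, 5 variables, a real shift only in variable 0.
rng = random.Random(42)
labels = [1] * 10 + [0] * 10
data = [
    [rng.gauss(5.0 if (lab == 1 and j == 0) else 0.0, 1.0) for j in range(5)]
    for lab in labels
]
print([round(p, 3) for p in maxt_adjusted_pvalues(data, labels)])
```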

See also

References

- Shaffer, J. P. (1995). "Multiple hypothesis testing". Annual Review of Psychology 46: 561–584. doi:10.1146/annurev.ps.46.020195.003021.

- Frane, Andrew (2015). "Are per-family Type I error rates relevant in social and behavioral science?". Journal of Modern Applied Statistical Methods 14 (1): 12–23.

- Hochberg, Y.; Tamhane, A. C. (1987). Multiple Comparison Procedures. New York: Wiley. ISBN 0-471-82222-1.

- Aickin, M.; Gensler, H. (1996). "Adjusting for multiple testing when reporting research results: the Bonferroni vs Holm methods". American Journal of Public Health 86 (5): 726–728. doi:10.2105/ajph.86.5.726. PMC 1380484. PMID 8629727.

- Hochberg, Yosef (1988). "A Sharper Bonferroni Procedure for Multiple Tests of Significance". Biometrika 75 (4): 800–802. doi:10.1093/biomet/75.4.800.

- Westfall, P. H.; Young, S. S. (1993). Resampling-Based Multiple Testing: Examples and Methods for p-Value Adjustment. New York: John Wiley. ISBN 0-471-55761-7.

- Romano, J. P.; Wolf, M. (2005a). "Exact and approximate stepdown methods for multiple hypothesis testing". Journal of the American Statistical Association 100: 94–108. doi:10.1198/016214504000000539.

- Romano, J. P.; Wolf, M. (2005b). "Stepwise multiple testing as formalized data snooping". Econometrica 73: 1237–1282. doi:10.1111/j.1468-0262.2005.00615.x.

External links

- Large-scale Simultaneous Inference – Syllabus, notes, and homework from Efron's course at Stanford. Includes PDFs for each chapter of his book.