Ergodicity

In mathematics, the term ergodic is used to describe a dynamical system which, broadly speaking, has the same behavior averaged over time as averaged over the space of all the system's states (phase space). In physics the term is used to imply that a system satisfies the ergodic hypothesis of thermodynamics.

In statistics, the term describes a random process for which the time average of one sequence of events is the same as the ensemble average. In other words, for a Markov chain, as one increases the number of steps, there exists a positive probability measure at step n that is independent of the probability distribution at initial step 0 (Feller, 1971, p. 271).[1]
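The Markov chain statement can be illustrated numerically. The following is a minimal sketch, assuming a hypothetical two-state transition matrix `P` that does not come from the cited source; the helper names `step` and `distribution_at` are also illustrative. It pushes two different initial distributions forward and compares the results after many steps.

```python
def step(dist, P):
    """Advance a row distribution one step: new[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_at(dist, P, n_steps):
    """Return the distribution after n_steps transitions, starting from dist."""
    for _ in range(n_steps):
        dist = step(dist, P)
    return dist


# Hypothetical 2-state chain (an assumption made for illustration only).
P = [[0.9, 0.1],
     [0.5, 0.5]]

# Two very different initial distributions at step 0.
from_state_0 = distribution_at([1.0, 0.0], P, 50)
from_state_1 = distribution_at([0.0, 1.0], P, 50)

# Both are now close to the stationary distribution (5/6, 1/6).
print(from_state_0, from_state_1)
```

For this particular matrix the stationary distribution can be checked by hand: (5/6, 1/6) multiplied by `P` gives (5/6, 1/6) again, and both starting points approach it.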

Etymology

The term "ergodic" was derived from the Greek words έργον (ergon: "work") and οδός (odos: "path" or "way"). It was chosen by Boltzmann while he was working on a problem in statistical mechanics.[2]

Formal definition

Let  be a probability space, and

be a probability space, and  be a measure-preserving transformation. We say that T is ergodic with respect to

be a measure-preserving transformation. We say that T is ergodic with respect to  (or alternatively that

(or alternatively that  is ergodic with respect to T) if one of the following equivalent statements is true:[3]

is ergodic with respect to T) if one of the following equivalent statements is true:[3]

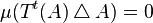

- for every

with

with  either

either  or

or  .

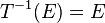

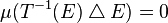

. - for every

with

with  we have

we have  or

or  (where

(where  denotes the symmetric difference).

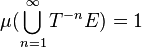

denotes the symmetric difference). - for every

with positive measure we have

with positive measure we have  .

. - for every two sets E and H of positive measure, there exists an n > 0 such that

.

. - Every measurable function

with

with  is almost surely constant.

is almost surely constant.
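A numerical illustration may help connect the definition to the informal description of time averages matching space averages. The sketch below uses the rotation of the circle T(x) = (x + α) mod 1 with irrational α, a standard example of a transformation that is ergodic with respect to Lebesgue measure on [0, 1). The example is not taken from the text above, and the names `time_average`, `f`, and `alpha` are illustrative assumptions. By Birkhoff's ergodic theorem, the time average of an integrable function along almost every orbit should approach its space average.

```python
import math


def time_average(f, x0, alpha, n_steps):
    """Average f along the orbit x0, T(x0), T^2(x0), ... of T(x) = (x + alpha) mod 1."""
    total = 0.0
    x = x0
    for _ in range(n_steps):
        total += f(x)
        x = (x + alpha) % 1.0
    return total / n_steps


def f(x):
    # The space average of cos^2(2*pi*x) over [0, 1) is exactly 1/2.
    return math.cos(2 * math.pi * x) ** 2


# Irrational rotation angle; a float is only an approximation of sqrt(2) - 1.
alpha = math.sqrt(2) - 1

avg_a = time_average(f, 0.0, alpha, 100_000)
avg_b = time_average(f, 0.3, alpha, 100_000)
print(avg_a, avg_b)  # both close to 0.5, whatever the starting point
```

If `alpha` is replaced by a rational number such as 0.5, the orbit visits only finitely many points and the time average generally depends on the starting point, which is consistent with rational rotations not being ergodic.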

Measurable flows

These definitions have natural analogues for the case of measurable flows and, more generally, measure-preserving semigroup actions. Let $\{T^t\}$ be a measurable flow on $(X, \Sigma, \mu)$. An element $A$ of $\Sigma$ is invariant mod 0 under $\{T^t\}$ if

$\mu(T^t(A) \,\Delta\, A) = 0$

for each $t \in \mathbb{R}$. Measurable sets invariant mod 0 under a flow or a semigroup action form the invariant subalgebra of $\Sigma$, and the corresponding measure-preserving dynamical system is ergodic if the invariant subalgebra is the trivial σ-algebra consisting of the sets of measure 0 and their complements in $X$.

Markov chains

In a Markov chain, a state i is said to be ergodic if it is aperiodic and positive recurrent. A state is recurrent if there is a nonzero probability of exiting the state and the probability of an eventual return to it is 1 (if the former condition is not true, the state is "absorbing"); it is positive recurrent if, in addition, the expected return time is finite. If all states in a Markov chain are ergodic, then the chain is said to be ergodic. A sufficient condition for a finite-state Markov chain to be ergodic is that there is a strictly positive probability of passing from any state to any other state in one step (Markov's theorem).
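For a finite chain, ergodicity in this sense can be tested mechanically. The sketch below relies on a standard fact that is not stated in the text above: a finite chain is irreducible and aperiodic exactly when some power of its transition matrix has all entries strictly positive, and by Wielandt's bound it is enough to check powers up to (n − 1)² + 1 for n states. The function names and the three example matrices are hypothetical.

```python
def mat_mul_bool(A, B):
    """Boolean matrix product: entry (i, j) is True if some k has A[i][k] and B[k][j]."""
    n = len(A)
    return [[any(A[i][k] and B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_ergodic_finite_chain(P):
    """Return True if some power of the finite transition matrix P is strictly positive."""
    n = len(P)
    support = [[p > 0 for p in row] for row in P]  # which one-step moves are possible
    power = support                                # support of P^1
    # Wielandt's bound: checking P^1 .. P^((n-1)^2 + 1) is sufficient.
    for _ in range((n - 1) ** 2 + 1):
        if all(all(row) for row in power):
            return True
        power = mat_mul_bool(power, support)
    return False


# Hypothetical example chains (assumptions for illustration).
one_step_positive = [[0.9, 0.1], [0.5, 0.5]]   # every one-step probability is positive
periodic = [[0.0, 1.0], [1.0, 0.0]]            # alternates between states, period 2
lazy_cycle = [[0.5, 0.5, 0.0],
              [0.0, 0.5, 0.5],
              [0.5, 0.0, 0.5]]                 # not positive in one step, but in two

results = [is_ergodic_finite_chain(M)
           for M in (one_step_positive, periodic, lazy_cycle)]
print(results)  # [True, False, True]
```

The third matrix shows why one-step positivity is only a sufficient condition: some one-step probabilities are zero, yet every state can reach every other state in exactly two steps.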

Examples in electronics

Ergodicity means that the ensemble average equals the time average. Each resistor has thermal noise associated with it, which depends on the temperature. Take N resistors (N should be very large) and record the voltage across each of them for a long period. For each resistor you will have a waveform. Calculate the average value of that waveform; this gives you the time average. Because there are N resistors, you have N waveforms, and together these N plots are known as an ensemble. Now take a particular instant of time in all those plots and find the average value of the voltage across the ensemble. That gives you the ensemble average at that instant. If the ensemble average and the time average are the same, then the process is ergodic.
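The resistor experiment can be mimicked in a small simulation. This is only a sketch under a strong simplifying assumption: each thermal-noise waveform is modelled as independent zero-mean Gaussian samples with the same hypothetical RMS value `SIGMA`, which is not a physical model of any particular resistor. The constants and variable names are illustrative.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

N_RESISTORS = 500   # size of the ensemble
N_SAMPLES = 500     # number of time samples per waveform
SIGMA = 1.0         # hypothetical RMS noise voltage, arbitrary units

# One simulated noise waveform per resistor.
waveforms = [[random.gauss(0.0, SIGMA) for _ in range(N_SAMPLES)]
             for _ in range(N_RESISTORS)]

# Time average: a single resistor, averaged over all sample times.
time_average = sum(waveforms[0]) / N_SAMPLES

# Ensemble average: a single instant, averaged over all resistors.
instant = 100
ensemble_average = sum(w[instant] for w in waveforms) / N_RESISTORS

print(time_average, ensemble_average)  # both near 0 for this model
```

With finitely many resistors and samples the two averages agree only approximately; under this model the gap shrinks as N and the recording length grow.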

Ergodic decomposition

Conceptually, ergodicity of a dynamical system is a certain irreducibility property, akin to the notions of irreducibility in the theory of Markov chains, irreducible representation in algebra and prime number in arithmetic. A general measure-preserving transformation or flow on a Lebesgue space admits a canonical decomposition into its ergodic components, each of which is ergodic.
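The idea of splitting a system into ergodic components can be seen in a finite-state analogue. The sketch below assumes a hypothetical four-state Markov chain whose states fall into two classes that never communicate. It is only an illustration of the Markov chain analogy mentioned above, not of the general decomposition theorem on Lebesgue spaces. Each class carries its own stationary distribution, and a mixture of the two is stationary but not ergodic.

```python
def step(dist, P):
    """Advance a row distribution one step: new[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def limit_distribution(dist, P, n_steps=200):
    """Approximate the long-run distribution by iterating the chain."""
    for _ in range(n_steps):
        dist = step(dist, P)
    return dist


# Hypothetical chain: states {0, 1} and {2, 3} never communicate.
P = [[0.9, 0.1, 0.0, 0.0],
     [0.5, 0.5, 0.0, 0.0],
     [0.0, 0.0, 0.2, 0.8],
     [0.0, 0.0, 0.6, 0.4]]

component_a = limit_distribution([1.0, 0.0, 0.0, 0.0], P)  # supported on {0, 1}
component_b = limit_distribution([0.0, 0.0, 1.0, 0.0], P)  # supported on {2, 3}

# A mixture of the two components is also stationary, but it is not ergodic:
# the invariant set {0, 1} has measure 0.3, which is neither 0 nor 1.
mixture = [0.3 * a + 0.7 * b for a, b in zip(component_a, component_b)]

print(component_a, component_b, mixture)
```

Here the long-run behaviour depends on the class in which the chain starts, and the two limiting distributions play the role of the ergodic components.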

References

- Walters, Peter (1982), An Introduction to Ergodic Theory, Springer, ISBN 0-387-95152-0

- Brin, Michael; Stuck, Garrett (2002), Introduction to Dynamical Systems, Cambridge University Press, ISBN 0-521-80841-3

- Birkhoff, G. D. (1931). "Proof of the ergodic theorem". Proceedings of the National Academy of Sciences of the United States of America 17 (12): 656. doi:10.1073/pnas.17.2.656.

- Alaoglu, L.; Birkhoff, G. (1940). "General ergodic theorems". The Annals of Mathematics 41 (2): 293–309.

External links

- Outline of Ergodic Theory, by Steven Arthur Kalikow

- A Simple Introduction to Ergodic Theory by Karma Dajani and Sjoerd Dirksin