Diagnostic odds ratio

In medical testing with binary classification, the diagnostic odds ratio is a measure of the effectiveness of a diagnostic test.[1] It is defined as the ratio of the odds of the test being positive if the subject has a disease relative to the odds of the test being positive if the subject does not have the disease.

The rationale for the diagnostic odds ratio is that it is a single indicator of test performance (like accuracy and Youden's J statistic) that is independent of prevalence (unlike accuracy) and is presented as an odds ratio, which is familiar to medical practitioners.

Definition

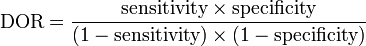

The diagnostic odds ratio is defined mathematically as:

$$\text{DOR} = \frac{TP/FN}{FP/TN}$$

where $TP$, $FN$, $FP$ and $TN$ are the number of true positives, false negatives, false positives and true negatives respectively.[1]
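The definition translates directly into a few lines of Python. The following is a minimal sketch; the function name and the counts in the usage line are illustrative and do not come from the cited sources.

```python
def diagnostic_odds_ratio(tp: int, fn: int, fp: int, tn: int) -> float:
    """Return (TP/FN) / (FP/TN), which simplifies to (TP * TN) / (FN * FP)."""
    if fn == 0 or fp == 0:
        # The ratio has a zero denominator in this case.
        raise ZeroDivisionError("DOR is undefined when FN or FP is zero")
    return (tp * tn) / (fn * fp)


# Hypothetical counts, chosen only for illustration.
print(diagnostic_odds_ratio(tp=40, fn=10, fp=5, tn=45))  # 36.0
```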

Confidence interval

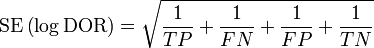

As an odds ratio, the logarithm of the diagnostic odds ratio is approximately normally distributed. The standard error of the log diagnostic odds ratio is approximately:

$$\mathrm{SE}(\log \text{DOR}) = \sqrt{\frac{1}{TP} + \frac{1}{FN} + \frac{1}{FP} + \frac{1}{TN}}$$

From this an approximate 95% confidence interval can be calculated for the log diagnostic odds ratio:

$$\log \text{DOR} \pm 1.96 \times \mathrm{SE}(\log \text{DOR})$$

Exponentiation of the approximate confidence interval for the log diagnostic odds ratio gives the approximate confidence interval for the diagnostic odds ratio.[1]
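The three steps (standard error, interval on the log scale, exponentiation) can be sketched as follows. The function name and the counts are illustrative assumptions, and the sketch assumes all four cells are non-zero.

```python
import math


def dor_confidence_interval(tp, fn, fp, tn, z=1.96):
    """Approximate confidence interval for the DOR (z=1.96 gives about 95%)."""
    log_dor = math.log((tp * tn) / (fn * fp))
    # Standard error of log(DOR): square root of the summed reciprocal counts.
    se = math.sqrt(1 / tp + 1 / fn + 1 / fp + 1 / tn)
    # Build the interval on the log scale, then exponentiate both ends.
    return math.exp(log_dor - z * se), math.exp(log_dor + z * se)


# Hypothetical counts, chosen only for illustration.
lower, upper = dor_confidence_interval(tp=40, fn=10, fp=5, tn=45)
print(f"DOR 95% CI: [{lower:.1f}, {upper:.1f}]")
```

Because the interval is symmetric on the log scale, it is asymmetric around the point estimate once exponentiated.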

Interpretation

The diagnostic odds ratio ranges from zero to infinity, although for useful tests it is greater than one, and higher diagnostic odds ratios are indicative of better test performance.[1] Diagnostic odds ratios less than one indicate that the test can be improved by simply inverting the outcome of the test – the test is in the wrong direction, while a diagnostic odds ratio of exactly one means that the test is equally likely to predict a positive outcome whatever the true condition – the test gives no information.

Relation to other measures of diagnostic test accuracy

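The diagnostic odds ratio may be expressed in terms of the sensitivity and specificity of the test:[1]

$$\text{DOR} = \frac{\text{sensitivity} \times \text{specificity}}{(1 - \text{sensitivity}) \times (1 - \text{specificity})}$$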

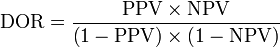

It may also be expressed in terms of the positive predictive value (PPV) and negative predictive value (NPV):[1]

$$\text{DOR} = \frac{\text{PPV} \times \text{NPV}}{(1 - \text{PPV}) \times (1 - \text{NPV})}$$

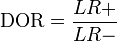

It is also related to the likelihood ratios, $LR+$ and $LR-$:[1]

$$\text{DOR} = \frac{LR+}{LR-}$$
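A quick numerical check shows that these expressions agree with the count-based definition. This is only a sketch: the counts are hypothetical, the predictive values are computed from the same table (so they reflect its sample prevalence), and the likelihood ratios use their standard definitions, LR+ = sensitivity / (1 − specificity) and LR− = (1 − sensitivity) / specificity.

```python
import math

# Hypothetical 2x2 counts, chosen only for illustration.
tp, fn, fp, tn = 40, 10, 5, 45

sens = tp / (tp + fn)          # sensitivity (true positive rate)
spec = tn / (fp + tn)          # specificity (true negative rate)
ppv = tp / (tp + fp)           # positive predictive value
npv = tn / (fn + tn)           # negative predictive value
lr_pos = sens / (1 - spec)     # positive likelihood ratio
lr_neg = (1 - sens) / spec     # negative likelihood ratio

dor_counts = (tp * tn) / (fn * fp)
dor_sens_spec = (sens * spec) / ((1 - sens) * (1 - spec))
dor_pv = (ppv * npv) / ((1 - ppv) * (1 - npv))
dor_lr = lr_pos / lr_neg

# All four routes give the same value up to floating-point error.
for value in (dor_sens_spec, dor_pv, dor_lr):
    assert math.isclose(dor_counts, value)
print(dor_counts)
```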

Uses

The log diagnostic odds ratio is sometimes used in meta-analyses of diagnostic test accuracy studies due to its simplicity (being approximately normally distributed).[3]

Traditional meta-analytic techniques such as inverse-variance weighting can be used to combine log diagnostic odds ratios computed from a number of data sources to produce an overall diagnostic odds ratio for the test in question.
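As a rough illustration, a fixed-effect inverse-variance pooling of log diagnostic odds ratios might look like the sketch below. The function name and the study counts are hypothetical, every cell is assumed to be non-zero, and a real meta-analysis would also need to consider between-study heterogeneity, which this sketch ignores.

```python
import math


def pooled_dor(tables, z=1.96):
    """Fixed-effect inverse-variance pooling of log DORs.

    tables: iterable of (TP, FN, FP, TN) tuples, one per study.
    Returns (pooled DOR, lower limit, upper limit).
    """
    log_dors, weights = [], []
    for tp, fn, fp, tn in tables:
        log_dors.append(math.log((tp * tn) / (fn * fp)))
        variance = 1 / tp + 1 / fn + 1 / fp + 1 / tn  # SE(log DOR) squared
        weights.append(1 / variance)
    total_weight = sum(weights)
    pooled_log = sum(w * d for w, d in zip(weights, log_dors)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return (
        math.exp(pooled_log),
        math.exp(pooled_log - z * pooled_se),
        math.exp(pooled_log + z * pooled_se),
    )


# Three hypothetical studies of the same test.
studies = [(26, 3, 12, 48), (40, 10, 5, 45), (30, 6, 9, 55)]
estimate, lower, upper = pooled_dor(studies)
print(f"Pooled DOR {estimate:.1f} (95% CI {lower:.1f} to {upper:.1f})")
```

Larger studies have smaller variances and therefore contribute more weight to the pooled estimate.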

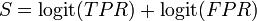

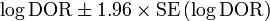

The log diagnostic odds ratio can also be used to study the trade-off between sensitivity and specificity.[4][5] This is done by expressing the log diagnostic odds ratio in terms of the logit of the true positive rate (sensitivity) and false positive rate (1 − specificity), and by additionally constructing a measure, $S$:

$$D = \log{\text{DOR}} = \log{\left[\frac{TPR}{(1-TPR)}\times\frac{(1-FPR)}{FPR}\right]} = \operatorname{logit}(TPR) - \operatorname{logit}(FPR)$$

$$S = \operatorname{logit}(TPR) + \operatorname{logit}(FPR)$$

It is then possible to fit a straight line, $D = a + bS$. If b ≠ 0 then there is a trend in diagnostic performance with threshold beyond the simple trade-off of sensitivity and specificity. The value a can be used to plot a summary ROC (SROC) curve.[4][5]
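The line fit can be sketched with ordinary least squares, taking D as the difference and S as the sum of logit(TPR) and logit(FPR). The unweighted fit, the function names and the (TPR, FPR) pairs are assumptions made for illustration; the cited papers should be consulted for the weighting actually recommended.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def fit_d_on_s(rates):
    """Unweighted least-squares fit of D = a + b*S.

    rates: list of (TPR, FPR) pairs, one per study, each strictly in (0, 1).
    Returns the intercept a and slope b.
    """
    d = [logit(tpr) - logit(fpr) for tpr, fpr in rates]  # log DOR per study
    s = [logit(tpr) + logit(fpr) for tpr, fpr in rates]  # threshold proxy
    n = len(rates)
    mean_s, mean_d = sum(s) / n, sum(d) / n
    sxx = sum((x - mean_s) ** 2 for x in s)
    sxy = sum((x - mean_s) * (y - mean_d) for x, y in zip(s, d))
    b = sxy / sxx
    a = mean_d - b * mean_s
    return a, b


# Hypothetical (TPR, FPR) pairs from four studies.
a, b = fit_d_on_s([(0.90, 0.20), (0.80, 0.10), (0.95, 0.30), (0.70, 0.05)])
print(f"a = {a:.3f}, b = {b:.3f}")
```

If every study shared the same diagnostic odds ratio, the fitted slope b would be zero and a would equal the common log diagnostic odds ratio.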

Example

Consider a test with the following 2×2 confusion matrix:

| | | Condition (as determined by "gold standard") | |
|---|---|---|---|
| | | Positive | Negative |
| Test outcome | Positive | 26 | 12 |
| | Negative | 3 | 48 |

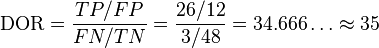

We calculate the diagnostic odds ratio as:

$$\text{DOR} = \frac{26/3}{12/48} = \frac{26 \times 48}{3 \times 12} \approx 34.7$$

This diagnostic odds ratio is greater than one, so we know that the test is discriminating correctly. The approximate 95% confidence interval for the diagnostic odds ratio of this test is [9, 134].
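The quoted interval can be reproduced from the counts in the table using the standard-error approximation for the log diagnostic odds ratio. The intermediate values below are rounded to three decimal places:

$$\log \text{DOR} = \log\left(\frac{26 \times 48}{3 \times 12}\right) \approx 3.546$$

$$\mathrm{SE}(\log \text{DOR}) = \sqrt{\frac{1}{26} + \frac{1}{3} + \frac{1}{12} + \frac{1}{48}} \approx 0.690$$

$$3.546 \pm 1.96 \times 0.690 \approx [2.194,\ 4.898]$$

$$[e^{2.194},\ e^{4.898}] \approx [9.0,\ 134.0]$$

The small count of false negatives (3) dominates the standard error, which is why the interval is so wide.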

Criticisms

The diagnostic odds ratio is undefined when the number of false negatives or false positives is zero – if both false negatives and false positives are zero, then the test is perfect, but if only one is, this ratio does not give a usable measure. The typical response to such a scenario is to add 0.5 to all cells in the contingency table,[1][6] although this should not be seen as a correction as it introduces a bias to results.[4] It is suggested that the adjustment is made to all contingency tables, even if there are no cells with zero entries.[4]
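The 0.5 adjustment can be sketched as below. The function name and the first set of counts are hypothetical; the second call reuses the counts from the example table to show the size of the shift the adjustment introduces.

```python
def corrected_dor(tp, fn, fp, tn, correction=0.5):
    """DOR after adding a constant (0.5 by default) to all four cells."""
    tp, fn, fp, tn = (count + correction for count in (tp, fn, fp, tn))
    return (tp * tn) / (fn * fp)


# Hypothetical table with no false negatives: the unadjusted DOR is undefined,
# but the adjusted value is finite.
print(corrected_dor(tp=30, fn=0, fp=5, tn=65))

# Counts from the example table: the adjusted value differs from the
# unadjusted one even though no cell is zero.
print(corrected_dor(tp=26, fn=3, fp=12, tn=48))
```

For the example table the adjusted value is about 29.4, compared with about 34.7 unadjusted, which illustrates the bias that the adjustment introduces.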

See also

- Sensitivity and specificity

- Binary classification

- Positive predictive value and negative predictive value

- Odds ratio

- Two techniques for the meta-analysis of diagnostic test accuracy, the bivariate method[8] and the hierarchical summary ROC (HSROC) method,[9] are recommended by Leeflang et al.[7] (Authors of the recommending paper are also authors of the two recommended techniques.)

References

- Glas, Afina S.; Lijmer, Jeroen G.; Prins, Martin H.; Bonsel, Gouke J.; Bossuyt, Patrick M.M. (2003). "The diagnostic odds ratio: a single indicator of test performance". Journal of Clinical Epidemiology 56 (11): 1129–1135. doi:10.1016/S0895-4356(03)00177-X. PMID 14615004.

- (PDF) http://srdta.cochrane.org/sites/srdta.cochrane.org/files/uploads/Chapter%2010%20-%20Version%201.0.pdf.

- Gatsonis, C; Paliwal, P (2006). "Meta-analysis of diagnostic and screening test accuracy evaluations: Methodologic primer". American Journal of Roentgenology 187 (2): 271–81. doi:10.2214/AJR.06.0226. PMID 16861527.

- Moses, L. E.; Shapiro, D; Littenberg, B (1993). "Combining independent studies of a diagnostic test into a summary ROC curve: Data-analytic approaches and some additional considerations". Statistics in Medicine 12 (14): 1293–316. doi:10.1002/sim.4780121403. PMID 8210827.

- Dinnes, J; Deeks, J; Kunst, H; Gibson, A; Cummins, E; Waugh, N; Drobniewski, F; Lalvani, A (2007). "A systematic review of rapid diagnostic tests for the detection of tuberculosis infection". Health Technology Assessment 11 (3): 1–196. doi:10.3310/hta11030. PMID 17266837.

- Cox, D.R. (1970). The analysis of binary data. London: Methuen.

- Leeflang, M. M.; Deeks, J. J.; Gatsonis, C; Bossuyt, P. M.; Cochrane Diagnostic Test Accuracy Working Group (2008). "Systematic reviews of diagnostic test accuracy". Annals of Internal Medicine 149 (12): 889–97. doi:10.7326/0003-4819-149-12-200812160-00008. PMC 2956514. PMID 19075208.

- Reitsma, J. B.; Glas, A. S.; Rutjes, A. W.; Scholten, R. J.; Bossuyt, P. M.; Zwinderman, A. H. (2005). "Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews". Journal of Clinical Epidemiology 58 (10): 982–90. doi:10.1016/j.jclinepi.2005.02.022. PMID 16168343.

- Rutter, C. M.; Gatsonis, C. A. (2001). "A hierarchical regression approach to meta-analysis of diagnostic test accuracy evaluations". Statistics in Medicine 20 (19): 2865–84. doi:10.1002/sim.942. PMID 11568945.

![D = \log{\text{DOR}} = \log{\left[\frac{TPR}{(1-TPR)}\times\frac{(1-FPR)}{FPR}\right]} = \operatorname{logit}(TPR) - \operatorname{logit}(FPR)](../I/m/d5c7293dba33a0b50aa61dee3f903fed.png)