Decision-theoretic rough sets

In the mathematical theory of decisions, decision-theoretic rough sets (DTRS) is a probabilistic extension of rough set classification. First created in 1990 by Dr. Yiyu Yao,[1] the extension makes use of loss functions to derive the $\alpha$ and $\beta$ region parameters. Like rough sets, the lower and upper approximations of a set are used.

Definitions

The following contains the basic principles of decision-theoretic rough sets.

Conditional risk

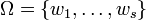

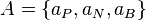

Using the Bayesian decision procedure, the decision-theoretic rough set (DTRS) approach allows for minimum-risk decision making based on observed evidence. Let  be a finite set of

be a finite set of  possible actions and let

possible actions and let  be a finite set of

be a finite set of  states.

states. ![\textstyle P(w_j\mid[x])](../I/m/56f474ef1ae7b9aa1ef063aca8b55b88.png) is

calculated as the conditional probability of an object

is

calculated as the conditional probability of an object  being in state

being in state  given the object description

given the object description

![\textstyle [x]](../I/m/5198616d7cf5af2d0667c9610eaf57f1.png) .

.  denotes the loss, or cost, for performing action

denotes the loss, or cost, for performing action  when the state is

when the state is  .

The expected loss (conditional risk) associated with taking action

.

The expected loss (conditional risk) associated with taking action  is given

by:

is given

by:

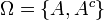
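As an illustration of the conditional risk, the following minimal sketch evaluates $R(a_i\mid[x])$ for every action from a loss matrix and a posterior distribution, then picks the action with the smallest risk. The function name `conditional_risk` and all numeric values are hypothetical and chosen only for this example.

```python
from typing import List, Sequence


def conditional_risk(loss: Sequence[Sequence[float]],
                     posterior: Sequence[float]) -> List[float]:
    """Return R(a_i | [x]) for every action a_i.

    loss[i][j]   is lambda(a_i | w_j), the loss of action a_i in state w_j.
    posterior[j] is P(w_j | [x]); the values must sum to 1.
    """
    if abs(sum(posterior) - 1.0) > 1e-9:
        raise ValueError("posterior probabilities must sum to 1")
    return [sum(l_ij * p_j for l_ij, p_j in zip(row, posterior))
            for row in loss]


# Hypothetical example with m = 2 actions and s = 3 states.
loss = [[0.0, 2.0, 4.0],   # losses of action a_1 in states w_1..w_3
        [1.0, 1.0, 1.0]]   # losses of action a_2 in states w_1..w_3
posterior = [0.5, 0.25, 0.25]

risks = conditional_risk(loss, posterior)
# Index of the minimum-risk action (0-based).
best = min(range(len(risks)), key=risks.__getitem__)
print(risks, best)
```

With these assumed numbers the second action has the lower expected loss, so the Bayesian decision procedure would select it.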

Object classification with the approximation operators can be fitted into the Bayesian decision framework. The

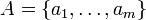

set of actions is given by  , where

, where  ,

,  , and

, and  represent the three

actions in classifying an object into POS(

represent the three

actions in classifying an object into POS( ), NEG(

), NEG( ), and BND(

), and BND( ) respectively. To indicate whether an

element is in

) respectively. To indicate whether an

element is in  or not in

or not in  , the set of states is given by

, the set of states is given by  . Let

. Let

denote the loss incurred by taking action

denote the loss incurred by taking action  when an object belongs to

when an object belongs to

, and let

, and let  denote the loss incurred by take the same action when the object

belongs to

denote the loss incurred by take the same action when the object

belongs to  .

.

Loss functions

Let  denote the loss function for classifying an object in

denote the loss function for classifying an object in  into the POS region,

into the POS region,  denote the loss function for classifying an object in

denote the loss function for classifying an object in  into the BND region, and let

into the BND region, and let  denote the loss function for classifying an object in

denote the loss function for classifying an object in  into the NEG region. A loss function

into the NEG region. A loss function  denotes the loss of classifying an object that does not belong to

denotes the loss of classifying an object that does not belong to  into the regions specified by

into the regions specified by  .

.

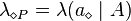

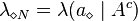

Taking individual can be associated with the expected loss ![\textstyle R(a_\diamond\mid[x])](../I/m/1ba4f7e0c05581c888959d0743aa1d0c.png) actions and can be expressed as:

actions and can be expressed as:

where  ,

,  , and

, and  ,

,  , or

, or  .

.
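The three expected losses can be evaluated directly for a given $P(A\mid[x])$. The sketch below is only an illustration: the `Losses` container, the function names, and the loss values are assumptions made for this example, with $P(A^c\mid[x]) = 1 - P(A\mid[x])$.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Losses:
    """Hypothetical container for the six loss values."""
    pp: float   # lambda_PP: object in A, classified into POS
    bp: float   # lambda_BP: object in A, classified into BND
    np_: float  # lambda_NP: object in A, classified into NEG
    pn: float   # lambda_PN: object not in A, classified into POS
    bn: float   # lambda_BN: object not in A, classified into BND
    nn: float   # lambda_NN: object not in A, classified into NEG


def three_way_risks(l: Losses, p: float) -> Dict[str, float]:
    """Return R(a_P|[x]), R(a_N|[x]), R(a_B|[x]) for p = P(A|[x])."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    q = 1.0 - p  # P(A^c | [x])
    return {
        "P": l.pp * p + l.pn * q,
        "N": l.np_ * p + l.nn * q,
        "B": l.bp * p + l.bn * q,
    }


def min_risk_action(l: Losses, p: float) -> str:
    """Pick the action with the smallest expected loss.

    Ties are broken in the order P, N, B (dictionary insertion order).
    """
    risks = three_way_risks(l, p)
    return min(risks, key=risks.get)


# Assumed losses: correct decisions cost 0, deferring costs 1, errors cost 4.
losses = Losses(pp=0.0, bp=1.0, np_=4.0, pn=4.0, bn=1.0, nn=0.0)
for p in (0.9, 0.5, 0.1):
    print(p, three_way_risks(losses, p), min_risk_action(losses, p))
```

Under these assumed losses, a confident probability leads to a POS or NEG decision, while an uncertain one makes the boundary action the cheapest.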

Minimum-risk decision rules

If we consider the loss functions  and

and  , the following decision rules are formulated (P, N, B):

, the following decision rules are formulated (P, N, B):

- P: If

![\textstyle P(A\mid[x]) \geq \gamma](../I/m/51d1ab15ec8efb5bd69f6a29ef933fd8.png) and

and ![\textstyle P(A\mid[x]) \geq \alpha](../I/m/5a603188660ea41dd9528b209179c0e4.png) , decide POS(

, decide POS( );

); - N: If

![\textstyle P(A\mid[x]) \leq \beta](../I/m/e69d05f05ab62889432e48435e0d7031.png) and

and ![\textstyle P(A\mid[x]) \leq \gamma](../I/m/cfccb929ec0f947c53018105baee349b.png) , decide NEG(

, decide NEG( );

); - B: If

![\textstyle \beta \leq P(A\mid[x]) \leq \alpha](../I/m/063a810649deeafb0cec7968818e3d5c.png) , decide BND(

, decide BND( );

);

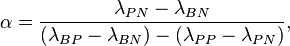

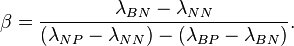

where,

The $\alpha$, $\beta$, and $\gamma$ values define the three different regions, giving us an associated risk for classifying an object. When $\alpha > \beta$, we get $\alpha > \gamma > \beta$ and can simplify (P, N, B) into (P1, N1, B1):

- P1: If $P(A\mid[x]) \geq \alpha$, decide POS($A$);
- N1: If $P(A\mid[x]) \leq \beta$, decide NEG($A$);
- B1: If $\beta < P(A\mid[x]) < \alpha$, decide BND($A$).
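Setting pairs of expected losses equal and solving for $P(A\mid[x])$ gives closed-form thresholds: $\alpha$ from $R(a_P\mid[x]) = R(a_B\mid[x])$, $\beta$ from $R(a_B\mid[x]) = R(a_N\mid[x])$, and $\gamma$ from $R(a_P\mid[x]) = R(a_N\mid[x])$. The following sketch computes them and applies rules (P1, N1, B1). The function names and loss values are hypothetical, and the code assumes the loss ordering $\lambda_{PP} \leq \lambda_{BP} < \lambda_{NP}$ and $\lambda_{NN} \leq \lambda_{BN} < \lambda_{PN}$, which keeps the denominators positive.

```python
from typing import Tuple


def thresholds(pp: float, bp: float, np_: float,
               nn: float, bn: float, pn: float) -> Tuple[float, float, float]:
    """Return (alpha, beta, gamma) from the six loss values.

    Assumes pp <= bp < np_ and nn <= bn < pn (checked below).
    """
    if not (pp <= bp < np_ and nn <= bn < pn):
        raise ValueError("losses violate the assumed ordering")
    alpha = (pn - bn) / ((bp - bn) - (pp - pn))
    beta = (bn - nn) / ((np_ - nn) - (bp - bn))
    gamma = (pn - nn) / ((np_ - nn) - (pp - pn))
    return alpha, beta, gamma


def classify(p: float, alpha: float, beta: float) -> str:
    """Apply rules (P1, N1, B1); only valid when alpha > beta."""
    if not alpha > beta:
        raise ValueError("rules (P1, N1, B1) require alpha > beta")
    if p >= alpha:
        return "POS"
    if p <= beta:
        return "NEG"
    return "BND"


# Assumed losses: correct decisions cost 0, deferring costs 1, errors cost 4.
alpha, beta, gamma = thresholds(pp=0.0, bp=1.0, np_=4.0,
                                nn=0.0, bn=1.0, pn=4.0)
print(alpha, beta, gamma)
for p in (0.8, 0.5, 0.2):
    print(p, classify(p, alpha, beta))
```

For these assumed losses the thresholds satisfy $\alpha > \gamma > \beta$, so only $\alpha$ and $\beta$ are needed to separate the three regions.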

When $\alpha = \beta = \gamma$, we can simplify the rules (P-B) into (P2-B2), which divide the regions based solely on $\alpha$:

- P2: If $P(A\mid[x]) > \alpha$, decide POS($A$);
- N2: If $P(A\mid[x]) < \alpha$, decide NEG($A$);
- B2: If $P(A\mid[x]) = \alpha$, decide BND($A$).
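Rules (P2, N2, B2) depend on an exact equality test, so a sketch of them is safest with exact arithmetic. The example below assumes, purely for illustration, that $P(A\mid[x])$ is estimated as $|A \cap [x]| / |[x]|$ for an equivalence class $[x]$. The function names, the sets, and the value $\alpha = 1/2$ are hypothetical.

```python
from fractions import Fraction
from typing import Set


def conditional_probability(block: Set[int], target: Set[int]) -> Fraction:
    """Estimate P(A | [x]) as |A & [x]| / |[x]| (an assumed estimator)."""
    if not block:
        raise ValueError("equivalence class must be non-empty")
    return Fraction(len(block & target), len(block))


def classify_single_threshold(p: Fraction, alpha: Fraction) -> str:
    """Apply rules (P2, N2, B2), valid when alpha = beta = gamma."""
    if p > alpha:
        return "POS"
    if p < alpha:
        return "NEG"
    return "BND"  # p == alpha exactly


# Hypothetical universe partitioned into three equivalence classes.
blocks = [{1, 2, 3, 4}, {5, 6, 7}, {8}]
target = {1, 2, 5, 6, 7}          # the set A to approximate
alpha = Fraction(1, 2)            # assumed common threshold

regions = [classify_single_threshold(conditional_probability(b, target), alpha)
           for b in blocks]
print(regions)
```

Using `Fraction` avoids floating-point rounding deciding whether an object falls exactly on the boundary.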

The DTRS approach has been applied successfully to data mining, feature selection, information retrieval, and classification, among other areas.

References

- [1] Yao, Y.Y.; Wong, S.K.M.; Lingras, P. (1990). "A decision-theoretic rough set model". Methodologies for Intelligent Systems, 5, Proceedings of the 5th International Symposium on Methodologies for Intelligent Systems (Knoxville, Tennessee, USA: North-Holland): 17–25.
