Correction for attenuation

Correction for attenuation is a statistical procedure, due to Spearman (1904), to "rid a correlation coefficient from the weakening effect of measurement error" (Jensen, 1998), a phenomenon also known as regression dilution. In measurement and statistics, it is also called disattenuation. The correlation between two sets of parameters or measurements is estimated in a manner that accounts for measurement error contained within the estimates of those parameters.

Background

Correlations between parameters are diluted or weakened by measurement error. Disattenuation provides for a more accurate estimate of the correlation between the parameters by accounting for this effect.

Definition

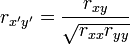

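The disattenuated estimate of the correlation between two sets of parameters or measures is

$$\rho = \frac{\operatorname{corr}(\hat{\beta},\hat{\theta})}{\sqrt{R_\beta R_\theta}},$$

where $\hat{\beta}$ and $\hat{\theta}$ are the two sets of estimates and $R_\beta$ and $R_\theta$ are their separation indices.

That is, the disattenuated correlation is obtained by dividing the correlation between the estimates by the geometric mean of the separation indices of the two sets of estimates. Expressed in terms of classical test theory, the correlation is divided by the geometric mean of the reliability coefficients of the two tests.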

Given two random variables $X$ and $Y$, with correlation $r_{xy}$, and a known reliability for each variable, $r_{xx}$ and $r_{yy}$, the correlation between $X$ and $Y$ corrected for attenuation is

$$r_{x'y'} = \frac{r_{xy}}{\sqrt{r_{xx}\,r_{yy}}}.$$
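As a minimal sketch of this formula, the correction can be written as a small Python function. The function name `disattenuate` and the numbers in the example are illustrative assumptions, not values from the sources cited here.

```python
import math


def disattenuate(r_xy: float, r_xx: float, r_yy: float) -> float:
    """Return r_xy corrected for attenuation, given reliabilities r_xx and r_yy."""
    if not (0.0 < r_xx <= 1.0 and 0.0 < r_yy <= 1.0):
        raise ValueError("reliabilities must lie in (0, 1]")
    # Divide the observed correlation by the geometric mean of the reliabilities.
    return r_xy / math.sqrt(r_xx * r_yy)


# Illustrative numbers only (hypothetical, not taken from any study).
corrected = disattenuate(r_xy=0.42, r_xx=0.70, r_yy=0.80)
print(round(corrected, 3))  # 0.561
```

Because the reliabilities are at most 1, the corrected value is never smaller in magnitude than the observed one. With sample estimates of the reliabilities, the result can exceed 1, so it is usually worth checking the output rather than assuming it is a valid correlation.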

How well the variables are measured affects the correlation of X and Y. The correction for attenuation tells you what the correlation would be if you could measure X and Y with perfect reliability.

If $X$ and $Y$ are taken to be imperfect measurements of underlying variables $X'$ and $Y'$ with independent errors, then $r_{x'y'}$ measures the true correlation between $X'$ and $Y'$.
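The effect can be illustrated with a small simulation. The sketch below assumes normally distributed true values and errors, a true correlation of 0.6, and an error variance of 0.5 for both variables; all of these settings, and the helper `pearson`, are choices made for illustration only.

```python
import math
import random


def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


rng = random.Random(0)  # fixed seed so the run is repeatable
n, true_rho, error_var = 20_000, 0.6, 0.5

# Underlying variables X' and Y': unit variance, correlation true_rho.
x_true = [rng.gauss(0, 1) for _ in range(n)]
y_true = [true_rho * x + math.sqrt(1 - true_rho**2) * rng.gauss(0, 1)
          for x in x_true]

# Observed X and Y: true value plus independent measurement error.
x_obs = [x + rng.gauss(0, math.sqrt(error_var)) for x in x_true]
y_obs = [y + rng.gauss(0, math.sqrt(error_var)) for y in y_true]

# Reliability = true variance / observed variance, known here by construction.
reliability = 1.0 / (1.0 + error_var)

r_xy = pearson(x_obs, y_obs)
r_corrected = r_xy / math.sqrt(reliability * reliability)

print(f"observed correlation:  {r_xy:.3f}")
print(f"corrected correlation: {r_corrected:.3f}")
print(f"true correlation:      {pearson(x_true, y_true):.3f}")
```

Under these assumptions the observed correlation should come out near 0.6 × (1/1.5) = 0.4, and the corrected value near the true correlation of 0.6, up to sampling noise.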

Derivation of the formula

Let $\beta$ and $\theta$ be the true values of two attributes of some person or statistical unit. These values are regarded as random variables by virtue of the statistical unit being selected randomly from some population. Let $\hat{\beta}$ and $\hat{\theta}$ be estimates of $\beta$ and $\theta$ derived either directly by observation-with-error or from application of a measurement model, such as the Rasch model. Also, let

$$\hat{\beta} = \beta + \epsilon_\beta, \qquad \hat{\theta} = \theta + \epsilon_\theta,$$

where $\epsilon_\beta$ and $\epsilon_\theta$ are the measurement errors associated with the estimates $\hat{\beta}$ and $\hat{\theta}$.

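The correlation between two sets of estimates is

$$\operatorname{corr}(\hat{\beta},\hat{\theta}) = \frac{\operatorname{cov}(\hat{\beta},\hat{\theta})}{\sqrt{\operatorname{var}[\hat{\beta}]\operatorname{var}[\hat{\theta}]}} = \frac{\operatorname{cov}(\beta+\epsilon_\beta,\ \theta+\epsilon_\theta)}{\sqrt{\operatorname{var}[\beta+\epsilon_\beta]\operatorname{var}[\theta+\epsilon_\theta]}},$$

which, assuming the errors are uncorrelated with each other and with the true attribute values, gives

$$\operatorname{corr}(\hat{\beta},\hat{\theta}) = \frac{\operatorname{cov}(\beta,\theta)}{\sqrt{(\operatorname{var}[\beta]+\operatorname{var}[\epsilon_\beta])(\operatorname{var}[\theta]+\operatorname{var}[\epsilon_\theta])}}$$

$$= \frac{\operatorname{cov}(\beta,\theta)}{\sqrt{\operatorname{var}[\beta]\operatorname{var}[\theta]}} \cdot \frac{\sqrt{\operatorname{var}[\beta]\operatorname{var}[\theta]}}{\sqrt{(\operatorname{var}[\beta]+\operatorname{var}[\epsilon_\beta])(\operatorname{var}[\theta]+\operatorname{var}[\epsilon_\theta])}} = \rho\,\sqrt{R_\beta R_\theta},$$

where $\rho = \operatorname{corr}(\beta,\theta)$ is the correlation between the true values and $R_\beta$ is the separation index of the set of estimates of $\beta$, which is analogous to Cronbach's alpha; that is, in terms of classical test theory, $R_\beta$ is analogous to a reliability coefficient. Specifically, the separation index is given as follows:

$$R_\beta = \frac{\operatorname{var}[\beta]}{\operatorname{var}[\beta]+\operatorname{var}[\epsilon_\beta]} = \frac{\operatorname{var}[\hat{\beta}]-\operatorname{var}[\epsilon_\beta]}{\operatorname{var}[\hat{\beta}]},$$

where the mean squared standard error of person estimate gives an estimate of the variance of the errors $\epsilon_\beta$. The standard errors are normally produced as a by-product of the estimation process (see Rasch model estimation).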
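The separation index and the resulting disattenuated correlation can be sketched in Python as follows. The person estimates and standard errors are made-up values standing in for the output of an estimation routine, and the function names are hypothetical. Using the sample variance (`statistics.variance`) for the variance of the estimates is also an assumption of this sketch.

```python
import math
import statistics


def separation_index(estimates, standard_errors):
    """R = (var[estimates] - mean squared standard error) / var[estimates]."""
    observed_var = statistics.variance(estimates)
    # The mean squared standard error estimates the error variance.
    error_var = statistics.fmean([se**2 for se in standard_errors])
    return (observed_var - error_var) / observed_var


def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    ss_a = sum((x - mean_a) ** 2 for x in a)
    ss_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(ss_a * ss_b)


# Hypothetical person estimates and their standard errors.
beta_hat = [-1.2, -0.4, 0.1, 0.5, 1.3, 2.0]
beta_se = [0.45, 0.40, 0.38, 0.38, 0.42, 0.50]
theta_hat = [0.4, -0.8, 0.9, -0.6, 0.2, 1.6]
theta_se = [0.50, 0.48, 0.45, 0.45, 0.47, 0.55]

r_beta = separation_index(beta_hat, beta_se)
r_theta = separation_index(theta_hat, theta_se)

observed = pearson(beta_hat, theta_hat)
# Divide by the geometric mean of the two separation indices.
disattenuated = observed / math.sqrt(r_beta * r_theta)

print(f"R_beta = {r_beta:.3f}, R_theta = {r_theta:.3f}")
print(f"observed = {observed:.3f}, disattenuated = {disattenuated:.3f}")
```

With so few units the numbers carry no statistical weight. The point is only to show which quantities enter the calculation and in what order.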

References

- Jensen, A.R. (1998). The g Factor: The Science of Mental Ability. Praeger, Connecticut, USA. ISBN 0-275-96103-6.

- Spearman, C. (1904). "The Proof and Measurement of Association between Two Things". The American Journal of Psychology, 15 (1), 72–101. JSTOR 1412159.

External links

- Disattenuating correlations

- Disattenuation of correlation and regression coefficients: Jason W. Osborne
