Cook's distance

In statistics, Cook's distance or Cook's D is a commonly used estimate of the influence of a data point when performing least squares regression analysis.[1] In a practical ordinary least squares analysis, Cook's distance can be used in two main ways: to flag data points that are particularly worth checking for validity, and to indicate regions of the design space where additional data points would be valuable. It is named after the American statistician R. Dennis Cook, who introduced the concept in 1977.[2][3]

Definition

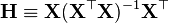

Cook's distance measures the effect of deleting a given observation. Data points with large residuals (outliers) and/or high leverage may distort the outcome and accuracy of a regression. Points with a large Cook's distance are considered to merit closer examination in the analysis. For the algebraic expression, first define

as the  hat matrix (or projection matrix) of the

hat matrix (or projection matrix) of the  observations of each explanatory variables. Then let

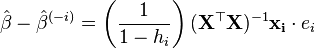

observations of each explanatory variables. Then let  be the OLS estimate of

be the OLS estimate of  that results from omitting the

that results from omitting the  -th observation (

-th observation ( ). Then we have[4]

). Then we have[4]

where  is the residual (i.e., the difference between the observed value and the value fitted by the proposed model), and

is the residual (i.e., the difference between the observed value and the value fitted by the proposed model), and  , defined as

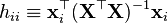

, defined as

is the leverage, i.e., the  -th diagonal element of

-th diagonal element of  . With this, we can define Cook's distance as

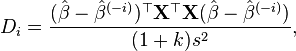

. With this, we can define Cook's distance as

where  is the number of fitted parameters, and

is the number of fitted parameters, and  is the mean square error of the regression model. Algebraically equivalent is the following expression

is the mean square error of the regression model. Algebraically equivalent is the following expression

where  is the OLS estimate of the variance of the error term, defined as

is the OLS estimate of the variance of the error term, defined as

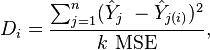

And a third equivalent expression is

where:

is the prediction from the full regression model for observation j;

is the prediction from the full regression model for observation j; is the prediction for observation j from a refitted regression model in which observation i has been omitted;

is the prediction for observation j from a refitted regression model in which observation i has been omitted;
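The equivalence between the leverage-based expression and the refit-based expression can be checked numerically. The following is a minimal sketch, assuming NumPy is available and that the design matrix has full column rank. The function names `cooks_distance` and `cooks_distance_by_refit`, and the synthetic data, are illustrative choices and not part of any library.

```python
import numpy as np


def cooks_distance(X, y):
    """Cook's distance from residuals and leverages (closed form).

    X is the n x p design matrix (include a column of ones for an
    intercept); y is the length-n response vector.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape

    xtx_inv = np.linalg.inv(X.T @ X)
    beta_hat = xtx_inv @ X.T @ y
    e = y - X @ beta_hat                          # residuals e_i
    h = np.einsum("ij,jk,ik->i", X, xtx_inv, X)   # leverages h_ii
    mse = e @ e / (n - p)                         # s^2, the error variance estimate
    return e**2 / (p * mse) * h / (1.0 - h) ** 2


def cooks_distance_by_refit(X, y):
    """Cook's distance by refitting the model without each observation."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape

    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    fitted = X @ beta_hat
    mse = np.sum((y - fitted) ** 2) / (n - p)

    d = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i                  # drop observation i
        beta_i, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        # Sum of squared changes in all n predictions, scaled by p * MSE
        d[i] = np.sum((fitted - X @ beta_i) ** 2) / (p * mse)
    return d


# Synthetic data, made up for illustration: a noisy line with one
# high-leverage point pulled far away from the trend.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 20)
y = 1.0 + 2.0 * x + rng.normal(scale=0.5, size=x.size)
x[-1], y[-1] = 20.0, 10.0
X = np.column_stack([np.ones_like(x), x])

d_closed = cooks_distance(X, y)
d_refit = cooks_distance_by_refit(X, y)
```

The closed form needs only one fit, whereas the refit version performs $n$ additional fits. The two should agree up to floating-point error, and the modified last observation should receive the largest distance.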

Detecting highly influential observations

There are different opinions regarding what cut-off values to use for spotting highly influential points. A simple operational guideline of  has been suggested.[5] Others have indicated that

has been suggested.[5] Others have indicated that  , where

, where  is the number of observations, might be used.[6]

is the number of observations, might be used.[6]

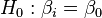

A conservative approach relies on the fact that Cook's distance has the form W/p, where W is formally identical to the Wald statistic that one uses for testing that  using some

using some ![\hat{\beta}_{[-i]}](../I/m/7cb48b2b662ad97813c6e54661e3766b.png) . Recalling that W/p has an

. Recalling that W/p has an  distribution (with p and n-p degrees of freedom), we see that Cook's distance is equivalent to the F statistic for testing this hypothesis, and we can thus use

distribution (with p and n-p degrees of freedom), we see that Cook's distance is equivalent to the F statistic for testing this hypothesis, and we can thus use  as a threshold.[7]

as a threshold.[7]
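The cut-offs can be applied side by side to see how much they disagree. The sketch below is illustrative only: `flag_influential` is a hypothetical helper, the distances are made-up numbers, and the F quantile is passed in by the caller rather than computed. If SciPy is available, a call such as `scipy.stats.f.ppf(1 - alpha, p, n - p)` could supply it.

```python
def flag_influential(distances, f_quantile=None):
    """Return the indices that exceed each cut-off.

    distances: sequence of Cook's distances, one per observation.
    f_quantile: optional F quantile for p and n - p degrees of freedom,
        supplied by the caller (not computed here).
    """
    n = len(distances)
    cutoffs = {"D > 1": 1.0, "D > 4/n": 4.0 / n}
    if f_quantile is not None:
        cutoffs["D > F quantile"] = float(f_quantile)
    return {
        rule: [i for i, d in enumerate(distances) if d > cut]
        for rule, cut in cutoffs.items()
    }


# Hypothetical distances for n = 8 observations (not from real data).
example = [0.02, 0.10, 0.60, 0.01, 1.30, 0.05, 0.45, 0.03]

# 0.78 is an illustrative placeholder threshold, not a computed quantile.
flags = flag_influential(example, f_quantile=0.78)
```

With these numbers the $4/n$ rule (a cut-off of 0.5) flags observations 2 and 4, while the other two rules flag only observation 4. The $4/n$ rule becomes stricter as the sample grows.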

Interpretation

Specifically, $D_i$ can be interpreted as the distance one's estimates move within the confidence ellipsoid that represents a region of plausible values for the parameters. This is shown by an alternative but equivalent representation of Cook's distance in terms of changes to the estimates of the regression parameters between the cases where the particular observation is either included or excluded from the regression analysis.
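One way to make the ellipsoid reading concrete is sketched below. It assumes the usual normal-error OLS setting, with $p$ fitted parameters, $n$ observations and error variance estimate $s^2$. The confidence level $1 - \alpha$ and the set name $\mathcal{E}_{1-\alpha}$ are introduced here only for illustration.

```latex
% (1 - \alpha) confidence ellipsoid for \beta, centred at the full-data estimate
\mathcal{E}_{1-\alpha} = \left\{ \beta :
  \frac{(\beta - \hat{\beta})^{\mathsf T} X^{\mathsf T} X \, (\beta - \hat{\beta})}{p \, s^2}
  \le F_{p,\,n-p,\,1-\alpha} \right\}

% Evaluating the left-hand side at \beta = \hat{\beta}_{[-i]} gives D_i, hence
D_i \le F_{p,\,n-p,\,1-\alpha}
  \iff \hat{\beta}_{[-i]} \in \mathcal{E}_{1-\alpha}
```

Read this way, a value of $D_i$ equal to the median of the $F_{p,n-p}$ distribution means that deleting observation $i$ moves the estimate to the edge of the 50% confidence region around $\hat{\beta}$.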

References

- [1] Mendenhall, William; Sincich, Terry (1996). A Second Course in Statistics: Regression Analysis (5th ed.). Upper Saddle River, NJ: Prentice-Hall. p. 422. ISBN 0-13-396821-9. "A measure of overall influence an outlying observation has on the estimated coefficients was proposed by R. D. Cook (1979). Cook's distance, $D_i$, is calculated..."

- [2] Cook, R. Dennis (February 1977). "Detection of Influential Observations in Linear Regression". Technometrics (American Statistical Association) 19 (1): 15–18. doi:10.2307/1268249. JSTOR 1268249. MR 0436478.

- [3] Cook, R. Dennis (March 1979). "Influential Observations in Linear Regression". Journal of the American Statistical Association (American Statistical Association) 74 (365): 169–174. doi:10.2307/2286747. JSTOR 2286747. MR 0529533.

- [4] Hayashi, Fumio (2000). Econometrics. Princeton University Press. pp. 21–23.

- [5] Cook, R. Dennis; Weisberg, Sanford (1982). Residuals and Influence in Regression. New York, NY: Chapman & Hall. ISBN 0-412-24280-X.

- [6] Bollen, Kenneth A.; Jackman, Robert W. (1990). Fox, John; Long, J. Scott, eds. Modern Methods of Data Analysis. Newbury Park, CA: Sage. pp. 257–91. ISBN 0-8039-3366-5.

- [7] Aguinis, Herman; Gottfredson, Ryan K.; Joo, Harry (2013). "Best-Practice Recommendations for Defining, Identifying, and Handling Outliers" (PDF). Organizational Research Methods (Sage) 16 (2): 270–301. doi:10.1177/1094428112470848. Retrieved 4 December 2015.

Further reading

- Atkinson, Anthony; Riani, Marco (2000). "Deletion Diagnostics". Robust Diagnostics and Regression Analysis. New York: Springer. pp. 22–25. ISBN 0-387-95017-6.

- Heiberger, Richard M.; Holland, Burt (2013). "Case Statistics". Statistical Analysis and Data Display. Springer Science & Business Media. pp. 312–27. ISBN 9781475742848.

- Krasker, William S.; Kuh, Edwin; Welsch, Roy E. (1983). "Estimation for dirty data and flawed models". Handbook of Econometrics 1. Elsevier. pp. 651–698. doi:10.1016/S1573-4412(83)01015-6.

- Aguinis, Herman; Gottfredson, Ryan K.; Joo, Harry (2013). "Best-Practice Recommendations for Defining, Identifying, and Handling Outliers" (PDF). Organizational Research Methods (Sage) 16 (2): 270–301. doi:10.1177/1094428112470848. Retrieved 4 December 2015.
