Chernoff bound

In probability theory, the Chernoff bound, named after Herman Chernoff but due to Herman Rubin,[1] gives exponentially decreasing bounds on tail distributions of sums of independent random variables. It is a sharper bound than the known first- or second-moment-based tail bounds such as Markov's inequality or Chebyshev's inequality, which only yield power-law bounds on tail decay. However, the Chernoff bound requires that the variates be independent, a condition that neither Markov's nor Chebyshev's inequality requires.

It is related to the (historically prior) Bernstein inequalities, and to Hoeffding's inequality.

The generic bound

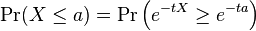

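The generic Chernoff bound for a random variable X is attained by applying Markov's inequality to $e^{tX}$.[2] For every $t > 0$:

$$\Pr(X \geq a) = \Pr\left(e^{t\cdot X} \geq e^{t\cdot a}\right) \leq \frac{\mathrm{E}\left[e^{t\cdot X}\right]}{e^{t\cdot a}}.$$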

When X is the sum of n random variables X1, ..., Xn, we get for any t > 0,

$$\Pr(X \geq a) \leq e^{-ta}\,\mathrm{E}\left[\prod_i e^{t\cdot X_i}\right].$$

In particular, optimizing over t and using the assumption that the Xi are independent, we obtain

$$\Pr(X \geq a) \leq \min_{t>0} e^{-ta} \prod_i \mathrm{E}\left[e^{t\cdot X_i}\right]. \qquad (1)$$

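Similarly,

$$\Pr(X \leq a) = \Pr\left(e^{-t\cdot X} \geq e^{-t\cdot a}\right) \leq e^{t\cdot a}\,\mathrm{E}\left[\prod_i e^{-t\cdot X_i}\right],$$

and so,

$$\Pr(X \leq a) \leq \min_{t>0} e^{ta} \prod_i \mathrm{E}\left[e^{-t\cdot X_i}\right].$$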

Specific Chernoff bounds are attained by calculating $\mathrm{E}\left[e^{-t\cdot X_i}\right]$ for specific instances of the basic variables $X_i$.
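As a rough numerical illustration of (1), the following Python sketch evaluates the generic bound for a sum of independent Bernoulli variables with (possibly different) success probabilities, and compares it with the exact tail. Assumptions: the minimum over t is approximated on a finite grid of t values, the product is handled through its logarithm to avoid overflow, and all function names are hypothetical. Because every t > 0 yields a valid bound, the grid minimum may be slightly looser than the true minimum but is never invalid.

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def log_mgf_bernoulli(t: float, p: float) -> float:
    """log E[exp(t * X_i)] for X_i ~ Bernoulli(p)."""
    return math.log(1.0 - p + p * math.exp(t))


def generic_chernoff_upper(a: float, probs: list[float],
                           t_max: float = 10.0, steps: int = 2000) -> float:
    """Grid approximation of min_{t>0} e^{-ta} * prod_i E[e^{t X_i}], as in (1)."""
    best_log = 0.0  # as t -> 0+ the bound tends to 1, i.e. a log-bound of 0
    for k in range(1, steps + 1):
        t = t_max * k / steps
        log_bound = -t * a + sum(log_mgf_bernoulli(t, p) for p in probs)
        best_log = min(best_log, log_bound)  # each grid point is itself a valid bound
    return math.exp(best_log)


def exact_upper_tail(a: float, probs: list[float]) -> float:
    """Exact Pr(X >= a) for X = sum of independent Bernoulli(p_i), by dynamic programming."""
    dist = [1.0]  # dist[k] = Pr(partial sum == k)
    for p in probs:
        nxt = [0.0] * (len(dist) + 1)
        for k, mass in enumerate(dist):
            nxt[k] += mass * (1.0 - p)
            nxt[k + 1] += mass * p
        dist = nxt
    return sum(mass for k, mass in enumerate(dist) if k >= a)


probs = [0.3] * 20  # hypothetical example: 20 variables with p_i = 0.3, so E[X] = 6
print(exact_upper_tail(12, probs), generic_chernoff_upper(12, probs))
```

Working in log space keeps the product of moment generating functions from overflowing when n or t is large.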

Example

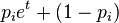

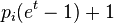

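Let X1, ..., Xn be independent Bernoulli random variables, each having probability p > 1/2 of being equal to 1. For a Bernoulli variable (using $1 + x \leq e^x$):

$$\mathrm{E}\left[e^{t\cdot X_i}\right] = 1 + p\left(e^t - 1\right) \leq e^{p\left(e^t - 1\right)}$$

So, by independence:

$$\mathrm{E}\left[e^{t\cdot X}\right] \leq e^{n\cdot p\left(e^t - 1\right)}$$

For any $\delta > 0$, taking $t = \ln(1+\delta) > 0$ and $a = (1+\delta)np$ gives:

$$\mathrm{E}\left[e^{t\cdot X}\right] \leq e^{\delta n p} \qquad \text{and} \qquad e^{-ta} = \frac{1}{(1+\delta)^{a}},$$

and the generic Chernoff bound gives:[3]:64

$$\Pr[X \geq (1+\delta)np] \leq \frac{e^{\delta n p}}{(1+\delta)^a} = \left[\frac{e^{\delta}}{(1+\delta)^{1+\delta}}\right]^{np}$$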

The probability of simultaneous occurrence of more than n/2 of the events {Xk = 1} has an exact value:

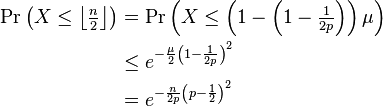

A lower bound on this probability can be calculated based on Chernoff's inequality:

Indeed, noticing that μ = np, we get by the multiplicative form of Chernoff bound (see below or Corollary 13.3 in Sinclair's class notes),[4]
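The example can be checked numerically. The Python sketch below (function names hypothetical) sums the exact binomial tail for Pr[X > n/2] and compares it with the Chernoff lower bound $1 - e^{-\frac{n}{2p}(p - 1/2)^2}$; for p > 1/2 the exact value should never fall below the bound.

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def exact_majority_prob(n: int, p: float) -> float:
    """Pr[X > n/2] for X ~ Binomial(n, p): sum over i from floor(n/2) + 1 to n."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i)
               for i in range(n // 2 + 1, n + 1))


def chernoff_majority_lower_bound(n: int, p: float) -> float:
    """1 - exp(-n (p - 1/2)^2 / (2p)), meaningful for p > 1/2."""
    return 1.0 - math.exp(-n * (p - 0.5) ** 2 / (2 * p))


for n, p in [(11, 0.6), (101, 0.6), (101, 0.75)]:
    print(n, p, exact_majority_prob(n, p), chernoff_majority_lower_bound(n, p))
```

For small n or p close to 1/2 the bound is weak (close to 0), which matches the exponent's dependence on $n(p - 1/2)^2$.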

This result admits various generalizations as outlined below. One can encounter many flavours of Chernoff bounds: the original additive form (which gives a bound on the absolute error) or the more practical multiplicative form (which bounds the error relative to the mean).

Additive form (absolute error)

The following theorem is due to Wassily Hoeffding[5] and is hence called the Chernoff-Hoeffding theorem.

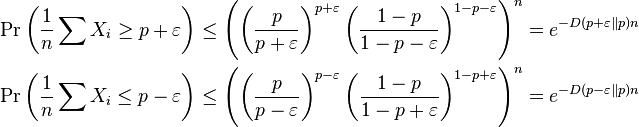

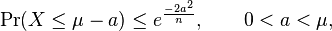

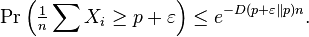

- Chernoff-Hoeffding Theorem. Suppose X1, ..., Xn are i.i.d. random variables, taking values in {0, 1}. Let p = E[Xi] and ε > 0. Then

- where

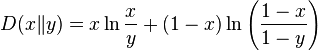

- is the Kullback–Leibler divergence between Bernoulli distributed random variables with parameters x and y respectively. If p ≥ 1/2, then

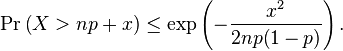

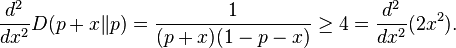

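A simpler bound, $\Pr\left(\frac{1}{n}\sum X_i \geq p + \varepsilon\right) \leq e^{-2\varepsilon^2 n}$, follows by relaxing the theorem using $D(p + x\,\|\,p) \geq 2x^2$. This inequality follows from the convexity of $D(p + x\,\|\,p)$, the fact that both sides and their first derivatives vanish at x = 0, and the fact that

$$\frac{d^2}{dx^2} D(p + x\,\|\,p) = \frac{1}{(p+x)(1-p-x)} \geq 4 = \frac{d^2}{dx^2}\left(2x^2\right).$$

This result is a special case of Hoeffding's inequality. Sometimes, the bound

$$D\big((1+x)p\,\|\,p\big) \geq \frac{1}{4} x^2 p, \qquad -\tfrac{1}{2} \leq x \leq \tfrac{1}{2},$$

which is stronger for p < 1/8, is also used.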
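A small Python sketch (helper names hypothetical) comparing, for one concrete case, the exact binomial tail with the Chernoff-Hoeffding bound $e^{-D(p+\varepsilon\|p)n}$ and with the relaxed bound $e^{-2\varepsilon^2 n}$ obtained from $D(p + x\,\|\,p) \geq 2x^2$. It also spot-checks that inequality on a grid. The small tolerance in the threshold only guards against floating-point rounding of n(p + ε).

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def kl_bernoulli(x: float, y: float) -> float:
    """D(x || y) between Bernoulli(x) and Bernoulli(y), with 0 * log 0 = 0; needs 0 < y < 1."""
    def term(a: float, b: float) -> float:
        return 0.0 if a == 0 else a * math.log(a / b)
    return term(x, y) + term(1 - x, 1 - y)


def exact_mean_tail(n: int, p: float, eps: float) -> float:
    """Exact Pr((1/n) sum X_i >= p + eps) for i.i.d. Bernoulli(p) variables."""
    k_min = math.ceil(n * (p + eps) - 1e-9)  # tolerance guards against float rounding
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


def chernoff_hoeffding_bound(n: int, p: float, eps: float) -> float:
    return math.exp(-kl_bernoulli(p + eps, p) * n)


def relaxed_bound(n: int, eps: float) -> float:
    return math.exp(-2 * eps**2 * n)


n, p, eps = 100, 0.3, 0.1
print(exact_mean_tail(n, p, eps), chernoff_hoeffding_bound(n, p, eps), relaxed_bound(n, eps))
```

The ordering exact ≤ KL bound ≤ relaxed bound is what the theorem and the relaxation predict.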

Multiplicative form (relative error)

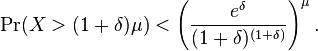

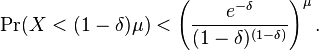

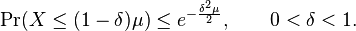

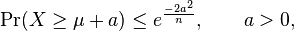

- Multiplicative Chernoff Bound. Suppose X1, ..., Xn are independent random variables taking values in {0, 1}. Let X denote their sum and let μ = E[X] denote the sum's expected value. Then for any δ > 0,

A similar proof strategy can be used to show that

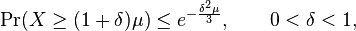

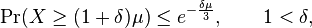

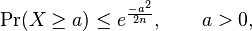

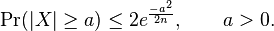

The above formula is often unwieldy in practice,[3] so the following looser but more convenient bounds are often used:
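A Python sketch (names hypothetical) that evaluates the exact-form multiplicative bounds in log space, checks numerically that the convenient forms $e^{-\delta^2\mu/3}$ (upper tail, 0 < δ ≤ 1) and $e^{-\delta^2\mu/2}$ (lower tail, 0 < δ < 1) are indeed looser, and compares with an exact binomial tail for one assumed example (n = 50, p = 0.2, so μ = 10).

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def mult_upper(mu: float, delta: float) -> float:
    """(e^delta / (1 + delta)^(1 + delta))^mu, computed in log space."""
    return math.exp(mu * (delta - (1 + delta) * math.log1p(delta)))


def mult_lower(mu: float, delta: float) -> float:
    """(e^-delta / (1 - delta)^(1 - delta))^mu for 0 < delta < 1, computed in log space."""
    return math.exp(mu * (-delta - (1 - delta) * math.log1p(-delta)))


def binom_tail_ge(n: int, p: float, k_min: int) -> float:
    """Exact Pr(X >= k_min) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


mu = 10.0
for delta in (0.1, 0.5, 0.9):
    print(delta, mult_upper(mu, delta), math.exp(-delta**2 * mu / 3),
          mult_lower(mu, delta), math.exp(-delta**2 * mu / 2))
```

`math.log1p` is used so that small δ does not lose precision in log(1 + δ).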

Special cases

We can obtain stronger bounds using simpler proof techniques for some special cases of symmetric random variables.

Suppose X1, ..., Xn are independent random variables, and let X denote their sum.

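- If Pr(Xi = 1) = Pr(Xi = −1) = 1/2, then

$$\Pr(X \geq a) \leq e^{-\frac{a^2}{2n}}, \qquad a > 0,$$

and therefore also

$$\Pr(|X| \geq a) \leq 2e^{-\frac{a^2}{2n}}, \qquad a > 0.$$

- If $\Pr(X_i = 1) = \Pr(X_i = 0) = \tfrac{1}{2}$ and $\mathrm{E}[X] = \mu = \frac{n}{2}$, then

$$\Pr(X \geq \mu + a) \leq e^{-\frac{2a^2}{n}}, \qquad a > 0,$$

$$\Pr(X \leq \mu - a) \leq e^{-\frac{2a^2}{n}}, \qquad 0 < a < \mu.$$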
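When Pr(Xi = 1) = Pr(Xi = −1) = 1/2, the sum satisfies X = 2B − n, where B ~ Binomial(n, 1/2) counts the +1 values, so the tail can be computed exactly and compared with $e^{-a^2/(2n)}$. A Python sketch (names hypothetical):

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def rademacher_tail(n: int, a: int) -> float:
    """Exact Pr(X >= a) for X = sum of n independent +/-1 signs, each with probability 1/2.

    X >= a  <=>  B >= (n + a) / 2, where B ~ Binomial(n, 1/2) counts the +1 signs.
    """
    b_min = max(0, (n + a + 1) // 2)  # integer ceiling of (n + a) / 2
    return sum(math.comb(n, b) for b in range(b_min, n + 1)) / 2**n


def rademacher_bound(n: int, a: float) -> float:
    """Chernoff bound e^{-a^2 / (2n)} for the symmetric +/-1 case."""
    return math.exp(-a**2 / (2 * n))


n = 100
for a in (10, 20, 30):
    print(a, rademacher_tail(n, a), rademacher_bound(n, a))
```

Exact integer arithmetic (`math.comb` and division by `2**n`) avoids any rounding in the tail before the final conversion to float.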

Applications

Chernoff bounds have very useful applications in set balancing and packet routing in sparse networks.

The set balancing problem arises while designing statistical experiments. Typically, given the features of each participant in the experiment, we need to know how to divide the participants into two disjoint groups such that each feature is as balanced as possible between the two groups.

Chernoff bounds are also used to obtain tight bounds for permutation routing problems, which reduce network congestion while routing packets in sparse networks.

Chernoff bounds can be effectively used to evaluate the "robustness level" of an application/algorithm by exploring its perturbation space with randomization.[6] The use of the Chernoff bound makes it possible to abandon the strong, and mostly unrealistic, small perturbation hypothesis (that the perturbation magnitude is small). The robustness level can, in turn, be used either to validate or reject a specific algorithmic choice, a hardware implementation, or the appropriateness of a solution whose structural parameters are affected by uncertainties.
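One common way such a randomized robustness analysis is set up (an assumption here; the cited chapter may state it differently) is to estimate the probability p that a randomly perturbed system still behaves acceptably from N independent trials. Applying the additive form to both tails with the relaxation $D(p + x\,\|\,p) \geq 2x^2$ gives $\Pr(|\hat p - p| \geq \varepsilon) \leq 2e^{-2\varepsilon^2 N}$, so $N \geq \ln(2/\eta)/(2\varepsilon^2)$ trials make the failure probability at most η. A minimal Python sketch of that calculation (function name hypothetical):

```python
import math

# Hypothetical helper name; an illustrative sketch only.


def chernoff_sample_size(eps: float, eta: float) -> int:
    """Smallest integer N with 2 * exp(-2 * eps**2 * N) <= eta.

    eps: tolerated absolute error of the estimated probability.
    eta: tolerated probability that the estimate misses by eps or more.
    """
    if not (0 < eps < 1 and 0 < eta < 1):
        raise ValueError("eps and eta must lie in (0, 1)")
    return math.ceil(math.log(2 / eta) / (2 * eps**2))


print(chernoff_sample_size(0.05, 0.01))  # 1060
```

The required N does not depend on the dimension or structure of the perturbation space, only on ε and η, which is what makes the approach attractive when perturbations are not small.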

Matrix bound

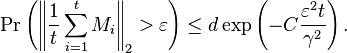

Rudolf Ahlswede and Andreas Winter introduced a Chernoff bound for matrix-valued random variables.[7]

If M is distributed according to some distribution over d × d matrices with zero mean, and if M1, ..., Mt are independent copies of M, then for any ε > 0,

$$\Pr\left(\left\| \frac{1}{t} \sum_{i=1}^t M_i - \mathrm{E}[M] \right\|_2 > \varepsilon \right) \leq d \exp\left(-C \frac{\varepsilon^2 t}{\gamma^2}\right),$$

where $\|M\|_2 \leq \gamma$ holds almost surely and C > 0 is an absolute constant.

Notice that the number of samples in the inequality depends logarithmically on d. In general, unfortunately, such a dependency is inevitable: take for example a diagonal random sign matrix of dimension d. The operator norm of the sum of t independent samples is precisely the maximum deviation among d independent random walks of length t. In order to achieve a fixed bound on the maximum deviation with constant probability, it is easy to see that t should grow logarithmically with d in this scenario.[8]

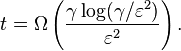

The following theorem can be obtained by assuming M has low rank, in order to avoid the dependency on the dimensions.

Theorem without the dependency on the dimensions

Let 0 < ε < 1 and M be a random symmetric real matrix with $\|\mathrm{E}[M]\|_2 \leq 1$ and $\|M\|_2 \leq \gamma$ almost surely. Assume that each element on the support of M has at most rank r. Set

$$t = \Omega\left(\frac{\gamma \log(\gamma/\varepsilon^2)}{\varepsilon^2}\right).$$

If $r \leq t$ holds almost surely, then

$$\Pr\left(\left\| \frac{1}{t} \sum_{i=1}^t M_i - \mathrm{E}[M] \right\|_2 > \varepsilon \right) \leq \frac{1}{\mathbf{poly}(t)},$$

where M1, ..., Mt are i.i.d. copies of M.

Sampling variant

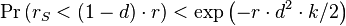

The following variant of Chernoff's bound can be used to bound the probability that a majority in a population will become a minority in a sample, or vice versa.[9]

Suppose there is a general population A and a sub-population B ⊆ A. Denote the relative size of the sub-population, |B|/|A|, by r.

Suppose we pick an integer k and a random sample S ⊂ A of size k. Denote the relative size of the sub-population in the sample, |B ∩ S|/|S|, by rS.

Then, for every fraction d ∈ [0, 1]:

$$\Pr\left(r_S < (1-d)\cdot r\right) < \exp\left(-r \cdot d^2 \cdot \frac{k}{2}\right)$$

In particular, if B is a majority in A (i.e. r > 0.5), we can bound the probability that B will remain a majority in S (rS > 0.5) by taking d = 1 − 1/(2r):[10]

$$\Pr\left(r_S > 0.5\right) > 1 - \exp\left(-r \cdot \left(1 - \frac{1}{2r}\right)^2 \cdot \frac{k}{2}\right)$$

This bound is not tight at all. For example, when r = 0.5 we have d = 0, and the bound reduces to the trivial statement Pr > 0.
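A Python sketch of the majority-retention bound $1 - \exp\left(-r(1 - \frac{1}{2r})^2 \frac{k}{2}\right)$, compared with the exact probability for one hypothetical population (|A| = 1000, |B| = 700, sample size k = 51). It assumes the sample is drawn uniformly without replacement, so that |B ∩ S| is hypergeometric; names and numbers are illustrative.

```python
import math

# Hypothetical helper names and example sizes; an illustrative sketch only.


def majority_retention_bound(r: float, k: int) -> float:
    """Lower bound 1 - exp(-r (1 - 1/(2r))^2 k / 2) on Pr(r_S > 0.5), for r >= 0.5."""
    d = 1 - 1 / (2 * r)
    return 1 - math.exp(-r * d**2 * k / 2)


def exact_majority_retention(size_a: int, size_b: int, k: int) -> float:
    """Pr(|B ∩ S| > k/2) for a uniform sample S of size k drawn without replacement."""
    total = math.comb(size_a, k)
    hits = sum(math.comb(size_b, j) * math.comb(size_a - size_b, k - j)
               for j in range(k // 2 + 1, min(k, size_b) + 1))
    return hits / total  # exact big-integer ratio, converted to float


print(majority_retention_bound(0.7, 51), exact_majority_retention(1000, 700, 51))
```

The gap between the two printed values illustrates the remark that the bound is far from tight.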

Proofs

Chernoff-Hoeffding Theorem (additive form)

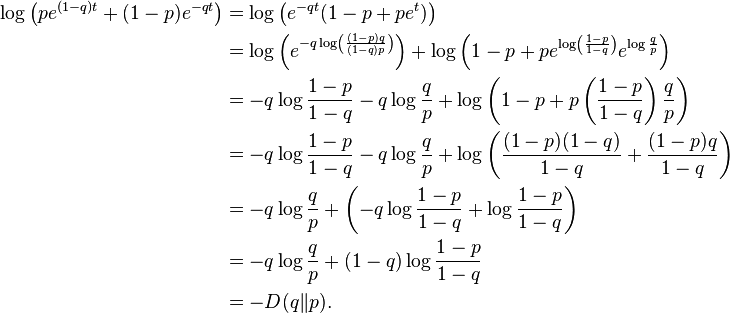

Let q = p + ε. Taking a = nq in (1), we obtain:

Now, knowing that Pr(Xi = 1) = p, Pr(Xi = 0) = 1 − p, we have

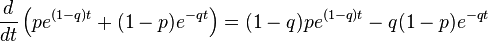

Therefore we can easily compute the infimum, using calculus:

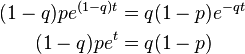

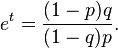

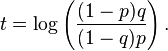

Setting the equation to zero and solving, we have

so that

Thus,

As q = p + ε > p, we see that t > 0, so our bound is satisfied on t. Having solved for t, we can plug back into the equations above to find that

We now have our desired result, that

To complete the proof for the symmetric case, we simply define the random variable Yi = 1 − Xi, apply the same proof, and plug it into our bound.
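The calculus step can be spot-checked numerically. This Python sketch (names hypothetical) evaluates $f(t) = pe^{(1-q)t} + (1-p)e^{-qt}$ at the closed-form minimiser $t^* = \log\frac{(1-p)q}{(1-q)p}$, confirms that $\log f(t^*) = -D(q\,\|\,p)$, and checks that no point on a coarse grid of t > 0 does better.

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def f(t: float, p: float, q: float) -> float:
    """Per-variable factor p e^{(1-q)t} + (1-p) e^{-qt} from the proof."""
    return p * math.exp((1 - q) * t) + (1 - p) * math.exp(-q * t)


def kl_bernoulli(x: float, y: float) -> float:
    """D(x || y) for Bernoulli parameters strictly between 0 and 1."""
    return x * math.log(x / y) + (1 - x) * math.log((1 - x) / (1 - y))


p, eps = 0.3, 0.15
q = p + eps
t_star = math.log((1 - p) * q / ((1 - q) * p))  # closed-form stationary point
print(t_star, math.log(f(t_star, p, q)), -kl_bernoulli(q, p))
```

Since f is a sum of exponentials in t, it is convex, so the stationary point found by setting the derivative to zero is the global minimum.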

Multiplicative form

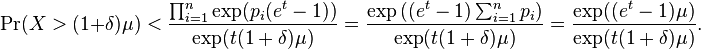

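Set Pr(Xi = 1) = pi. According to (1),

$$\begin{aligned}
\Pr\left(X > (1 + \delta)\mu\right) &\le \inf_{t > 0} \frac{\mathrm{E}\left[\prod_{i=1}^n \exp(tX_i)\right]}{\exp(t(1+\delta)\mu)} \\
&= \inf_{t > 0} \frac{\prod_{i=1}^n \mathrm{E}\left[e^{tX_i}\right]}{\exp(t(1+\delta)\mu)} \\
&= \inf_{t > 0} \frac{\prod_{i=1}^n \left[p_i e^t + (1-p_i)\right]}{\exp(t(1+\delta)\mu)}.
\end{aligned}$$

The third line above follows because $e^{tX_i}$ takes the value $e^t$ with probability pi and the value 1 with probability 1 − pi. This is identical to the calculation above in the proof of the theorem for the additive form (absolute error).

Rewriting $p_i e^t + (1-p_i)$ as $p_i(e^t - 1) + 1$ and recalling that $1 + x \leq e^x$ (with strict inequality if x > 0), we set $x = p_i(e^t - 1)$. The same result can be obtained by directly replacing a in the equation for the Chernoff bound with (1 + δ)μ.[11]

Thus,

$$\Pr\left(X > (1+\delta)\mu\right) < \frac{\prod_{i=1}^n \exp\left(p_i(e^t - 1)\right)}{\exp(t(1+\delta)\mu)} = \frac{\exp\left((e^t - 1)\sum_{i=1}^n p_i\right)}{\exp(t(1+\delta)\mu)} = \frac{\exp\left((e^t - 1)\mu\right)}{\exp(t(1+\delta)\mu)}.$$

If we simply set t = log(1 + δ), so that t > 0 for δ > 0, we can substitute and find

$$\frac{\exp\left((e^t-1)\mu\right)}{\exp(t(1+\delta)\mu)} = \frac{\exp((1+\delta - 1)\mu)}{(1+\delta)^{(1+\delta)\mu}} = \left[\frac{e^\delta}{(1+\delta)^{(1+\delta)}}\right]^\mu.$$

This proves the desired result.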
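The substitution t = log(1 + δ) is in fact the minimiser of the exponent (e^t − 1)μ − t(1 + δ)μ, whose derivative (e^t − (1 + δ))μ vanishes there. A Python sketch (names hypothetical) checks this numerically against a grid search over t and against the closed form.

```python
import math

# Hypothetical helper names; an illustrative sketch only.


def log_bound(t: float, mu: float, delta: float) -> float:
    """Logarithm of exp((e^t - 1) mu) / exp(t (1 + delta) mu)."""
    return (math.exp(t) - 1) * mu - t * (1 + delta) * mu


def closed_form_log(mu: float, delta: float) -> float:
    """Logarithm of [e^delta / (1 + delta)^(1 + delta)]^mu."""
    return mu * (delta - (1 + delta) * math.log1p(delta))


mu, delta = 8.0, 0.5
t_sub = math.log1p(delta)  # t = log(1 + delta)
grid_best = min(log_bound(k / 1000, mu, delta) for k in range(1, 5001))
print(log_bound(t_sub, mu, delta), closed_form_log(mu, delta), grid_best)
```

Working with logarithms of the bound keeps the comparison numerically stable for large μ.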

See also

- Concentration inequality - a summary of tail-bounds on random variables.

References

- [1] Chernoff, Herman (2014). "A career in statistics" (PDF). In Lin, Xihong; Genest, Christian; Banks, David L.; Molenberghs, Geert; Scott, David W.; Wang, Jane-Ling. Past, Present, and Future of Statistics. CRC Press. p. 35. ISBN 9781482204964.

- [2] This method was first applied by Sergei Bernstein to prove the related Bernstein inequalities.

- [3] Mitzenmacher, Michael; Upfal, Eli (2005). Probability and Computing: Randomized Algorithms and Probabilistic Analysis. Cambridge University Press. ISBN 0-521-83540-2.

- [4] Sinclair, Alistair (Fall 2011). "Class notes for the course 'Randomness and Computation'" (PDF). Retrieved 30 October 2014.

- [5] Hoeffding, W. (1963). "Probability Inequalities for Sums of Bounded Random Variables". Journal of the American Statistical Association 58 (301): 13–30. doi:10.2307/2282952. JSTOR 2282952.

- [6] Alippi, C. (2014). "Randomized Algorithms" chapter in Intelligence for Embedded Systems. Springer, 283 pp. ISBN 978-3-319-05278-6.

- [7] Ahlswede, R.; Winter, A. (2003). "Strong Converse for Identification via Quantum Channels". IEEE Transactions on Information Theory 48 (3): 569–579. arXiv:quant-ph/0012127. doi:10.1109/18.985947.

- [8]

- [9] Goldberg, A. V.; Hartline, J. D. (2001). "Competitive Auctions for Multiple Digital Goods". Algorithms – ESA 2001. Lecture Notes in Computer Science 2161. p. 416. doi:10.1007/3-540-44676-1_35. ISBN 978-3-540-42493-2. Lemma 6.1.

- [10] See graphs of the bound as a function of r when k changes, and of the bound as a function of k when r changes.

- [11] Refer to the proof above.

Additional reading

- Chernoff, H. (1952). "A Measure of Asymptotic Efficiency for Tests of a Hypothesis Based on the sum of Observations". Annals of Mathematical Statistics 23 (4): 493–507. doi:10.1214/aoms/1177729330. JSTOR 2236576. MR 57518. Zbl 0048.11804.

- Chernoff, H. (1981). "A Note on an Inequality Involving the Normal Distribution". Annals of Probability 9 (3): 533. doi:10.1214/aop/1176994428. JSTOR 2243541. MR 614640. Zbl 0457.60014.

- Hagerup, T. (1990). "A guided tour of Chernoff bounds". Information Processing Letters 33 (6): 305. doi:10.1016/0020-0190(90)90214-I.

- Nielsen, F. (2011). "Chernoff information of exponential families". arXiv:1102.2684 [cs.IT].
