Chain rule

| Part of a series of articles about | ||||||

| Calculus | ||||||

|---|---|---|---|---|---|---|

|

||||||

|

Specialized |

||||||

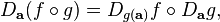

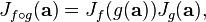

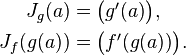

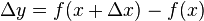

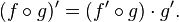

In calculus, the chain rule is a formula for computing the derivative of the composition of two or more functions. That is, if f and g are functions, then the chain rule expresses the derivative of their composition f ∘ g (the function which maps x to f(g(x))) in terms of the derivatives of f and g and the product of functions as follows:

$$(f \circ g)' = (f' \circ g) \cdot g'.$$

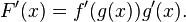

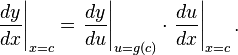

This can be written more explicitly in terms of the variable x. Let F = f ∘ g, or equivalently, F(x) = f(g(x)) for all x. Then one can also write

$$F'(x) = f'(g(x))\, g'(x).$$

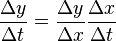

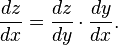

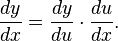

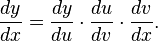

The chain rule may be written in Leibniz's notation in the following way. We consider z to be a function of the variable y, which is itself a function of x (y and z are therefore dependent variables), and so z becomes a function of x as well:

$$\frac{dz}{dx} = \frac{dz}{dy} \cdot \frac{dy}{dx}.$$

In integration, the counterpart to the chain rule is the substitution rule.

History

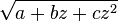

The chain rule seems to have first been used by Leibniz. He used it to calculate the derivative of $\sqrt{a + bz + cz^2}$ as the composite of the square root function and the function $a + bz + cz^2$. He first mentioned it in a 1676 memoir (with a sign error in the calculation). The common notation of the chain rule is due to Leibniz.[1] L'Hôpital uses the chain rule implicitly in his Analyse des infiniment petits. The chain rule does not appear in any of Leonhard Euler's analysis books, even though they were written over a hundred years after Leibniz's discovery.

One dimension

First example

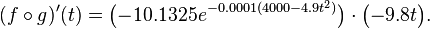

Suppose that a skydiver jumps from an aircraft. Assume that t seconds after his jump, his height above sea level in meters is given by $g(t) = 4000 - 4.9t^2$. One model for the atmospheric pressure at a height h is $f(h) = 101325\, e^{-0.0001h}$. These two equations can be differentiated and combined in various ways to produce the following data:

- g′(t) = −9.8t is the velocity of the skydiver at time t.

- $f'(h) = -10.1325\, e^{-0.0001h}$ is the rate of change in atmospheric pressure with respect to height at the height h and is proportional to the buoyant force on the skydiver at h meters above sea level. (The true buoyant force depends on the volume of the skydiver.)

- (f ∘ g)(t) is the atmospheric pressure the skydiver experiences t seconds after his jump.

- (f ∘ g)′(t) is the rate of change in atmospheric pressure with respect to time at t seconds after the skydiver's jump and is proportional to the buoyant force on the skydiver at t seconds after his jump.

The chain rule gives a method for computing (f ∘ g)′(t) in terms of f′ and g′. While it is always possible to directly apply the definition of the derivative to compute the derivative of a composite function, this is usually very difficult. The utility of the chain rule is that it turns a complicated derivative into several easy derivatives.

The chain rule states that, under appropriate conditions,

$$(f \circ g)'(t) = f'(g(t)) \cdot g'(t).$$

In this example, this equals

$$(f \circ g)'(t) = \left(-10.1325\, e^{-0.0001(4000 - 4.9t^2)}\right) \cdot (-9.8t).$$

In the statement of the chain rule, f and g play slightly different roles because f′ is evaluated at g(t) whereas g′ is evaluated at t. This is necessary to make the units work out correctly. For example, suppose that we want to compute the rate of change in atmospheric pressure ten seconds after the skydiver jumps. This is (f ∘ g)′(10) and has units of pascals per second. The factor g′(10) in the chain rule is the velocity of the skydiver ten seconds after his jump, and it is expressed in meters per second. The factor f′(g(10)) is the change in pressure with respect to height at the height g(10) and is expressed in pascals per meter. The product of f′(g(10)) and g′(10) therefore has the correct units of pascals per second. It is not possible to evaluate f′ anywhere else. For instance, because the 10 in the problem represents ten seconds, the expression f′(10) represents the change in pressure at a height of ten seconds, which is nonsense. Similarly, because g′(10) = −98 meters per second, the expression f′(g′(10)) represents the change in pressure at a height of −98 meters per second, which is also nonsense. However, g(10) = 4000 − 4.9 ⋅ 10² = 3510 meters above sea level, the height of the skydiver ten seconds after his jump. This has the correct units for an input to f.
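The unit bookkeeping above can also be checked numerically. The following is a minimal Python sketch that assumes the two models from this example, $g(t) = 4000 - 4.9t^2$ and $f(h) = 101325\, e^{-0.0001h}$. The function names and the finite-difference step size are illustrative choices and are not part of the original example. The sketch evaluates f′(g(10))·g′(10) and compares it with a central-difference estimate of (f ∘ g)′(10).

```python
import math


def g(t):
    """Height of the skydiver above sea level (m), t seconds after the jump."""
    return 4000 - 4.9 * t**2


def g_prime(t):
    """Velocity of the skydiver (m/s)."""
    return -9.8 * t


def f(h):
    """Model atmospheric pressure (Pa) at height h (m)."""
    return 101325 * math.exp(-0.0001 * h)


def f_prime(h):
    """Rate of change of pressure with height (Pa/m)."""
    return -10.1325 * math.exp(-0.0001 * h)


def pressure_rate(t):
    # f' is evaluated at the height g(t), g' at the time t:
    # (Pa/m) * (m/s) = Pa/s
    return f_prime(g(t)) * g_prime(t)


def central_difference(func, x, step=1e-5):
    """Numerical derivative, used only as an independent check."""
    return (func(x + step) - func(x - step)) / (2 * step)


t = 10.0
print("height g(10):", g(t))
print("chain rule (f o g)'(10):", pressure_rate(t))
print("finite difference:", central_difference(lambda s: f(g(s)), t))
```

The result is positive. As the skydiver falls, the pressure around him rises, at roughly 700 pascals per second in this model.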

Statement

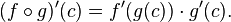

The simplest form of the chain rule is for real-valued functions of one real variable. It says that if g is a function that is differentiable at a point c (i.e. the derivative g′(c) exists) and f is a function that is differentiable at g(c), then the composite function f ∘ g is differentiable at c, and the derivative is[2]

$$(f \circ g)'(c) = f'(g(c)) \cdot g'(c).$$

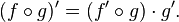

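The rule is sometimes abbreviated as

$$(f \circ g)' = (f' \circ g) \cdot g'.$$

If y = f(u) and u = g(x), then this abbreviated form is written in Leibniz notation as:

$$\frac{dy}{dx} = \frac{dy}{du} \cdot \frac{du}{dx}.$$

The points where the derivatives are evaluated may also be stated explicitly:

$$\left.\frac{dy}{dx}\right|_{x=c} = \left.\frac{dy}{du}\right|_{u=g(c)} \cdot \left.\frac{du}{dx}\right|_{x=c}.$$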

Further examples

Absence of formulas

It may be possible to apply the chain rule even when there are no formulas for the functions which are being differentiated. This can happen when the derivatives are measured directly. Suppose that a car is driving up a tall mountain. The car's speedometer measures its speed directly. If the grade is known, then the rate of ascent can be calculated using trigonometry. Suppose that the car is ascending at 2.5 km/h. Standard models for the Earth's atmosphere imply that the temperature drops about 6.5 °C per kilometer ascended (called the lapse rate). To find the temperature drop per hour, we apply the chain rule. Let the function g(t) be the altitude of the car at time t, and let the function f(h) be the temperature h kilometers above sea level. The functions f and g are not known exactly. For example, neither the altitude where the car starts nor the temperature on the mountain is known. However, their derivatives are known: f′ is −6.5 °C/km, and g′ is 2.5 km/h. The chain rule says that the derivative of the composite function is the product of the derivative of f and the derivative of g. This is −6.5 °C/km ⋅ 2.5 km/h = −16.25 °C/h.

This computation is possible partly because f′ is a constant function, which reflects how simple the model above is. A more accurate description of how the temperature near the car varies over time would require an accurate model of how the temperature varies at different altitudes. This model may not have a constant derivative. To compute the temperature change in such a model, it would be necessary to know g and not just g′, because without knowing g it is not possible to know where to evaluate f′.

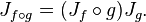

Composites of more than two functions

The chain rule can be applied to composites of more than two functions. To take the derivative of a composite of more than two functions, notice that the composite of f, g, and h (in that order) is the composite of f with g ∘ h. The chain rule says that to compute the derivative of f ∘ g ∘ h, it is sufficient to compute the derivative of f and the derivative of g ∘ h. The derivative of f can be calculated directly, and the derivative of g ∘ h can be calculated by applying the chain rule again.

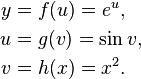

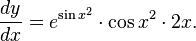

For concreteness, consider the function

$$y = e^{\sin(x^2)}.$$

This can be decomposed as the composite of three functions:

$$y = f(u) = e^u, \qquad u = g(v) = \sin v, \qquad v = h(x) = x^2.$$

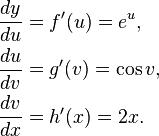

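Their derivatives are:

$$\frac{dy}{du} = f'(u) = e^u, \qquad \frac{du}{dv} = g'(v) = \cos v, \qquad \frac{dv}{dx} = h'(x) = 2x.$$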

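The chain rule says that the derivative of their composite at the point x = a is:

$$(f \circ g \circ h)'(a) = f'((g \circ h)(a)) \cdot (g \circ h)'(a) = f'((g \circ h)(a)) \cdot g'(h(a)) \cdot h'(a).$$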

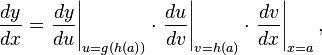

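In Leibniz notation, this is:

$$\frac{dy}{dx} = \left.\frac{dy}{du}\right|_{u=g(h(a))} \cdot \left.\frac{du}{dv}\right|_{v=h(a)} \cdot \left.\frac{dv}{dx}\right|_{x=a},$$

or for short,

$$\frac{dy}{dx} = \frac{dy}{du} \cdot \frac{du}{dv} \cdot \frac{dv}{dx}.$$

The derivative function is therefore:

$$\frac{dy}{dx} = e^{\sin(x^2)} \cdot \cos(x^2) \cdot 2x.$$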

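Another way of computing this derivative is to view the composite function f ∘ g ∘ h as the composite of f ∘ g and h. Applying the chain rule to this situation gives:

$$(f \circ g \circ h)'(a) = (f \circ g)'(h(a)) \cdot h'(a) = f'(g(h(a))) \cdot g'(h(a)) \cdot h'(a).$$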

This is the same as what was computed above. This should be expected because (f ∘ g) ∘ h = f ∘ (g ∘ h).
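The following short Python sketch checks the two groupings numerically for $y = e^{\sin(x^2)}$, using the decomposition f(u) = e^u, g(v) = sin v, h(x) = x². The helper names are hypothetical. The point is only that grouping the composite as f ∘ (g ∘ h) or as (f ∘ g) ∘ h gives the same product f′(g(h(a)))·g′(h(a))·h′(a), and that this product matches the closed-form derivative.

```python
import math


# Decomposition of y = e^{sin(x^2)}: f(u) = e^u, g(v) = sin v, h(x) = x^2
def f(u):
    return math.exp(u)


def f_prime(u):
    return math.exp(u)


def g(v):
    return math.sin(v)


def g_prime(v):
    return math.cos(v)


def h(x):
    return x * x


def h_prime(x):
    return 2 * x


def via_f_of_gh(a):
    """Group as f ∘ (g ∘ h): f'((g∘h)(a)) * (g∘h)'(a)."""
    gh_prime = g_prime(h(a)) * h_prime(a)  # chain rule for g ∘ h
    return f_prime(g(h(a))) * gh_prime


def via_fg_of_h(a):
    """Group as (f ∘ g) ∘ h: (f∘g)'(h(a)) * h'(a)."""
    fg_prime = f_prime(g(h(a))) * g_prime(h(a))  # chain rule for f ∘ g, at h(a)
    return fg_prime * h_prime(a)


def closed_form(x):
    """dy/dx = e^{sin(x^2)} * cos(x^2) * 2x."""
    return math.exp(math.sin(x**2)) * math.cos(x**2) * 2 * x


for a in (0.3, 1.0, 1.7):
    print(a, via_f_of_gh(a), via_fg_of_h(a), closed_form(a))
```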

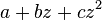

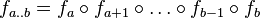

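Sometimes it is necessary to differentiate an arbitrarily long composition of the form $f_1 \circ f_2 \circ \cdots \circ f_{n-1} \circ f_n$. In this case, define

$$f_{a..b} = f_a \circ f_{a+1} \circ \cdots \circ f_{b-1} \circ f_b,$$

where $f_{a..a} = f_a$ and $f_{a..b}(x) = x$ when $b < a$. Then the chain rule takes the form

$$Df_{1..n} = (Df_1 \circ f_{2..n})(Df_2 \circ f_{3..n}) \cdots (Df_{n-1} \circ f_{n..n})\, Df_n = \prod_{k=1}^{n} \left[Df_k \circ f_{(k+1)..n}\right]$$

or, in the Lagrange notation,

$$f_{1..n}'(x) = f_1'(f_{2..n}(x))\, f_2'(f_{3..n}(x)) \cdots f_{n-1}'(f_{n..n}(x))\, f_n'(x) = \prod_{k=1}^{n} f_k'(f_{(k+1)..n}(x)).$$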
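The chain rule for an n-fold composition, a product of each $f_k'$ evaluated at $f_{(k+1)..n}(x)$, suggests a simple loop. Start from x, walk from the innermost function $f_n$ outward, and multiply in each derivative evaluated at the value reached so far. The sketch below assumes that each $f_k$ is supplied together with its derivative as a pair of Python callables. `chain_derivative` is a hypothetical name. This accumulation is essentially what forward-mode automatic differentiation does.

```python
import math
from typing import Callable, List, Tuple

Fn = Callable[[float], float]


def chain_derivative(pairs: List[Tuple[Fn, Fn]], x: float) -> float:
    """Derivative at x of f_1 ∘ f_2 ∘ ... ∘ f_n.

    pairs[k - 1] = (f_k, f_k'), so pairs[0] is the outermost function.
    """
    value = x         # f_{(n+1)..n}(x) = x by convention
    derivative = 1.0  # empty product
    for func, func_prime in reversed(pairs):
        derivative *= func_prime(value)  # factor f_k'(f_{(k+1)..n}(x))
        value = func(value)              # advance to f_{k..n}(x)
    return derivative


def square(x):
    return x * x


def square_prime(x):
    return 2 * x


# Example: y = e^{sin(x^2)} as f_1 ∘ f_2 ∘ f_3 with f_1 = exp, f_2 = sin, f_3 = square
example = [(math.exp, math.exp), (math.sin, math.cos), (square, square_prime)]
print(chain_derivative(example, 0.8))
```

An empty list represents the identity function, whose derivative is the empty product 1.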

Quotient rule

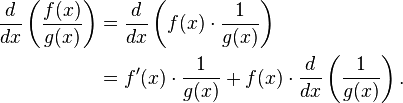

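The chain rule can be used to derive some well-known differentiation rules. For example, the quotient rule is a consequence of the chain rule and the product rule. To see this, write the function f(x)/g(x) as the product f(x) · 1/g(x). First apply the product rule:

$$\begin{aligned}
\frac{d}{dx}\left(\frac{f(x)}{g(x)}\right) &= \frac{d}{dx}\left(f(x) \cdot \frac{1}{g(x)}\right) \\
&= f'(x) \cdot \frac{1}{g(x)} + f(x) \cdot \frac{d}{dx}\left(\frac{1}{g(x)}\right).
\end{aligned}$$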

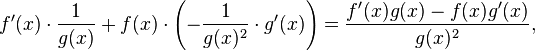

To compute the derivative of 1/g(x), notice that it is the composite of g with the reciprocal function, that is, the function that sends x to 1/x. The derivative of the reciprocal function is $-1/x^2$. By applying the chain rule, the last expression becomes:

$$f'(x) \cdot \frac{1}{g(x)} + f(x) \cdot \left(-\frac{1}{g(x)^2} \cdot g'(x)\right) = \frac{f'(x)\, g(x) - f(x)\, g'(x)}{g(x)^2},$$

which is the usual formula for the quotient rule.
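The minimal Python sketch below compares the two routes to the quotient rule numerically. One function follows the derivation (the product rule plus the chain rule applied to the reciprocal function), and the other uses the final quotient-rule formula. The example functions f = sin and g(x) = x² + 1 are arbitrary choices made here so that g never vanishes. All helper names are hypothetical.

```python
import math


def reciprocal_prime(y):
    """Derivative of the reciprocal function y -> 1/y."""
    return -1.0 / y**2


def quotient_derivative_via_chain(f, f_prime, g, g_prime, x):
    # Product rule on f * (1/g); by the chain rule, (1/g)'(x) = reciprocal'(g(x)) * g'(x)
    return f_prime(x) / g(x) + f(x) * (reciprocal_prime(g(x)) * g_prime(x))


def quotient_rule(f, f_prime, g, g_prime, x):
    """The usual quotient-rule formula (f'g - fg') / g^2."""
    return (f_prime(x) * g(x) - f(x) * g_prime(x)) / g(x) ** 2


# Arbitrary example: f = sin, g(x) = x^2 + 1 (never zero)
def denom(x):
    return x**2 + 1


def denom_prime(x):
    return 2 * x


for x in (-1.5, 0.0, 0.7, 2.0):
    print(x,
          quotient_derivative_via_chain(math.sin, math.cos, denom, denom_prime, x),
          quotient_rule(math.sin, math.cos, denom, denom_prime, x))
```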

Derivatives of inverse functions

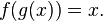

Suppose that y = g(x) has an inverse function. Call its inverse function f so that we have x = f(y). There is a formula for the derivative of f in terms of the derivative of g. To see this, note that f and g satisfy the formula

$$f(g(x)) = x.$$

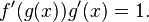

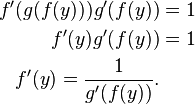

Because the functions f(g(x)) and x are equal, their derivatives must be equal. The derivative of x is the constant function with value 1, and the derivative of f(g(x)) is determined by the chain rule. Therefore we have:

$$f'(g(x))\, g'(x) = 1.$$

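To express f′ as a function of an independent variable y, we substitute f(y) for x wherever it appears. Since g(f(y)) = y, we can then solve for f′:

$$\begin{aligned}
f'(g(f(y)))\, g'(f(y)) &= 1 \\
f'(y)\, g'(f(y)) &= 1 \\
f'(y) &= \frac{1}{g'(f(y))}.
\end{aligned}$$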

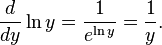

For example, consider the function $g(x) = e^x$. It has an inverse $f(y) = \ln y$. Because $g'(x) = e^x$, the above formula says that

$$\frac{d}{dy} \ln y = \frac{1}{e^{\ln y}} = \frac{1}{y}.$$

This formula is true whenever g is differentiable and its inverse f is also differentiable. This formula can fail when one of these conditions is not true. For example, consider $g(x) = x^3$. Its inverse is $f(y) = y^{1/3}$, which is not differentiable at zero. If we attempt to use the above formula to compute the derivative of f at zero, then we must evaluate 1/g′(f(0)). Since f(0) = 0 and g′(0) = 0, we must evaluate 1/0, which is undefined. Therefore the formula fails in this case. This is not surprising because f is not differentiable at zero.
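The inverse-function formula f′(y) = 1/g′(f(y)) fits in a few lines of Python. This is an illustration that assumes g′ and the inverse f are available as callables. `inverse_derivative`, `cube_root` and `cube_prime` are hypothetical helpers. The sketch reproduces d(ln y)/dy = 1/y and refuses to divide by zero in the x³ example, where the formula fails at y = 0.

```python
import math


def inverse_derivative(g_prime, f, y):
    """Derivative of f = g^{-1} at y via f'(y) = 1 / g'(f(y))."""
    slope = g_prime(f(y))
    if slope == 0:
        # The formula breaks down here: f is not differentiable at y.
        raise ZeroDivisionError("g'(f(y)) is zero; the formula does not apply")
    return 1.0 / slope


def cube_root(y):
    """Real cube root, the inverse of g(x) = x^3 (also handles negative y)."""
    return math.copysign(abs(y) ** (1 / 3), y)


def cube_prime(x):
    return 3 * x * x


# g = exp, f = ln: the derivative of ln at 2 should be 1/2
print(inverse_derivative(math.exp, math.log, 2.0))
# g(x) = x^3, f(y) = y^(1/3): the derivative at 8 should be 1/12
print(inverse_derivative(cube_prime, cube_root, 8.0))
```

Calling `inverse_derivative(cube_prime, cube_root, 0.0)` raises `ZeroDivisionError`, mirroring the failure of the formula at zero.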

Higher derivatives

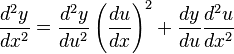

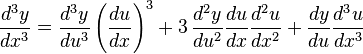

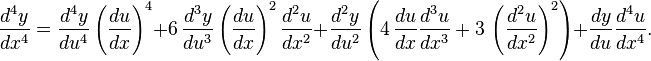

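Faà di Bruno's formula generalizes the chain rule to higher derivatives. Assuming that y = f(u) and u = g(x), the first few derivatives are:

$$\begin{aligned}
\frac{dy}{dx} &= \frac{dy}{du} \frac{du}{dx} \\
\frac{d^2 y}{dx^2} &= \frac{d^2 y}{du^2} \left(\frac{du}{dx}\right)^2 + \frac{dy}{du} \frac{d^2 u}{dx^2} \\
\frac{d^3 y}{dx^3} &= \frac{d^3 y}{du^3} \left(\frac{du}{dx}\right)^3 + 3 \frac{d^2 y}{du^2} \frac{du}{dx} \frac{d^2 u}{dx^2} + \frac{dy}{du} \frac{d^3 u}{dx^3} \\
\frac{d^4 y}{dx^4} &= \frac{d^4 y}{du^4} \left(\frac{du}{dx}\right)^4 + 6 \frac{d^3 y}{du^3} \left(\frac{du}{dx}\right)^2 \frac{d^2 u}{dx^2} + \frac{d^2 y}{du^2} \left(4 \frac{du}{dx} \frac{d^3 u}{dx^3} + 3 \left(\frac{d^2 u}{dx^2}\right)^2\right) + \frac{dy}{du} \frac{d^4 u}{dx^4}.
\end{aligned}$$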
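The minimal Python sketch below shows the second-order formula at work. It takes y = e^u with u = sin x, an example chosen here rather than taken from the text. It then compares $\frac{d^2y}{du^2}\left(\frac{du}{dx}\right)^2 + \frac{dy}{du}\frac{d^2u}{dx^2}$ with a central second-difference estimate. Dropping the second term, that is, naively squaring the first-order chain rule, would generally not match.

```python
import math

# Example choice (not from the text): y = f(u) = e^u and u = g(x) = sin x.


def second_derivative_faa_di_bruno(x):
    """d^2y/dx^2 = y''(u) (u')^2 + y'(u) u''."""
    u = math.sin(x)
    du = math.cos(x)      # du/dx
    d2u = -math.sin(x)    # d^2u/dx^2
    dy = math.exp(u)      # dy/du
    d2y = math.exp(u)     # d^2y/du^2
    return d2y * du**2 + dy * d2u


def second_difference(func, x, step=1e-4):
    """Central second difference, used only as an independent check."""
    return (func(x + step) - 2 * func(x) + func(x - step)) / step**2


x = 0.7
print(second_derivative_faa_di_bruno(x))
print(second_difference(lambda t: math.exp(math.sin(t)), x))
```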

Proofs

First proof

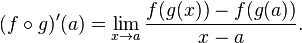

One proof of the chain rule begins with the definition of the derivative:

$$(f \circ g)'(a) = \lim_{x \to a} \frac{f(g(x)) - f(g(a))}{x - a}.$$

Assume for the moment that g(x) does not equal g(a) for any x near a. Then the previous expression is equal to the product of two factors:

$$\lim_{x \to a} \frac{f(g(x)) - f(g(a))}{g(x) - g(a)} \cdot \frac{g(x) - g(a)}{x - a}.$$

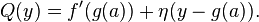

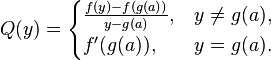

When g oscillates near a, it might happen that no matter how close one gets to a, there is always an even closer x such that g(x) equals g(a). For example, this happens for $g(x) = x^2 \sin(1/x)$ near the point a = 0. Whenever this happens, the above expression is undefined because it involves division by zero. To work around this, introduce a function Q as follows:

$$Q(y) = \begin{cases} \dfrac{f(y) - f(g(a))}{y - g(a)}, & y \neq g(a), \\[1ex] f'(g(a)), & y = g(a). \end{cases}$$

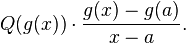

We will show that the difference quotient for f ∘ g is always equal to:

$$Q(g(x)) \cdot \frac{g(x) - g(a)}{x - a}.$$

Whenever g(x) is not equal to g(a), this is clear because the factors of g(x) − g(a) cancel. When g(x) equals g(a), then the difference quotient for f ∘ g is zero because f(g(x)) equals f(g(a)), and the above product is zero because it equals f′(g(a)) times zero. So the above product is always equal to the difference quotient, and to show that the derivative of f ∘ g at a exists and to determine its value, we need only show that the limit as x goes to a of the above product exists and determine its value.

To do this, recall that the limit of a product exists if the limits of its factors exist. When this happens, the limit of the product of these two factors will equal the product of the limits of the factors. The two factors are Q(g(x)) and (g(x) − g(a)) / (x − a). The latter is the difference quotient for g at a, and because g is differentiable at a by assumption, its limit as x tends to a exists and equals g′(a).

It remains to study Q(g(x)). Q is defined wherever f is. Furthermore, because f is differentiable at g(a) by assumption, Q is continuous at g(a). g is continuous at a because it is differentiable at a, and therefore Q ∘ g is continuous at a. So its limit as x goes to a exists and equals Q(g(a)), which is f′(g(a)).

This shows that the limits of both factors exist and that they equal f′(g(a)) and g′(a), respectively. Therefore the derivative of f ∘ g at a exists and equals f′(g(a))g′(a).

Second proof

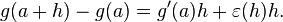

Another way of proving the chain rule is to measure the error in the linear approximation determined by the derivative. This proof has the advantage that it generalizes to several variables. It relies on the following equivalent definition of differentiability at a point: a function g is differentiable at a if there exists a real number g′(a) and a function ε(h) that tends to zero as h tends to zero, and furthermore

$$g(a + h) - g(a) = g'(a)\, h + \varepsilon(h)\, h.$$

Here the left-hand side represents the true difference between the value of g at a and at a + h, whereas the right-hand side represents the approximation determined by the derivative plus an error term.

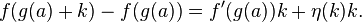

In the situation of the chain rule, such a function ε exists because g is assumed to be differentiable at a. Again by assumption, a similar function also exists for f at g(a). Calling this function η, we have

$$f(g(a) + k) - f(g(a)) = f'(g(a))\, k + \eta(k)\, k.$$

The above definition imposes no constraints on η(0), even though it is assumed that η(k) tends to zero as k tends to zero. If we set η(0) = 0, then η is continuous at 0.

Proving the theorem requires studying the difference f(g(a + h)) − f(g(a)) as h tends to zero. The first step is to substitute for g(a + h) using the definition of differentiability of g at a:

$$f(g(a + h)) - f(g(a)) = f(g(a) + g'(a)\, h + \varepsilon(h)\, h) - f(g(a)).$$

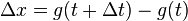

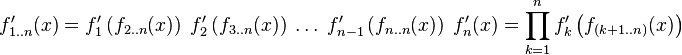

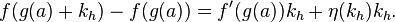

The next step is to use the definition of differentiability of f at g(a). This requires a term of the form f(g(a) + k) for some k. In the above equation, the correct k varies with h. Set $k_h = g'(a)\, h + \varepsilon(h)\, h$ and the right-hand side becomes $f(g(a) + k_h) - f(g(a))$. Applying the definition of the derivative gives:

$$f(g(a) + k_h) - f(g(a)) = f'(g(a))\, k_h + \eta(k_h)\, k_h.$$

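To study the behavior of this expression as h tends to zero, expand $k_h$. After regrouping the terms, the right-hand side becomes:

$$f'(g(a))\, g'(a)\, h + \left[f'(g(a))\, \varepsilon(h) + \eta(k_h)\, g'(a) + \eta(k_h)\, \varepsilon(h)\right] h.$$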

Because ε(h) and η(k_h) tend to zero as h tends to zero (k_h tends to zero, and η is continuous at 0), the first two bracketed terms tend to zero as h tends to zero. Applying the same theorem on products of limits as in the first proof, the third bracketed term also tends to zero. Because the above expression is equal to the difference f(g(a + h)) − f(g(a)), by the definition of the derivative f ∘ g is differentiable at a and its derivative is f′(g(a)) g′(a).

The role of Q in the first proof is played by η in this proof. They are related by the equation:

$$Q(y) = f'(g(a)) + \eta(y - g(a)).$$

The need to define Q at g(a) is analogous to the need to define η at zero.
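The bookkeeping in the second proof can be mimicked numerically. In the Python sketch below, g = sin, f = exp and a = 0.3 are illustrative choices. `epsilon`, `eta` and `bracket` are hypothetical helpers that compute ε(h), η(k) (with η(0) = 0) and the bracketed coefficient of h in the regrouped expansion f′(g(a))g′(a)h + [f′(g(a))ε(h) + η(k_h)g′(a) + η(k_h)ε(h)]h. The bracket shrinks toward zero along with h, which is exactly what the proof needs.

```python
import math

# Illustrative choices (not from the text): g = sin, f = exp, a = 0.3.
a = 0.3
g, g_prime = math.sin, math.cos
f, f_prime = math.exp, math.exp


def epsilon(h):
    """Error term for g at a: g(a + h) - g(a) = g'(a) h + epsilon(h) h."""
    return (g(a + h) - g(a)) / h - g_prime(a)


def eta(k):
    """Error term for f at g(a), with eta(0) set to 0."""
    if k == 0:
        return 0.0
    return (f(g(a) + k) - f(g(a))) / k - f_prime(g(a))


def bracket(h):
    """Bracketed coefficient of h in the regrouped expansion."""
    k_h = g_prime(a) * h + epsilon(h) * h
    return (f_prime(g(a)) * epsilon(h)
            + eta(k_h) * g_prime(a)
            + eta(k_h) * epsilon(h))


for h in (1e-1, 1e-2, 1e-3, 1e-4):
    # Up to rounding, the difference quotient of f ∘ g equals f'(g(a)) g'(a) + bracket(h).
    print(h, bracket(h))
```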

Third proof

Carathéodory's alternative definition of the differentiability of a function can be used to give an elegant proof of the chain rule.[3]

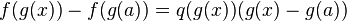

Under this definition, a function f is differentiable at a point a if and only if there is a function q, continuous at a and such that f(x) − f(a) = q(x)(x − a). There is at most one such function, and if f is differentiable at a then f′(a) = q(a).

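Given the assumptions of the chain rule and the fact that differentiable functions and compositions of continuous functions are continuous, there exist functions q, continuous at g(a), and r, continuous at a, such that

$$f(g(x)) - f(g(a)) = q(g(x))\,(g(x) - g(a))$$

and

$$g(x) - g(a) = r(x)\,(x - a).$$

Therefore,

$$f(g(x)) - f(g(a)) = q(g(x))\, r(x)\,(x - a),$$

but the function given by h(x) = q(g(x))r(x) is continuous at a, and we get, for this a,

$$(f \circ g)'(a) = h(a) = q(g(a))\, r(a) = f'(g(a))\, g'(a).$$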

A similar approach works for continuously differentiable (vector-)functions of many variables. This factoring idea also gives a unified treatment of stronger forms of differentiability, when the derivative is required to be Lipschitz, Hölder, etc. Differentiation itself can be viewed as the polynomial remainder theorem (the little Bézout theorem, or factor theorem), generalized to an appropriate class of functions.

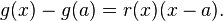

Proof via infinitesimals

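If $y = f(x)$ and $x = g(t)$, then choosing an infinitesimal $\Delta t \neq 0$ we compute the corresponding $\Delta x = g(t + \Delta t) - g(t)$ and then the corresponding $\Delta y = f(x + \Delta x) - f(x)$, so that

$$\frac{\Delta y}{\Delta t} = \frac{\Delta y}{\Delta x} \frac{\Delta x}{\Delta t},$$

and applying the standard part we obtain

$$\frac{dy}{dt} = \frac{dy}{dx} \frac{dx}{dt},$$

which is the chain rule.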
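The infinitesimal argument has a computational cousin in forward-mode automatic differentiation. The sketch below swaps in dual numbers a + bε with ε² = 0. This is a different, purely algebraic construction from the hyperreal infinitesimals used above, so it illustrates the idea rather than reproducing the proof. The class `Dual` and the helpers `d_sin`, `d_exp` and `derivative` are hypothetical names. Each elementary function multiplies the ε-part by its own derivative, so a composite picks up the chain-rule product automatically.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """a + b·ε with ε² = 0: an algebraic stand-in for an infinitesimal (not a hyperreal)."""
    real: float
    eps: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(float(other))
        return Dual(self.real + other.real, self.eps + other.eps)

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(float(other))
        # (a + bε)(c + dε) = ac + (ad + bc)ε because ε² = 0
        return Dual(self.real * other.real,
                    self.real * other.eps + self.eps * other.real)

    __radd__ = __add__
    __rmul__ = __mul__


def d_sin(x):
    # sin(a + bε) = sin a + b·cos(a)·ε
    return Dual(math.sin(x.real), x.eps * math.cos(x.real))


def d_exp(x):
    # exp(a + bε) = exp a + b·exp(a)·ε
    return Dual(math.exp(x.real), x.eps * math.exp(x.real))


def derivative(func, t):
    """Seed the ε-coefficient with 1 at t; the ε-part of func(t + ε) is func'(t)."""
    return func(Dual(t, 1.0)).eps


def y(t):
    # y = e^{sin(t^2)}, built from the elementary pieces above
    return d_exp(d_sin(t * t))


print(derivative(y, 0.8))
```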

Higher dimensions

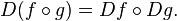

The simplest generalization of the chain rule to higher dimensions uses the total derivative. The total derivative is a linear transformation that captures how the function changes in all directions. Fix differentiable functions $f : \mathbf{R}^m \to \mathbf{R}^k$ and $g : \mathbf{R}^n \to \mathbf{R}^m$ and a point a in $\mathbf{R}^n$. Let $D_a g$ denote the total derivative of g at a and $D_{g(a)} f$ denote the total derivative of f at g(a). These two derivatives are linear transformations $\mathbf{R}^n \to \mathbf{R}^m$ and $\mathbf{R}^m \to \mathbf{R}^k$, respectively, so they can be composed. The chain rule for total derivatives says that their composite is the total derivative of f ∘ g at a:

$$D_a(f \circ g) = D_{g(a)} f \circ D_a g,$$

or for short,

$$D(f \circ g) = Df \circ Dg.$$

The higher-dimensional chain rule can be proved using a technique similar to the second proof given above.

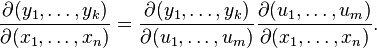

Because the total derivative is a linear transformation, the functions appearing in the formula can be rewritten as matrices. The matrix corresponding to a total derivative is called a Jacobian matrix, and the composite of two derivatives corresponds to the product of their Jacobian matrices. From this perspective the chain rule therefore says:

$$J_{f \circ g}(a) = J_f(g(a))\, J_g(a),$$

or for short,

$$J_{f \circ g} = (J_f \circ g)\, J_g.$$

That is, the Jacobian of a composite function is the product of the Jacobians of the composed functions (evaluated at the appropriate points).
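The following is a minimal Python sketch of the matrix form using plain lists. It assumes two illustrative maps g, f : R² → R² with hand-coded Jacobians (all names here are hypothetical). It checks $J_{f \circ g}(a) = J_f(g(a))\, J_g(a)$ against a finite-difference Jacobian of the composite. Note that $J_f$ is evaluated at g(a), not at a.

```python
import math


def g(x):
    """Illustrative map g: R^2 -> R^2."""
    return [x[0] * x[1], math.sin(x[0])]


def jac_g(x):
    # rows: components of g; columns: partial derivatives w.r.t. x[0], x[1]
    return [[x[1], x[0]],
            [math.cos(x[0]), 0.0]]


def f(u):
    """Illustrative map f: R^2 -> R^2."""
    return [u[0] + u[1] ** 2, math.exp(u[0])]


def jac_f(u):
    return [[1.0, 2 * u[1]],
            [math.exp(u[0]), 0.0]]


def matmul(A, B):
    """Plain-list matrix product A @ B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def jac_composite(a):
    # Chain rule: J_{f∘g}(a) = J_f(g(a)) J_g(a); J_f is evaluated at g(a)
    return matmul(jac_f(g(a)), jac_g(a))


def numeric_jacobian(func, x, step=1e-6):
    """Central-difference Jacobian, used only as an independent check."""
    rows = len(func(x))
    J = [[0.0] * len(x) for _ in range(rows)]
    for j in range(len(x)):
        xp, xm = list(x), list(x)
        xp[j] += step
        xm[j] -= step
        fp, fm = func(xp), func(xm)
        for i in range(rows):
            J[i][j] = (fp[i] - fm[i]) / (2 * step)
    return J


a = [0.5, 1.2]
print(jac_composite(a))
print(numeric_jacobian(lambda x: f(g(x)), a))
```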

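The higher-dimensional chain rule is a generalization of the one-dimensional chain rule. If k, m, and n are 1, so that f : R → R and g : R → R, then the Jacobian matrices of f and g are 1 × 1. Specifically, they are:

$$J_g(a) = \begin{pmatrix} g'(a) \end{pmatrix}, \qquad J_f(g(a)) = \begin{pmatrix} f'(g(a)) \end{pmatrix}.$$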

The Jacobian of f ∘ g is the product of these 1 × 1 matrices, so it is f′(g(a))⋅g′(a), as expected from the one-dimensional chain rule. In the language of linear transformations, $D_a(g)$ is the function which scales a vector by a factor of g′(a) and $D_{g(a)}(f)$ is the function which scales a vector by a factor of f′(g(a)). The chain rule says that the composite of these two linear transformations is the linear transformation $D_a(f \circ g)$, and therefore it is the function that scales a vector by f′(g(a))⋅g′(a).

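Another way of writing the chain rule is used when f and g are expressed in terms of their components as $y = f(u) = (f_1(u), \ldots, f_k(u))$ and $u = g(x) = (g_1(x), \ldots, g_m(x))$. In this case, the above rule for Jacobian matrices is usually written as:

$$\frac{\partial(y_1, \ldots, y_k)}{\partial(x_1, \ldots, x_n)} = \frac{\partial(y_1, \ldots, y_k)}{\partial(u_1, \ldots, u_m)} \frac{\partial(u_1, \ldots, u_m)}{\partial(x_1, \ldots, x_n)}.$$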

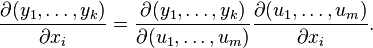

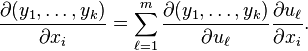

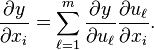

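The chain rule for total derivatives implies a chain rule for partial derivatives. Recall that when the total derivative exists, the partial derivative in the $i$th coordinate direction is found by multiplying the Jacobian matrix by the $i$th basis vector. By doing this to the formula above, we find:

$$\frac{\partial(y_1, \ldots, y_k)}{\partial x_i} = \frac{\partial(y_1, \ldots, y_k)}{\partial(u_1, \ldots, u_m)} \frac{\partial(u_1, \ldots, u_m)}{\partial x_i}.$$

Since the entries of the Jacobian matrix are partial derivatives, we may simplify the above formula to get:

$$\frac{\partial(y_1, \ldots, y_k)}{\partial x_i} = \sum_{\ell=1}^{m} \frac{\partial(y_1, \ldots, y_k)}{\partial u_\ell} \frac{\partial u_\ell}{\partial x_i}.$$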

More conceptually, this rule expresses the fact that a change in the $x_i$ direction may change all of $g_1$ through $g_m$, and any of these changes may affect f.

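In the special case where k = 1, so that f is a real-valued function, this formula simplifies even further:

$$\frac{\partial y}{\partial x_i} = \sum_{\ell=1}^{m} \frac{\partial y}{\partial u_\ell} \frac{\partial u_\ell}{\partial x_i}.$$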

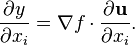

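This can be rewritten as a dot product. Recalling that $u = (g_1, \ldots, g_m)$, the partial derivative $\partial u / \partial x_i$ is also a vector, and the chain rule says that:

$$\frac{\partial y}{\partial x_i} = \nabla y \cdot \frac{\partial u}{\partial x_i}.$$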

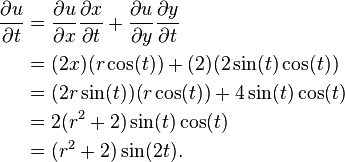

Example

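Given $u(x, y) = x^2 + 2y$ where $x(r, t) = r \sin(t)$ and $y(r, t) = \sin^2(t)$, determine the value of ∂u/∂r and ∂u/∂t using the chain rule.

$$\frac{\partial u}{\partial r} = \frac{\partial u}{\partial x} \frac{\partial x}{\partial r} + \frac{\partial u}{\partial y} \frac{\partial y}{\partial r} = (2x)(\sin(t)) + (2)(0) = 2r \sin^2(t),$$

and

$$\begin{aligned}
\frac{\partial u}{\partial t} &= \frac{\partial u}{\partial x} \frac{\partial x}{\partial t} + \frac{\partial u}{\partial y} \frac{\partial y}{\partial t} \\
&= (2x)(r \cos(t)) + (2)(2 \sin(t) \cos(t)) \\
&= (2r \sin(t))(r \cos(t)) + 4 \sin(t) \cos(t) \\
&= 2(r^2 + 2) \sin(t) \cos(t) \\
&= (r^2 + 2) \sin(2t).
\end{aligned}$$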

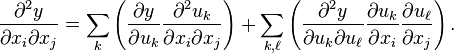
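The example can be cross-checked numerically. This Python sketch (helper names hypothetical) assembles ∂u/∂r and ∂u/∂t from the partial derivatives of u, x and y, exactly as the chain rule prescribes. The results should agree with the closed forms $\partial u/\partial r = 2r\sin^2 t$ and $\partial u/\partial t = (r^2 + 2)\sin(2t)$ and with finite differences of u(r, t).

```python
import math


def u_of_rt(r, t):
    """u(x, y) = x^2 + 2y with x = r sin t and y = sin^2 t."""
    x = r * math.sin(t)
    y = math.sin(t) ** 2
    return x**2 + 2 * y


def partials_by_chain_rule(r, t):
    """Return (du/dr, du/dt) assembled from the chain rule."""
    x = r * math.sin(t)
    du_dx, du_dy = 2 * x, 2.0
    dx_dr, dy_dr = math.sin(t), 0.0
    dx_dt, dy_dt = r * math.cos(t), 2 * math.sin(t) * math.cos(t)
    du_dr = du_dx * dx_dr + du_dy * dy_dr
    du_dt = du_dx * dx_dt + du_dy * dy_dt
    return du_dr, du_dt


r, t = 1.3, 0.4
print(partials_by_chain_rule(r, t))
print(2 * r * math.sin(t) ** 2, (r**2 + 2) * math.sin(2 * t))
```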

Higher derivatives of multivariable functions

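Faà di Bruno's formula for higher-order derivatives of single-variable functions generalizes to the multivariable case. If y = f(u) is a function of u = g(x) as above, then the second derivative of f ∘ g is:

$$\frac{\partial^2 y}{\partial x_i\, \partial x_j} = \sum_{k} \frac{\partial y}{\partial u_k} \frac{\partial^2 u_k}{\partial x_i\, \partial x_j} + \sum_{k, \ell} \frac{\partial^2 y}{\partial u_k\, \partial u_\ell} \frac{\partial u_k}{\partial x_i} \frac{\partial u_\ell}{\partial x_j}.$$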

Further generalizations

All extensions of calculus have a chain rule. In most of these, the formula remains the same, though the meaning of that formula may be vastly different.

One generalization is to manifolds. In this situation, the chain rule represents the fact that the derivative of f ∘ g is the composite of the derivative of f and the derivative of g. This theorem is an immediate consequence of the higher dimensional chain rule given above, and it has exactly the same formula.

The chain rule is also valid for Fréchet derivatives in Banach spaces. The same formula holds as before. This case and the previous one admit a simultaneous generalization to Banach manifolds.

In abstract algebra, the derivative is interpreted as a morphism of modules of Kähler differentials. A ring homomorphism of commutative rings f : R → S determines a morphism of Kähler differentials $Df : \Omega_R \to \Omega_S$ which sends an element dr to d(f(r)), the exterior differential of f(r). The formula D(f ∘ g) = Df ∘ Dg holds in this context as well.

The common feature of these examples is that they are expressions of the idea that the derivative is part of a functor. A functor is an operation on spaces and functions between them. It associates to each space a new space and to each function between two spaces a new function between the corresponding new spaces. In each of the above cases, the functor sends each space to its tangent bundle and it sends each function to its derivative. For example, in the manifold case, the derivative sends a $C^r$-manifold to a $C^{r-1}$-manifold (its tangent bundle) and a $C^r$-function to its total derivative. There is one requirement for this to be a functor, namely that the derivative of a composite must be the composite of the derivatives. This is exactly the formula D(f ∘ g) = Df ∘ Dg.

There are also chain rules in stochastic calculus. One of these, Itō's lemma, expresses the composite of an Itō process (or more generally a semimartingale) $dX_t$ with a twice-differentiable function f. In Itō's lemma, the derivative of the composite function depends not only on $dX_t$ and the derivative of f but also on the second derivative of f. The dependence on the second derivative is a consequence of the non-zero quadratic variation of the stochastic process, which broadly speaking means that the process can move up and down in a very rough way. This variant of the chain rule is not an example of a functor because the two functions being composed are of different types.

See also

- Integration by substitution

- Leibniz integral rule

- Quotient rule

- Triple product rule

- Product rule

- Automatic differentiation, a computational method that makes heavy use of the chain rule to compute exact numerical derivatives.

References

- Hernández Rodríguez, Omar; López Fernández, Jorge M. (2010). "A Semiotic Reflection on the Didactics of the Chain Rule". The Montana Mathematics Enthusiast 7 (2–3): 321–332. ISSN 1551-3440.

- Apostol, Tom (1974). Mathematical Analysis (2nd ed.). Addison-Wesley. Theorem 5.5.

- Kuhn, Stephen (1991). "The Derivative à la Carathéodory". The American Mathematical Monthly 98 (1): 40–44.

External links

- Hazewinkel, Michiel, ed. (2001), "Leibniz rule", Encyclopedia of Mathematics, Springer, ISBN 978-1-55608-010-4

- Weisstein, Eric W., "Chain Rule", MathWorld.

- Khan Academy Lesson 1 Lesson 3

- http://calculusapplets.com/chainrule.html

- The Chain Rule explained

![Df_{1..n} = (Df_1 \circ f_{2..n}) (Df_2 \circ f_{3..n}) \dotso (Df_{n-1} \circ f_{n..n}) Df_n = \prod_{k=1}^{n} \left[Df_k \circ f_{(k+1)..n}\right]](../I/m/61d9793e332a893387c4bdd60b85c415.png)

![f'(g(a)) g'(a)h + [f'(g(a)) \varepsilon(h) + \eta(k_h) g'(a) + \eta(k_h) \varepsilon(h)] h.\,](../I/m/9d3afbcbc4f2a414c653354071794768.png)