APL (programming language)

| Paradigm | array, functional, structured, modular |
|---|---|
| Designed by | Kenneth E. Iverson |
| Developer | Kenneth E. Iverson |
| First appeared | 1964 |
| Typing discipline | dynamic |
| Major implementations | Dyalog APL, IBM APL2, APL2000, Sharp APL, APLX, NARS2000,[1] GNU APL[2] |
| Dialects | A+, Dyalog APL, APLNext, ELI, J |
| Influenced by | mathematical notation |
| Influenced | J,[3] K,[4] Mathematica, MATLAB,[5] Nial,[6] PPL, Q, S |

APL (named after the book A Programming Language)[7] is a programming language developed in the 1960s by Kenneth E. Iverson. Its central datatype is the multidimensional array. It uses a large range of special graphic symbols[8] to represent most operators, leading to very concise code. It has been an important influence on the development of concept modeling, spreadsheets, functional programming,[9] and computer math packages.[5] It has also inspired several other programming languages.[3][4][6] It is still used today for certain applications.[10][11]

History

The mathematical notation for manipulating arrays that grew into the APL programming language was developed by Iverson at Harvard University starting in 1957, and published in his book A Programming Language in 1962.[7] The preface states its premise:

Applied mathematics is largely concerned with the design and analysis of explicit procedures for calculating the exact or approximate values of various functions. Such explicit procedures are called algorithms or programs. Because an effective notation for the description of programs exhibits considerable syntactic structure, it is called a programming language.

In 1960, he began work for IBM and, working with Adin Falkoff, created APL based on the notation he had developed. This notation was used inside IBM for short research reports on computer systems, such as the Burroughs B5000 and its stack mechanism, at a time when IBM was evaluating stack machines versus register machines for upcoming computers.

Also in 1960, Iverson used his notation in a draft of the chapter "A Programming Language", written for a book he was writing with Fred Brooks, Automatic Data Processing, which would be published in 1963.[12][13]

As early as 1962, the notation was first used in an attempt to describe a complete computer system, after Falkoff discussed with Dr. William C. Carter his work on standardizing the instruction set for the machines that later became the IBM System/360 family.

In 1963, Herbert Hellerman, working at the IBM Systems Research Institute, implemented a part of the notation on an IBM 1620 computer, and it was used by students in a special high school course on calculating transcendental functions by series summation. Students tested their code in Hellerman's lab. This implementation of a portion of the notation was called PAT (Personalized Array Translator).[14]

In 1963, Falkoff, Iverson, and Edward H. Sussenguth Jr., all working at IBM, used the notation for a formal description of the IBM System/360 series machine architecture and functionality, which resulted in a paper published in IBM Systems Journal in 1964. After this was published, the team turned their attention to implementing the notation on a computer system. One of the motivations for this focus on implementation was the interest of John L. Lawrence, who had new duties with Science Research Associates, an educational company bought by IBM in 1964. Lawrence asked Iverson and his group to help utilize the language as a tool for the development and use of computers in education.[15]

After Lawrence M. Breed and Philip S. Abrams of Stanford University joined the team at IBM Research, they continued their prior work on an implementation, programmed in FORTRAN IV, of a portion of the notation for the IBM 7090 computer running under the IBSYS operating system. This work was finished in late 1965 and later became known as IVSYS (Iverson System). The basis of this implementation was described in detail by Abrams in a Stanford University Technical Report, "An Interpreter for Iverson Notation", in 1966;[16] the work was formally supervised by Niklaus Wirth. Like Hellerman's PAT system earlier, this implementation did not include the APL character set but used special English reserved words for functions and operators. The system was later adapted for a time-sharing system and, by November 1966, it had been reprogrammed for the IBM System/360 Model 50 computer running in time-sharing mode and was used internally at IBM.[17]

A key development in the ability to use APL effectively, before the widespread use of CRT terminals, was the development of a special IBM Selectric typewriter interchangeable typeball with all the special APL characters on it. This was used on paper printing terminal workstations using the Selectric typewriter and typeball mechanism, such as the IBM 1050 and IBM 2741 terminal. Keycaps could be placed over the normal keys to show which APL characters would be entered and typed when that key was struck. For the first time, a programmer could actually type in and see real APL characters as used in Iverson's notation and not be forced to use awkward English keyword representations of them. Falkoff and Iverson had the special APL Selectric typeballs, 987 and 988, designed in late 1964, although no APL computer system was available to use them.[18] Iverson cited Falkoff as the inspiration for the idea of using an IBM Selectric typeball for the APL character set.[19]

Some APL symbols, even with the APL characters on the typeball, still had to be typed in by over-striking two existing typeball characters. An example would be the "grade up" character, which had to be made from a "delta" (shift-H) and a "Sheffer stroke" (shift-M). This was necessary because the APL character set was larger than the 88 characters allowed on the Selectric typeball.

The first APL interactive login and creation of an APL workspace was in 1966 by Larry Breed using an IBM 1050 terminal at the IBM Mohansic Labs near Thomas J. Watson Research Center, the home of APL, in Yorktown Heights, New York.[18]

IBM was chiefly responsible for the introduction of APL to the marketplace. APL was first available in 1967 for the IBM 1130 as APL\1130.[20][21] It would run in as little as 8k 16-bit words of memory, and used a dedicated 1 megabyte hard disk.

APL gained its foothold on mainframe timesharing systems from the late 1960s through the early 1980s, in part because it would run on lower-specification systems that were not equipped with Dynamic Address Translation hardware.[22] Additional improvements in performance for selected IBM System/370 mainframe systems included the "APL Assist Microcode" in which some support for APL execution was included in the actual firmware as opposed to APL being exclusively a software product. Somewhat later, as suitably performing hardware was finally becoming available in the mid- to late-1980s, many users migrated their applications to the personal computer environment.

Early IBM APL interpreters for IBM 360 and IBM 370 hardware implemented their own multi-user management instead of relying on the host services, thus they were timesharing systems in their own right. First introduced in 1966, the APL\360[23][24][25] system was a multi-user interpreter. The ability to programmatically communicate with the operating system for information and setting interpreter system variables was done through special privileged "I-beam" functions, using both monadic and dyadic operations.[26]

In 1973, IBM released APL.SV, which was a continuation of the same product, but which offered shared variables as a means to access facilities outside of the APL system, such as operating system files. In the mid-1970s, the IBM mainframe interpreter was even adapted for use on the IBM 5100 desktop computer, which had a small CRT and an APL keyboard, when most other small computers of the time only offered BASIC. In the 1980s, the VSAPL program product enjoyed widespread usage with CMS, TSO, VSPC, MUSIC/SP and CICS users.

In 1973-1974, Dr. Patrick E. Hagerty directed the implementation of the University of Maryland APL interpreter for the Sperry Univac 1100 Series mainframe computers.[27] At the time, Sperry had no APL implementation of its own. In 1974, student Alan Stebbens was assigned the task of implementing an internal function.[28]

Several timesharing firms sprang up in the 1960s and 1970s that sold APL services using modified versions of the IBM APL\360[25] interpreter. In North America, the better-known ones were I. P. Sharp Associates, STSC, Time Sharing Resources (TSR) and The Computer Company (TCC). CompuServe also entered the fray in 1978 with an APL interpreter based on a modified version of Digital Equipment Corporation and Carnegie Mellon's APL, which ran on DEC's KI and KL 36-bit machines. CompuServe's APL was available both to its commercial market and to the consumer information service. With the advent first of less expensive mainframes such as the IBM 4300, and later of the personal computer, the timesharing industry had all but disappeared by the mid-1980s.

Sharp APL was available from I. P. Sharp Associates, first on a timesharing basis in the 1960s, and later as a program product starting around 1979. Sharp APL was an advanced APL implementation with many language extensions, such as packages (the ability to put one or more objects into a single variable), file system, nested arrays, and shared variables.

APL interpreters were available from other mainframe and mini-computer manufacturers as well, notably Burroughs, CDC, Data General, DEC, Harris, Hewlett-Packard, Siemens AG, Xerox, and others.

Garth Foster of Syracuse University sponsored regular meetings of the APL implementers' community at Syracuse's Minnowbrook Conference Center in rural upstate New York. In later years, Eugene McDonnell organized similar meetings at the Asilomar Conference Grounds near Monterey, California, and at Pajaro Dunes near Watsonville, California. The SIGAPL special interest group of the Association for Computing Machinery continues to support the APL community.[29]

In 1979, Iverson received the Turing Award for his work on APL.[30]

Films and videos: Over the years, APL has been the subject of a number of films and videos, including:

- "Chasing Men Who Stare at Arrays" Catherine Lathwell's Film Diaries; 2014, film synopsis - "people who accept significantly different ways of thinking, challenge the status quo and as a result, created an invention that subtly changes the world. And no one knows about it. And a Canadian started it all… I want everyone to know about it."[31]

- "The Origins of APL - 1974 - YouTube", YouTube video, 2012, uploaded by Catherine Lathwell; a talk show style interview with the original developers of APL.[32]

- "50 Years of APL", YouTube, 2009, by Graeme Robertson, uploaded by MindofZiggi, history of APL, quick introduction to APL, a powerful programming language currently finding new life due to its ability to create and implement systems, web-based or otherwise.[33]

- "APL demonstration 1975", YouTube, 2013, uploaded by Imperial College London; 1975 live demonstration of the computer language APL (A Programming Language) by Professor Bob Spence, Imperial College London.[34]

APL2

Starting in the early 1980s, IBM APL development, under the leadership of Dr Jim Brown, implemented a new version of the APL language that contained as its primary enhancement the concept of nested arrays, where an array can contain other arrays, as well as new language features which facilitated the integration of nested arrays into program workflow. Ken Iverson, no longer in control of the development of the APL language, left IBM and joined I. P. Sharp Associates, where one of his major contributions was directing the evolution of Sharp APL to be more in accordance with his vision.[35][36][37]

As other vendors were busy developing APL interpreters for new hardware, notably Unix-based microcomputers, APL2 was almost always the standard chosen for new APL interpreter developments. Even today, most APL vendors or their users cite APL2 compatibility as a selling point for those products.[38][39]

APL2 for IBM mainframe computers is still available. IBM cites its use for problem solving, system design, prototyping, engineering and scientific computations, expert systems,[40] teaching mathematics and other subjects, visualization, and database access.[41] It was first available for CMS and TSO in 1984.[42] The APL2 Workstation edition (Windows, OS/2, AIX, Linux, and Solaris) followed much later, in the early 1990s.

Microcomputers

The first microcomputer implementation of APL was on the Intel 8008-based MCM/70, the first general purpose personal computer, in 1973.

IBM's own IBM 5100 microcomputer (1975) offered APL as one of two built-in ROM-based interpreted languages for the computer, complete with a keyboard and display that supported all the special symbols used in the language.

In 1976 DNA Systems introduced an APL interpreter for their TSO Operating System, which ran timesharing on the IBM 1130, Digital Scientific Meta-4, General Automation GA 18/30 and Computer Hardware CHI 21/30.

The VideoBrain Family Computer, released in 1977, only had one programming language available for it, and that was a dialect of APL called APL/S.[43]

A Small APL for the Intel 8080 called EMPL was released in 1977, and Softronics APL, with most of the functions of full APL, for 8080-based CP/M systems was released in 1979.

In 1977, the Canadian firm Telecompute Integrated Systems, Inc. released a business-oriented APL interpreter known as TIS APL, for Z80-based systems. It featured the full set of file functions for APL, plus a full screen input and switching of right and left arguments for most dyadic operators by introducing the ~. prefix to all single character dyadic functions such as - or /.

Vanguard APL was available for Z80 CP/M-based processors in the late 1970s. TCC released APL.68000 in the early 1980s for Motorola 68000-based processors, this system being the basis for MicroAPL Limited's APLX product. I. P. Sharp Associates released a version of their APL interpreter for the IBM PC and PC-XT/370.[44] For the IBM PC, an emulator was written that facilitated reusing much of the IBM 370 mainframe code. Arguably, the best known APL interpreter for the IBM Personal Computer was STSC's APL*Plus/PC.

The Commodore SuperPET, introduced in 1981, included an APL interpreter developed by the University of Waterloo.

In the early 1980s, the Analogic Corporation developed The APL Machine, an array processing computer designed to be programmed only in APL. There were actually three processing units: the user's workstation (an IBM PC), where programs were entered and edited; a Motorola 68000 processor that ran the APL interpreter; and the Analogic array processor that executed the primitives.[45] At the time of its introduction, The APL Machine was likely the fastest APL system available. Although a technological success, The APL Machine was a marketing failure. The initial version supported a single process at a time; by the time the project was discontinued, the design had been completed to allow multiple users. An unusual aspect of The APL Machine was that the library of workspaces was organized so that a single function or variable shared by many workspaces existed only once in the library. Several members of The APL Machine project had previously spent a number of years with Burroughs implementing APL\700.

At one stage, according to Bill Gates in his Open Letter to Hobbyists, Microsoft Corporation planned to release a version of APL, but these plans never materialized.

An early 1978 publication by Rodnay Zaks from Sybex was A microprogrammed APL implementation (ISBN 0-89588-005-9), the complete source listing of the microcode for a Digital Scientific Corporation Meta 4 microprogrammable processor implementing APL. This topic was also the subject of his PhD thesis.[46][47]

In 1979, William Yerazunis wrote a partial version of APL in Prime Computer FORTRAN, extended it with graphics primitives, and released it. This was also the subject of his master's thesis.[48]

Extensions

Various implementations of APL by APLX, Dyalog, et al., include extensions for object-oriented programming, support for .NET, XML-array conversion primitives, graphing, operating system interfaces, and lambda expressions.

Design

Unlike traditionally structured programming languages, APL code is typically structured as chains of monadic or dyadic functions, and operators[49] acting on arrays.[50] APL has many nonstandard primitives (functions and operators) that are indicated by a single symbol or a combination of a few symbols. All primitives are defined to have the same precedence, and always associate to the right; hence APL is read or best understood from right-to-left.
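The right-to-left rule can be illustrated with a small Python sketch. It is not how any particular interpreter works: it assumes a hypothetical, flat token list of scalars and dyadic arithmetic symbols (no parentheses, no monadic functions) and evaluates it the APL way, with every function at the same precedence and taking everything to its right as its right argument.

```python
# Minimal sketch: evaluate a flat APL-like expression of scalars and
# dyadic functions strictly right to left, with no precedence levels.
# The token format (numbers alternating with symbol strings) is a
# hypothetical simplification for illustration only.

DYADIC = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "×": lambda a, b: a * b,
    "÷": lambda a, b: a / b,
}


def eval_right_to_left(tokens):
    """Evaluate [x0, f0, x1, f1, ..., xn] as x0 f0 (x1 f1 (... xn))."""
    if len(tokens) % 2 == 0:
        raise ValueError("expected alternating operands and functions")
    result = tokens[-1]  # start from the rightmost operand
    # Walk leftwards, pairing each function with the operand to its left.
    for i in range(len(tokens) - 2, 0, -2):
        func, left = tokens[i], tokens[i - 1]
        result = DYADIC[func](left, result)
    return result


# 3×4+5 means 3×(4+5) in APL, not (3×4)+5.
print(eval_right_to_left([3, "×", 4, "+", 5]))   # 27
# 10-3-2 means 10-(3-2) in APL.
print(eval_right_to_left([10, "-", 3, "-", 2]))  # 9
```

A conventional calculator would give 17 and 5 for these two expressions; the difference comes entirely from the uniform right-to-left grouping.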

Early APL implementations (circa 1970) did not have loop control-flow structures, such as "do" or "while" loops, or "if-then-else" constructions. Instead, they used array operations; structured programming constructs were often unnecessary, since an operation could be carried out on an entire array in a single statement. For example, the iota function (⍳) can replace for-loop iteration: ⍳N, applied to a positive integer scalar, yields the one-dimensional array (vector) 1 2 3 ... N. More recent implementations of APL generally include comprehensive control structures, so that data structure and program control flow can be clearly and cleanly separated.
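As a rough Python analogy of replacing an explicit loop with a whole-array expression, the sketch below computes the sum of the squares of 1 to N both ways. The helper `iota` is a hypothetical stand-in for APL's ⍳ with index origin 1, and the array version loosely mirrors the APL expression +/(⍳N)*2 (where * is power). Python still iterates internally; the point is only that the array style names no loop counter.

```python
def iota(n):
    """Hypothetical stand-in for APL's ⍳n (index origin 1): 1 2 ... n."""
    return list(range(1, n + 1))


def sum_of_squares_loop(n):
    # Scalar, loop-driven style: explicit counter and accumulator.
    total = 0
    i = 1
    while i <= n:
        total += i * i
        i += 1
    return total


def sum_of_squares_array(n):
    # Array style, roughly APL's +/(⍳N)*2: build the whole vector,
    # square every element, then sum, with no explicit loop counter.
    return sum(x ** 2 for x in iota(n))


print(iota(5))                   # [1, 2, 3, 4, 5]
print(sum_of_squares_loop(10))   # 385
print(sum_of_squares_array(10))  # 385
```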

The APL environment is called a workspace. In a workspace the user can define programs and data; data values also exist outside the programs, and the user can manipulate the data without having to define a program.[51] In the examples below, the APL interpreter first types six spaces before awaiting the user's input. Its own output starts in column one.

n ← 4 5 6 7

|

Assigns vector of values, {4 5 6 7}, to variable n, an array create operation. An equivalent yet more concise APL expression would be n ← 3 + ⍳4. Multiple values are stored in array n, the operation performed without formal loops or control flow language. |

n

4 5 6 7

|

Display the contents of n, currently an array or vector. |

n+4

8 9 10 11

|

4 is now added to all elements of vector n, creating a 4-element vector {8 9 10 11}. As above, APL's interpreter displays the result because the expression's value was not assigned to a variable (with a ←). |

+/n

22

|

APL displays the sum of components of the vector n, i.e. 22 (= 4 + 5 + 6 + 7) using a very compact notation: read +/ as "plus, over..." and a slight change would be "multiply, over..." |

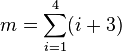

m ← +/(3+⍳4)

m

22

|

These operations can be combined into a single statement, remembering that APL evaluates expressions right to left: first ⍳4 creates an array, [1,2,3,4], then 3 is added to each component, which are summed together and the result stored in variable m, finally displayed.

In conventional mathematical notation, it is equivalent to: |
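The behaviour of n+4 and 3+⍳4 in the session above, where a scalar is paired with every element of a vector, is usually called scalar extension. The Python sketch below models it for flat (non-nested) vectors only; the function name `scalar_dyadic` is hypothetical, and the error raised for mismatched lengths only loosely mirrors APL's LENGTH ERROR.

```python
import operator


def scalar_dyadic(f, left, right):
    """Apply a dyadic scalar function f elementwise (flat lists only).

    A scalar argument is extended to match the other argument's length;
    two vectors must have equal length.
    """
    left_is_vec = isinstance(left, list)
    right_is_vec = isinstance(right, list)
    if not left_is_vec and not right_is_vec:
        return f(left, right)
    if not left_is_vec:
        return [f(left, b) for b in right]   # scalar on the left
    if not right_is_vec:
        return [f(a, right) for a in left]   # scalar on the right
    if len(left) != len(right):
        raise ValueError("LENGTH ERROR")
    return [f(a, b) for a, b in zip(left, right)]


n = [4, 5, 6, 7]
print(scalar_dyadic(operator.add, n, 4))             # like n+4: [8, 9, 10, 11]
print(scalar_dyadic(operator.add, 3, [1, 2, 3, 4]))  # like 3+⍳4: [4, 5, 6, 7]
print(scalar_dyadic(operator.mul, n, n))             # like n×n: [16, 25, 36, 49]
```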

The user can save the workspace with all values, programs, and execution status.

APL is well known for its use of a set of non-ASCII symbols, which are an extension of traditional arithmetic and algebraic notation. Having single character names for SIMD vector functions is one way that APL enables compact formulation of algorithms for data transformation such as computing Conway's Game of Life in one line of code.[52] In nearly all versions of APL, it is theoretically possible to express any computable function in one expression, that is, in one line of code.
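To show the whole-array style behind the one-line Game of Life, here is a Python sketch in the spirit of commonly published APL versions: sum the board with its eight cyclically shifted copies, then apply the birth/survival rule to the entire board at once. The wrap-around (toroidal) edges and the helper names are assumptions of this sketch, and Python needs many more lines than APL to say the same thing.

```python
def rotate_rows(grid, k):
    """Cyclically shift the rows of grid by k (compare APL's ⊖)."""
    k %= len(grid)
    return grid[k:] + grid[:k]


def rotate_cols(grid, k):
    """Cyclically shift every row by k columns (compare APL's ⌽)."""
    k %= len(grid[0])
    return [row[k:] + row[:k] for row in grid]


def life_step(grid):
    """One Game of Life generation on a toroidal grid of 0s and 1s.

    The grid is summed with its 8 shifted copies, so each total also
    counts the cell itself. A cell is alive next generation if its total
    is 3, or if its total is 4 and it is currently alive.
    """
    rows, cols = len(grid), len(grid[0])
    shifted = [rotate_cols(rotate_rows(grid, dr), dc)
               for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
    total = [[sum(s[r][c] for s in shifted) for c in range(cols)]
             for r in range(rows)]
    return [[1 if total[r][c] == 3 or (total[r][c] == 4 and grid[r][c]) else 0
             for c in range(cols)]
            for r in range(rows)]


# A vertical "blinker" turns horizontal after one step.
blinker = [[0, 0, 0, 0, 0],
           [0, 0, 1, 0, 0],
           [0, 0, 1, 0, 0],
           [0, 0, 1, 0, 0],
           [0, 0, 0, 0, 0]]
for row in life_step(blinker):
    print(row)
```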

Because of the unusual character set, many programmers use special keyboards with APL keytops to write APL code.[53] Although there are various ways to write APL code using only ASCII characters,[54] in practice this is almost never done. (This may be thought to support Iverson's thesis about notation as a tool of thought.[55]) Most, if not all, modern implementations support standard keyboard layouts, with special mappings or input method editors to access non-ASCII characters. Historically, the APL font has been distinctive, with uppercase italic alphabetic characters and upright numerals and symbols. Most vendors continue to display the APL character set in a custom font.

Advocates of APL claim that examples of so-called "write-only code" (badly written and almost incomprehensible code) are almost invariably the result of poor programming practice or novice mistakes, which can occur in any language. Advocates also claim that they are far more productive with APL than with more conventional computer languages, and that working software can be implemented in far less time and with far fewer programmers than with other technologies; in their view, APL lets an individual solve harder problems faster.

They may also claim that, because it is compact and terse, APL lends itself well to larger-scale, more complex software development, since the number of lines of code can be dramatically reduced. Many APL advocates and practitioners also view standard programming languages such as COBOL and Java as comparatively tedious. APL is often found where time-to-market is important, such as in trading systems.[56][57][58][59]

Iverson later designed the J programming language, which uses ASCII with digraphs instead of special symbols.

Execution

Because APL's core objects are arrays,[60] it lends itself well to parallelism,[61] parallel computing,[62][63] massively parallel applications,[64][65] and very-large-scale integration (VLSI).[66][67]

Interpreters

APLNext (formerly APL2000) offers an advanced APL interpreter that operates under Linux, Unix, and Windows. It supports Windows automation and calls to operating system and user-defined DLLs, has an advanced APL File System, and represents the current level of APL language development. APL2000's product is a continuation of STSC's successful APL*Plus/PC and APL*Plus/386 product line.

Dyalog APL is an advanced APL interpreter that operates under AIX, Linux (including on the Raspberry Pi), Mac OS and Microsoft Windows.[68] Dyalog has extensions to the APL language, which include new object-oriented features, numerous language enhancements, plus a consistent namespace model used for both its Microsoft Automation interface, as well as native namespaces. For the Windows platform, Dyalog APL offers tight integration with .NET, plus limited integration with the Microsoft Visual Studio development platform.

IBM offers a version of IBM APL2 for IBM AIX, Linux, Sun Solaris and Windows systems. This product is a continuation of APL2 offered for IBM mainframes. IBM APL2 was arguably the most influential APL system, which provided a solid implementation standard for the next set of extensions to the language, focusing on nested arrays.

NARS2000 is an open-source APL interpreter written by Bob Smith, a well-known APL developer and implementor from STSC in the 1970s and 1980s. NARS2000 contains advanced features and new datatypes, runs natively under Windows (32- and 64-bit versions), and runs under Linux and Apple Mac OS with Wine.

MicroAPL Limited offers APLX, a full-featured 64-bit interpreter for Linux, Microsoft Windows, and Mac OS systems. The core language is closely modelled on IBM's APL2 with various enhancements. APLX includes close integration with .NET, Java, Ruby and R.

Soliton Incorporated offers the SAX interpreter (Sharp APL for UniX) for Unix and Linux systems. This is a further development of I. P. Sharp Associates' Sharp APL product. Unlike in most other APL interpreters, Kenneth E. Iverson had some influence on the way nested arrays were implemented in Sharp APL and SAX. Nearly all other APL implementations followed the course set by IBM with APL2, so some important details in Sharp APL differ from other implementations.

OpenAPL is an open source implementation of APL published by Branko Bratkovic. It is based on code by Ken Thompson of Bell Laboratories, together with contributions by others. It is licensed under the GNU General Public License, and runs on Unix systems including Linux on x86, SPARC and other CPUs.

GNU APL is a free implementation of ISO Standard 13751 and hence similar to APL2. It runs on GNU/Linux and on Windows using Cygwin. It uses Unicode internally. GNU APL was written by Jürgen Sauermann.

Compilers

APL programs are normally interpreted and less often compiled. In practice, most APL compilers translated APL source to a lower-level language such as C, leaving the machine-specific details to the lower-level compiler. Compilation of APL programs was a frequently discussed topic at conferences. Although some of the newer enhancements to the APL language, such as nested arrays, have made the language increasingly difficult to compile, APL compilation is still under development today.

In the past, APL compilation was regarded as a means to achieve execution speed comparable to other mainstream languages, especially on mainframe computers. Several APL compilers achieved some levels of success, though comparatively little of the development effort spent on APL over the years went to perfecting compilation into machine code.

As is the case when moving APL programs from one vendor's APL interpreter to another, APL programs will invariably require changes before they can be compiled. Depending on the compiler, variable declarations might be needed, certain language features would need to be removed or avoided, or the APL programs would need to be cleaned up in some way. Some features of the language, such as the execute function (an expression evaluator) and the various reflection and introspection functions, such as the ability to return a function's text or to materialize a new function from text, are simply not practical to implement in machine code compilation.

A commercial compiler was brought to market by STSC in the mid-1980s as an add-on to IBM's VSAPL Program Product. Unlike more modern APL compilers, this product produced machine code that would execute only in the interpreter environment; it was not possible to eliminate the interpreter component. The compiler could compile many scalar and vector operations to machine code, but it relied on the APL interpreter's services to perform some more advanced functions rather than attempting to compile them. However, dramatic speedups did occur, especially for heavily iterative APL code.

Around the same time, the book An APL Compiler by Timothy Budd appeared in print. This book detailed the construction of an APL translator, written in C, which performed certain optimizations such as loop fusion specific to the needs of an array language. The source language was APL-like in that a few rules of the APL language were changed or relaxed to permit more efficient compilation. The translator would emit C code which could then be compiled and run outside of the APL workspace.
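Loop fusion can be illustrated with a short Python sketch; it is not Budd's translator, whose output was C. The APL-like expression 2×3+X (that is, 2×(3+X)) would naively run as two passes with a full temporary array in between, while a fusing compiler can merge the two element-wise operations into a single loop. The function names are hypothetical.

```python
def unfused(xs):
    # Two separate array operations, as a naive interpreter would run
    # them: the first materializes a full temporary array.
    tmp = [x + 3 for x in xs]       # like 3+X
    return [t * 2 for t in tmp]     # like 2×(3+X)


def fused(xs):
    # After loop fusion: one pass over the data, no temporary array.
    out = []
    for x in xs:
        out.append((x + 3) * 2)
    return out


data = [1, 2, 3, 4]
print(unfused(data))  # [8, 10, 12, 14]
print(fused(data))    # [8, 10, 12, 14]
```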

The Burroughs/Unisys APLB interpreter (1982) was the first to use dynamic incremental compilation to produce code for an APL-specific virtual machine. It recompiled on-the-fly as identifiers changed their functional meanings. In addition to removing parsing and some error checking from the main execution path, such compilation also streamlines the repeated entry and exit of user-defined functional operands. This avoids the stack setup and take-down for function calls made by APL's built-in operators such as Reduce and Each.

| Array Contraction |
|---|
| Definition: A program transformation which reduces array size while preserving the correct output. This technique has been used in the past to reduce the memory requirements of programs, which can be important for out-of-core computing and embedded systems. 'Improving Data Locality by Array Contraction'[69] |
| Reducing Virtual Memory Requirements |

APEX, a research APL compiler, is available from Snake Island Research Inc. under the GNU General Public License. APEX compiles flat APL (a subset of ISO N8485) into SAC, a functional array language with parallel semantics, and currently runs under Linux. APEX-generated code uses loop fusion, 'array contraction', and special-case algorithms not generally available to interpreters (e.g., upgrade of a permutation matrix/vector) to achieve a level of performance comparable to that of Fortran.
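Array contraction often follows loop fusion. The Python sketch below assumes a simple expression, +/2×X, and hypothetical function names: once the producer and the consumer of the temporary vector sit in the same loop, each temporary element is read immediately after it is written, so the temporary array can shrink to a single scalar and the extra memory drops from one slot per element to one slot in total.

```python
def sum_of_doubled_naive(xs):
    # +/2×X evaluated naively: the temporary vector has len(xs) elements.
    tmp = [2 * x for x in xs]
    return sum(tmp)


def sum_of_doubled_contracted(xs):
    # After fusion and contraction: the temporary array has become the
    # single scalar t, consumed as soon as it is produced.
    acc = 0
    for x in xs:
        t = 2 * x
        acc += t
    return acc


values = list(range(1, 11))
print(sum_of_doubled_naive(values))       # 110
print(sum_of_doubled_contracted(values))  # 110
```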

The APLNext VisualAPL system is a departure from a conventional APL system in that VisualAPL is a true .NET language which is fully interoperable with other .NET languages such as VB.NET and C#. VisualAPL is inherently object-oriented and Unicode-based. While VisualAPL incorporates most of the features of standard APL implementations, the VisualAPL language extends standard APL to be .NET-compliant. VisualAPL is hosted in the standard Microsoft Visual Studio IDE and as such, invokes compilation in a manner identical to that of other .NET languages. By producing Common Intermediate Language (CIL) code, it utilizes the Microsoft just-in-time compiler (JIT) to support 32-bit or 64-bit hardware. Substantial performance speed-ups over standard APL have been reported, especially when (optional) strong typing of function arguments is used.

An APL to C# translator is available from Causeway Graphical Systems. This product was designed to allow APL code, translated to equivalent C#, to run completely outside of the APL environment. The Causeway compiler requires a run-time library of array functions. Some speedup, sometimes dramatic, has been observed, but it comes from the optimisations inherent in Microsoft's .NET Framework.

Matrix optimizations

APL was notable for the speed with which it could perform complicated matrix operations. For example, a very large matrix multiplication would take only a few seconds on a machine much less powerful than those of today, and "because it operates on arrays and performs operations like matrix inversion internally, well written APL can be surprisingly fast."[70][71] There were both technical and economic reasons for this advantage:

- Commercial interpreters delivered highly tuned linear algebra library routines.

- Very low interpretive overhead was incurred per array, not per element.

- APL response time compared favorably to the runtimes of early optimizing compilers.

- IBM provided microcode assist for APL on a number of IBM System/370 mainframes.
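The per-array overhead point can be made concrete with a toy Python model. The class `CountingInterpreter` is hypothetical: each call to a primitive stands for one round of decoding and dispatch, and the model simply counts those rounds. Applying the primitive to each element separately pays the cost once per element, while handing the whole array to the primitive pays it once.

```python
class CountingInterpreter:
    """Toy model: each primitive call costs one interpretive dispatch."""

    def __init__(self):
        self.dispatches = 0

    def add(self, a, b):
        self.dispatches += 1  # fixed interpretive cost per call
        if isinstance(a, list):
            # The inner loop runs inside the primitive, without re-dispatch.
            return [x + b for x in a]
        return a + b


xs = list(range(1000))

scalar_style = CountingInterpreter()
result1 = [scalar_style.add(x, 1) for x in xs]  # one dispatch per element

array_style = CountingInterpreter()
result2 = array_style.add(xs, 1)                # one dispatch per array

print(result1 == result2)                               # True
print(scalar_style.dispatches, array_style.dispatches)  # 1000 1
```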

Phil Abrams' much-cited paper "An APL Machine" illustrated how APL could make effective use of lazy evaluation, where calculations are not actually performed until their results are needed, and then only those calculations strictly required. An obvious (and easy to implement) lazy evaluation is the J-vector: when a monadic iota is encountered in the code, it is kept as a representation instead of being expanded in memory; in later operations, a J-vector's contents are taken from the loop's induction register rather than read from memory.
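A J-vector can be sketched in Python as an object that stores only the length, first value and step of an arithmetic progression. This illustrates the idea rather than Abrams' design, and the class name `JVector` and its methods are hypothetical. Scalar arithmetic on it returns another lazy progression, and an element is computed from its index only when asked for, much as a compiled loop would derive it from its induction variable.

```python
class JVector:
    """Lazy arithmetic progression: start, start+step, ... (n elements).

    JVector(n) stands for an unexpanded ⍳n with index origin 1.
    """

    def __init__(self, n, start=1, step=1):
        self.n, self.start, self.step = n, start, step

    def __len__(self):
        return self.n

    def __getitem__(self, i):
        if not 0 <= i < self.n:
            raise IndexError(i)
        # Computed from the index; nothing is read from a stored array.
        return self.start + i * self.step

    def __add__(self, k):
        # Adding a scalar keeps the result lazy: shift the start.
        return JVector(self.n, self.start + k, self.step)

    __radd__ = __add__

    def __mul__(self, k):
        # Multiplying by a scalar also stays lazy: scale start and step.
        return JVector(self.n, self.start * k, self.step * k)

    __rmul__ = __mul__

    def materialize(self):
        return [self[i] for i in range(self.n)]


v = 3 + JVector(1_000_000)   # like 3+⍳1000000, without a million-element array
print(v[0], v[len(v) - 1])   # 4 1000003
print((3 + JVector(4)).materialize())  # [4, 5, 6, 7]
```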

Although such techniques were not widely used by commercial interpreters, they exemplify the language's best survival mechanism: not specifying the order of scalar operations or the exact contents of memory. As standardized in 1983 by ANSI working group X3J10, APL remains highly data-parallel. This gives language implementers immense freedom to schedule operations as efficiently as possible. As computer innovations such as cache memory and SIMD execution became commercially available, APL programs could be ported with almost no extra effort spent re-optimizing low-level details.

Terminology

APL makes a clear distinction between functions and operators.[49][72] Functions take arrays (variables or constants or expressions) as arguments, and return arrays as results. Operators (similar to higher-order functions) take functions or arrays as arguments, and derive related functions. For example, the "sum" function is derived by applying the "reduction" operator to the "addition" function. Applying the same reduction operator to the "maximum" function (which returns the larger of two numbers) derives a function which returns the largest of a group (vector) of numbers. In the J language, Iverson substituted the terms "verb" for "function" and "adverb" or "conjunction" for "operator".
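Treating an operator as a higher-order function can be sketched in Python: `reduction` below takes a dyadic function and returns a derived function, much as the reduction operator derives "sum" from addition and "largest of a vector" from maximum. The names are hypothetical. The sketch follows APL's right-to-left grouping, which matters for non-associative functions such as subtraction, and it does not model the identity values APL returns for empty arguments.

```python
from functools import reduce
import operator


def reduction(f):
    """Model APL's reduce operator f/: derive a function from dyadic f.

    APL reduction groups from the right: f/ a b c  is  a f (b f c).
    """
    def derived(xs):
        if not xs:
            raise ValueError("empty reduction is not modelled in this sketch")
        return reduce(lambda acc, x: f(x, acc), reversed(xs[:-1]), xs[-1])
    return derived


plus_reduce = reduction(operator.add)   # like +/
max_reduce = reduction(max)             # like ⌈/
minus_reduce = reduction(operator.sub)  # like -/

print(plus_reduce([4, 5, 6, 7]))    # 22
print(max_reduce([3, 1, 4, 1, 5]))  # 5
print(minus_reduce([1, 2, 3]))      # 2, i.e. 1-(2-3)
```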

APL also identifies those features built into the language, and represented by a symbol or a fixed combination of symbols, as primitives. Most primitives are either functions or operators. Coding APL is largely a process of writing non-primitive functions and (in some versions of APL) operators. However, a few primitives are considered to be neither functions nor operators, most notably assignment.

Some words used in APL literature have meanings that differ from those in both mathematics and computer science in general.

| Term | Description |

|---|---|

| function | operation or mapping that takes zero, one (right) or two (left & right) arguments which may be scalars, arrays, or more complicated structures, and may return a similarly complex result. A function may be niladic, monadic, dyadic, or ambivalent (defined in the rows below). |

| niladic | not taking or requiring any arguments[75] |

| monadic | requiring only one argument; on the right for a function, on the left for an operator, unary[75] |

| dyadic | requiring both a left and a right argument, binary[75] |

| ambivalent or nomadic | capable of use in a monadic or dyadic context, permitting its left argument to be elided[73] |

| array | data-valued object of zero or more orthogonal dimensions in row-major order in which each item is a primitive scalar datum or another array.[74] |

| operator | operation or mapping that takes one (left) or two (left & right) function- or array-valued arguments (operands) and derives a function. An operator may be monadic or dyadic. |

Syntax

APL has explicit representations of functions, operators, and syntax, thus providing a basis for the clear and explicit statement of extended facilities in the language, as well as tools for experimentation upon them.[76]

Examples

Hello, World

This displays "Hello, world":

'Hello, world'

A sample 'Hello, world' user session is available on YouTube.[77]

A design theme in APL is to define default actions in some cases that would produce syntax errors in most other programming languages.

The 'Hello, world' string constant above is displayed because display is the default action for any expression whose result is not explicitly used in some other way (e.g. by assignment, or as a function argument).
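Python's interactive prompt follows a similar convention, which makes it a convenient way to illustrate the idea. The sketch below is a hypothetical `run_line` helper, not how any APL interpreter is structured: a line that parses as a bare expression is evaluated and its value is returned for display, while a statement such as an assignment is executed silently.

```python
import ast


def run_line(line, env):
    """Hypothetical helper: display a value only when nothing else was done with it."""
    try:
        tree = ast.parse(line, mode="eval")   # a bare expression, e.g. 'Hello, world'
    except SyntaxError:
        exec(line, env)                       # a statement such as an assignment: no display
        return None
    value = eval(compile(tree, "<line>", "eval"), env)
    return str(value)                         # default action: show the value


env = {}
print(run_line("'Hello, world'", env))   # Hello, world
run_line("x = 3", env)                   # assigned, so nothing is displayed
print(run_line("x * 2", env))            # 6
```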

Exponentiation

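Another example of this theme is that exponentiation in APL is written as "2⋆3", which raises 2 to the power 3 (this would be written as "2^3" in some other languages and "2**3" in FORTRAN and Python). However, if no base is specified, as in the statement "⋆3" in APL (or "^3" in other languages), most other programming languages would report a syntax error. APL instead assumes the missing base to be the natural logarithm constant e (2.71828...), and so interprets "⋆3" as "2.71828⋆3".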
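The monadic and dyadic uses of ⋆ are an instance of an ambivalent function. A minimal Python model, using the hypothetical name `power`, takes the always-present right argument first and treats a missing left argument as the base e:

```python
import math


def power(right, left=None):
    """Hypothetical model of APL's ambivalent ⋆ (right argument first, since it is always present)."""
    if left is None:
        # Monadic ⋆right: no base given, so the base defaults to e (2.71828...).
        return math.exp(right)
    # Dyadic left⋆right: left raised to the power right.
    return left ** right


print(power(3, 2))   # 2⋆3 gives 8
print(power(3))      # ⋆3 gives about 20.0855
```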

"Pick 6" lottery numbers

The following immediate-mode expression generates a typical set of "Pick 6" lottery numbers: six pseudo-random integers ranging from 1 to 40, guaranteed non-repeating, and displays them sorted in ascending order:

x[⍋x←6?40]

The expression does a lot concisely, although it can seem complex to a beginning APLer.[78] It combines the following APL functions (also called primitives[79] and glyphs[80]):

- The first to be executed (APL executes from rightmost to leftmost) is the dyadic function "?" (named deal when dyadic). It returns a vector of a selected number (left argument: 6 in this case) of random integers ranging from 1 to a specified maximum (right argument: 40 in this case); as long as the maximum is at least the vector length, the integers are guaranteed not to repeat. Here, it generates 6 distinct random integers ranging from 1 to 40.[81]

- This vector is then assigned (←) to the variable x, because it is needed later.

- The vector is then graded in ascending order by the monadic "⍋" function (grade up), which has as its right argument everything to the right of it up to the next unbalanced close-bracket or close-parenthesis. The result of ⍋ is not the sorted values themselves but the indices that will put its argument into ascending order.

- Then the output of ⍋ is used to index the variable x, which was saved earlier, and this puts the items of x into ascending sequence.
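The steps in the list above can be mirrored in Python as a rough sketch. The names `deal` and `grade_up` are hypothetical stand-ins for dyadic ? and monadic ⍋. `random.sample` supplies the non-repeating draw, and the indices returned by `grade_up` are 0-based, whereas APL's default index origin is 1.

```python
import random


def deal(count, maximum):
    """count?maximum: `count` distinct random integers from 1..maximum (needs count <= maximum)."""
    return random.sample(range(1, maximum + 1), count)


def grade_up(values):
    """⍋values: indices that would sort `values` ascending (0-based here; APL defaults to 1)."""
    return sorted(range(len(values)), key=values.__getitem__)


x = deal(6, 40)                      # x←6?40
pick6 = [x[i] for i in grade_up(x)]  # x[⍋x]
print(pick6)
```

Keeping grade and indexing as separate steps, as APL does, means the same index vector could also be used to reorder a second, parallel array.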

Since there is no function to the left of the left-most x to tell APL what to do with the result, it simply outputs it to the display (on a single line, separated by spaces) without needing any explicit instruction to do that.

"?" also has a monadic form called roll, which simply returns a single random integer between 1 and its sole (right) argument, inclusive. Thus, a role-playing game program might use the expression "?20" to roll a twenty-sided die.

Prime numbers

The following expression finds all prime numbers from 1 to R. In both time and space, the calculation complexity is $O(R^2)$ (in Big O notation).

(~R∊R∘.×R)/R←1↓⍳R

Executed from right to left, this means:

- Iota ⍳R creates a vector containing the integers from 1 to R (if R = 6 at the beginning of the program, ⍳R is 1 2 3 4 5 6).

- Drop the first element of this vector (↓ function), i.e. 1. So 1↓⍳R is 2 3 4 5 6.

- Set R to the new vector (←, assignment primitive), i.e. 2 3 4 5 6.

- The / compress function is dyadic (binary), and the interpreter first evaluates its left argument (entirely in parentheses):

  - Generate the outer product of R multiplied by R, i.e. a matrix that is the multiplication table of R by R (∘.× operator):

| × | 2 | 3 | 4 | 5 | 6 |

|---|---|---|---|---|---|

| 2 | 4 | 6 | 8 | 10 | 12 |

| 3 | 6 | 9 | 12 | 15 | 18 |

| 4 | 8 | 12 | 16 | 20 | 24 |

| 5 | 10 | 15 | 20 | 25 | 30 |

| 6 | 12 | 18 | 24 | 30 | 36 |

  - Build a vector the same length as R with 1 in each place where the corresponding number in R is in the outer product matrix (∊, set inclusion or element of or Epsilon function), i.e. 0 0 1 0 1.

  - Logically negate (not) the values in the vector, changing zeros to ones and ones to zeros (~, logical not or Tilde function), i.e. 1 1 0 1 0.

- Select the items in R for which the corresponding element is 1 (/ compress function), i.e. 2 3 5.

(Note: this assumes the APL index origin is 1, i.e. indices start with 1. APL can be set to use 0 as the origin, so that ⍳6 is 0 1 2 3 4 5, which is convenient for some calculations.)
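The same steps translate almost one for one into Python. In this sketch, `primes_upto` is a hypothetical name. The outer product is collected into a set, so, like the APL expression, it uses memory proportional to R², which makes this formulation better suited to illustration than to large R.

```python
def primes_upto(r):
    """Hypothetical Python rendering of (~R∊R∘.×R)/R←1↓⍳R."""
    R = list(range(1, r + 1))[1:]                # ⍳R, then 1↓ drops the leading 1
    products = {a * b for a in R for b in R}     # R∘.×R, kept as a set of values
    keep = [n not in products for n in R]        # ~R∊R∘.×R
    return [n for n, k in zip(R, keep) if k]     # keep/R (compress)


print(primes_upto(6))    # [2, 3, 5]
```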

Sorting

The following expression sorts a word list, stored in the character matrix X with one blank-padded word per row, according to word length:

X[⍋X+.≠' ';]
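Here X+.≠' ' is an inner product: each row of X is compared with a blank (≠) and the resulting ones are summed (+), giving the number of non-blank characters, that is, the word length. A Python sketch of the whole expression, assuming X is a list of equal-width, blank-padded strings and using the hypothetical name `sort_by_length`:

```python
def sort_by_length(X):
    """Hypothetical rendering of X[⍋X+.≠' ';] for equal-width, blank-padded rows."""
    lengths = [sum(ch != ' ' for ch in row) for row in X]     # X+.≠' '
    order = sorted(range(len(X)), key=lengths.__getitem__)    # ⍋ on the lengths
    return [X[i] for i in order]                              # X[order;]


words = ["apple ", "fig   ", "banana", "kiwi  "]
print(sort_by_length(words))   # ['fig   ', 'kiwi  ', 'apple ', 'banana']
```

Python's `sorted` is stable, so words of equal length keep their original relative order, as they do with APL's grade.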

Game of Life

The following function "life", written in Dyalog APL, takes a boolean matrix and calculates the new generation according to Conway's Game of Life. It demonstrates the power of APL to implement a complex algorithm in very little code, but it is also very hard to follow unless one has advanced knowledge of APL.

life←{↑1 ⍵∨.∧3 4=+/,¯1 0 1∘.⊖¯1 0 1∘.⌽⊂⍵}
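To make the algorithm easier to follow, here is a plain Python sketch of the same rule, with `life` as a hypothetical function over a list of lists of 0s and 1s. Like the APL version, which uses the rotations ⊖ and ⌽, it treats the board as wrapping around at the edges, and it sums each cell's 3×3 block including the cell itself. A cell is alive in the next generation when that sum is 3, or when it is 4 and the cell is already alive (the 3 4= and 1 ⍵∨.∧ parts of the APL code).

```python
def life(board):
    """Hypothetical Python sketch of one Game of Life step on a wrap-around board."""
    rows, cols = len(board), len(board[0])
    nxt = []
    for i in range(rows):
        new_row = []
        for j in range(cols):
            # Sum of the 3x3 block centred on (i, j), the cell itself included,
            # with indices wrapping around like APL's rotations.
            total = sum(board[(i + di) % rows][(j + dj) % cols]
                        for di in (-1, 0, 1) for dj in (-1, 0, 1))
            # Block sum 3: born or survives; block sum 4: survives only if alive.
            alive = total == 3 or (total == 4 and board[i][j] == 1)
            new_row.append(1 if alive else 0)
        nxt.append(new_row)
    return nxt


blinker = [[0, 0, 0, 0, 0],
           [0, 0, 0, 0, 0],
           [0, 1, 1, 1, 0],
           [0, 0, 0, 0, 0],
           [0, 0, 0, 0, 0]]
print(life(blinker))   # the horizontal bar becomes vertical
```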

HTML tags removal

In the following example, also in Dyalog APL, the first line assigns some HTML code to a variable txt, and the second line uses an APL expression to remove all the HTML tags:

txt←'<html><body><p>This is <em>emphasized</em> text.</p></body></html>'

⎕←{⍵/⍨~{⍵∨≠\⍵}⍵∊'<>'}txt

This displays the text This is emphasized text.
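The APL expression marks the angle brackets, uses a not-equal scan (≠\) as a running parity so that everything from each < up to its > is flagged, adds the closing > itself with ∨, and keeps only the unflagged characters. A Python sketch of the same mask-based approach, with `strip_tags` as a hypothetical name; like the original, it assumes brackets are balanced and never appear inside attribute values:

```python
from itertools import accumulate
from operator import xor


def strip_tags(txt):
    r"""Hypothetical Python rendering of {⍵/⍨~{⍵∨≠\⍵}⍵∊'<>'}."""
    marks = [ch in '<>' for ch in txt]                 # ⍵∊'<>'
    parity = list(accumulate(marks, xor))              # ≠\ : 1 from '<' up to, not including, '>'
    in_tag = [m or p for m, p in zip(marks, parity)]   # ⍵∨≠\⍵ : also flag the closing '>'
    return ''.join(ch for ch, t in zip(txt, in_tag) if not t)   # ⍵/⍨~... : keep the rest


txt = '<html><body><p>This is <em>emphasized</em> text.</p></body></html>'
print(strip_tags(txt))   # This is emphasized text.
```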

Character set

APL has been both criticized and praised for its choice of a unique, non-standard character set. Some who learn it become ardent adherents, suggesting that there is some weight behind Iverson's idea that the notation used does make a difference. In the beginning, few terminal devices or display monitors could reproduce the APL character set; the most popular ones employed the IBM Selectric print mechanism with a special APL type element. One of the early APL line terminals (line-mode operation only, not full screen) was the Texas Instruments TI Model 745 (circa 1977), which supported the full APL character set[82] and featured half- and full-duplex telecommunications modes for interacting with an APL time-sharing service or a remote mainframe, for example to submit a computer job through remote job entry (RJE).

Over time, with the universal use of high-quality graphic displays, printing devices and Unicode support, the APL character font problem has largely been eliminated. However, entering APL characters requires the use of input method editors, keyboard mappings, virtual/on-screen APL symbol sets,[83][84] or easy-reference printed keyboard cards which can frustrate beginners accustomed to other programming languages.[85][86][87]

In defense of APL, its proponents note that it requires less code to be typed in, and that keyboard mappings become memorized over time. Special APL keyboards are also manufactured and in use today, as are freely downloadable fonts for operating system platforms such as Microsoft Windows.[88] The reported productivity gains assume that one will spend enough time working in APL to make memorizing the symbols, their semantics, and the keyboard mappings worthwhile.

Use

APL has long had a select, mathematically inclined and curiosity-driven user base, who cite its powerful and symbolic nature: for example, one symbol can perform an entire sort, and another can perform a regression. It was, and still is, popular for financial, pre-modeling, and insurance applications, for simulations, and for mathematical applications. APL has been used in a wide variety of contexts and for many purposes, including artificial intelligence[89][90] and robotics.[91][92] A newsletter titled "Quote-Quad", dedicated to APL, has been published since the 1970s by the SIGAPL section of the Association for Computing Machinery (Quote-Quad is the name of the APL character used for text input and output).[93]

Before the advent of full-screen systems, and as late as the mid-1980s, systems were written so that the user entered instructions in their own business-specific vocabulary. APL time-sharing vendors delivered applications in this form. On the I. P. Sharp time-sharing system, a workspace called 39 MAGIC offered access to financial and airline data plus sophisticated (for the time) graphing and reporting. Another example is the GRAPHPAK workspace supplied with IBM's APL, then APL2.

Because of its matrix operations, APL was for some time quite popular for computer graphics programming, where graphic transformations could be encoded as matrix multiplications. One of the first commercial computer graphics houses, Digital Effects, based in New York City, produced an APL graphics product known as "Visions", which was used to create television commercials and, reportedly, animation for the 1982 film Tron. Digital Effects' use of APL was informally described at a number of SIGAPL conferences in the late 1980s; examples discussed included the early UK Channel 4 TV logo/ident.

Interest in APL has declined from a peak in the mid-1980s. This appears to be partly due to the lack of smooth migration paths from higher-performing, memory-intensive mainframe implementations to low-cost personal computer alternatives: APL implementations for computers that predated the Intel 80386 (introduced in 1985) were suitable only for small applications. Another important reason for the decline is the lack of low-cost, standardized, and robust compiled APL executables usable across multiple hardware and operating system platforms. There are many variations among APL implementations, particularly between IBM's APL2 and APL2000's APL+. Another practical limitation is that APL has fallen behind modern integrated development environments with respect to debugging capabilities and test-driven development. Consequently, while APL remains eminently suitable for small-to-medium-sized programs, its productivity gains for larger projects involving teams of developers are questionable.

The growth of end-user computing tools such as Microsoft Excel and Microsoft Access has indirectly eroded potential APL usage. These are frequently appropriate platforms for what may have been APL applications in the 1970s and 1980s. Some APL users migrated to the J programming language, which offers some advanced features. Lastly, the decline was also due in part to the growth of MATLAB, GNU Octave, and Scilab. These scientific computing array-oriented platforms provide an interactive computing experience similar to APL, but more closely resemble conventional programming languages such as Fortran, and use standard ASCII. Other APL users continue to wait for a very low-cost, standardized, broad-hardware-usable APL implementation.[94][95]

Notwithstanding this decline, APL finds continued use in certain fields, such as accounting research, pre-hardcoded modeling, DNA identification technology,[96][97] symbolic mathematical expression, and learning. It remains an inspiration to its current user base as well as to other languages.[98]

Standardization

APL has been standardized by the ANSI working group X3J10 and ISO/IEC Joint Technical Committee 1 Subcommittee 22 Working Group 3. The Core APL language is specified in ISO 8485:1989, and the Extended APL language is specified in ISO/IEC 13751:2001.

See also

- A+ (programming language)

- APL EBCDIC code page

- APL Shared Variables

- I. P. Sharp Associates

- TK Solver

- IBM Type-III Library

- IBM 1130

- Iverson Award

- J (programming language)

- K (programming language)

- Q (programming language from Kx Systems)

- LYaPAS

- Scientific Time Sharing Corporation

- Soliton Incorporated

- ELI (programming language)

- RPL (programming language)

References

- ↑ "Nested Arrays Research System - NARS2000: An Experimental APL Interpreter". NARS2000. Sudley Place Software. Retrieved 10 July 2015.

- ↑ "GNU APL". directory.fsf.org. Free Software Directory. Retrieved 28 September 2013.

- 1 2 "A Bibliography of APL and J". Jsoftware.com. Retrieved 2010-02-03.

- 1 2 "Kx Systems — An Interview with Arthur Whitney — Jan 2004". Kx.com. 2004-01-04. Retrieved 2010-02-03.

- 1 2 "The Growth of MatLab — Cleve Moler" (PDF). Retrieved 2010-02-03.

- 1 2 "About Q'Nial". Nial.com. Retrieved 2010-02-03.

- 1 2 Iverson, Kenneth E. (1962). A Programming Language. Wiley. ISBN 0-471-43014-5.

- ↑ McIntyre, Donald B. (1991). "Language as an Intellectual Tool: From Hieroglyphics to APL". IBM Systems Journal 30 (4): 554–581. doi:10.1147/sj.304.0554. Retrieved 9 January 2015.

- ↑ "ACM Award Citation – John Backus. 1977". Awards.acm.org. 1924-12-03. Retrieved 2010-02-03.

- ↑ "APLX version 4 – from the viewpoint of an experimental physicist. Vector 23.3". Vector.org.uk. 2008-05-20. Archived from the original on 25 January 2010. Retrieved 2010-02-03.

- ↑ Bergquist, Gary A. (1999). "The future of APL in the insurance world". ACM SIGAPL APL Quote Quad (New York, N.Y.) 30 (1): 16–21. doi:10.1145/347194.347203. ISSN 0163-6006.

- ↑ Iverson, Kenneth E., "Automatic Data Processing: Chapter 6: A programming language", 1960, DRAFT copy for Brooks and Iverson 1963 book, "Automatic Data Processing".

- ↑ Brooks, Fred; Iverson, Kenneth, (1963), Automatic Data Processing, John Wiley & Sons Inc.

- ↑ Hellerman, H., "Experimental Personalized Array Translator System", Communications of the ACM, 7, 433 (July, 1964).

- ↑ Falkoff, Adin D.; Iverson, Kenneth E., "The Evolution of APL", ACM SIGPLAN Notices 13, 1978-08.

- ↑ Abrams, Philip S., An interpreter for "Iverson notation", Technical Report: CS-TR-66-47, Department of Computer Science, Stanford University, August 1966;

- ↑ Haigh, Thomas, "Biographies: Kenneth E. Iverson", IEEE Annals of the History of Computing, 2005

- 1 2 Breed, Larry, "The First APL Terminal Session", APL Quote Quad, Association for Computing Machinery, Volume 22, Number 1, September 1991, p.2-4.

- ↑ Adin Falkoff - Computer History Museum. "Iverson credited him for choosing the name APL and the introduction of the IBM golf-ball typewriter with the replacement typehead, which provided the famous character set to represent programs."

- ↑ Larry Breed (August 2006). "How We Got to APL\1130". Vector (British APL Association) 22 (3). ISSN 0955-1433.

- ↑ APL\1130 Manual, May 1969

- ↑ "Remembering APL". Quadibloc.com. Retrieved 2013-06-17.

- ↑ Falkoff, Adin; Iverson, Kenneth E., "APL\360 Users Guide", IBM Research, Thomas J. Watson Research Center, Yorktown Heights, NY, August 1968.

- ↑ "APL\360 Terminal System", IBM Research, Thomas J. Watson Research Center, March 1967.

- 1 2 Pakin, Sandra (1968). APL\360 Reference Manual. Science Research Associates, Inc. ISBN 0-574-16135-X.

- ↑ Falkoff, Adin D.; Iverson, Kenneth E.,The Design of APL, IBM Journal of Research and Development, Volume 17, Number 4, July 1973. "These environmental defined functions were based on the use of still another class of functions—called "I-beams" because of the shape of the symbol used for them—which provide a more general facility for communication between APL programs and the less abstract parts of the system. The I-beam functions were first introduced by the system programmers to allow them to execute System/360 instructions from within APL programs, and thus use APL as a direct aid in their programming activity. The obvious convenience of functions of this kind, which appeared to be part of the language, led to the introduction of the monadic I-beam function for direct use by anyone. Various arguments to this function yielded information about the environment such as available space and time of day."

- ↑ Minker, Jack (January 2004). "Beginning of Computing and Computer Sciences at the University of Maryland" (PDF). Section 2.3.4: University of Maryland. p. 38. Archived from the original (PDF) on 10 June 2011. Retrieved 23 May 2011.

- ↑ Stebbens, Alan. "How it all began".

- ↑ "SIGAPL Home Page". Sigapl.org. Retrieved 2013-06-17.

- ↑ "Turing Award Citation 1979". Awards.acm.org. Retrieved 2010-02-03.

- ↑ Lathwell, Catherine. "Chasing Men Who Stare at Arrays". http://www.aprogramminglanguage.com/. Catherine Lathwell. Retrieved 8 January 2015.

- ↑ Lathwell, Catherine. "The Origins of APL - 1974 - YouTube". https://www.youtube.com. Catherine Lathwell on YouTube. Retrieved 8 January 2015.

- ↑ Robertson, Graeme. "50 Years of APL - Video". https://www.youtube.com. Graeme Robertson/YouTube. Retrieved 8 January 2015.

- ↑ Spence, Bob. "APL demonstration 1975". https://www.youtube.com. Imperial College London. Retrieved 8 January 2015.

- ↑ Hui, Roger. "Remembering Ken Iverson". http://keiapl.org. KEIAPL. Retrieved 10 January 2015.

- ↑ ACM A.M. Turing Award. "Kenneth E. Iverson - Citation". http://amturing.acm.org. ACM. Retrieved 10 January 2015.

- ↑ ACM SIGPLAN. "APL2: the Early Years". http://www.sigapl.org. ACM. Retrieved 10 January 2015.

- ↑ Micro APL. "Overview of the APL System". http://www.microapl.co.uk. Micro APL. Retrieved 10 January 2015.

- ↑ Robertson, Graeme. "A Personal View of APL2010". http://archive.vector.org.uk. Vector - Journal of the British APL Association. Retrieved 10 January 2015.

- ↑ Rodriguez, P.; Rojas, J.; Alfonseca, M.; Burgos, J. I. (1989). "An Expert System in Chemical Synthesis written in APL2/PC". ACM SIGAPL APL Quote Quad 19 (4): 299–303. doi:10.1145/75144.75185. Retrieved 10 January 2015.

- ↑ IBM. "APL2: A Programming Language for Problem Solving, Visualization and Database Access". http://www-03.ibm.com. IBM. Retrieved 10 January 2015.

- ↑ Falkoff, Adin D. (1991). "The IBM family of APL systems" (PDF). IBM Systems Journal (IBM) 30 (4): 416–432. doi:10.1147/sj.304.0416. Retrieved 2009-06-13.

- ↑ "VideoBrain Family Computer", Popular Science, November 1978, advertisement.

- ↑ Higgins, Donald S., "PC/370 virtual machine", ACM SIGSMALL/PC Notes, Volume 11, Issue 3 (August 1985), pp.23 - 28, 1985.

- ↑ Yahoo! Group APL-L, April 2003.

- ↑ Zaks, Rodnay, "A Microprogrammed APL Implementation", Ph.D. Thesis, University of California, Berkeley, June 1972.

- ↑ Zaks, Rodnay, "Microprogrammed APL", Fifth IEEE Computer Conference Proceedings, Sep. 1971, p. 193.

- ↑ William Yerazunis. "A Partial Implementation of APL with Graphics Primitives for PRIME Computers". Retrieved 2013-08-14.

- 1 2 MicroAPL. "Operators". http://www.microapl.co.uk. MicroAPL. Retrieved 12 January 2015.

- ↑ Primitive Functions. "Primitive Functions". http://www.microapl.co.uk/. Retrieved 1 January 2015.

- ↑ Workspace. "The Workspace". http://www.microapl.co.uk. Retrieved 1 January 2015.

- ↑ "example". Catpad.net. Retrieved 2013-06-17.

- ↑ APL Symbols. "Entering APL Symbols". http://www.microapl.co.uk. Retrieved 1 January 2015.

- ↑ Dickey, Lee, A list of APL Transliteration Schemes, 1993

- ↑ Iverson K.E., "Notation as a Tool of Thought", Communications of the ACM, 23: 444-465 (August 1980).

- ↑ Batenburg. "APL Efficiency". http://www.ekevanbatenburg.nl. Retrieved 1 January 2015.

- ↑ Vaxman. "APL Great Programming" (PDF). http://www.vaxman.de. Retrieved 1 January 2015.

- ↑ Janko, Wolfgang (May 1987). "Investigation into the efficiency of using APL for the programming of an inference machine". ACM Digital Library 17 (4): 450–456. doi:10.1145/384282.28372. Retrieved 1 January 2015.

- ↑ Borealis. "Why APL?". http://www.aplborealis.com. Retrieved 1 January 2015.

- ↑ SIGAPL. "What is APL?". http://www.sigapl.org. SIGAPL. Retrieved 20 January 2015.

- ↑ Ju, Dz-Ching; Ching, Wai-Mee (1991). "Exploitation of APL data parallelism on a shared-memory MIMD machine". Newsletter ACM SIGPLAN Notices 26 (7): 61–72. doi:10.1145/109625.109633. Retrieved 20 January 2015.

- ↑ Hsu, Aaron W.; Bowman, William J. "Revisiting APL in the Modern Era" (PDF). http://www.cs.princeton.edu. Indiana University / Princeton. Retrieved 20 January 2015.

- ↑ Ching, W.-M.; Ju, D. (1991). "Execution of automatically parallelized APL programs on RP3". IBM Journal of Research & Development 35 (5/6): 767–777. doi:10.1147/rd.355.0767. Retrieved 20 January 2015.

- ↑ Blelloch, Guy E.; Sabot, Gary W. "Compiling Collection-Oriented Languages onto Massively Parallel Computers". Carnegie Mellon University / Thinking Machines Corp.: 1–31. CiteSeerX: 10.1.1.51.5088.

Collection oriented languages include APL, APL2

- ↑ Jendrsczok, Johannes; Hoffmann, Rolf; Ediger, Patrick; Keller, Jörg. "Implementing APL-like data parallel functions on a GCA machine" (PDF). https://www.fernuni-hagen.de. Fernuni-Hagen.De. pp. 1–6. Retrieved 22 January 2015.

GCA - Global Cellular Automation. Inherently massively parallel. 'APL has been chosen because of the ability to express matrix and vector' structures.

- ↑ Brenner, Norman (IBM T.J. Watson Research Center) (1984). "VLSI circuit design using APL with fortran subroutines". ACM SIGAPL APL Quote Quad (ACM SIGAPL) 14 (4): 77–79. doi:10.1145/800058.801079. Retrieved 22 January 2015.

APL for interactiveness and ease of coding

- ↑ Gamble, D.J.; Hobson, R.F. (1989). "Towards a graphics/procedural environment for constructing VLSI module generators". Communications, Computers and Signal Processing, 1989. Conference Proceeding., IEEE Pacific Rim Conference on (Victoria, BC, Canada: IEEE): 606–611. doi:10.1109/PACRIM.1989.48437.

VLSI module generators are described. APL and C, as examples of interpreted and compiled languages, can be interfaced to an advanced graphics display

- ↑ "Dyalog Ltd's website".

- ↑ Song, Yonghong; Xu, Rong; Wang, Cheng; Li, Zhiyuan. "Improving Data Locality by Array Contraction" (PDF). https://www.cs.purdue.edu. Purdue University. Retrieved 4 February 2015.

Array contraction is a program transformation which reduces array size while preserving the correct output. In this paper, we present an aggressive array-contraction technique and study its impact on memory system performance. This technique, called controlled SFC, combines loop shifting and controlled loop fusion to maximize opportunities for array contraction within a given loop nesting. A controlled fusion scheme is used

- ↑ MARTHA and LLAMA. "The APL Computer Language". http://marthallama.org. MarthaLlama. Retrieved 20 January 2015.

- ↑ Lee, Robert S. (1983). "Two Implementations of APL". PC Magazine 2 (5): 379. Retrieved 20 January 2015.

- ↑ Iverson, Kenneth E. "A Dictionary of APL". http://www.jsoftware.com. JSoftware / Iverson Estate. Retrieved 20 January 2015.

- 1 2 3 4 5 6 "APL concepts". Microapl.co.uk. Retrieved 2010-02-03.

- ↑ "Nested array theory". Nial.com. Retrieved 2010-02-03.

- 1 2 3 "Programmera i APL", Bohman, Fröberg, Studentlitteratur, ISBN 91-44-13162-3

- ↑ Iverson, Kenneth E. "APL Syntax and Semantics". http://www.jsoftware.com. I.P. Sharp Assoc. Retrieved 11 January 2015.

- ↑ Dyalog APL/W. "Producing a standalone 'Hello World' program in APL". https://www.youtube.com. Dyalog-APLtrainer. Retrieved 11 January 2015.

- ↑ Wiktionary. "APLer - Wiktionary". https://en.wiktionary.org. Wiktionary. Retrieved 11 January 2015.

- ↑ MicroAPL. "APL Primitives". http://www.microapl.co.uk. MicroAPL. Retrieved 11 January 2015.

- ↑ NARS2000. "APL Font - Extra APL Glyphs". http://wiki.nars2000.org. NARS2000. Retrieved 11 January 2015.

- ↑ Fox, Ralph L. "Systematically Random Numbers". http://www.sigapl.org. SIGAPL. Retrieved 11 January 2015.

- ↑ Texas Instruments (1977). "TI 745 full page ad: Introducing a New Set of Characters". Computerworld 11 (27): 32. Retrieved 20 January 2015.

- ↑ Dyalog. "APL Fonts and Keyboards". http://www.dyalog.com. Dyalog. Retrieved 19 January 2015.

- ↑ Smith, Bob. "NARS2000 Keyboard". http://www.sudleyplace.com. Bob Smith / NARS2000. Retrieved 19 January 2015.

- ↑ MicroAPL Ltd. "Introduction to APL - APL Symbols". http://www.microapl.co.uk. MicroAPL Ltd. Retrieved 8 January 2015.

- ↑ Brown, James A.; Hawks, Brent; Trimble, Ray (1993). "Extending the APL character set". ACM SIGAPL APL Quote Quad 24 (1): 41–46. doi:10.1145/166198.166203. Retrieved 8 January 2015.

- ↑ Kromberg, Morten. "Unicode Support for APL". http://archive.vector.org.uk. Vector, Journal of the British APL Association. Retrieved 8 January 2015.

- ↑ Dyalog, Inc. APL fonts and keyboards. http://www.dyalog.com/apl-font-keyboard.htm

- ↑ Zarri, Gian Piero. "Using APL in an Artificial Intelligence Environment". ACM SIGAPL. Retrieved 6 January 2015.

- ↑ Fordyce, K.; Sullivan, G. (1985). "Artificial Intelligence Development Aids". APL Quote Quad. APL 85 Conf. Proc. (#15): 106–113. doi:10.1145/255315.255347.

- ↑ Kromberg, Morten. "Robot Programming in APL". http://www.dyalog.com/. Retrieved 6 January 2015.

- ↑ Kromberg, Morten. "Robot Controlled by Dyalog APL". http://www.youtube.com. YouTube / Dyalog APL. Retrieved 6 January 2015.

- ↑ Quote-Quad newsletter. Archived February 9, 2012 at the Wayback Machine.

- ↑ Iverson, Ken. "APL Programming Language". http://www.computerhistory.org. Retrieved 1 January 2015.

- ↑ McCormick, Brad. "About APL". http://www.users.cloud9.net.

- ↑ Brenner, Charles. "DNA Identification Technology and APL". http://dna-view.com. Presentation at the 2005 APL User Conference. Retrieved 9 January 2015.

- ↑ Brenner, Charles. "There's DNA Everywhere - an Opportunity for APL". https://www.youtube.com. YouTube. Retrieved 9 January 2015.

- ↑ "Stanford Accounting PhD requirements". Gsb.stanford.edu. Retrieved 2014-01-09.

Further reading

- An APL Machine (1970 Stanford doctoral dissertation by Philip Abrams)

- A Personal History Of APL (1982 article by Michael S. Montalbano)

- McIntyre, Donald B. (1991). "Language as an intellectual tool: From hieroglyphics to APL" (PDF). IBM Systems Journal 30 (4): 554–581. doi:10.1147/sj.304.0554. Archived from the original (PDF) on May 4, 2006.

- Iverson, Kenneth E. (1991). "A Personal view of APL" (PDF). IBM Systems Journal 30 (4): 582–593. doi:10.1147/sj.304.0582. Archived from the original (PDF) on February 27, 2008.

- A Programming Language by Kenneth E. Iverson

- APL in Exposition by Kenneth E. Iverson

- Brooks, Frederick P.; Kenneth Iverson (1965). Automatic Data Processing, System/360 Edition. ISBN 0-471-10605-4.

- Askoolum, Ajay (August 2006). System Building with APL + Win. Wiley. ISBN 978-0-470-03020-2.

- Falkoff, Adin D.; Iverson, Kenneth E.; Sussenguth, Edward H. (1964). "A Formal Description of SYSTEM/360" (PDF). IBM Systems Journal (New York) 3 (3): 198–261. doi:10.1147/sj.32.0198. Archived from the original (PDF) on February 27, 2008.

- History of Programming Languages, chapter 14

- Banon, Gerald Jean Francis (1989). Bases da Computacao Grafica. Rio de Janeiro: Campus. p. 141.

- LePage, Wilbur R. (1978). Applied A.P.L. Programming. Prentice Hall.

- Mougin, Philippe; Ducasse, Stephane (November 2003). "OOPAL: Integrating Array Programming in Object-Oriented Programming" (PDF). OOPSLA '03: Proceedings of the 18th annual ACM SIGPLAN conference on Object-oriented programing, systems, languages, and applications 38 (11): 65–77. doi:10.1145/949343.949312.

- Dyalog Limited (September 2006). An Introduction to Object Oriented Programming For APL Programmers (PDF). Dyalog Limited. Archived from the original (PDF) on 28 February 2008.

External links


- SIGAPL - SIGPLAN Chapter on Array Programming languages

- APL Wiki

- APL2C, a source of links to APL compilers

- TryAPL.org, an online APL primer

- Vector, the journal of the British APL Association

- APL at DMOZ

- Dyalog APL

- IBM APL2

- APL2000

- NARS2000

- GNU APL

- OpenAPL
