Inequality of arithmetic and geometric means

In mathematics, the inequality of arithmetic and geometric means, or more briefly the AM–GM inequality, states that the arithmetic mean of a list of non-negative real numbers is greater than or equal to the geometric mean of the same list; and further, that the two means are equal if and only if every number in the list is the same.

The simplest non-trivial case, i.e., with more than one variable, for two non-negative numbers x and y, is the statement that

$$\frac{x+y}{2} \ge \sqrt{xy}$$

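with equality if and only if x = y. This case can be seen from the fact that the square of a real number is always non-negative (greater than or equal to zero) and from the elementary case $(a \pm b)^2 = a^2 \pm 2ab + b^2$ of the binomial formula:

$$\begin{align}
0 &\le (x-y)^2 \\
&= x^2 - 2xy + y^2 \\
&= x^2 + 2xy + y^2 - 4xy \\
&= (x+y)^2 - 4xy.
\end{align}$$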

In other words $(x+y)^2 \ge 4xy$, with equality precisely when $(x-y)^2 = 0$, i.e. x = y. Dividing by 4 and taking square roots of the non-negative quantities on both sides gives the inequality above. For a geometrical interpretation, consider a rectangle with sides of length x and y; it has perimeter 2x + 2y and area xy. Similarly, a square with all sides of length $\sqrt{xy}$ has perimeter $4\sqrt{xy}$ and the same area as the rectangle. For the perimeters, the simplest non-trivial case of the AM–GM inequality implies that $2x + 2y \ge 4\sqrt{xy}$. It also implies that among all rectangles of equal area, only the square has the smallest perimeter.
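The identity behind this argument and the perimeter comparison can be checked mechanically. The following is a minimal Python sketch; the helper names `gap` and `perimeters` are illustrative only. It uses `fractions.Fraction`, so the identity $(x+y)^2 - 4xy = (x-y)^2$ is tested in exact arithmetic rather than floating point.

```python
from fractions import Fraction
import math

def gap(x, y):
    """Return (x + y)^2 - 4xy, which should equal (x - y)^2."""
    return (x + y) ** 2 - 4 * x * y

def perimeters(x, y):
    """Perimeter of an x-by-y rectangle and of the square with the same area."""
    rect = 2 * x + 2 * y
    square = 4 * math.sqrt(x * y)
    return rect, square

# Exact check of the identity on a few rational pairs (the last pair is equal).
for x, y in [(Fraction(1), Fraction(9)), (Fraction(3, 2), Fraction(2, 3)), (Fraction(5), Fraction(5))]:
    assert gap(x, y) == (x - y) ** 2

rect, square = perimeters(1.0, 9.0)
print(rect, square)  # 20.0 12.0
```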

The general AM–GM inequality corresponds to the fact that the natural logarithm, which converts multiplication to addition, is a strictly concave function. The general inequality then follows from Jensen's inequality.

Extensions of the AM–GM inequality are available to include weights or generalized means.

Background

The arithmetic mean, or less precisely the average, of a list of n numbers $x_1, x_2, \ldots, x_n$ is the sum of the numbers divided by n:

$$\frac{x_1 + x_2 + \cdots + x_n}{n}.$$

The geometric mean is similar, except that it is only defined for a list of non-negative real numbers, and uses multiplication and a root in place of addition and division:

$$\sqrt[n]{x_1 \cdot x_2 \cdots x_n}.$$

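If $x_1, x_2, \ldots, x_n > 0$, this is equal to the exponential of the arithmetic mean of the natural logarithms of the numbers:

$$\sqrt[n]{x_1 \cdot x_2 \cdots x_n} = \exp\left(\frac{\ln x_1 + \ln x_2 + \cdots + \ln x_n}{n}\right).$$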
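The Python sketch below computes the geometric mean in the two equivalent ways: as an n-th root of the product, and as the exponential of the mean logarithm. The helper names are hypothetical. The logarithmic form applies only when every number is positive, but it avoids the overflow that the raw product can hit for long lists or large values.

```python
import math

def arithmetic_mean(xs):
    """Sum divided by the number of values."""
    return math.fsum(xs) / len(xs)

def geometric_mean_direct(xs):
    """n-th root of the product; the product can overflow for long lists."""
    return math.prod(xs) ** (1.0 / len(xs))

def geometric_mean_log(xs):
    """exp of the arithmetic mean of the logs; requires every x > 0."""
    return math.exp(math.fsum(math.log(x) for x in xs) / len(xs))

data = [1.0, 4.0, 16.0]
print(arithmetic_mean(data))      # 7.0
print(geometric_mean_log(data))   # approximately 4.0
```

For example, `geometric_mean_direct([1e200] * 5)` overflows to `inf`, while `geometric_mean_log([1e200] * 5)` returns approximately `1e200`.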

The inequality

Restating the inequality in mathematical notation: for any list of n non-negative real numbers $x_1, x_2, \ldots, x_n$,

$$\frac{x_1 + x_2 + \cdots + x_n}{n} \ge \sqrt[n]{x_1 \cdot x_2 \cdots x_n}\,,$$

and equality holds if and only if $x_1 = x_2 = \cdots = x_n$.
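The following is a randomized spot check of the statement in Python. It is an illustration, not a proof. The helper `am_gm_gap` is hypothetical, and the small tolerance is an assumption that absorbs floating-point rounding.

```python
import math
import random

def am_gm_gap(xs):
    """Return AM - GM for a non-empty list of non-negative numbers."""
    n = len(xs)
    am = math.fsum(xs) / n
    gm = math.prod(xs) ** (1.0 / n)
    return am - gm

random.seed(0)
for _ in range(1000):
    xs = [random.uniform(0.0, 10.0) for _ in range(random.randint(1, 8))]
    # AM >= GM, up to a tiny tolerance for floating-point rounding.
    assert am_gm_gap(xs) >= -1e-12

# Equal entries give equality (again up to rounding).
assert abs(am_gm_gap([2.5, 2.5, 2.5, 2.5])) < 1e-12
```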

Geometric interpretation

In two dimensions, $2x_1 + 2x_2$ is the perimeter of a rectangle with sides of length $x_1$ and $x_2$. Similarly, $4\sqrt{x_1x_2}$ is the perimeter of a square with the same area as that rectangle. Thus for n = 2 the AM–GM inequality states that among all rectangles of equal area, only the square has the smallest perimeter.

The full inequality is an extension of this idea to n dimensions. Every vertex of an n-dimensional box is connected to n edges. If these edges' lengths are $x_1, x_2, \ldots, x_n$, then $x_1 + x_2 + \cdots + x_n$ is the total length of edges incident to the vertex. There are $2^n$ vertices, so summing over all vertices gives $2^n(x_1 + x_2 + \cdots + x_n)$. However, each edge meets two vertices, so every edge is counted twice. Dividing by 2, there are $2^{n-1}n$ edges. There are equally many edges of each length and n lengths. Hence there are $2^{n-1}$ edges of each length, and the total edge length is $2^{n-1}(x_1 + x_2 + \cdots + x_n)$. On the other hand,

$$2^{n-1} n \sqrt[n]{x_1 x_2 \cdots x_n}$$

is the total edge length of an n-dimensional cube of equal volume. Such a cube also has $2^{n-1}n$ edges, each of length $\sqrt[n]{x_1 x_2 \cdots x_n}$. Since the inequality says

$$\frac{x_1 + x_2 + \cdots + x_n}{n} \ge \sqrt[n]{x_1 x_2 \cdots x_n},$$

we get

$$2^{n-1}(x_1 + x_2 + \cdots + x_n) \ge 2^{n-1} n \sqrt[n]{x_1 x_2 \cdots x_n}\,,$$

with equality if and only if $x_1 = x_2 = \cdots = x_n$.

Thus the AM–GM inequality states that only the n-cube has the smallest sum of lengths of edges connected to each vertex amongst all n-dimensional boxes with the same volume.[1]
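The edge-counting argument can be confirmed by brute force for small n. The Python sketch below uses illustrative function names. It represents the vertices of an n-dimensional box as 0/1 tuples and treats an edge in direction i as having length $x_i$. It then compares the total edge length $2^{n-1}(x_1 + \cdots + x_n)$ with that of the cube of equal volume.

```python
import itertools
import math

def box_edge_total(lengths):
    """Total edge length of an n-dimensional box, by brute-force enumeration.

    Vertices are 0/1 tuples; an edge joins two vertices that differ in exactly
    one coordinate i, and that edge has length lengths[i].
    """
    n = len(lengths)
    total = 0.0
    for v in itertools.product((0, 1), repeat=n):
        for i in range(n):
            if v[i] == 0:  # count each edge once, from its endpoint with a 0 in slot i
                total += lengths[i]
    return total

def cube_edge_total(lengths):
    """Total edge length of the n-cube with the same volume as the box."""
    n = len(lengths)
    side = math.prod(lengths) ** (1.0 / n)
    return 2 ** (n - 1) * n * side

box = [1.0, 2.0, 4.0]
print(box_edge_total(box))   # 28.0, i.e. 2**2 * (1 + 2 + 4)
print(cube_edge_total(box))  # about 24.0, i.e. 2**2 * 3 * 2
```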

Example application

Consider the function

$$f(x,y,z) = \frac{x}{y} + \sqrt{\frac{y}{z}} + \sqrt[3]{\frac{z}{x}}$$

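for all positive real numbers x, y and z. Suppose we wish to find the minimal value of this function. First we rewrite it a bit:

$$\begin{align}
f(x,y,z)
&= 6 \cdot \frac{ \frac{x}{y} + \frac{1}{2} \sqrt{\frac{y}{z}} + \frac{1}{2} \sqrt{\frac{y}{z}} + \frac{1}{3} \sqrt[3]{\frac{z}{x}} + \frac{1}{3} \sqrt[3]{\frac{z}{x}} + \frac{1}{3} \sqrt[3]{\frac{z}{x}} }{6}\\
&= 6\cdot\frac{x_1+x_2+x_3+x_4+x_5+x_6}{6}
\end{align}$$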

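with

$$x_1=\frac{x}{y},\qquad x_2=x_3=\frac{1}{2} \sqrt{\frac{y}{z}},\qquad x_4=x_5=x_6=\frac{1}{3} \sqrt[3]{\frac{z}{x}}.$$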

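Applying the AM–GM inequality for n = 6, we get

$$\begin{align}
f(x,y,z)
&\ge 6 \cdot \sqrt[6]{ \frac{x}{y} \cdot \frac{1}{2} \sqrt{\frac{y}{z}} \cdot \frac{1}{2} \sqrt{\frac{y}{z}} \cdot \frac{1}{3} \sqrt[3]{\frac{z}{x}} \cdot \frac{1}{3} \sqrt[3]{\frac{z}{x}} \cdot \frac{1}{3} \sqrt[3]{\frac{z}{x}} }\\
&= 6 \cdot \sqrt[6]{ \frac{1}{2 \cdot 2 \cdot 3 \cdot 3 \cdot 3} \frac{x}{y} \frac{y}{z} \frac{z}{x} }\\
&= 2^{2/3} \cdot 3^{1/2}.
\end{align}$$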

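Further, we know that the two sides are equal exactly when all the terms of the mean are equal:

$$f(x,y,z) = 2^{2/3} \cdot 3^{1/2} \quad \text{when} \quad \frac{x}{y} = \frac{1}{2} \sqrt{\frac{y}{z}} = \frac{1}{3} \sqrt[3]{\frac{z}{x}}.$$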

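All the points (x, y, z) satisfying these conditions lie on a half-line starting at the origin and are given by

$$(x,y,z)=\Bigl(x,\ \sqrt[3]{2}\sqrt{3}\,x,\ \frac{3\sqrt{3}}{2}\,x\Bigr)\quad\text{with}\quad x>0.$$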
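The following Python sketch checks this example numerically. It evaluates f along the half-line given above and at random positive points. The sample ranges, the random seed and the tolerance are arbitrary choices.

```python
import math
import random

def f(x, y, z):
    """The example function x/y + sqrt(y/z) + cbrt(z/x) for positive x, y, z."""
    return x / y + math.sqrt(y / z) + (z / x) ** (1.0 / 3.0)

f_min = 2 ** (2 / 3) * math.sqrt(3)  # the bound from AM-GM, about 2.7495

def minimizer(x):
    """A point on the half-line of minimizers, parametrized by x > 0."""
    return x, 2 ** (1 / 3) * math.sqrt(3) * x, 1.5 * math.sqrt(3) * x

# Along the half-line, f attains the bound (up to rounding).
for x in (0.1, 1.0, 42.0):
    assert math.isclose(f(*minimizer(x)), f_min, rel_tol=1e-12)

# At random positive points, f never drops below the bound.
random.seed(1)
for _ in range(10_000):
    x, y, z = (random.uniform(0.01, 100.0) for _ in range(3))
    assert f(x, y, z) >= f_min - 1e-12
```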

Practical applications

An important practical application in financial mathematics is computing the rate of return. Applying the inequality to the yearly growth factors 1 + r shows that the annualized return, computed via the geometric mean, is less than or equal to the average annual return, computed via the arithmetic mean. The two are equal only if all returns are equal. This is important in analyzing investments, as the average return overstates the cumulative effect.
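The Python sketch below compares the two kinds of return on hypothetical numbers. It works with the growth factors $1 + r$, which are non-negative as long as no yearly loss exceeds 100%. The function names are illustrative and not taken from any finance library.

```python
import math

def average_return(returns):
    """Arithmetic mean of the per-period returns (e.g. 0.10 for +10%)."""
    return math.fsum(returns) / len(returns)

def annualized_return(returns):
    """Geometric-mean return: the constant rate giving the same cumulative growth."""
    growth = math.prod(1.0 + r for r in returns)
    return growth ** (1.0 / len(returns)) - 1.0

returns = [0.50, -0.50]             # +50% one year, -50% the next
print(average_return(returns))      # 0.0
print(annualized_return(returns))   # about -0.134
```

With returns of +50% and −50%, the average return is 0. Yet the investment ends at 0.75 of its starting value, an annualized return of about −13.4%.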

Proofs of the AM–GM inequality

There are several ways to prove the AM–GM inequality; for example, it can be inferred from Jensen's inequality, using the concave function ln(x). It can also be proven using the rearrangement inequality. Considering length and required prerequisites, the elementary proof by induction given below is probably the best recommendation for first reading.

Idea of the first two proofs

We have to show that

$$\frac{x_1+x_2+\cdots+x_n}{n} \ge \sqrt[n]{x_1x_2 \cdots x_n}$$

with equality only when all numbers are equal. Suppose $x_i \ne x_j$, and replace both $x_i$ and $x_j$ by $(x_i + x_j)/2$. This leaves the arithmetic mean on the left-hand side unchanged but increases the geometric mean on the right-hand side, because

$$\Bigl(\frac{x_i+x_j}{2}\Bigr)^2 - x_ix_j = \Bigl(\frac{x_i-x_j}{2}\Bigr)^2 > 0.$$

Thus the right-hand side will be largest when all the $x_i$ are equal to the arithmetic mean

$$\alpha = \frac{x_1+x_2+\cdots+x_n}{n},$$

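and since this is then the largest value of the right-hand side of the expression, we have

$$\frac{x_1+x_2+\cdots+x_n}{n}=\alpha=\sqrt[n]{\alpha\alpha\cdots\alpha}\ge\sqrt[n]{x_1x_2\cdots x_n}.$$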

This is a valid proof for the case n = 2. For n ≥ 3, however, iteratively taking pairwise averages may fail to produce n equal numbers. An example of this case is $x_1 = x_2 \ne x_3$. Averaging two different numbers produces two equal numbers, but the third one is still different. Therefore, we never actually get an inequality involving the geometric mean of three equal numbers.

Hence, an additional trick or a modified argument is necessary to turn the above idea into a valid proof for the case n ≥ 3.
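The failure of plain pairwise averaging for n = 3 can be observed directly. In the Python sketch below, a hypothetical helper `smooth_once` replaces the largest and smallest entries by their average, using exact `Fraction` arithmetic. The sum stays fixed and the product never decreases. Starting from 1, 1, 4, the list always keeps two equal entries and one different entry, so it never becomes constant after finitely many steps.

```python
from fractions import Fraction
import math

def smooth_once(xs):
    """Replace the largest and smallest entries by their average.

    The sum (hence the arithmetic mean) is unchanged, and the product can only
    grow, since ((a + b) / 2) ** 2 - a * b == ((a - b) / 2) ** 2 >= 0.
    """
    xs = list(xs)
    i, j = xs.index(max(xs)), xs.index(min(xs))
    if i == j:  # max == min: all entries already equal
        return xs
    avg = (xs[i] + xs[j]) / 2
    xs[i] = xs[j] = avg
    return xs

xs = [Fraction(1), Fraction(1), Fraction(4)]
for step in range(6):
    new = smooth_once(xs)
    assert sum(new) == sum(xs)              # arithmetic mean preserved exactly
    assert math.prod(new) >= math.prod(xs)  # geometric mean never decreases
    xs = new
    print(step, [str(v) for v in xs])
```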

Proof by induction

With the arithmetic mean

$$\alpha = \frac{x_1 + \cdots + x_n}{n}$$

of the non-negative real numbers $x_1, \ldots, x_n$, the AM–GM statement is equivalent to

$$\alpha^n \ge x_1 x_2 \cdots x_n$$

with equality if and only if $\alpha = x_i$ for all $i \in \{1, \ldots, n\}$.

For the following proof we apply mathematical induction and only well-known rules of arithmetic.

Induction basis: For n = 1 the statement is true with equality.

Induction hypothesis: Suppose that the AM–GM statement holds for all choices of n non-negative real numbers.

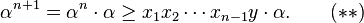

Induction step: Consider n + 1 non-negative real numbers $x_1, \ldots, x_{n+1}$. Their arithmetic mean α satisfies

$$(n+1)\alpha = x_1 + \cdots + x_n + x_{n+1}.$$

If all numbers are equal to α, then we have equality in the AM–GM statement and we are done. Otherwise we may find one number that is greater than α and one that is smaller than α. After relabeling if necessary, say $x_n > \alpha$ and $x_{n+1} < \alpha$. Then

$$(x_n - \alpha)(\alpha - x_{n+1}) > 0. \qquad (*)$$

Now consider the n numbers $x_1, \ldots, x_{n-1}, y$ with

$$y := x_n + x_{n+1} - \alpha \ge x_n - \alpha > 0,$$

which are also non-negative. Since

$$n\alpha = x_1 + \cdots + x_{n-1} + \underbrace{x_n + x_{n+1} - \alpha}_{=\,y},$$

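α is also the arithmetic mean of the n numbers $x_1, \ldots, x_{n-1}, y$, and the induction hypothesis implies

$$\alpha^{n+1} = \alpha^n \cdot \alpha \ge x_1 x_2 \cdots x_{n-1}\, y \cdot \alpha. \qquad (**)$$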

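Due to (*) we know that

$$y\alpha - x_n x_{n+1} = (x_n + x_{n+1} - \alpha)\alpha - x_n x_{n+1} = (x_n - \alpha)(\alpha - x_{n+1}) > 0,$$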

hence

$$y\alpha > x_n x_{n+1}, \qquad (***)$$

in particular α > 0. Therefore, if at least one of the numbers $x_1, \ldots, x_{n-1}$ is zero, then we already have strict inequality in (**). Otherwise the right-hand side of (**) is positive. In that case, the estimate (***) shows that the right-hand side of (**) is strictly greater than $x_1 x_2 \cdots x_{n+1}$. Thus, in both cases we get

$$\alpha^{n+1} > x_1 x_2 \cdots x_{n-1} x_n x_{n+1},$$

which completes the proof.
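One reduction step of this induction can be traced on concrete numbers. The Python sketch below is illustrative: the helper `induction_step` and the sample values are assumptions, not part of the proof. It replaces one entry above α and one below α by the single number y. It then checks, in exact arithmetic, that the mean is still α and that the identity behind (*) and (***) holds.

```python
from fractions import Fraction

def induction_step(xs):
    """One reduction step from the induction proof, on exact rationals.

    Given n + 1 non-negative numbers not all equal to their mean alpha, take one
    entry above alpha (playing x_n) and one below alpha (playing x_{n+1}), and
    replace that pair by the single number y = x_n + x_{n+1} - alpha.
    Returns (alpha, the n reduced numbers, x_n, x_{n+1}, y).
    """
    alpha = sum(xs) / Fraction(len(xs))
    big = next(x for x in xs if x > alpha)
    small = next(x for x in xs if x < alpha)
    rest = list(xs)
    rest.remove(big)
    rest.remove(small)
    y = big + small - alpha
    return alpha, rest + [y], big, small, y

xs = [Fraction(v) for v in (2, 7, 3, 0, 8)]
alpha, reduced, xn, xn1, y = induction_step(xs)
# The n reduced numbers still have arithmetic mean alpha.
assert sum(reduced) / len(reduced) == alpha
# Identity behind (*) and (***): y*alpha - x_n*x_{n+1} = (x_n - alpha)(alpha - x_{n+1}) > 0.
assert y * alpha - xn * xn1 == (xn - alpha) * (alpha - xn1) > 0
```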

Proof by Cauchy using forward–backward induction

The following proof by cases relies directly on well-known rules of arithmetic but employs the rarely used technique of forward–backward induction. It is essentially due to Augustin Louis Cauchy and can be found in his Cours d'analyse.[2]

The case where all the terms are equal

If all the terms are equal:

$$x_1 = x_2 = \cdots = x_n,$$

then their sum is $nx_1$, so their arithmetic mean is $x_1$. Their product is $x_1^n$, so their geometric mean is also $x_1$. Therefore, the arithmetic mean and geometric mean are equal, as desired.

The case where not all the terms are equal

It remains to show that if not all the terms are equal, then the arithmetic mean is greater than the geometric mean. Clearly, this is only possible when n > 1.

This case is significantly more complex, and we divide it into subcases.

The subcase where n = 2

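If n = 2, then we have two terms, $x_1$ and $x_2$, and since (by our assumption) not all terms are equal, we have:

$$\begin{align}
x_1 &\ne x_2 \\
x_1 - x_2 &\ne 0 \\
(x_1 - x_2)^2 &> 0 \\
x_1^2 - 2x_1x_2 + x_2^2 &> 0 \\
x_1^2 + 2x_1x_2 + x_2^2 &> 4x_1x_2 \\
(x_1 + x_2)^2 &> 4x_1x_2 \\
\Bigl(\frac{x_1 + x_2}{2}\Bigr)^2 &> x_1x_2,
\end{align}$$

hence, taking square roots of these non-negative quantities,

$$\frac{x_1 + x_2}{2} > \sqrt{x_1x_2}$$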

as desired.

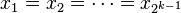

The subcase where $n = 2^k$

Consider the case where $n = 2^k$, where k is a positive integer. We proceed by mathematical induction.

In the base case, k = 1, so n = 2. We have already shown that the inequality holds when n = 2, so we are done.

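Now, suppose that for a given k > 1, we have already shown that the inequality holds for $n = 2^{k-1}$, and we wish to show that it holds for $n = 2^k$. To do so, we apply the inequality twice for $2^{k-1}$ numbers and once for 2 numbers to obtain:

$$\begin{align}
\frac{x_1 + x_2 + \cdots + x_{2^k}}{2^k} & {} =\frac{\frac{x_1 + x_2 + \cdots + x_{2^{k-1}}}{2^{k-1}} + \frac{x_{2^{k-1} + 1} + x_{2^{k-1} + 2} + \cdots + x_{2^k}}{2^{k-1}}}{2} \\[7pt]
& \ge \frac{\sqrt[2^{k-1}]{x_1 x_2 \cdots x_{2^{k-1}}} + \sqrt[2^{k-1}]{x_{2^{k-1} + 1} x_{2^{k-1} + 2} \cdots x_{2^k}}}{2} \\[7pt]
& \ge \sqrt{\sqrt[2^{k-1}]{x_1 x_2 \cdots x_{2^{k-1}}} \sqrt[2^{k-1}]{x_{2^{k-1} + 1} x_{2^{k-1} + 2} \cdots x_{2^k}}} \\[7pt]
& = \sqrt[2^k]{x_1 x_2 \cdots x_{2^k}}
\end{align}$$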

where in the first inequality, the two sides are equal only if

$$x_1 = x_2 = \cdots = x_{2^{k-1}}$$

and

$$x_{2^{k-1}+1} = x_{2^{k-1}+2} = \cdots = x_{2^k}$$

(in which case the first arithmetic mean and first geometric mean are both equal to $x_1$, and similarly with the second arithmetic mean and second geometric mean); and in the second inequality, the two sides are only equal if the two geometric means are equal. Since not all $2^k$ numbers are equal, it is not possible for both inequalities to be equalities, so we know that:

$$\frac{x_1 + x_2 + \cdots + x_{2^k}}{2^k} > \sqrt[2^k]{x_1 x_2 \cdots x_{2^k}}$$

as desired.
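Cauchy's $2^k$ step is naturally recursive. The list is split in half, the statement is applied to each half, and then the n = 2 case is applied to the two half-means. The Python sketch below mirrors that chain and asserts each intermediate inequality, with a small tolerance for rounding. The helper `cauchy_check` is hypothetical, and the list length must be a power of 2.

```python
import math

def cauchy_check(xs, tol=1e-12):
    """Return (AM, GM) of a list whose length is a power of 2, asserting along
    the way each inequality used in the 2^k step of Cauchy's argument."""
    if len(xs) == 1:
        return xs[0], xs[0]
    half = len(xs) // 2
    am_l, gm_l = cauchy_check(xs[:half], tol)
    am_r, gm_r = cauchy_check(xs[half:], tol)
    am = (am_l + am_r) / 2       # mean of the two half-means
    mid = (gm_l + gm_r) / 2      # after applying the statement to each half
    gm = math.sqrt(gm_l * gm_r)  # after applying the n = 2 case
    assert am >= mid - tol and mid >= gm - tol
    return am, gm

am, gm = cauchy_check([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])
print(am, gm)
```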

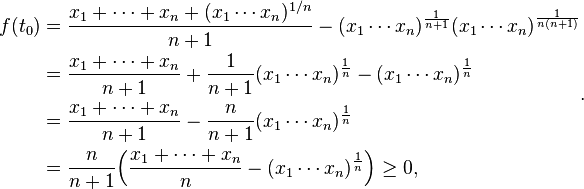

The subcase where $n < 2^k$

If n is not a natural power of 2, then it is certainly less than some natural power of 2, since the sequence $2, 4, 8, \ldots, 2^k, \ldots$ is unbounded above. Therefore, without loss of generality, let m be some natural power of 2 that is greater than n.

So, if we have n terms, then let us denote their arithmetic mean by α, and expand our list of terms thus:

$$x_{n+1} = x_{n+2} = \cdots = x_m = \alpha.$$

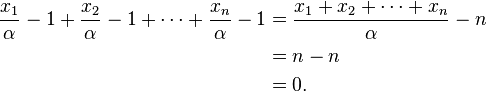

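We then have:

$$\begin{align}
\alpha & = \frac{x_1 + x_2 + \cdots + x_n}{n} \\[6pt]
& = \frac{\frac{m}{n} \left( x_1 + x_2 + \cdots + x_n \right)}{m} \\[6pt]
& = \frac{x_1 + x_2 + \cdots + x_n + \frac{m-n}{n} \left( x_1 + x_2 + \cdots + x_n \right)}{m} \\[6pt]
& = \frac{x_1 + x_2 + \cdots + x_n + \left( m-n \right) \alpha}{m} \\[6pt]
& = \frac{x_1 + x_2 + \cdots + x_n + x_{n+1} + \cdots + x_m}{m} \\[6pt]
& > \sqrt[m]{x_1 x_2 \cdots x_n x_{n+1} \cdots x_m} \\[6pt]
& = \sqrt[m]{x_1 x_2 \cdots x_n \alpha^{m-n}}\,,
\end{align}$$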

so

$$\alpha^m > x_1 x_2 \cdots x_n \alpha^{m-n}$$

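and, dividing by $\alpha^{m-n}$ (note that α > 0, since the terms are non-negative and not all equal) and taking n-th roots,

$$\alpha > \sqrt[n]{x_1 x_2 \cdots x_n}$$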

as desired.
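The padding step can be illustrated as follows. This Python sketch uses a hypothetical helper name. It pads a list with copies of its mean α up to the smallest power of 2 exceeding n, which is one valid choice of m. It then checks that the mean is unchanged and that the resulting inequality reduces to $\alpha^n \ge x_1 x_2 \cdots x_n$.

```python
import math

def padded_to_power_of_two(xs):
    """Pad xs with copies of its arithmetic mean alpha up to the smallest power
    of 2, m, with m > n, as in Cauchy's backward step. Returns (alpha, padded)."""
    n = len(xs)
    alpha = math.fsum(xs) / n
    m = 2
    while m <= n:  # smallest power of 2 strictly greater than n
        m *= 2
    return alpha, list(xs) + [alpha] * (m - n)

xs = [1.0, 2.0, 6.0]
alpha, padded = padded_to_power_of_two(xs)
n, m = len(xs), len(padded)
# Padding with alpha leaves the arithmetic mean unchanged ...
assert math.isclose(math.fsum(padded) / m, alpha)
# ... and the power-of-2 case gives alpha**m >= prod(xs) * alpha**(m - n),
# which after dividing by alpha**(m - n) is alpha**n >= prod(xs).
assert alpha ** m >= math.prod(xs) * alpha ** (m - n)
assert alpha ** n >= math.prod(xs)
```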

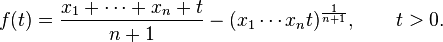

Proof by induction using basic calculus

The following proof uses mathematical induction and some basic differential calculus.

Induction basis: For n = 1 the statement is true with equality.

Induction hypothesis: Suppose that the AM–GM statement holds for all choices of n non-negative real numbers.

Induction step: In order to prove the statement for n + 1 non-negative real numbers $x_1, \ldots, x_n, x_{n+1}$, we need to prove that

$$\frac{x_1 + \cdots + x_n + x_{n+1}}{n+1} - \sqrt[n+1]{x_1 \cdots x_n x_{n+1}} \ge 0$$

with equality only if all the n + 1 numbers are equal.

If all numbers are zero, the inequality holds with equality. If some but not all numbers are zero, we have strict inequality. Therefore, we may assume in the following that all n + 1 numbers are positive.

We consider the last number $x_{n+1}$ as a variable and define the function

$$f(t) = \frac{x_1 + \cdots + x_n + t}{n+1} - \sqrt[n+1]{x_1 \cdots x_n t}, \qquad t > 0.$$

Proving the induction step is equivalent to showing that f(t) ≥ 0 for all t > 0, with f(t) = 0 only if $x_1, \ldots, x_n$ and t are all equal. This can be done by analyzing the critical points of f using some basic calculus.

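The first derivative of f is given by

$$f'(t) = \frac{1}{n+1} - \frac{1}{n+1}\sqrt[n+1]{x_1 \cdots x_n}\; t^{-\frac{n}{n+1}}, \qquad t > 0.$$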

A critical point $t_0$ has to satisfy $f'(t_0) = 0$, which means

$$\sqrt[n+1]{x_1 \cdots x_n}\; t_0^{-\frac{n}{n+1}} = 1.$$

After a small rearrangement we get

$$t_0^{\frac{n}{n+1}} = \sqrt[n+1]{x_1 \cdots x_n},$$

and finally

$$t_0 = \sqrt[n]{x_1 \cdots x_n},$$

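which is the geometric mean of $x_1, \ldots, x_n$. This is the only critical point of f. Since

$$f''(t) = \frac{n}{(n+1)^2}\sqrt[n+1]{x_1 \cdots x_n}\; t^{-\frac{2n+1}{n+1}} > 0$$

for all t > 0, the function f is strictly convex and has a strict global minimum at $t_0$. Next we compute the value of the function at this global minimum:

$$\begin{align}
f\bigl(\sqrt[n]{x_1 \cdots x_n}\bigr) &= \frac{x_1 + \cdots + x_n + \sqrt[n]{x_1 \cdots x_n}}{n+1} - \sqrt[n+1]{x_1 \cdots x_n}\; \sqrt[n+1]{\sqrt[n]{x_1 \cdots x_n}} \\
&= \frac{x_1 + \cdots + x_n}{n+1} + \frac{1}{n+1}\sqrt[n]{x_1 \cdots x_n} - \sqrt[n]{x_1 \cdots x_n} \\
&= \frac{x_1 + \cdots + x_n}{n+1} - \frac{n}{n+1}\sqrt[n]{x_1 \cdots x_n} \\
&= \frac{n}{n+1}\Bigl(\frac{x_1 + \cdots + x_n}{n} - \sqrt[n]{x_1 \cdots x_n}\Bigr) \\
&\ge 0,
\end{align}$$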

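where the final inequality holds due to the induction hypothesis. The hypothesis also says that we can have equality only when $x_1, \ldots, x_n$ are all equal, and in this case their geometric mean $t_0$ has the same value. Hence, unless $x_1, \ldots, x_n, x_{n+1}$ are all equal, we have $f(x_{n+1}) > 0$. Two cases arise:

- If $x_1, \ldots, x_n$ are not all equal, then $f(x_{n+1}) \ge f(t_0) > 0$.
- If they are all equal but $x_{n+1} \ne t_0$, then $f(x_{n+1}) > f(t_0) = 0$ because the minimum is strict.

This completes the proof.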
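The calculus argument can be checked numerically for one sample list. In the Python sketch below, the list, the search grid and the tolerances are arbitrary assumptions. It evaluates f at the predicted minimizer $t_0$ and compares the value with $\frac{n}{n+1}$ times (AM − GM) from the computation above. It also verifies that a coarse grid search finds nothing lower.

```python
import math

def f(t, xs):
    """f(t) = (x_1 + ... + x_n + t)/(n + 1) - (x_1 ... x_n t)^(1/(n + 1)) for t > 0."""
    n = len(xs)
    return (math.fsum(xs) + t) / (n + 1) - (math.prod(xs) * t) ** (1.0 / (n + 1))

xs = [1.0, 3.0, 9.0]                       # positive, not all equal
n = len(xs)
t0 = math.prod(xs) ** (1.0 / n)            # predicted minimizer: the GM of xs
am = math.fsum(xs) / n
# Value at the minimum predicted by the derivation: n/(n+1) * (AM - GM).
assert math.isclose(f(t0, xs), n / (n + 1) * (am - t0))
# A coarse grid search over t in (0, 20] should not find anything below f(t0).
grid = [0.01 * k for k in range(1, 2001)]
assert min(f(t, xs) for t in grid) >= f(t0, xs) - 1e-12
print(t0, f(t0, xs))
```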

This technique can be used in the same manner to prove the generalized AM–GM inequality and the Cauchy–Schwarz inequality in Euclidean space $\mathbb{R}^n$.

Proof by Pólya using the exponential function

George Pólya provided a proof similar to what follows. Let $f(x) = e^{x-1} - x$ for all real x, with first derivative $f'(x) = e^{x-1} - 1$ and second derivative $f''(x) = e^{x-1}$. Observe that f(1) = 0, f′(1) = 0 and f′′(x) > 0 for all real x. Hence f is strictly convex with its absolute minimum at x = 1, and so $x \le e^{x-1}$ for all real x, with equality only for x = 1.

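Consider a list of non-negative real numbers $x_1, x_2, \ldots, x_n$. If they are all zero, then the AM–GM inequality holds with equality. Hence we may assume in what follows that their arithmetic mean α is positive. By n-fold application of the above inequality, we obtain that

$$\begin{align}
\frac{x_1}{\alpha}\frac{x_2}{\alpha}\cdots\frac{x_n}{\alpha} &\le e^{\frac{x_1}{\alpha}-1} e^{\frac{x_2}{\alpha}-1} \cdots e^{\frac{x_n}{\alpha}-1} \\
&= \exp\Bigl(\frac{x_1}{\alpha}-1+\frac{x_2}{\alpha}-1+\cdots+\frac{x_n}{\alpha}-1\Bigr), \qquad (*)
\end{align}$$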

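with equality if and only if $x_i = \alpha$ for every $i \in \{1, \ldots, n\}$. The argument of the exponential function can be simplified:

$$\begin{align}
\frac{x_1}{\alpha}-1+\frac{x_2}{\alpha}-1+\cdots+\frac{x_n}{\alpha}-1 &= \frac{x_1+x_2+\cdots+x_n}{\alpha} - n \\
&= \frac{n\alpha}{\alpha} - n \\
&= 0.
\end{align}$$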

Returning to (*),

$$\frac{x_1x_2\cdots x_n}{\alpha^n} \le e^0 = 1,$$

which produces $x_1 x_2 \cdots x_n \le \alpha^n$, hence the result[3]

$$\sqrt[n]{x_1 x_2 \cdots x_n} \le \alpha.$$
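The following is a numerical walk-through of Pólya's argument as a Python sketch, using a hypothetical helper `polya_check`. It checks $x \le e^{x-1}$ for each ratio $x_i/\alpha$ and confirms that the exponents sum to zero. It returns the normalized product, which should not exceed 1. The tolerances are assumptions that absorb rounding.

```python
import math
import random

def polya_check(xs):
    """Follow Polya's argument numerically for non-negative xs with positive mean.

    Each x_i/alpha <= exp(x_i/alpha - 1); multiplying these n inequalities and
    using sum(x_i/alpha - 1) == 0 gives prod(x_i/alpha) <= exp(0) == 1.
    Returns prod(x_i / alpha).
    """
    alpha = math.fsum(xs) / len(xs)
    ratios = [x / alpha for x in xs]
    for r in ratios:
        assert r <= math.exp(r - 1) + 1e-15        # the inequality x <= e^(x-1)
    exponent = math.fsum(r - 1 for r in ratios)    # zero up to rounding
    assert abs(exponent) < 1e-9
    return math.prod(ratios)

random.seed(2)
for _ in range(1000):
    xs = [random.uniform(0.0, 5.0) for _ in range(random.randint(1, 6))]
    assert polya_check(xs) <= 1.0 + 1e-12
```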

Generalizations

Weighted AM–GM inequality

There is a similar inequality for the weighted arithmetic mean and weighted geometric mean. Specifically, let the non-negative numbers $x_1, x_2, \ldots, x_n$ and the non-negative weights $w_1, w_2, \ldots, w_n$ be given. Set $w = w_1 + w_2 + \cdots + w_n$. If w > 0, then the inequality

$$\frac{w_1 x_1 + w_2 x_2 + \cdots + w_n x_n}{w} \ge \sqrt[w]{x_1^{w_1} x_2^{w_2} \cdots x_n^{w_n}}$$

holds with equality if and only if all the $x_k$ with $w_k > 0$ are equal. Here the convention $0^0 = 1$ is used.

If all $w_k = 1$, this reduces to the above inequality of arithmetic and geometric means.
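The following is a minimal Python sketch of the two weighted means; the helper `weighted_means` is hypothetical. Terms with zero weight are skipped, which has the same effect as the convention $0^0 = 1$, and the total weight is required to be positive.

```python
import math

def weighted_means(xs, ws):
    """Weighted arithmetic and geometric means of non-negative xs with non-negative
    weights ws and total weight w > 0. Terms with zero weight are skipped, which
    matches the convention 0**0 == 1."""
    w = math.fsum(ws)
    if w <= 0:
        raise ValueError("total weight must be positive")
    am = math.fsum(wk * xk for xk, wk in zip(xs, ws)) / w
    gm = 1.0
    for xk, wk in zip(xs, ws):
        if wk > 0:
            gm *= xk ** (wk / w)  # x_k^(w_k / w): the w-th root folded into the exponent
    return am, gm

am, gm = weighted_means([1.0, 4.0, 0.0], [1.0, 3.0, 0.0])
print(am, gm)
```

Here the weighted arithmetic mean is 3.25 and the weighted geometric mean is $4^{3/4} \approx 2.83$. The third term has weight 0 and contributes nothing to either mean.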

Proof using Jensen's inequality

Using the finite form of Jensen's inequality for the natural logarithm, we can prove the inequality between the weighted arithmetic mean and the weighted geometric mean stated above.

Since an $x_k$ with weight $w_k = 0$ has no influence on the inequality, we may assume in the following that all weights are positive. If all $x_k$ are equal, then equality holds. Therefore, it remains to prove strict inequality if they are not all equal, which we will assume in the following, too. If at least one $x_k$ is zero (but not all), then the weighted geometric mean is zero, while the weighted arithmetic mean is positive, hence strict inequality holds. Therefore, we may assume also that all $x_k$ are positive.

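Since the natural logarithm is strictly concave, the finite form of Jensen's inequality and the functional equations of the natural logarithm imply

$$\begin{align}
\ln\Bigl(\frac{w_1x_1+\cdots+w_nx_n}{w}\Bigr) & > \frac{w_1}{w}\ln x_1+\cdots+\frac{w_n}{w}\ln x_n \\
& = \ln \sqrt[w]{x_1^{w_1} x_2^{w_2} \cdots x_n^{w_n}}.
\end{align}$$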

Since the natural logarithm is strictly increasing,

$$\frac{w_1x_1+\cdots+w_nx_n}{w} > \sqrt[w]{x_1^{w_1} x_2^{w_2} \cdots x_n^{w_n}}.$$

Other generalizations

Other generalizations of the inequality of arithmetic and geometric means include:

See also

Notes

References

- Steele, J. Michael (2004). The Cauchy-Schwarz Master Class: An Introduction to the Art of Mathematical Inequalities. MAA Problem Books Series. Cambridge University Press. ISBN 978-0-521-54677-5. OCLC 54079548.

- Cauchy, Augustin-Louis (1821). Cours d'analyse de l'École Royale Polytechnique, première partie, Analyse algébrique, Paris. The proof of the inequality of arithmetic and geometric means can be found on pages 457ff.

- Arnold, Denise; Arnold, Graham (1993). Four unit mathematics. Hodder Arnold H&S. p. 242. ISBN 978-0-340-54335-1. OCLC 38328013.

External links

- Arthur Lohwater (1982). "Introduction to Inequalities". Online e-book in PDF format.
