Vector space model

The vector space model, or term vector model, is an algebraic model for representing text documents (and, more generally, any objects) as vectors of identifiers such as index terms. It is used in information filtering, information retrieval, indexing, and relevancy ranking. Its first use was in the SMART Information Retrieval System.

Definitions

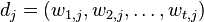

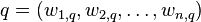

Documents and queries are represented as vectors.

Each dimension corresponds to a separate term. If a term occurs in the document, its value in the vector is non-zero. Several different ways of computing these values, also known as (term) weights, have been developed. One of the best known schemes is tf-idf weighting (see the example below).

The definition of term depends on the application. Typically terms are single words, keywords, or longer phrases. If words are chosen to be the terms, the dimensionality of the vector is the number of words in the vocabulary (the number of distinct words occurring in the corpus).

Vector operations can be used to compare documents with queries.
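As a minimal sketch of the representation described above, the following Python builds one dimension per distinct word and uses raw term counts as the weights. The function names (`build_vocabulary`, `to_vector`), the whitespace tokenization, and the toy corpus are assumptions made for illustration, not part of the model's definition.

```python
from collections import Counter

def build_vocabulary(documents):
    """Return the sorted list of distinct words occurring in the corpus."""
    return sorted({word for doc in documents for word in doc.lower().split()})

def to_vector(text, vocabulary):
    """Map a text to a vector of raw term counts, one dimension per term."""
    counts = Counter(text.lower().split())
    # Counter returns 0 for terms that do not occur in the text.
    return [counts[term] for term in vocabulary]

# Toy corpus (hypothetical data, for illustration only).
corpus = ["the cat sat on the mat", "the dog sat"]
vocabulary = build_vocabulary(corpus)
doc_vectors = [to_vector(doc, vocabulary) for doc in corpus]

# The query is represented in the same space as the documents.
query_vector = to_vector("cat mat", vocabulary)
```

Here the dimensionality of every vector equals the vocabulary size, and a term that does not occur in a text gets the value zero in that text's vector.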

Applications

Relevance rankings of documents in a keyword search can be calculated, using the assumptions of document similarities theory, by comparing the angle between each document vector and the query vector, where the query is represented as the same kind of vector as the documents. The smaller the angle, the more similar the document is to the query.

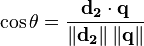

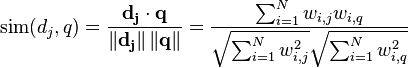

In practice, it is easier to calculate the cosine of the angle between the vectors, instead of the angle itself:

Where  is the intersection (i.e. the dot product) of the document (d2 in the figure to the right) and the query (q in the figure) vectors,

is the intersection (i.e. the dot product) of the document (d2 in the figure to the right) and the query (q in the figure) vectors,  is the norm of vector d2, and

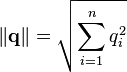

is the norm of vector d2, and  is the norm of vector q. The norm of a vector is calculated as such:

is the norm of vector q. The norm of a vector is calculated as such:

As all vectors under consideration by this model are elementwise nonnegative, a cosine value of zero means that the query and document vectors are orthogonal and have no match (i.e. no query term occurs in the document being considered). See cosine similarity for further information.
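The cosine comparison can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `cosine_similarity`, the example vectors, and the choice to return 0.0 for an all-zero vector are assumptions, not something the model prescribes.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # assumption: an all-zero vector matches nothing
    return dot / (norm_a * norm_b)

# Hypothetical three-term vectors.
q = [1, 1, 0]
d1 = [2, 2, 0]  # points in the same direction as q
d2 = [0, 0, 3]  # shares no term with q (orthogonal)
d3 = [1, 0, 1]  # shares one term with q

sim_d1 = cosine_similarity(q, d1)
sim_d2 = cosine_similarity(q, d2)
sim_d3 = cosine_similarity(q, d3)
```

With nonnegative weights the result lies between 0 and 1. A document pointing in the same direction as the query scores close to 1 regardless of its length, and a document sharing no term with the query scores 0.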

Example: tf-idf weights

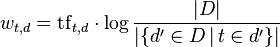

In the classic vector space model proposed by Salton, Wong and Yang [1] the term-specific weights in the document vectors are products of local and global parameters. The model is known as term frequency-inverse document frequency model. The weight vector for document d is ![\mathbf{v}_d = [w_{1,d}, w_{2,d}, \ldots, w_{N,d}]^T](../I/m/3c90fef2940856f7410b65d07e31fd4f.png) , where

, where

and

-

is term frequency of term t in document d (a local parameter)

is term frequency of term t in document d (a local parameter) -

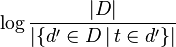

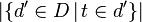

is inverse document frequency (a global parameter).

is inverse document frequency (a global parameter).  is the total number of documents in the document set;

is the total number of documents in the document set;  is the number of documents containing the term t.

is the number of documents containing the term t.

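Using the cosine, the similarity between document $d_j$ and query q can be calculated as:

$$\mathrm{sim}(d_j, q) = \frac{\mathbf{d_j} \cdot \mathbf{q}}{\left\|\mathbf{d_j}\right\| \left\|\mathbf{q}\right\|} = \frac{\sum_{i=1}^{N} w_{i,j}\, w_{i,q}}{\sqrt{\sum_{i=1}^{N} w_{i,j}^2}\, \sqrt{\sum_{i=1}^{N} w_{i,q}^2}}$$

where $w_{i,j}$ and $w_{i,q}$ are the weights of term i in document $d_j$ and in the query q, respectively.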
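The following Python sketch puts the pieces of this example together: it computes tf-idf weights for a small corpus and ranks the documents against a query by cosine similarity. It is an illustration under stated assumptions, not a reference implementation: the names `tfidf_vectors` and `cosine`, the toy corpus, the use of raw counts as term frequency, the natural logarithm, and weighting the query with the corpus idf values are all choices made here.

```python
import math
from collections import Counter

def tfidf_vectors(token_lists):
    """Return (vocabulary, idf, vectors) with w[t,d] = tf[t,d] * log(|D| / df[t])."""
    n_docs = len(token_lists)
    # df[t]: number of documents that contain term t at least once.
    df = Counter(term for tokens in token_lists for term in set(tokens))
    vocabulary = sorted(df)
    idf = {t: math.log(n_docs / df[t]) for t in vocabulary}
    vectors = []
    for tokens in token_lists:
        tf = Counter(tokens)  # raw count of each term in this document
        vectors.append([tf[t] * idf[t] for t in vocabulary])
    return vocabulary, idf, vectors

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros (assumption)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Toy corpus (hypothetical data, for illustration only).
docs = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "cats and dogs".split(),
]
vocabulary, idf, doc_vectors = tfidf_vectors(docs)

# The query is weighted with the same idf values as the documents;
# query terms outside the vocabulary are ignored.
query_tf = Counter("cat mat".split())
query_vector = [query_tf[t] * idf[t] for t in vocabulary]

scores = [cosine(v, query_vector) for v in doc_vectors]
ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
```

Only the first document contains "cat" or "mat", so it is the only one with a non-zero score and it ranks first. Note that under this weighting a term occurring in every document gets an idf of log(1) = 0 and therefore contributes nothing to any similarity.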

Advantages

The vector space model has the following advantages over the Standard Boolean model:

- Simple model based on linear algebra

- Term weights not binary

- Allows computing a continuous degree of similarity between queries and documents

- Allows ranking documents according to their possible relevance

- Allows partial matching

Limitations

The vector space model has the following limitations:

- Long documents are poorly represented because they have poor similarity values (a small scalar product and a large dimensionality)

- Search keywords must precisely match document terms; word substrings might result in a "false positive match"

- Semantic sensitivity; documents with similar context but different term vocabulary won't be associated, resulting in a "false negative match".

- The order in which the terms appear in the document is lost in the vector space representation.

- Theoretically assumes terms are statistically independent.

- Weighting is intuitive but not very formal.

Many of these difficulties can, however, be overcome by the integration of various tools, including mathematical techniques such as singular value decomposition and lexical databases such as WordNet.
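Two of the limitations listed above, the loss of word order and the lack of matching between different vocabulary, can be seen directly in a small Python sketch. The helper `term_counts` and the example phrases are hypothetical and use plain whitespace tokenization.

```python
from collections import Counter

def term_counts(text):
    """Bag-of-words representation: term -> number of occurrences."""
    return Counter(text.lower().split())

# Word order is lost: both sentences map to the same term counts.
a = term_counts("dog bites man")
b = term_counts("man bites dog")
same_vector = (a == b)

# Different vocabulary, similar meaning: no shared dimension is non-zero,
# so any dot product (and hence the cosine) between the two is zero.
c = term_counts("cheap car")
d = term_counts("inexpensive automobile")
shared_terms = set(c) & set(d)
```

In this sketch `same_vector` is true and `shared_terms` is empty, which corresponds to the order-insensitivity and "false negative match" items in the list.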

Models based on and extending the vector space model

Models based on and extending the vector space model include:

- Generalized vector space model

- Latent semantic analysis

- Term Discrimination

- Rocchio Classification

- Random Indexing

Software that implements the vector space model

The following software packages may be of interest to those wishing to experiment with vector models and implement search services based upon them.

Free open source software

- Apache Lucene. Apache Lucene is a high-performance, full-featured text search engine library written entirely in Java.

- Semantic Vectors. Semantic vector indexes are created by applying a Random Projection algorithm (similar to Latent semantic analysis) to term-document matrices created using Apache Lucene.

- Gensim is a Python+NumPy framework for Vector Space modelling. It contains incremental (memory-efficient) algorithms for Tf–idf, Latent Semantic Indexing, Random Projections and Latent Dirichlet Allocation.

- Weka. Weka is a popular data mining package for Java including WordVectors and Bag Of Words models.

- Compressed vector space in C++ by Antonio Gulli

- Text to Matrix Generator (TMG). A MATLAB toolbox that can be used for various tasks in text mining, specifically i) indexing, ii) retrieval, iii) dimensionality reduction, iv) clustering, v) classification. Most of TMG is written in MATLAB and parts in Perl. It contains implementations of LSI, clustered LSI, NMF and other methods.

- SenseClusters, an open source package, written in Perl, that supports context and word clustering using Latent Semantic Analysis and word co-occurrence matrices.

- S-Space Package, a collection of algorithms for exploring and working with statistical semantics.

- Vector Space Model Software Workbench. A collection of 50 source code programs for education.

- word2vec. Google's tool for computing continuous distributed representations of words. This tool provides an efficient implementation of the continuous bag-of-words and skip-gram architectures for computing vector representations of words. [2] [3]

Further reading

- G. Salton, A. Wong, and C. S. Yang (1975), "A Vector Space Model for Automatic Indexing," Communications of the ACM, vol. 18, nr. 11, pages 613–620. (Article in which a vector space model was presented)

- David Dubin (2004), The Most Influential Paper Gerard Salton Never Wrote (Explains the history of the Vector Space Model and the non-existence of a frequently cited publication)

- Description of the vector space model

- Description of the classic vector space model by Dr E. Garcia

- Relationship of vector space search to the "k-Nearest Neighbor" search

See also

- Bag-of-words model

- Nearest neighbor search

- Compound term processing

- Inverted index

- w-shingling

- Eigenvalues and eigenvectors

- Conceptual spaces

References

- [1] G. Salton, A. Wong, C. S. Yang, A vector space model for automatic indexing, Communications of the ACM, v.18 n.11, p.613-620, Nov. 1975

- [2] Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. Efficient Estimation of Word Representations in Vector Space. In Proceedings of Workshop at ICLR, 2013. URL: http://arxiv.org/pdf/1301.3781.pdf

- [3] Bengio, Yoshua, et al. "A neural probabilistic language model." The Journal of Machine Learning Research 3 (2003): 1137-1155.