Two's complement

Two's complement is a mathematical operation on binary numbers, as well as a binary signed number representation based on this operation. Its wide use in computing makes it the most important example of a radix complement.

The two's complement of an N-bit number is defined as the complement with respect to 2^N; in other words, it is the result of subtracting the number from 2^N, which in binary is one followed by N zeroes. This is also equivalent to taking the ones' complement and then adding one, since the sum of a number and its ones' complement is all 1 bits. The two's complement of a number behaves like the negative of the original number in most arithmetic, and positive and negative numbers can coexist in a natural way.

| Bits | Unsigned value | 2's complement value |
|---|---|---|
| 011 | 3 | 3 |
| 010 | 2 | 2 |
| 001 | 1 | 1 |
| 000 | 0 | 0 |
| 111 | 7 | −1 |
| 110 | 6 | −2 |
| 101 | 5 | −3 |
| 100 | 4 | −4 |

| Bits | Unsigned value | 2's complement value |
|---|---|---|
| 0111 1111 | 127 | 127 |
| 0111 1110 | 126 | 126 |
| 0000 0010 | 2 | 2 |
| 0000 0001 | 1 | 1 |
| 0000 0000 | 0 | 0 |
| 1111 1111 | 255 | −1 |
| 1111 1110 | 254 | −2 |
| 1000 0010 | 130 | −126 |
| 1000 0001 | 129 | −127 |
| 1000 0000 | 128 | −128 |

In two's-complement representation, positive numbers are simply represented as themselves, and negative numbers are represented by the two's complement of their absolute value;[1] the two tables above provide examples for N = 3 and N = 8. In general, negation (reversing the sign) is performed by taking the two's complement. This system is the most common method of representing signed integers on computers.[2] An N-bit two's-complement numeral system can represent every integer in the range −(2^(N−1)) to +(2^(N−1) − 1), while ones' complement can only represent integers in the range −(2^(N−1) − 1) to +(2^(N−1) − 1).

The two's-complement system has the advantage that the fundamental arithmetic operations of addition, subtraction, and multiplication are identical to those for unsigned binary numbers (as long as the inputs are represented in the same number of bits and any overflow beyond those bits is discarded from the result). This property makes the system both simpler to implement and capable of easily handling higher precision arithmetic. Also, zero has only a single representation, obviating the subtleties associated with negative zero, which exists in ones'-complement systems.
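A minimal Python sketch of this property, assuming 8-bit words. The helper names `wrap` and `to_signed` are illustrative and do not come from the text. The arithmetic is carried out on plain non-negative integers, everything above bit 7 is discarded, and only the final bit pattern is reinterpreted as a signed value.

```python
N = 8                      # assumed word size
MASK = (1 << N) - 1        # 0xFF: keeps the low N bits

def wrap(value):
    """Discard overflow beyond N bits (also maps negative ints to bit patterns)."""
    return value & MASK

def to_signed(bits):
    """Reinterpret an N-bit pattern as a two's-complement value."""
    return bits - (1 << N) if bits & (1 << (N - 1)) else bits

a, b = wrap(-5), wrap(15)          # bit patterns 0xFB and 0x0F
print(to_signed(wrap(a + b)))      # 10
print(to_signed(wrap(a - b)))      # -20
print(to_signed(wrap(a * b)))      # -75
```

The same three unsigned operations would be used if `a` and `b` were meant as the unsigned values 251 and 15; only the interpretation of the result differs.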

History

The method of complements had long been used to perform subtraction in decimal adding machines and mechanical calculators. John von Neumann suggested the use of two's complement binary representation in his 1945 First Draft of a Report on the EDVAC proposal for an electronic stored-program digital computer.[3] The 1949 EDSAC, which was inspired by the First Draft, used two's complement representation of binary numbers.

Many early computers, including the CDC 6600, used ones' complement notation. The IBM 700/7000 series scientific machines used sign/magnitude notation, except for the index registers which were two's complement. Early commercial two's complement computers include the Digital Equipment Corporation PDP-5 and the 1963 PDP-6. The System/360, introduced in 1964 by IBM, then the dominant player in the computer industry, made two's complement the most widely used binary representation in the computer industry. The first minicomputer, the PDP-8, introduced in 1965, used two's complement arithmetic as did the 1969 Data General Nova, the 1970 PDP-11, and almost all subsequent minicomputers and microcomputers.

Potential ambiguities of terminology

One should be cautious when using the term two's complement, as it can mean either a number format or a mathematical operator. For example, 0111 represents decimal 7 in two's-complement notation, but the two's complement of 7 in a 4-bit register is actually the "1001" bit string (the same string that represents 9 = 2^4 − 7 in unsigned arithmetic), which is the two's complement representation of −7. The statement "convert x to two's complement" may be ambiguous, since it could describe either the process of representing x in two's-complement notation without changing its value, or the calculation of the two's complement, which is the arithmetic negative of x if two's complement representation is used.

Converting from two's complement representation

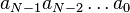

A two's-complement number system encodes positive and negative numbers in a binary number representation. The weight of each bit is a power of two, except for the most significant bit, whose weight is the negative of the corresponding power of two.

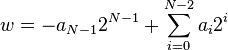

The value w of an N-bit integer a_{N−1} a_{N−2} … a_0 is given by the following formula:

$$w = -a_{N-1}\,2^{N-1} + \sum_{i=0}^{N-2} a_i\,2^i$$

The most significant bit determines the sign of the number and is sometimes called the sign bit. Unlike in sign-and-magnitude representation, the sign bit also has the weight −(2^(N−1)) shown above. Using N bits, all integers from −(2^(N−1)) to 2^(N−1) − 1 can be represented.
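The weighting rule can be sketched in Python as follows. The function name `twos_complement_value` is hypothetical, and the input is assumed to be a non-empty string of 0s and 1s, optionally with spaces for readability.

```python
def twos_complement_value(bits):
    """Value of a bit string such as '1011' under two's complement."""
    digits = [int(c) for c in bits if c in "01"]   # ignore spacing
    n = len(digits)
    # The most significant bit carries the weight -(2^(n-1)).
    value = -digits[0] * (1 << (n - 1))
    # Every other bit carries its ordinary positive power of two.
    for position, bit in enumerate(digits[1:], start=1):
        value += bit * (1 << (n - 1 - position))
    return value

print(twos_complement_value("1000 0010"))   # -126
print(twos_complement_value("0111 1111"))   # 127
```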

Converting to two's complement representation

In two's complement notation, a non-negative number is represented by its ordinary binary representation; in this case, the most significant bit is 0. However, the range of numbers represented is not the same as with unsigned binary numbers. For example, an 8-bit unsigned number can represent the values 0 to 255 (11111111), whereas a two's complement 8-bit number can only represent non-negative integers from 0 to 127 (01111111), because the remaining bit combinations, those with the most significant bit set to 1, represent the negative integers −1 to −128.

The two's complement operation is the additive inverse operation, so negative numbers are represented by the two's complement of the absolute value.

From the ones' complement

To get the two's complement of a binary number, the bits are inverted, or "flipped", by using the bitwise NOT operation; the value of 1 is then added to the resulting value, ignoring the overflow which occurs when taking the two's complement of 0.

For example, using 1 byte (= 2 nibbles = 8 bits), the decimal number 5 is represented by

- 0000 0101₂

The most significant bit is 0, so the pattern represents a non-negative value. To convert to −5 in two's-complement notation, the bits are inverted; 0 becomes 1, and 1 becomes 0:

- 1111 1010

At this point, the bit pattern is the ones'-complement representation of the decimal value −5. To obtain the two's complement, 1 is added to the result, giving:

- 1111 1011

The result is a signed binary number representing the decimal value −5 in two's-complement form. The most significant bit is 1, so the value represented is negative.

The two's complement of a negative number is the corresponding positive value. For example, inverting the bits of −5 (above) gives:

- 0000 0100

And adding one gives the final value:

- 0000 0101

The two's complement of zero is zero: inverting gives all ones, and adding one changes the ones back to zeros (since the overflow is ignored). Furthermore, the two's complement of the most negative number representable (e.g. a one as the most-significant bit and all other bits zero) is itself. Hence, there appears to be an 'extra' negative number.
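The invert-and-add-one procedure can be sketched in Python, assuming the word is held as a non-negative integer and the width defaults to 8 bits. The function name `negate` is illustrative.

```python
def negate(bits, n=8):
    """Two's complement of an n-bit pattern: invert, add one, drop the carry."""
    mask = (1 << n) - 1
    inverted = ~bits & mask          # ones' complement within n bits
    return (inverted + 1) & mask     # overflow out of the top bit is discarded

print(format(negate(0b00000101), "08b"))   # 11111011  (-5)
print(format(negate(0b11111011), "08b"))   # 00000101  (5)
print(format(negate(0b00000000), "08b"))   # 00000000  (zero maps to zero)
print(format(negate(0b10000000), "08b"))   # 10000000  (-128 maps to itself)
```

The last two lines reproduce the two fixed points described above: zero and the most negative number.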

Subtraction from 2^N

The sum of a number and its ones' complement is an N-bit word with all 1 bits, which is 2^N − 1. Then adding a number to its two's complement results in the N lowest bits set to 0 and the carry bit 1, where the latter has the weight 2^N. Hence, in unsigned binary arithmetic the value of the two's-complement negative number x* of a positive x satisfies the equality x* = 2^N − x.[4]

For example, to find the 4-bit representation of −5 (subscripts denote the base of the representation):

- x = 5₁₀, therefore x = 0101₂

Hence, with N = 4:

- x* = 2^N − x = 2^4 − 5₁₀ = 10000₂ − 0101₂ = 1011₂

The calculation can be done entirely in base 10, converting to base 2 at the end:

- x* = 2^N − x = 2^4 − 5₁₀ = 11₁₀ = 1011₂
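As a quick check of the equality x* = 2^N − x, the following Python sketch compares the subtraction with the invert-and-add-one method for every 4-bit value. Both function names are hypothetical; the reduction modulo 2^N handles x = 0, as in note [4].

```python
def complement_by_subtraction(x, n):
    """x* = 2^n - x, reduced to n bits so that 0 maps to 0."""
    return ((1 << n) - x) % (1 << n)

def complement_by_inversion(x, n):
    """Invert the n bits and add one, discarding the carry."""
    mask = (1 << n) - 1
    return ((x ^ mask) + 1) & mask

n = 4
print(format(complement_by_subtraction(5, n), "04b"))   # 1011
print(all(complement_by_subtraction(x, n) == complement_by_inversion(x, n)
          for x in range(1 << n)))                       # True
```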

Working from LSB towards MSB

A shortcut to manually convert a binary number into its two's complement is to start at the least significant bit (LSB), and copy all the zeros (working from LSB toward the most significant bit) until the first 1 is reached; then copy that 1, and flip all the remaining bits (leave the MSB as a 1 if the initial number was in sign-and-magnitude representation). This shortcut allows a person to convert a number to its two's complement without first forming its ones' complement. For example: the two's complement of "0011 1100" is "1100 0100", where the rightmost three digits ("100") were unchanged by the copying operation, while the rest of the digits were flipped.

In computer circuitry, this method is no faster than the "complement and add one" method; both methods require working sequentially from right to left, propagating logic changes. The method of complementing and adding one can be sped up by a standard carry look-ahead adder circuit; the LSB towards MSB method can be sped up by a similar logic transformation.
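The manual shortcut can be sketched in Python on a bit string. The function name `twos_complement_shortcut` is illustrative, and the sign-and-magnitude special case mentioned above is not handled.

```python
def twos_complement_shortcut(bits):
    """Copy bits from the right up to and including the first 1, flip the rest."""
    out = []
    seen_one = False
    for ch in reversed(bits):
        if ch not in "01":
            out.append(ch)            # keep spacing such as "0011 1100"
        elif not seen_one:
            out.append(ch)            # trailing zeros and the first 1 are copied
            seen_one = (ch == "1")
        else:
            out.append("1" if ch == "0" else "0")   # remaining bits are flipped
    return "".join(reversed(out))

print(twos_complement_shortcut("0011 1100"))   # 1100 0100
```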

Sign extension

| Decimal | 7-bit notation | 8-bit notation |
|---|---|---|
| −42 | 1010110 | 1101 0110 |
| 42 | 0101010 | 0010 1010 |

When turning a two's-complement number with a certain number of bits into one with more bits (e.g., when copying from a 1 byte variable to a two byte variable), the most-significant bit must be repeated in all the extra bits. Some processors do this in a single instruction; on other processors, a conditional must be used followed by code to set the relevant bits or bytes.

Similarly, when a two's-complement number is shifted to the right, the most-significant bit, which contains magnitude and the sign information, must be maintained. However, when it is shifted to the left, a 0 is shifted in. These rules preserve the common semantics that left shifts multiply the number by two and right shifts divide the number by two (rounding toward negative infinity when the discarded bit is 1).

Both shifting and doubling the precision are important for some multiplication algorithms. Note that unlike addition and subtraction, precision extension and right shifting are done differently for signed vs unsigned numbers.
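Both rules can be sketched in Python on raw bit patterns. The names `sign_extend` and `arithmetic_shift_right` are illustrative, and the widths are passed explicitly because Python integers have no fixed size.

```python
def sign_extend(bits, from_width, to_width):
    """Widen a two's-complement pattern by repeating its most significant bit."""
    sign = (bits >> (from_width - 1)) & 1
    if sign:
        # Set every new high-order bit to 1.
        bits |= ((1 << to_width) - 1) & ~((1 << from_width) - 1)
    return bits

def arithmetic_shift_right(bits, width):
    """Shift right by one place while keeping the most significant bit."""
    sign = bits & (1 << (width - 1))
    return (bits >> 1) | sign

print(format(sign_extend(0b1010110, 7, 8), "08b"))           # 11010110  (-42)
print(format(sign_extend(0b0101010, 7, 8), "08b"))           # 00101010  (42)
print(format(arithmetic_shift_right(0b11010110, 8), "08b"))  # 11101011  (-21)
```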

Most negative number

With only one exception, starting with any number in two's-complement representation, if all the bits are flipped and 1 added, the two's-complement representation of the negative of that number is obtained. Positive 12 becomes negative 12, positive 5 becomes negative 5, zero becomes zero (with the carry out of the word discarded), etc.

| −128 | 1000 0000 |
|---|---|
| invert bits | 0111 1111 |
| add one | 1000 0000 |

The two's complement of the minimum number in the range will not have the desired effect of negating the number. For example, the two's complement of −128 in an 8-bit system results in the same binary number. This is because a positive value of 128 cannot be represented with an 8-bit signed binary numeral.

This phenomenon is fundamentally about the mathematics of binary numbers, not the details of the representation as two's complement. Mathematically, this is complementary to the fact that the negative of 0 is again 0. For a given number of bits k there is an even number of binary numbers, 2^k. Taking negatives is a group action (of the group of order 2) on binary numbers, and since the orbit of zero has order 1, at least one other number must have an orbit of order 1 for the orders of the orbits to add up to the order of the set. Thus some other number must be invariant under taking negatives (formally, by the orbit-stabilizer theorem). Geometrically, one can view the k-bit binary numbers as the cyclic group Z/2^k, which can be visualized as a circle (or properly a regular 2^k-gon), and taking negatives is a reflection, which fixes the elements of order dividing 2: 0 and the opposite point, or visually the zenith and nadir.

Note that this negative being the same number is detected as an overflow condition since there was a carry into but not out of the most-significant bit. This can lead to unexpected bugs in that an unchecked implementation of absolute value could return a negative number in the case of the minimum negative. The abs family of integer functions in C typically has this behaviour. This is also true for Java.[5] In this case it is for the developer to decide if there will be a check for the minimum negative value before the call of the function.

The most negative number in two's complement is sometimes called "the weird number," because it is the only exception.[6][7]

Although the number is an exception, it is a valid number in regular two's complement systems. All arithmetic operations work with it both as an operand and (unless there was an overflow) a result.
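The absolute-value pitfall can be illustrated with a Python sketch that emulates 8-bit words. All three function names are hypothetical; `unchecked_abs` mimics a fixed-width implementation without a range check, and `checked_abs` shows one possible guard a developer might add.

```python
def to_signed(bits, n=8):
    """Reinterpret an n-bit pattern as a two's-complement value."""
    return bits - (1 << n) if bits & (1 << (n - 1)) else bits

def unchecked_abs(bits, n=8):
    """Negate when the sign bit is set, with no range check."""
    mask = (1 << n) - 1
    if bits & (1 << (n - 1)):
        bits = (~bits + 1) & mask
    return bits

def checked_abs(bits, n=8):
    """Same as above, but refuse the one value with no positive counterpart."""
    if bits == 1 << (n - 1):
        raise OverflowError("absolute value of the most negative number is not representable")
    return unchecked_abs(bits, n)

print(to_signed(unchecked_abs(0b11111011)))   # 5
print(to_signed(unchecked_abs(0b10000000)))   # -128
```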

Why it works

Given a set of all possible N-bit values, we can assign the lower (by the binary value) half to be the integers from 0 to (2^(N−1) − 1) inclusive and the upper half to be −2^(N−1) to −1 inclusive. The upper half (again, by the binary value) can be used to represent negative integers from −2^(N−1) to −1 because, under addition modulo 2^N, they behave the same way as those negative integers. That is to say, because i + j mod 2^N = i + (j + 2^N) mod 2^N, any value in the set { j + k·2^N | k is an integer } can be used in place of j.[8]

For example, with eight bits, the unsigned bytes are 0 to 255. Subtracting 256 from the top half (128 to 255) yields the signed bytes −128 to −1.

The relationship to two's complement is realised by noting that 256 = 255 + 1, and (255 − x) is the ones' complement of x.

| Decimal | Two's complement |
|---|---|
| 127 | 0111 1111 |
| 64 | 0100 0000 |
| 1 | 0000 0001 |
| 0 | 0000 0000 |
| −1 | 1111 1111 |
| −64 | 1100 0000 |
| −127 | 1000 0001 |
| −128 | 1000 0000 |

Example

−95 modulo 256 is equivalent to 161 since

- −95 + 256

- = −95 + 255 + 1

- = 255 − 95 + 1

- = 160 + 1

- = 161

      1111 1111                       255
    − 0101 1111                     −  95
    ===========                     =====
      1010 0000  (ones' complement)   160
    +         1                     +   1
    ===========                     =====
      1010 0001  (two's complement)   161
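The same example can be checked in Python, whose `%` operator returns a non-negative result for a positive modulus. The function name `encode` is illustrative.

```python
def encode(value, n=8):
    """Bit pattern of a signed value: reduce modulo 2^n."""
    return value % (1 << n)

print(encode(-95))                    # 161
print((255 - 95) + 1)                 # 161: ones' complement of 95, plus one
print(format(encode(-95), "08b"))     # 10100001
```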

| Two's complement | Decimal |
|---|---|
| 0111 | 7 |
| 0110 | 6 |
| 0101 | 5 |
| 0100 | 4 |
| 0011 | 3 |
| 0010 | 2 |
| 0001 | 1 |
| 0000 | 0 |
| 1111 | −1 |
| 1110 | −2 |
| 1101 | −3 |
| 1100 | −4 |
| 1011 | −5 |
| 1010 | −6 |
| 1001 | −7 |
| 1000 | −8 |

Fundamentally, the system represents negative integers by counting backward and wrapping around. The boundary between positive and negative numbers is arbitrary, but the de facto rule is that all negative numbers have a left-most bit (most significant bit) of one. Therefore, the most positive 4-bit number is 0111 (7) and the most negative is 1000 (−8). Because of the use of the left-most bit as the sign bit, the absolute value of the most negative number (|−8| = 8) is too large to represent. For example, an 8-bit number can only represent every integer from −128 to 127 (2^(8−1) = 128) inclusive. Negating a two's complement number is simple: invert all the bits and add one to the result. For example, negating 1111, we get 0000 + 1 = 1. Therefore, 1111 must represent −1.[9]

The system is useful in simplifying the implementation of arithmetic on computer hardware. Adding 0011 (3) to 1111 (−1) at first seems to give the incorrect answer of 10010. However, the hardware can simply ignore the left-most bit to give the correct answer of 0010 (2). Overflow checks still must exist to catch operations such as summing 0100 and 0100.

The system therefore allows addition of negative operands without a subtraction circuit and a circuit that detects the sign of a number. Moreover, that addition circuit can also perform subtraction by taking the two's complement of a number (see below), which only requires an additional cycle or its own adder circuit. To perform this, the circuit merely pretends an extra left-most bit of 1 exists.

Arithmetic operations

Addition

Adding two's-complement numbers requires no special processing even if the operands have opposite signs: the sign of the result is determined automatically. For example, adding 15 and −5:

      11111 111   (carry)
       0000 1111  (15)
     + 1111 1011  (−5)
     ==================
       0000 1010  (10)

This process depends upon restricting to 8 bits of precision; a carry to the (nonexistent) 9th most significant bit is ignored, resulting in the arithmetically correct result of 10₁₀.

The last two bits of the carry row (reading right-to-left) contain vital information: whether the calculation resulted in an arithmetic overflow, a number too large for the binary system to represent (in this case greater than 8 bits). An overflow condition exists when these last two bits are different from one another. As mentioned above, the sign of the number is encoded in the MSB of the result.

In other terms, if the left two carry bits (the ones on the far left of the top row in these examples) are both 1s or both 0s, the result is valid; if the left two carry bits are "1 0" or "0 1", a sign overflow has occurred. Conveniently, an XOR operation on these two bits can quickly determine if an overflow condition exists. As an example, consider the signed 4-bit addition of 7 and 3:

      0111   (carry)
       0111  (7)
     + 0011  (3)
     =============
       1010  (−6)  invalid!

In this case, the far left two (MSB) carry bits are "01", which means there was a two's-complement addition overflow. That is, 1010₂ = 10₁₀ is outside the permitted range of −8 to 7.

In general, any two N-bit numbers may be added without overflow, by first sign-extending both of them to N + 1 bits, and then adding as above. The N + 1 bits result is large enough to represent any possible sum (N = 5 two's complement can represent values in the range −16 to 15) so overflow will never occur. It is then possible, if desired, to 'truncate' the result back to N bits while preserving the value if and only if the discarded bit is a proper sign extension of the retained result bits. This provides another method of detecting overflow—which is equivalent to the method of comparing the carry bits—but which may be easier to implement in some situations, because it does not require access to the internals of the addition.
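Both overflow tests can be sketched in Python on raw bit patterns. The function names are hypothetical, and Python's unbounded integers stand in for the extra bit of precision in the second version.

```python
def add_with_overflow(a, b, n=8):
    """Add two n-bit patterns; report signed overflow from the top two carries."""
    mask = (1 << n) - 1
    low_mask = mask >> 1                                      # the n-1 low-order bits
    carry_in = ((a & low_mask) + (b & low_mask)) >> (n - 1)   # carry into the MSB
    carry_out = (a + b) >> n                                  # carry out of the MSB
    return (a + b) & mask, bool(carry_in ^ carry_out)

def add_widened(a, b, n=8):
    """Equivalent check: add as signed values with extra range, then test the fit."""
    signed = lambda x: x - (1 << n) if x >> (n - 1) else x
    total = signed(a) + signed(b)
    fits = -(1 << (n - 1)) <= total < (1 << (n - 1))
    return total & ((1 << n) - 1), not fits

print(add_with_overflow(0b00001111, 0b11111011))   # (10, False)
print(add_with_overflow(0b0111, 0b0011, n=4))      # (10, True)
```

In the second call the returned pattern 1010 would read as −6 in four bits, which is why the overflow flag matters.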

Subtraction

Computers usually use the method of complements to implement subtraction. Using complements for subtraction is closely related to using complements for representing negative numbers, since the combination allows all signs of operands and results; direct subtraction works with two's-complement numbers as well. Like addition, the advantage of using two's complement is the elimination of examining the signs of the operands to determine if addition or subtraction is needed. For example, subtracting −5 from 15 is really adding 5 to 15, but this is hidden by the two's-complement representation:

      11110 000   (borrow)
       0000 1111  (15)
     − 1111 1011  (−5)
     ===========
       0001 0100  (20)

Overflow is detected the same way as for addition, by examining the two leftmost (most significant) bits of the borrows; overflow has occurred if they are different.

Another example is a subtraction operation where the result is negative: 15 − 35 = −20:

      11100 000   (borrow)
       0000 1111  (15)
     − 0010 0011  (35)
     ===========
       1110 1100  (−20)

As for addition, overflow in subtraction may be avoided (or detected after the operation) by first sign-extending both inputs by an extra bit.
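A minimal Python sketch of subtraction by the method of complements, assuming 8-bit words: the subtrahend is replaced by its two's complement and then added. The function names `subtract` and `to_signed` are illustrative.

```python
def subtract(a, b, n=8):
    """a - b on n-bit patterns, computed as a + (two's complement of b)."""
    mask = (1 << n) - 1
    negated_b = (~b + 1) & mask
    return (a + negated_b) & mask

def to_signed(bits, n=8):
    """Reinterpret an n-bit pattern as a two's-complement value."""
    return bits - (1 << n) if bits & (1 << (n - 1)) else bits

print(to_signed(subtract(0b00001111, 0b11111011)))   # 20   (15 - (-5))
print(to_signed(subtract(0b00001111, 0b00100011)))   # -20  (15 - 35)
```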

Multiplication

The product of two N-bit numbers requires 2N bits to contain all possible values.[10]

If the precision of the two operands using two's complement is doubled before the multiplication, direct multiplication (discarding any excess bits beyond that precision) will provide the correct result.[11] For example, take 6 × −5 = −30. First, the precision is extended from four bits to eight. Then the numbers are multiplied, discarding the bits beyond the eighth bit (as shown by "x"):

         00000110  (6)
     *   11111011  (−5)
     ============
              110
             1100
            00000
           110000
          1100000
         11000000
        x10000000
     + xx00000000
     ============
       xx11100010
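The doubled-precision method can be sketched in Python as follows; the function name `multiply_doubled` and the default width of four bits are assumptions made for the illustration.

```python
def multiply_doubled(a, b, n=4):
    """Multiply two n-bit two's-complement patterns via 2n-bit unsigned arithmetic."""
    wide = 2 * n
    wide_mask = (1 << wide) - 1

    def extend(x):
        # Sign-extend from n to 2n bits.
        return x | (wide_mask & ~((1 << n) - 1)) if x >> (n - 1) else x

    return (extend(a) * extend(b)) & wide_mask   # bits beyond 2n are discarded

print(format(multiply_doubled(0b0110, 0b1011), "08b"))   # 11100010  (-30)
```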

This is very inefficient; by doubling the precision ahead of time, all additions must be double-precision and at least twice as many partial products are needed than for the more efficient algorithms actually implemented in computers. Some multiplication algorithms are designed for two's complement, notably Booth's multiplication algorithm. Methods for multiplying sign-magnitude numbers don't work with two's-complement numbers without adaptation. There isn't usually a problem when the multiplicand (the one being repeatedly added to form the product) is negative; the issue is setting the initial bits of the product correctly when the multiplier is negative. Two methods for adapting algorithms to handle two's-complement numbers are common:

- First check to see if the multiplier is negative. If so, negate (i.e., take the two's complement of) both operands before multiplying. The multiplier will then be positive so the algorithm will work. Because both operands are negated, the result will still have the correct sign.

- Subtract the partial product resulting from the MSB (pseudo sign bit) instead of adding it like the other partial products. This method requires the multiplicand's sign bit to be extended by one position, being preserved during the shift right actions.[12]

As an example of the second method, take the common add-and-shift algorithm for multiplication. Instead of shifting partial products to the left as is done with pencil and paper, the accumulated product is shifted right, into a second register that will eventually hold the least significant half of the product. Since the least significant bits are not changed once they are calculated, the additions can be single precision, accumulating in the register that will eventually hold the most significant half of the product. In the following example, again multiplying 6 by −5, the two registers and the extended sign bit are separated by "|":

      0 0110  (6)  (multiplicand with extended sign bit)
      × 1011 (−5)  (multiplier)
      =|====|====
      0|0110|0000  (first partial product (rightmost bit is 1))
      0|0011|0000  (shift right, preserving extended sign bit)
      0|1001|0000  (add second partial product (next bit is 1))
      0|0100|1000  (shift right, preserving extended sign bit)
      0|0100|1000  (add third partial product: 0 so no change)
      0|0010|0100  (shift right, preserving extended sign bit)
      1|1100|0100  (subtract last partial product since it's from sign bit)
      1|1110|0010  (shift right, preserving extended sign bit)
       |1110|0010  (discard extended sign bit, giving the final answer, −30)
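The trace above can be reproduced with the following Python sketch of the add-and-shift method. It is an illustration under stated assumptions rather than a description of any particular hardware: the function name is hypothetical, `high` models the (n+1)-bit register with the extended sign bit, and `low` models the register that receives the least significant half.

```python
def add_and_shift_multiply(multiplicand, multiplier, n=4):
    """Signed n-bit multiply; the partial product for the sign bit is subtracted."""
    ext_mask = (1 << (n + 1)) - 1                 # high register has n+1 bits
    # Sign-extend the multiplicand by one position.
    m = multiplicand | (1 << n) if multiplicand >> (n - 1) else multiplicand
    high, low = 0, 0
    for i in range(n):
        if (multiplier >> i) & 1:
            if i == n - 1:
                high = (high - m) & ext_mask      # sign-bit partial product
            else:
                high = (high + m) & ext_mask
        # Shift the register pair right, preserving the extended sign bit.
        low = (low >> 1) | ((high & 1) << (n - 1))
        high = (high >> 1) | (high & (1 << n))
    # Drop the extended sign bit and join the two halves.
    return ((high & ((1 << n) - 1)) << n) | low

print(format(add_and_shift_multiply(0b0110, 0b1011), "08b"))   # 11100010  (-30)
```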

Comparison (ordering)

Comparison is often implemented with a dummy subtraction, where the flags in the computer's status register are checked, but the main result is ignored. The zero flag indicates if two values compared equal. If the exclusive-or of the sign and overflow flags is 1, the subtraction result was less than zero, otherwise the result was zero or greater. These checks are often implemented in computers in conditional branch instructions.

Unsigned binary numbers can be ordered by a simple lexicographic ordering, where the bit value 0 is defined as less than the bit value 1. For two's complement values, the meaning of the most significant bit is reversed (i.e. 1 is less than 0).

The following algorithm (for an n-bit two's complement architecture) sets the result register R to −1 if A < B, to +1 if A > B, and to 0 if A and B are equal:

    R := 0
    // Reversed comparison of the sign bit
    if A(n-1) == 0 and B(n-1) == 1 then
        R := +1
    else if A(n-1) == 1 and B(n-1) == 0 then
        R := -1
    else
        // Comparison of the remaining bits, most significant first
        for i = n-2 ... 0 do
            if A(i) == 0 and B(i) == 1 then
                R := -1
                break
            else if A(i) == 1 and B(i) == 0 then
                R := +1
                break
            end
        end
    end
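A runnable Python rendering of the bit-by-bit comparison, given as a sketch: the function name `compare` and the helper `bit` are illustrative, and the operands are assumed to be n-bit patterns held as non-negative integers.

```python
def compare(a, b, n):
    """Return -1, 0 or +1 for n-bit two's-complement patterns a and b."""
    def bit(x, i):
        return (x >> i) & 1
    # Sign bit: the sense of the comparison is reversed (1 means smaller).
    if bit(a, n - 1) != bit(b, n - 1):
        return +1 if bit(a, n - 1) == 0 else -1
    # Remaining bits compare as in unsigned numbers, most significant first.
    for i in range(n - 2, -1, -1):
        if bit(a, i) != bit(b, i):
            return +1 if bit(a, i) == 1 else -1
    return 0

print(compare(0b1111, 0b0001, 4))   # -1  (-1 < 1)
print(compare(0b0111, 0b1000, 4))   # 1   (7 > -8)
print(compare(0b1010, 0b1010, 4))   # 0
```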

Two's complement and 2-adic numbers

In a classic HAKMEM published by the MIT AI Lab in 1972, Bill Gosper noted that whether or not a machine's internal representation was two's-complement could be determined by summing the successive powers of two. In a flight of fancy, he noted that the result of doing this algebraically indicated that "algebra is run on a machine (the universe) which is two's-complement."[13]

Gosper's end conclusion is not necessarily meant to be taken seriously, and it is akin to a mathematical joke. The critical step is "...110 = ...111 − 1", i.e., "2X = X − 1", and thus X = ...111 = −1. This presupposes a method by which an infinite string of 1s is considered a number, which requires an extension of the finite place-value concepts in elementary arithmetic. It is meaningful either as part of a two's-complement notation for all integers, as a typical 2-adic number, or even as one of the generalized sums defined for the divergent series of real numbers 1 + 2 + 4 + 8 + ···.[14] Digital arithmetic circuits, idealized to operate with infinite (extending to positive powers of 2) bit strings, produce 2-adic addition and multiplication compatible with two's complement representation.[15] Continuity of binary arithmetical and bitwise operations in 2-adic metric also has some use in cryptography.[16]

See also

- Division algorithm, including restoring and non-restoring division in two's-complement representations

- Offset binary

- p-adic number

References

1. David J. Lilja and Sachin S. Sapatnekar, Designing Digital Computer Systems with Verilog, Cambridge University Press, 2005.

2. E.g. "Signed integers are two's complement binary values that can be used to represent both positive and negative integer values.", Section 4.2.1 in Intel 64 and IA-32 Architectures Software Developer's Manual, Volume 1: Basic Architecture, November 2006.

3. von Neumann, John (1945), First Draft of a Report on the EDVAC, retrieved February 20, 2015.

4. For x = 0 we have 2^N − 0 = 2^N, which is equivalent to 0* = 0 modulo 2^N (i.e. after restricting to N least significant bits).

5. "Math (Java Platform SE 7)".

6. Reynald Affeldt and Nicolas Marti. "Formal Verification of Arithmetic Functions in SmartMIPS Assembly".

7. "Digital Design and Computer Architecture", by David Harris, David Money Harris, Sarah L. Harris. 2007. Page 18.

8. "3.9. Two's Complement". Chapter 3. Data Representation. cs.uwm.edu. 2012-12-03. Retrieved 2014-06-22.

9. Thomas Finley (April 2000). "Two's Complement". cs.cornell.edu. Retrieved 2014-06-22.

10. Bruno Paillard. An Introduction To Digital Signal Processors, Sec. 6.4.2. Génie électrique et informatique Report, Université de Sherbrooke, April 2004.

11. Karen Miller (August 24, 2007). "Two's Complement Multiplication". cs.wisc.edu. Retrieved April 13, 2015.

12. Wakerly, John F. (2000). Digital Design Principles & Practices (3rd ed.). Prentice Hall. p. 47. ISBN 0-13-769191-2.

13. Hakmem - Programming Hacks - Draft, Not Yet Proofed.

14. For the summation of 1 + 2 + 4 + 8 + ··· without recourse to the 2-adic metric, see Hardy, G.H. (1949). Divergent Series. Clarendon Press. LCC QA295 .H29 1967. (pp. 7–10)

15. Vuillemin, Jean (1993). On circuits and numbers. Paris: Digital Equipment Corp. p. 19. Retrieved 2012-01-24. Chapter 7, especially 7.3 for multiplication.

16. Anashin, Vladimir; Bogdanov, Andrey; Kizhvatov, Ilya (2007). "ABC Stream Cipher". Russian State University for the Humanities. Retrieved 24 January 2012.

Further reading

- Koren, Israel (2002). Computer Arithmetic Algorithms. A.K. Peters. ISBN 1-56881-160-8.

- Flores, Ivan (1963). The Logic of Computer Arithmetic. Prentice-Hall.