Total derivative


In the mathematical field of differential calculus, the term total derivative has a number of closely related meanings.

- The total derivative (full derivative) of a function

, of several variables, e.g.,

, of several variables, e.g.,  ,

,  ,

,  , etc., with respect to one of its input variables, e.g.,

, etc., with respect to one of its input variables, e.g.,  , is different from its partial derivative (

, is different from its partial derivative ( ). Calculation of the total derivative of

). Calculation of the total derivative of  with respect to

with respect to  does not assume that the other arguments are constant while

does not assume that the other arguments are constant while  varies; instead, it allows the other arguments to depend on

varies; instead, it allows the other arguments to depend on  . The total derivative adds in these indirect dependencies to find the overall dependency of

. The total derivative adds in these indirect dependencies to find the overall dependency of  on

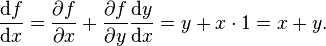

on  [1]:198-203 For example, the total derivative of

[1]:198-203 For example, the total derivative of  with respect to

with respect to  is

is

which simplifies to

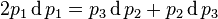

Consider multiplying both sides of the equation by the differential

:

: in the function

in the function  . Because

. Because  depends on

depends on  , some of that change will be due to the partial derivative of

, some of that change will be due to the partial derivative of  with respect to

with respect to  . However, some of that change will also be due to the partial derivatives of

. However, some of that change will also be due to the partial derivatives of  with respect to the variables

with respect to the variables  and

and  . So, the differential

. So, the differential  is applied to the total derivatives of

is applied to the total derivatives of  and

and  to find differentials

to find differentials  and

and  , which can then be used to find the contribution to

, which can then be used to find the contribution to  .

. - It refers to the (total) differential df of a function, either in the traditional language of infinitesimals or the modern language of differential forms.

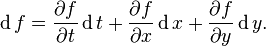

- A differential of the form

refers to the partial derivative of

refers to the partial derivative of  with respect to

with respect to  ) is called a total differential or an exact differential if it is the differential of a function. Again this can be interpreted infinitesimally, or by using differential forms and the exterior derivative.

) is called a total differential or an exact differential if it is the differential of a function. Again this can be interpreted infinitesimally, or by using differential forms and the exterior derivative. - It is another name for the derivative as a linear map, i.e., if f is a differentiable function from Rn to Rm, then the (total) derivative (or differential) of f at x∈Rn is the linear map from Rn to Rm whose matrix is the Jacobian matrix of f at x.

- It is sometimes used as a synonym for the material derivative,

, in fluid mechanics.

, in fluid mechanics.

Differentiation with indirect dependencies

Suppose that f is a function of two variables, x and y. Normally these variables are assumed to be independent. However, in some situations they may be dependent on each other. For example y could be a function of x, constraining the domain of f to a curve in  . In this case the partial derivative of f with respect to x does not give the true rate of change of f with respect to changing x because changing x necessarily changes y. The total derivative takes such dependencies into account.

. In this case the partial derivative of f with respect to x does not give the true rate of change of f with respect to changing x because changing x necessarily changes y. The total derivative takes such dependencies into account.

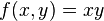

For example, suppose

.

.

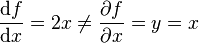

The rate of change of f with respect to x is usually the partial derivative of f with respect to x; in this case,

.

.

However, if y depends on x, the partial derivative does not give the true rate of change of f as x changes because it holds y fixed.

Suppose we are constrained to the line

then

.

.

In that case, the total derivative of f with respect to x is

.

.

Instead of immediately substituting for y in terms of x, this can be found equivalently using the chain rule:

Notice that this is not equal to the partial derivative:

.

.

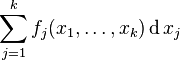

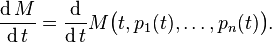

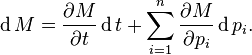

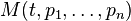

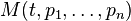
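The difference between the two derivatives can be checked numerically. The following is a minimal sketch, taking f(x, y) = xy and the constraint y = x as the worked case and using central finite differences; the helper names (`central_diff`, `partial_x`, `total_dx`, `chain_rule_dx`) are illustrative and not part of any library.

```python
def f(x, y):
    """Worked case assumed here: f(x, y) = x * y."""
    return x * y


def y_of_x(x):
    """Constraint assumed here: the line y = x."""
    return x


def central_diff(g, x, h=1e-6):
    """Central finite-difference approximation to g'(x)."""
    return (g(x + h) - g(x - h)) / (2 * h)


def partial_x(x, y):
    # y is held fixed while x varies.
    return central_diff(lambda s: f(s, y), x)


def partial_y(x, y):
    # x is held fixed while y varies.
    return central_diff(lambda s: f(x, s), y)


def total_dx(x):
    # y follows x along the constraint, so both arguments change.
    return central_diff(lambda s: f(s, y_of_x(s)), x)


def chain_rule_dx(x):
    # df/dx = f_x + f_y * dy/dx, evaluated on the constraint.
    y = y_of_x(x)
    return partial_x(x, y) + partial_y(x, y) * central_diff(y_of_x, x)


x0 = 3.0
print("partial derivative:", partial_x(x0, y_of_x(x0)))  # approximately y = x0
print("total derivative:  ", total_dx(x0))               # approximately 2 * x0
print("via chain rule:    ", chain_rule_dx(x0))          # agrees with the total derivative
```

Replacing `y_of_x` with a different constraint changes the total derivative but leaves the partial derivative untouched, which is the point of the distinction.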

While one can often perform substitutions to eliminate indirect dependencies, the chain rule provides for a more efficient and general technique. Suppose M(t, p1, ..., pn) is a function of time t and n variables  which themselves depend on time. Then, the total time derivative of M is

which themselves depend on time. Then, the total time derivative of M is

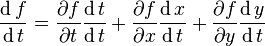

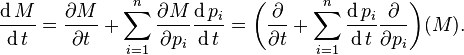

The chain rule for differentiating a function of several variables implies that

This expression is often used in physics for a gauge transformation of the Lagrangian, since two Lagrangians that differ only by the total time derivative of a function of time and the n generalized coordinates lead to the same equations of motion. An interesting example arises in the resolution of causality in the Wheeler–Feynman time-symmetric theory. The operator in brackets (in the final expression) is also called the total derivative operator (with respect to t).

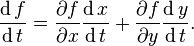

For example, the total derivative of f(x(t), y(t)) is

Here there is no ∂f / ∂t term since f itself does not depend on the independent variable t directly.
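The chain-rule expression for the total time derivative can be sketched in code as follows. This is only an illustration under stated assumptions: the function `total_time_derivative`, the sample function `M(t, p1, p2) = t*p1 + p2**2`, and the paths `p1 = sin t`, `p2 = cos t` are all hypothetical choices, and every derivative is approximated by central differences.

```python
import math


def total_time_derivative(M, paths, t, h=1e-6):
    """Chain-rule estimate of dM/dt for M(t, p_1, ..., p_n) with p_i = paths[i](t).

    Partial derivatives and dp_i/dt are approximated by central differences.
    """
    p = [path(t) for path in paths]
    # Explicit dependence on t: vary t with every p_i frozen.
    result = (M(t + h, *p) - M(t - h, *p)) / (2 * h)
    for i, path in enumerate(paths):
        up = p[:i] + [p[i] + h] + p[i + 1:]
        down = p[:i] + [p[i] - h] + p[i + 1:]
        dM_dpi = (M(t, *up) - M(t, *down)) / (2 * h)   # partial of M in p_i
        dpi_dt = (path(t + h) - path(t - h)) / (2 * h)  # rate of change of p_i
        result += dM_dpi * dpi_dt
    return result


def M(t, p1, p2):
    """Hypothetical example function with explicit time dependence."""
    return t * p1 + p2 ** 2


paths = [math.sin, math.cos]  # hypothetical p1(t), p2(t)
t0 = 0.7
estimate = total_time_derivative(M, paths, t0)
# Direct differentiation of t*sin(t) + cos(t)**2 for comparison.
exact = math.sin(t0) + t0 * math.cos(t0) - 2 * math.sin(t0) * math.cos(t0)
print(estimate, exact)
```

Passing a function that ignores its first argument reproduces the case with no ∂f / ∂t term, since the explicit-dependence difference quotient is then zero.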

The total derivative via differentials

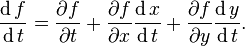

Differentials provide a simple way to understand the total derivative. For instance, suppose  is a function of time t and n variables

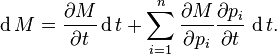

is a function of time t and n variables  as in the previous section. Then, the differential of M is

as in the previous section. Then, the differential of M is

This expression is often interpreted heuristically as a relation between infinitesimals. However, if the variables t and  are interpreted as functions, and

are interpreted as functions, and  is interpreted to mean the composite of M with these functions, then the above expression makes perfect sense as an equality of differential 1-forms, and is immediate from the chain rule for the exterior derivative. The advantage of this point of view is that it takes into account arbitrary dependencies between the variables. For example, if

is interpreted to mean the composite of M with these functions, then the above expression makes perfect sense as an equality of differential 1-forms, and is immediate from the chain rule for the exterior derivative. The advantage of this point of view is that it takes into account arbitrary dependencies between the variables. For example, if  then

then  . In particular, if the variables

. In particular, if the variables  are all functions of t, as in the previous section, then

are all functions of t, as in the previous section, then

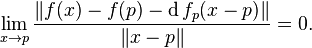

The total derivative as a linear map

Let $U \subseteq \mathbb{R}^n$ be an open subset. Then a function $f : U \to \mathbb{R}^m$ is said to be (totally) differentiable at a point $p \in U$ if there exists a linear map $df_p : \mathbb{R}^n \to \mathbb{R}^m$ (also denoted $D_p f$ or $Df(p)$) such that

$$\lim_{x \to p} \frac{\lVert f(x) - f(p) - df_p(x - p) \rVert}{\lVert x - p \rVert} = 0.$$

The linear map $df_p$ is called the (total) derivative or (total) differential of $f$ at $p$. A function is (totally) differentiable if its total derivative exists at every point in its domain.

Note that $f$ is differentiable if and only if each of its components $f_j : U \to \mathbb{R}$ is differentiable. For this it is necessary, but not sufficient, that the partial derivatives of each function $f_j$ exist. However, if these partial derivatives exist and are continuous, then $f$ is differentiable and its differential at any point is the linear map determined by the Jacobian matrix of partial derivatives at that point.
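The defining property of the total derivative as a linear map can be illustrated numerically: the remainder after subtracting the Jacobian's linear approximation should shrink faster than the step itself. The sketch below assumes a hypothetical map from R^2 to R^2 with a hand-computed Jacobian; the names `f`, `jacobian`, and `remainder_ratio` are illustrative only.

```python
import math


def f(x, y):
    """Hypothetical map from R^2 to R^2 used only for illustration."""
    return (x * x * y, x + math.sin(y))


def jacobian(x, y):
    """Jacobian matrix of f, written out by hand from the partial derivatives."""
    return ((2 * x * y, x * x),
            (1.0, math.cos(y)))


def remainder_ratio(p, v, s):
    """||f(p + s v) - f(p) - J(p)(s v)|| / ||s v|| for a step s along direction v."""
    hx, hy = s * v[0], s * v[1]
    J = jacobian(*p)
    fp = f(*p)
    fq = f(p[0] + hx, p[1] + hy)
    rx = fq[0] - fp[0] - (J[0][0] * hx + J[0][1] * hy)
    ry = fq[1] - fp[1] - (J[1][0] * hx + J[1][1] * hy)
    return math.hypot(rx, ry) / math.hypot(hx, hy)


p = (1.0, 2.0)   # point at which the derivative is taken
v = (0.6, 0.8)   # unit direction of approach
for s in (1e-1, 1e-2, 1e-3):
    # The ratio should decrease roughly in proportion to s.
    print(s, remainder_ratio(p, v, s))
```

A single direction of approach does not prove differentiability, which requires the limit over all ways of approaching p; the sketch only shows what the quotient in the definition looks like for a smooth map.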

Total differential equation

A total differential equation is a differential equation expressed in terms of total derivatives. Since the exterior derivative is a natural operator, in a sense that can be given a technical meaning, such equations are intrinsic and geometric.

Application of the total differential to error estimation

In measurement, the total differential is used in estimating the error Δf of a function f based on the errors Δx, Δy, ... of the parameters x, y, .... Assuming that the interval is short enough for the change to be approximately linear:

- Δf(x) = f'(x) × Δx

and that all variables are independent, then for all variables,

- Δf = fxΔx + fyΔy + ...

This is because the derivative fx with respect to the particular parameter x gives the sensitivity of the function f to a change in x, in particular the error Δx. As they are assumed to be independent, the analysis describes the worst-case scenario. The absolute values of the component errors are used, because after simple computation, the derivative may have a negative sign. From this principle the error rules of summation, multiplication etc. are derived, e.g.:

- Let f(a, b) = a × b;

- Δf = faΔa + fbΔb; evaluating the derivatives

- Δf = bΔa + aΔb; dividing by f, which is a × b

- Δf/f = Δa/a + Δb/b

That is to say, in multiplication, the total relative error is the sum of the relative errors of the parameters.
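The product rule for errors can be checked with a small sketch. It assumes the worst-case first-order estimate, summing the absolute values of each partial derivative times the corresponding error; the function `linearized_error` and the measured values are hypothetical, and partial derivatives are approximated by central differences.

```python
def linearized_error(f, values, errors, h=1e-6):
    """Worst-case first-order estimate: sum of |df/dx_i| * |dx_i|.

    Partial derivatives are approximated by central differences.
    """
    total = 0.0
    for i, (v, e) in enumerate(zip(values, errors)):
        up = list(values)
        up[i] = v + h
        down = list(values)
        down[i] = v - h
        partial = (f(*up) - f(*down)) / (2 * h)
        total += abs(partial) * abs(e)
    return total


def product(a, b):
    return a * b


a, b = 4.0, 5.0        # hypothetical measured values
da, db = 0.04, 0.10    # hypothetical absolute errors
df = linearized_error(product, [a, b], [da, db])
print(df / product(a, b))   # relative error of the product
print(da / a + db / b)      # sum of the relative errors of a and b
```

The two printed values agree to within rounding, matching the statement that relative errors add under multiplication.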

To illustrate how this depends on the function considered, consider the case where the function is f(a, b) = a ln b instead. Then, it can be computed that the error estimate is

- Δf/f = Δa/a + Δb/(b ln b)

with an extra 'ln b' factor not found in the case of a simple product. This factor makes the contribution of Δb smaller than in the simple product only when ln b > 1, that is, when b > e; for 1 < b < e it makes the contribution larger.
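How the 'ln b' factor changes the estimate depends on the size of b. The sketch below compares the two relative-error formulas for one value of b below e and one above it; the function names and the numerical values are hypothetical, and absolute values are taken so that each term counts as a worst-case contribution.

```python
import math


def relative_error_a_ln_b(a, b, da, db):
    """First-order relative error of f(a, b) = a * ln(b); requires b > 0, b != 1."""
    return abs(da / a) + abs(db / (b * math.log(b)))


def relative_error_product(a, b, da, db):
    """First-order relative error of f(a, b) = a * b."""
    return abs(da / a) + abs(db / b)


a, da, db = 4.0, 0.04, 0.10  # hypothetical values and absolute errors
for b in (2.0, 20.0):        # one value below e, one above
    print(b,
          relative_error_a_ln_b(a, b, da, db),
          relative_error_product(a, b, da, db))
```

For b = 2 the logarithmic case gives the larger relative error, while for b = 20 it gives the smaller one, because ln b crosses 1 at b = e.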

References

- [1] Chiang, Alpha C. Fundamental Methods of Mathematical Economics, McGraw-Hill, third edition, 1984.

- A. D. Polyanin and V. F. Zaitsev, Handbook of Exact Solutions for Ordinary Differential Equations (2nd edition), Chapman & Hall/CRC Press, Boca Raton, 2003. ISBN 1-58488-297-2

- From thesaurus.maths.org total derivative