Swarm intelligence

Swarm intelligence (SI) is the collective behavior of decentralized, self-organized systems, natural or artificial. The concept is employed in work on artificial intelligence. The expression was introduced by Gerardo Beni and Jing Wang in 1989, in the context of cellular robotic systems.[1]

SI systems typically consist of a population of simple agents or boids interacting locally with one another and with their environment. The inspiration often comes from nature, especially biological systems. The agents follow very simple rules, and although there is no centralized control structure dictating how individual agents should behave, local, and to a certain degree random, interactions between such agents lead to the emergence of "intelligent" global behavior, unknown to the individual agents. Examples of SI in natural systems include ant colonies, bird flocking, animal herding, bacterial growth, fish schooling and microbial intelligence.

The application of swarm principles to robots is called swarm robotics, while 'swarm intelligence' refers to the more general set of algorithms. 'Swarm prediction' has been used in the context of forecasting problems.

Example algorithms

Particle swarm optimization

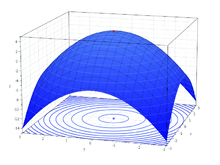

Particle swarm optimization (PSO) is a global optimization algorithm for problems in which a best solution can be represented as a point or surface in an n-dimensional space. Hypotheses (particles) are plotted in this space and seeded with an initial velocity, and a communication channel is established between the particles.[2][3] Particles then move through the solution space and are evaluated according to some fitness criterion after each timestep. Over time, particles are accelerated towards those particles within their communication grouping which have better fitness values. The main advantage of such an approach over other global minimization strategies such as simulated annealing is that the large number of members that make up the particle swarm makes the technique comparatively resilient to the problem of local minima.
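The following is a minimal sketch of the global-best variant of PSO, in which every particle's communication grouping is the whole swarm. The function names, the sphere test objective and the parameter values (inertia `w`, acceleration coefficients `c1`, `c2`) are illustrative assumptions, not taken from the cited sources.

```python
import random

def sphere(x):
    """Test objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)

def pso(f, dim, n_particles=30, iters=200, bounds=(-5.0, 5.0),
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO for minimization; parameter values are illustrative."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    # initial velocities: small random values relative to the search range
    vel = [[0.1 * rng.uniform(lo - hi, hi - lo) for _ in range(dim)]
           for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                    # best position each particle has seen
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # best position known to the swarm

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull toward own best + pull toward swarm best
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

best, best_val = pso(sphere, dim=3)
```

Restricting `gbest` to the best particle in a small neighbourhood, rather than the whole swarm, would give the local-best variant.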

Ant colony optimization

Ant colony optimization (ACO), introduced by Dorigo in his doctoral dissertation, is a class of optimization algorithms modeled on the actions of an ant colony. ACO is a probabilistic technique useful in problems that deal with finding better paths through graphs. Artificial 'ants'—simulation agents—locate optimal solutions by moving through a parameter space representing all possible solutions. Natural ants lay down pheromones directing each other to resources while exploring their environment. The simulated 'ants' similarly record their positions and the quality of their solutions, so that in later simulation iterations more ants locate better solutions.[4]
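A minimal sketch of the Ant System, the earliest ACO variant, applied to a small travelling salesman instance. Pheromone is stored per edge, evaporates every iteration, and is deposited in proportion to tour quality, so later ants favour edges of short tours. The function names and parameter values (`alpha`, `beta`, `rho`, `q`) are illustrative assumptions.

```python
import math
import random

def tour_length(tour, dist):
    """Length of a closed tour (returns to the start city)."""
    return sum(dist[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))

def ant_system(dist, n_ants=20, iters=100, alpha=1.0, beta=3.0, rho=0.5, q=1.0, seed=0):
    rng = random.Random(seed)
    n = len(dist)
    tau = [[1.0] * n for _ in range(n)]          # pheromone level on each edge
    best_tour, best_len = None, math.inf
    for _ in range(iters):
        tours = []
        for _ in range(n_ants):
            tour = [rng.randrange(n)]
            unvisited = set(range(n)) - {tour[0]}
            while unvisited:
                i, cand = tour[-1], sorted(unvisited)
                # move probability ~ pheromone^alpha * (1 / distance)^beta
                weights = [tau[i][j] ** alpha * (1.0 / dist[i][j]) ** beta for j in cand]
                j = rng.choices(cand, weights=weights)[0]
                tour.append(j)
                unvisited.remove(j)
            tours.append(tour)
        for row in tau:                          # evaporation
            for j in range(n):
                row[j] *= 1.0 - rho
        for tour in tours:                       # deposit: shorter tours leave more pheromone
            length = tour_length(tour, dist)
            if length < best_len:
                best_tour, best_len = tour, length
            for k in range(n):
                a, b = tour[k], tour[(k + 1) % n]
                tau[a][b] += q / length
                tau[b][a] += q / length
    return best_tour, best_len

# eight cities on a unit circle, listed out of order; the optimal tour is the octagon
order = [0, 3, 6, 1, 4, 7, 2, 5]
cities = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in order]
dist = [[math.dist(p, r) for r in cities] for p in cities]
tour, length = ant_system(dist)
```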

Ant lion optimizer

The Ant Lion Optimizer (ALO) mimics the hunting mechanism of antlions in nature. It implements five main steps of hunting prey: the random walk of ants, building traps, entrapment of ants in traps, catching prey, and re-building traps. The algorithm was proposed by Mirjalili in 2015[5] (see also Grey wolf optimizer).

Artificial bee colony algorithm

The artificial bee colony algorithm (ABC) is a metaheuristic introduced by Karaboga in 2005[6] that simulates the foraging behaviour of honey bees. The ABC algorithm has three phases: employed bee, onlooker bee and scout bee. In the employed bee and onlooker bee phases, bees exploit food sources by local searches in the neighbourhood of solutions, which are selected deterministically in the employed bee phase and probabilistically in the onlooker bee phase. In the scout bee phase, which is analogous to abandoning exhausted food sources in the foraging process, solutions that no longer benefit search progress are abandoned and replaced by new solutions, so that new regions of the search space are explored. The algorithm is intended to provide a good balance between exploration and exploitation.
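A compact sketch of the three ABC phases for minimizing a continuous function. It assumes the commonly used neighbour move v_ij = x_ij + φ(x_ij − x_kj) with φ uniform in [−1, 1], the fitness transform 1/(1 + f) for non-negative objective values, and an abandonment counter compared against a `limit` parameter; all names and values are illustrative.

```python
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def abc_minimize(f, dim, bounds, n_sources=20, limit=50, iters=300, seed=0):
    """Artificial bee colony sketch (minimization); parameter values are illustrative."""
    rng = random.Random(seed)
    lo, hi = bounds

    def random_source():
        return [rng.uniform(lo, hi) for _ in range(dim)]

    def fitness(v):
        # common ABC fitness transform of an objective value (larger is better)
        return 1.0 / (1.0 + v) if v >= 0 else 1.0 + abs(v)

    foods = [random_source() for _ in range(n_sources)]
    vals = [f(x) for x in foods]
    trials = [0] * n_sources          # consecutive failures per food source

    def search_near(i):
        # perturb one coordinate of source i relative to a different source k
        k = rng.choice([s for s in range(n_sources) if s != i])
        j = rng.randrange(dim)
        cand = foods[i][:]
        cand[j] += rng.uniform(-1.0, 1.0) * (foods[i][j] - foods[k][j])
        cand[j] = min(max(cand[j], lo), hi)
        v = f(cand)
        if v < vals[i]:               # greedy selection: keep the better source
            foods[i], vals[i], trials[i] = cand, v, 0
        else:
            trials[i] += 1

    b = min(range(n_sources), key=vals.__getitem__)
    best_x, best_v = foods[b][:], vals[b]
    for _ in range(iters):
        for i in range(n_sources):    # employed bees: one per source, deterministic
            search_near(i)
        weights = [fitness(v) for v in vals]
        for _ in range(n_sources):    # onlookers: fitness-proportional choice of source
            search_near(rng.choices(range(n_sources), weights=weights)[0])
        for i in range(n_sources):    # remember the best source before any are abandoned
            if vals[i] < best_v:
                best_x, best_v = foods[i][:], vals[i]
        for i in range(n_sources):    # scouts: replace sources that stopped improving
            if trials[i] > limit:
                foods[i] = random_source()
                vals[i], trials[i] = f(foods[i]), 0
    return best_x, best_v

best_x, best_v = abc_minimize(sphere, 2, (-5.0, 5.0))
```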

Bacterial colony optimization

Bacterial colony optimization is based on a lifecycle model that simulates some typical behaviors of E. coli bacteria throughout their lifecycle, including chemotaxis, communication, elimination, reproduction, and migration.[7]

Bacterial communication and self-organization in the context of network theory have been investigated by Eshel Ben-Jacob's research group at Tel Aviv University, which developed a fractal model of bacterial colonies and identified linguistic and social patterns in the colony lifecycle[8] (see also Ben-Jacob's bacteria, Microbial intelligence and Microbial cooperation).

Differential evolution

Differential evolution is similar to genetic algorithms and pattern search. It uses multiple agents, or search vectors, to carry out the search. It has mutation and crossover operators but, in contrast with particle swarm optimization, does not use the global best solution in its search equations.
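The remark about the global best can be seen in a sketch of the classic DE/rand/1/bin scheme: each trial vector is built only from randomly chosen population members and competes solely with its own parent. The function names and the control parameters `F` (differential weight) and `CR` (crossover rate) are illustrative assumptions.

```python
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def differential_evolution(f, dim, bounds, pop_size=20, F=0.8, CR=0.9, gens=200, seed=0):
    """DE/rand/1/bin sketch (minimization); F and CR values are illustrative."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    vals = [f(x) for x in pop]
    for _ in range(gens):
        for i in range(pop_size):
            # mutation: scaled difference of two random vectors added to a third
            a, b, c = rng.sample([k for k in range(pop_size) if k != i], 3)
            mutant = [pop[a][d] + F * (pop[b][d] - pop[c][d]) for d in range(dim)]
            # binomial crossover; index j_rand always comes from the mutant
            j_rand = rng.randrange(dim)
            trial = [min(max(mutant[d], lo), hi) if (d == j_rand or rng.random() < CR)
                     else pop[i][d] for d in range(dim)]
            v = f(trial)
            # one-to-one selection against the parent; no global best is used
            if v <= vals[i]:
                pop[i], vals[i] = trial, v
    b = min(range(pop_size), key=vals.__getitem__)
    return pop[b], vals[b]

best_x, best_v = differential_evolution(sphere, 3, (-5.0, 5.0))
```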

The bees algorithm

The bees algorithm in its basic formulation was created by Pham and his co-workers in 2005,[9] and further refined in the following years.[10] Modelled on the foraging behaviour of honey bees, the algorithm combines global explorative search with local exploitative search. A small number of artificial bees (scouts) randomly explore the solution space (environment) for solutions of high fitness (highly profitable food sources), whilst the bulk of the population searches (harvests) the neighbourhood of the fittest solutions looking for the fitness optimum. A deterministic recruitment procedure, which simulates the waggle dance of biological bees, is used to communicate the scouts' findings to the foragers and to distribute the foragers depending on the fitness of the neighbourhoods selected for local search. Once the search in the neighbourhood of a solution stagnates, the local fitness optimum is considered to be found, and the site is abandoned. In summary, the bees algorithm concurrently searches the most promising regions of the solution space, whilst continuously sampling it in search of new favourable regions.
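A compact sketch of the basic bees algorithm, loosely following the parameter names used in the bees-algorithm literature: `n` scouts, `m` selected sites of which `e` are elite, `nep`/`nsp` foragers recruited to elite/other selected sites, a patch half-width `ngh` that shrinks when a site stops improving, and abandonment after `stlim` stagnant cycles. The rank-based forager counts stand in for the waggle-dance recruitment; names and values are illustrative assumptions.

```python
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def bees_algorithm(f, dim, bounds, n=30, m=6, e=2, nep=10, nsp=5,
                   ngh=1.0, shrink=0.8, stlim=10, iters=100, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds

    def scout():
        # a scout samples the whole solution space uniformly at random
        x = [rng.uniform(lo, hi) for _ in range(dim)]
        return {"x": x, "val": f(x), "ngh": ngh, "stag": 0}

    sites = sorted((scout() for _ in range(n)), key=lambda s: s["val"])
    best_x, best_val = sites[0]["x"], sites[0]["val"]
    for _ in range(iters):
        for rank, site in enumerate(sites[:m]):
            # deterministic recruitment: elite sites receive more foragers
            improved = False
            for _ in range(nep if rank < e else nsp):
                y = [min(max(xi + rng.uniform(-site["ngh"], site["ngh"]), lo), hi)
                     for xi in site["x"]]
                vy = f(y)
                if vy < site["val"]:
                    site["x"], site["val"], improved = y, vy, True
            if improved:
                site["stag"] = 0
            else:
                site["ngh"] *= shrink      # neighbourhood shrinking
                site["stag"] += 1
            if site["val"] < best_val:
                best_x, best_val = site["x"], site["val"]
        # abandon stagnant sites; the remaining bees scout randomly
        kept = [s if s["stag"] < stlim else scout() for s in sites[:m]]
        sites = sorted(kept + [scout() for _ in range(n - m)], key=lambda s: s["val"])
    return best_x, best_val

best_x, best_val = bees_algorithm(sphere, 2, (-5.0, 5.0))
```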

Artificial immune systems

Artificial immune systems (AIS) concern the application of the abstract structure and function of the immune system to computational systems, and the investigation of how these systems can be used to solve computational problems from mathematics, engineering, and information technology. AIS is a sub-field of biologically inspired computing and natural computation, with interests in machine learning, and belongs to the broader field of artificial intelligence.

Grey wolf optimizer

The grey wolf optimizer (GWO), proposed by Mirjalili et al. in 2014,[11] mimics the leadership hierarchy and hunting mechanism of grey wolves in nature. Four types of grey wolves, alpha, beta, delta, and omega, are employed to simulate the leadership hierarchy. In addition, the three main steps of hunting (searching for prey, encircling prey, and attacking prey) are implemented to perform optimization.
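A minimal sketch of the GWO position update, assuming the usual formulation in which each wolf moves to the average of three estimates derived from the alpha, beta and delta wolves, with coefficients A = 2a·r1 − a and C = 2·r2, and with a decreasing linearly from 2 to 0. Names and parameter values are illustrative.

```python
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def grey_wolf_optimizer(f, dim, bounds, n_wolves=20, iters=200, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    wolves = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_wolves)]
    best_x, best_val = None, float("inf")
    for t in range(iters):
        ranked = sorted(wolves, key=f)
        alpha, beta, delta = ranked[0], ranked[1], ranked[2]   # leadership hierarchy
        if f(alpha) < best_val:
            best_x, best_val = alpha[:], f(alpha)
        a = 2.0 * (1 - t / iters)          # decreases linearly from 2 to 0
        new_pack = []
        for x in wolves:                   # every wolf, omegas included, follows the leaders
            new = []
            for d in range(dim):
                est = 0.0
                for leader in (alpha, beta, delta):
                    A = 2.0 * a * rng.random() - a     # |A| > 1 searching, |A| < 1 attacking
                    C = 2.0 * rng.random()
                    D = abs(C * leader[d] - x[d])      # encircling: distance to the leader
                    est += leader[d] - A * D
                new.append(min(max(est / 3.0, lo), hi))
            new_pack.append(new)
        wolves = new_pack
    final = min(wolves, key=f)
    if f(final) < best_val:
        best_x, best_val = final[:], f(final)
    return best_x, best_val

best_x, best_val = grey_wolf_optimizer(sphere, 3, (-5.0, 5.0))
```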

Bat algorithm

The bat algorithm (BA) is a swarm-intelligence-based algorithm inspired by the echolocation behavior of microbats. BA uses frequency tuning, and balances exploration and exploitation automatically by controlling loudness and pulse emission rates.[12]
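A sketch of the bat algorithm loop, assuming the commonly cited update rules: a random frequency in `[f_min, f_max]` scales each bat's velocity update relative to the current best, a local random walk around the best is taken when a random draw exceeds the bat's pulse rate, and successful moves make a bat quieter (loudness multiplied by `alpha`) while raising its pulse rate toward `r0`. The random-walk scale, the bounds handling and all parameter values are illustrative assumptions.

```python
import math
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def bat_algorithm(f, dim, bounds, n_bats=20, iters=300, f_min=0.0, f_max=2.0,
                  alpha=0.9, gamma=0.9, r0=0.5, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    clip = lambda z: min(max(z, lo), hi)
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_bats)]
    v = [[0.0] * dim for _ in range(n_bats)]
    fit = [f(xi) for xi in x]
    loud = [1.0] * n_bats    # loudness A_i: high at first, decays after successes
    rate = [0.0] * n_bats    # pulse emission rate r_i: low at first, rises toward r0
    b = min(range(n_bats), key=fit.__getitem__)
    best, best_val = x[b][:], fit[b]
    for t in range(1, iters + 1):
        mean_loud = sum(loud) / n_bats
        for i in range(n_bats):
            freq = f_min + (f_max - f_min) * rng.random()      # frequency tuning
            v[i] = [v[i][d] + (x[i][d] - best[d]) * freq for d in range(dim)]
            cand = [clip(x[i][d] + v[i][d]) for d in range(dim)]
            if rng.random() > rate[i]:
                # local random walk around the best, scaled by the mean loudness
                cand = [clip(best[d] + rng.uniform(-1.0, 1.0) * mean_loud) for d in range(dim)]
            val = f(cand)
            if val < fit[i] and rng.random() < loud[i]:
                x[i], fit[i] = cand, val
                loud[i] *= alpha                             # quieter after a success
                rate[i] = r0 * (1 - math.exp(-gamma * t))    # emits pulses more often
            if val < best_val:
                best, best_val = cand[:], val
    return best, best_val

best, best_val = bat_algorithm(sphere, 2, (-5.0, 5.0))
```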

Multi-Verse Optimizer

The Multi-Verse Optimizer (MVO) is inspired by three concepts in cosmology: white holes, black holes, and wormholes. Mathematical models of these three concepts are developed to perform exploration, exploitation, and local search, respectively.[13]

Gravitational search algorithm

The gravitational search algorithm (GSA) is based on the law of gravity and the notion of mass interactions. It uses the theory of Newtonian physics, and its searcher agents are a collection of masses forming an isolated system. Through the gravitational force, every mass in the system can "see" the situation of the other masses, so the gravitational force serves as a way of transferring information between masses (Rashedi, Nezamabadi-pour and Saryazdi 2009).[14] In GSA, agents are considered as objects and their performance is measured by their masses. All these objects attract each other by a gravity force, and this force causes a global movement of all objects towards the objects with heavier masses. The heavy masses correspond to good solutions of the problem. The position of each agent corresponds to a solution of the problem, and its mass is determined using a fitness function. Over time, masses are attracted by the heaviest mass, which would ideally represent an optimum solution in the search space. GSA can thus be viewed as a small artificial world of masses obeying the Newtonian laws of gravitation and motion (Rashedi, Nezamabadi-pour and Saryazdi 2009). A multi-objective variant of GSA, called the Non-dominated Sorting Gravitational Search Algorithm (NSGSA), was proposed by Nobahari and Nikusokhan in 2011.[15]
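A simplified GSA sketch, assuming the usual formulation: masses are normalized fitness values, the gravitational constant decays as G(t) = G0·exp(−α·t/T), each agent's acceleration is a randomly weighted sum of pulls G·M_j·(x_j − x_i)/(R_ij + ε), and each velocity keeps a random fraction of its previous value. The refinement in which only the best `Kbest` agents exert force is omitted; names and parameter values are illustrative.

```python
import math
import random

def sphere(x):
    return sum(xi * xi for xi in x)

def gsa(f, dim, bounds, n_agents=20, iters=500, g0=100.0, decay=20.0, eps=1e-12, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_agents)]
    v = [[0.0] * dim for _ in range(n_agents)]
    best_x, best_val = None, math.inf
    for t in range(iters):
        fit = [f(xi) for xi in x]
        b = min(range(n_agents), key=fit.__getitem__)
        if fit[b] < best_val:
            best_x, best_val = x[b][:], fit[b]
        worst, best = max(fit), min(fit)
        # mass from fitness: the best agent gets raw mass 1, the worst gets 0
        raw = [1.0] * n_agents if worst == best else [(worst - fi) / (worst - best) for fi in fit]
        total = sum(raw)
        mass = [m / total for m in raw]
        G = g0 * math.exp(-decay * t / iters)    # gravitational "constant" decays over time
        acc = []
        for i in range(n_agents):
            a_i = [0.0] * dim
            for j in range(n_agents):
                if j != i:
                    # randomly weighted pull toward j; M_i cancels in a_i = F_i / M_i
                    w = rng.random() * G * mass[j] / (math.dist(x[i], x[j]) + eps)
                    for d in range(dim):
                        a_i[d] += w * (x[j][d] - x[i][d])
            acc.append(a_i)
        for i in range(n_agents):
            v[i] = [rng.random() * v[i][d] + acc[i][d] for d in range(dim)]
            x[i] = [min(max(x[i][d] + v[i][d], lo), hi) for d in range(dim)]
    return best_x, best_val

best_x, best_val = gsa(sphere, 2, (-5.0, 5.0))
```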

Altruism algorithm

Researchers in Switzerland have developed an algorithm based on Hamilton's rule of kin selection. This algorithm shows how altruism in a swarm of entities can, over time, evolve and result in more effective swarm behaviour.[16][17]

Glowworm swarm optimization

Glowworm swarm optimization (GSO) was introduced by Krishnanand and Ghose in 2005 for the simultaneous computation of multiple optima of multimodal functions.[18][19][20][21] The algorithm shares a few features with some better-known algorithms, such as ant colony optimization and particle swarm optimization, but with several significant differences. The agents in GSO are thought of as glowworms that carry a luminescence quantity called luciferin along with them. The glowworms encode the fitness of their current locations, evaluated using the objective function, into a luciferin value that they broadcast to their neighbors. Each glowworm identifies its neighbors and computes its movements by exploiting an adaptive neighborhood, which is bounded above by its sensor range. Each glowworm selects, using a probabilistic mechanism, a neighbor that has a luciferin value higher than its own and moves toward it. These movements, based only on local information and selective neighbor interactions, enable the swarm of glowworms to partition into disjoint subgroups that converge on multiple optima of a given multimodal function.
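A one-dimensional GSO sketch, assuming the update rules commonly given for the algorithm: luciferin decays by a factor (1 − ρ) and is enhanced by γ·J(x); each glowworm picks a brighter neighbour within its decision range with probability proportional to the luciferin difference and takes a step of length `s` toward it; and the decision range grows or shrinks to aim for `n_t` neighbours, capped by the sensor range `r_s`. The parameter values and the two-peak test function are illustrative.

```python
import math
import random

def gso(J, n=50, lo=-4.0, hi=4.0, iters=300, rho=0.4, gamma=0.6, beta=0.08,
        n_t=5, s=0.05, r_s=2.0, l0=5.0, seed=0):
    """One-dimensional glowworm swarm optimization sketch (maximizes J)."""
    rng = random.Random(seed)
    x = [rng.uniform(lo, hi) for _ in range(n)]
    luc = [l0] * n            # luciferin carried by each glowworm
    r_d = [r_s] * n           # adaptive decision range of each glowworm
    for _ in range(iters):
        # luciferin update: decay plus enhancement proportional to fitness
        luc = [(1 - rho) * luc[i] + gamma * J(x[i]) for i in range(n)]
        new_x = x[:]
        for i in range(n):
            nbrs = [j for j in range(n)
                    if j != i and abs(x[j] - x[i]) < r_d[i] and luc[j] > luc[i]]
            if nbrs:
                # pick a brighter neighbour with probability ~ luciferin difference
                j = rng.choices(nbrs, weights=[luc[k] - luc[i] for k in nbrs])[0]
                step = s if x[j] > x[i] else -s
                new_x[i] = min(max(x[i] + step, lo), hi)
            # decision range moves toward n_t neighbours, bounded by [0, r_s]
            r_d[i] = min(r_s, max(0.0, r_d[i] + beta * (n_t - len(nbrs))))
        x = new_x
    return x

def two_peaks(x):
    return math.exp(-(x - 2) ** 2) + math.exp(-(x + 2) ** 2)

final = gso(two_peaks)
```

Because every move uses only neighbours inside the adaptive range, glowworms on either side of the valley can settle on different peaks, which is the partitioning behaviour described above.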

River formation dynamics

River formation dynamics (RFD) is based on imitating how water forms rivers by eroding the ground and depositing sediments. As drops transform the landscape by increasing or decreasing the altitude of places, solutions are given in the form of paths of decreasing altitudes. Decreasing gradients are constructed, and these gradients are followed by subsequent drops to compose new gradients and reinforce the best ones. This heuristic optimization method was first presented in 2007 by Rabanal et al.[22] The applicability of RFD to other NP-complete problems has been studied in several works,[23][24][25] and other authors have applied RFD to robot navigation[26] and to routing protocols.[27]

Self-propelled particles

Self-propelled particles (SPP), also referred to as the Vicsek model, was introduced in 1995 by Vicsek et al.[28] as a special case of the boids model introduced in 1986 by Reynolds.[29] A swarm is modelled in SPP by a collection of particles that move with a constant speed but respond to a random perturbation by adopting at each time increment the average direction of motion of the other particles in their local neighbourhood.[30] SPP models predict that swarming animals share certain properties at the group level, regardless of the type of animals in the swarm.[31] Swarming systems give rise to emergent behaviours which occur at many different scales, some of which are turning out to be both universal and robust. It has become a challenge in theoretical physics to find minimal statistical models that capture these behaviours.[32][33][34]
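A minimal sketch of the Vicsek model as described above: constant-speed particles in a periodic square box, each adopting the average heading of neighbours within distance `radius` (itself included) plus uniform noise in [−η/2, η/2]. The average heading is taken as the angle of the summed unit vectors, and the order parameter is the magnitude of the mean heading vector. Box size, density, speed and noise values are illustrative assumptions.

```python
import math
import random

def vicsek(n=100, box=5.0, speed=0.03, radius=1.0, eta=0.1, steps=200, seed=0):
    """Vicsek-style self-propelled particles in a periodic box; returns final headings."""
    rng = random.Random(seed)
    pos = [(rng.uniform(0, box), rng.uniform(0, box)) for _ in range(n)]
    theta = [rng.uniform(-math.pi, math.pi) for _ in range(n)]
    for _ in range(steps):
        new_theta = []
        for i in range(n):
            sx = sy = 0.0
            for j in range(n):
                # minimum-image separation on the torus
                dx = (pos[j][0] - pos[i][0] + box / 2) % box - box / 2
                dy = (pos[j][1] - pos[i][1] + box / 2) % box - box / 2
                if dx * dx + dy * dy <= radius * radius:   # neighbourhood includes i
                    sx += math.cos(theta[j])
                    sy += math.sin(theta[j])
            # average neighbour direction plus uniform noise in [-eta/2, eta/2]
            new_theta.append(math.atan2(sy, sx) + rng.uniform(-eta / 2, eta / 2))
        theta = new_theta
        # every particle moves at the same constant speed along its new heading
        pos = [((x + speed * math.cos(t)) % box, (y + speed * math.sin(t)) % box)
               for (x, y), t in zip(pos, theta)]
    return theta

def order_parameter(theta):
    """Magnitude of the mean heading vector: near 0 if disordered, 1 if fully aligned."""
    n = len(theta)
    return math.hypot(sum(math.cos(t) for t in theta) / n,
                      sum(math.sin(t) for t in theta) / n)
```

Sweeping `eta` from small values toward 2π and recording `order_parameter(vicsek(eta=...))` is one way to look for the noise-driven transition from ordered to disordered motion reported by Vicsek et al.; where it occurs depends on density and box size.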

Stochastic diffusion search

Stochastic diffusion search (SDS)[35][36] is an agent-based probabilistic global search and optimization technique best suited to problems where the objective function can be decomposed into multiple independent partial functions. Each agent maintains a hypothesis which is iteratively tested by evaluating a randomly selected partial objective function parameterised by the agent's current hypothesis. In the standard version of SDS such partial function evaluations are binary, resulting in each agent becoming active or inactive. Information on hypotheses is diffused across the population via inter-agent communication. Unlike the stigmergic communication used in ACO, in SDS agents communicate hypotheses via a one-to-one communication strategy analogous to the tandem running procedure observed in Leptothorax acervorum.[37] A positive feedback mechanism ensures that, over time, a population of agents stabilises around the global-best solution. SDS is both an efficient and robust global search and optimisation algorithm, and it has been extensively described mathematically.[38][39][40] Recent work has involved merging the global search properties of SDS with other swarm intelligence algorithms.[41][42]
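A minimal SDS sketch for a string-search task of the kind often used to illustrate the method. It assumes the standard test and diffusion phases: each agent's hypothesis is a start position in a text, the partial function it evaluates compares one randomly chosen character of the model string, and each inactive agent polls one randomly chosen agent, copying its hypothesis if that agent is active and otherwise picking a new random position. Names and sizes are illustrative.

```python
import random
from collections import Counter

def sds_search(text, model, n_agents=100, iters=100, seed=0):
    """Stochastic diffusion search for `model` in `text`; returns (position, cluster size)."""
    rng = random.Random(seed)
    n_pos = len(text) - len(model) + 1           # candidate hypotheses: start offsets
    hyp = [rng.randrange(n_pos) for _ in range(n_agents)]
    active = [False] * n_agents
    for _ in range(iters):
        # test phase: evaluate one randomly chosen partial function (one character)
        for i in range(n_agents):
            k = rng.randrange(len(model))
            active[i] = text[hyp[i] + k] == model[k]
        # diffusion phase: each inactive agent polls one randomly chosen agent
        new_hyp = hyp[:]
        for i in range(n_agents):
            if not active[i]:
                j = rng.randrange(n_agents)
                new_hyp[i] = hyp[j] if active[j] else rng.randrange(n_pos)
        hyp = new_hyp
    # the largest cluster of agents identifies the best-supported hypothesis
    return Counter(hyp).most_common(1)[0]

position, support = sds_search("the warm sward swarms onward", "swarm")
```

The near-matches "sward" and " warm" each agree with the model in four of five characters and can hold agents for a while, but agents at the exact match never fail a test, so that cluster can only grow.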

Multi-swarm optimization

Multi-swarm optimization is a variant of particle swarm optimization (PSO) based on the use of multiple sub-swarms instead of one (standard) swarm. The general approach in multi-swarm optimization is that each sub-swarm focuses on a specific region while a specific diversification method decides where and when to launch the sub-swarms. The multi-swarm framework is especially suited to the optimization of multimodal problems, where multiple (local) optima exist.

Applications

Swarm intelligence-based techniques can be used in a number of applications. The U.S. military is investigating swarm techniques for controlling unmanned vehicles. The European Space Agency is considering an orbital swarm for self-assembly and interferometry. NASA is investigating the use of swarm technology for planetary mapping. A 1992 paper by M. Anthony Lewis and George A. Bekey discusses the possibility of using swarm intelligence to control nanobots within the body for the purpose of killing cancer tumors.[43] Conversely, al-Rifaie and Aber have used stochastic diffusion search to help locate tumours.[44][45] Swarm intelligence has also been applied to data mining.[46]

Ant-based routing

The use of swarm intelligence in telecommunication networks has also been researched, in the form of ant-based routing. This was pioneered separately by Dorigo et al. and Hewlett-Packard in the mid-1990s, with a number of variations since. Essentially, it uses a probabilistic routing table that rewards or reinforces the route successfully traversed by each "ant" (a small control packet), with the ants flooding the network. Reinforcement of the route in the forward direction, the reverse direction, and both simultaneously has been researched: backward reinforcement requires a symmetric network and couples the two directions together; forward reinforcement rewards a route before the outcome is known (much like paying for the cinema before you know how good the film is). As the system behaves stochastically and therefore lacks repeatability, there are large hurdles to commercial deployment. Mobile media and new technologies have the potential to change the threshold for collective action due to swarm intelligence (Rheingold: 2002, P175).
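A toy sketch of backward reinforcement in a probabilistic routing table, loosely in the style of AntNet-like schemes (an assumption; the pioneering systems differ in detail). Each ant picks next hops from the table for its destination; after it arrives, the hop it used at each node is reinforced by an amount that grows as the trip delay falls, and the alternatives are decayed. The four-node network, its delays, the function names and the reward rule `c / delay` are hypothetical.

```python
import random

def make_tables(graph):
    """table[node][dest][next_hop] -> probability, uniform over neighbours at first."""
    return {u: {d: {v: 1.0 / len(graph[u]) for v in graph[u]} for d in graph if d != u}
            for u in graph}

def send_ant(graph, tables, src, dst, rng, c=0.5):
    """Forward trip chosen from the tables, then backward reinforcement of the hops used."""
    path, node, delay = [src], src, 0.0
    while node != dst:
        options = [v for v in graph[node] if v not in path]    # simple loop avoidance
        if not options:
            return None                                         # dead end: drop the ant
        nxt = rng.choices(options, weights=[tables[node][dst][v] for v in options])[0]
        delay += graph[node][nxt]
        path.append(nxt)
        node = nxt
    r = min(0.9, c / delay)                 # hypothetical reward: faster trips earn more
    for here, hop in zip(path[:-1], path[1:]):
        row = tables[here][dst]
        for v in row:
            # reinforce the hop that was used, decay the alternatives
            row[v] = row[v] + r * (1 - row[v]) if v == hop else row[v] * (1 - r)
    return path, delay

# hypothetical symmetric network: route S-A-D has delay 2, route S-B-D has delay 10
graph = {"S": {"A": 1, "B": 5}, "A": {"S": 1, "D": 1},
         "B": {"S": 5, "D": 5}, "D": {"A": 1, "B": 5}}
tables = make_tables(graph)
rng = random.Random(0)
for _ in range(500):
    send_ant(graph, tables, "S", "D", rng)
```

Each row stays a probability distribution, since the reinforced entry gains r(1 − p) while the other entries together lose exactly that amount.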

The location of transmission infrastructure for wireless communication networks is an important engineering problem involving competing objectives. A minimal selection of locations (or sites) is required, subject to providing adequate area coverage for users. A very different, ant-inspired swarm intelligence algorithm, stochastic diffusion search (SDS), has been successfully used to provide a general model for this problem, which is related to circle packing and set covering. It has been shown that SDS can be applied to identify suitable solutions even for large problem instances.[47]

Airlines have also used ant-based routing in assigning aircraft arrivals to airport gates. At Southwest Airlines a software program uses swarm theory, or swarm intelligence—the idea that a colony of ants works better than one alone. Each pilot acts like an ant searching for the best airport gate. "The pilot learns from his experience what's the best for him, and it turns out that that's the best solution for the airline," Douglas A. Lawson explains. As a result, the "colony" of pilots always go to gates they can arrive at and depart from quickly. The program can even alert a pilot of plane back-ups before they happen. "We can anticipate that it's going to happen, so we'll have a gate available," Lawson says.[48]

Crowd simulation

Artists are using swarm technology as a means of creating complex interactive systems or simulating crowds.

Stanley and Stella in: Breaking the Ice was the first movie to make use of swarm technology for rendering, realistically depicting the movements of groups of fish and birds using the Boids system. Tim Burton's Batman Returns also made use of swarm technology for showing the movements of a group of bats. The Lord of the Rings film trilogy made use of similar technology, known as Massive, during battle scenes. Swarm technology is particularly attractive because it is cheap, robust, and simple.

Airlines have used swarm theory to simulate passengers boarding a plane. Southwest Airlines researcher Douglas A. Lawson used an ant-based computer simulation employing only six interaction rules to evaluate boarding times using various boarding methods.(Miller, 2010, xii-xviii).[49]

Swarmic art

In a series of works al-Rifaie et al.[50] have successfully used two swarm intelligence algorithms – one mimicking the behaviour of one species of ants (Leptothorax acervorum) foraging (stochastic diffusion search, SDS) and the other algorithm mimicking the behaviour of birds flocking (particle swarm optimization, PSO) – to describe a novel integration strategy exploiting the local search properties of the PSO with global SDS behaviour. The resulting hybrid algorithm is used to sketch novel drawings of an input image, exploiting an artistic tension between the local behaviour of the ‘birds flocking’ - as they seek to follow the input sketch - and the global behaviour of the "ants foraging" - as they seek to encourage the flock to explore novel regions of the canvas. The "creativity" of this hybrid swarm system has been analysed under the philosophical light of the "rhizome" in the context of Deleuze’s "Orchid and Wasp" metaphor.[51]

A more recent work by al-Rifaie et al., "Swarmic Sketches and Attention Mechanism",[52] introduces a novel approach deploying the mechanism of 'attention' by adapting SDS to selectively attend to detailed areas of a digital canvas. Once the attention of the swarm is drawn to a certain line within the canvas, the capability of PSO is used to produce a 'swarmic sketch' of the attended line. The swarms move throughout the digital canvas in an attempt to satisfy their dynamic roles (attention to areas with more details) associated with them via their fitness function. Having associated the rendering process with the concepts of attention, the performance of the participating swarms creates a unique, non-identical sketch each time the ‘artist’ swarms embark on interpreting the input line drawings. In other words, while PSO is responsible for the sketching process, SDS controls the attention of the swarm.

In a similar work, "Swarmic Paintings and Colour Attention",[53] non-photorealistic images are produced using an SDS algorithm which, in the context of this work, is responsible for colour attention.

The "computational creativity" of the above-mentioned systems is discussed in[50][54][55][56] through the two prerequisites of creativity (i.e. freedom and constraints) within the two well-known phases of swarm intelligence: exploration and exploitation.

In popular culture

Swarm intelligence-related concepts and references can be found throughout popular culture, frequently as some form of collective intelligence or group mind involving far more agents than used in current applications.

- Science fiction writer Olaf Stapledon may have been the first to discuss swarm intelligences equal or superior to humanity. In Last and First Men (1931), a swarm intelligence from Mars consists of tiny individual cells that communicate with each other by radio waves; in Star Maker (1937) swarm intelligences founded numerous civilizations.

- The Invincible (1964), a science fiction novel by Stanisław Lem in which a human spaceship finds intelligent behavior in a flock of small particles that are able to defend themselves against what they perceive as a threat.

- In the dramatic novel and subsequent mini-series The Andromeda Strain (1969) by Michael Crichton, an extraterrestrial virus communicates between individual cells and displays the ability to think and react individually and as a whole, and as such displays a semblance of "swarm intelligence".

- Ygramul, the Many - an intelligent being consisting of a swarm of many wasp-like insects, a character in the novel The Neverending Story (1979) written by Michael Ende. Ygramul is also mentioned in a scientific paper, "Flocks, Herds, and Schools" written by Knut Hartmann (Computer Graphics and Interactive Systems, Otto-von-Guericke University of Magdeburg).[57]

- Swarm (1982), a short story by Bruce Sterling about a mission undertaken by a faction of humans to understand and exploit a space-faring swarm intelligence.

- In the book Ender's Game (1985), the Formics (known popularly as Buggers) are a swarm intelligence with colonies or armadas each directed by a single queen.

- In Star Trek: The Next Generation (1989) and Star Trek: Voyager (1995), the Borg are a hierarchical swarm intelligence that assimilates humanoid species to grow.

- The Hacker and the Ants (1994), a book by Rudy Rucker on AI ants within a virtual environment.

- Hallucination (1995), a posthumously published short story by Isaac Asimov about an alien insect-like swarm, capable of organization and provided with a sort of swarm intelligence.

- The Zerg (1998) of the StarCraft universe demonstrate such concepts when in groups and enhanced by the psychic control of taskmaster breeds.

- Decipher (2001) by Stel Pavlou deals with the swarm intelligence of nanobots that guard against intruders in Atlantis.

- In the video game series Halo, the Covenant (2001) species known as the Hunters are made up of thousands of worm-like creatures which are individually non-sentient, but, collectively form a sentient being.

- Prey (2002), by Michael Crichton, deals with the danger of nanobots escaping from human control and developing a swarm intelligence.

- In the Legends of Dune series (2002), Omnius becomes a swarm intelligence by taking over almost all of the artificial intelligence that exists in the universe.

- The science fiction novel The Swarm (2004), by Frank Schätzing, deals with underwater single-celled creatures who act in unison to destroy humanity.

- In the video game Mass Effect (2007), a galactic race known as the Quarians created a race of humanoid machines known as the Geth, which worked as a swarm intelligence in order to avoid restrictions on true AI. However, the Geth obtained a shared sentience through the combined processing power of every Geth unit.

- In Sandworms of Dune (2007), the Face Dancers are revealed to have developed into a swarm intelligence represented by Khrone.

- In the video game Penumbra: Black Plague (2008), the Tuurngait is a hivemind that grows by infecting other organisms with a virus.

- Kill Decision (2012), a novel by Daniel Suarez, features autonomous drones programmed with the aggressive swarming intelligence of weaver ants.[58]


See also

- Cellular automaton

- Complex systems

- Differential evolution

- Evolutionary algorithm

- Evolutionary computation

- Global brain

- Harmony search

- Metaheuristic

- Microbial Intelligence

- Multi-agent system

- Myrmecology

- Promise theory

- Quorum sensing

- Reinforcement learning

- Rule 110

- Self-organization

- Self-organized criticality

- Stochastic optimization

- Stochastic search

- Swarm Development Group

- Swarming

- SwisTrack

- Symmetry breaking of escaping ants

- The Wisdom of Crowds

- Wisdom of the crowd

References

- ↑ Beni, G., Wang, J. Swarm Intelligence in Cellular Robotic Systems, Proceed. NATO Advanced Workshop on Robots and Biological Systems, Tuscany, Italy, June 26–30 (1989)

- ↑ Parsopoulos, K. E.; Vrahatis, M. N. (2002). "Recent Approaches to Global Optimization Problems Through Particle Swarm Optimization". Natural Computing 1 (2-3): 235–306. doi:10.1023/A:1016568309421.

- ↑ Particle Swarm Optimization by Maurice Clerc, ISTE, ISBN 1-905209-04-5, 2006.

- ↑ Ant Colony Optimization by Marco Dorigo and Thomas Stützle, MIT Press, 2004. ISBN 0-262-04219-3

- ↑ Seyedali Mirjalili (2015). "The Ant Lion Optimizer". Advances in Engineering Software 83: 80–98.

- ↑ Karaboga, Dervis (2010). "Artificial bee colony algorithm". Scholarpedia 5 (3): 6915. doi:10.4249/scholarpedia.6915.

- ↑ Niu, Ben (2012). "Bacterial colony optimization". Discrete Dynamics in Nature and Society 2012: Article ID 698057.

- ↑ Cohen, Inon et al. (1999). "Continuous and discrete models of cooperation in complex bacterial colonies". Fractals 7 (3): 235–247.

- ↑ Pham DT, Ghanbarzadeh A, Koc E, Otri S, Rahim S and Zaidi M. The Bees Algorithm. Technical Note, Manufacturing Engineering Centre, Cardiff University, UK, 2005.

- ↑ Pham, D.T., Castellani, M. (2009), The Bees Algorithm – Modelling Foraging Behaviour to Solve Continuous Optimisation Problems. Proc. ImechE, Part C, 223(12), 2919-2938.

- ↑ S. Mirjalili, S. M. Mirjalili, and A. Lewis, "Grey Wolf Optimizer," Advances in Engineering Software, vol. 69, pp. 46-61, 2014.

- ↑ X. S. Yang, A New Metaheuristic Bat-Inspired Algorithm, in: Nature Inspired Cooperative Strategies for Optimization (NISCO 2010) (Eds. J. R. Gonzalez et al.), Studies in Computational Intelligence, Springer Berlin, 284, Springer, 65-74 (2010).

- ↑ Mirjalili, S.; Mirjalili, S. M.; Hatamlou, A. (2015). "Multi-Verse Optimizer" (in press). doi:10.1007/s00521-015-1870-7.

- ↑ Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. (2009). "GSA: a gravitational search algorithm". Information Sciences 179 (13): 2232–2248. doi:10.1016/j.ins.2009.03.004.

- ↑ Nobahari, H.; Nikusokhan, M. "Non-dominated Sorting Gravitational Search Algorithm". International Conference on Swarm Intelligence.

- ↑ Altruism helps swarming robots fly better genevalunch.com, 4 May 2011.

- ↑ Waibel, M; Floreano, D; Keller, L (2011). "A quantitative test of Hamilton's rule for the evolution of altruism". PLoS Biology 9 (5): e1000615. doi:10.1371/journal.pbio.1000615.

- ↑ Krishnanand K.N. and D. Ghose (2005) "Detection of multiple source locations using a glowworm metaphor with applications to collective robotics". IEEE Swarm Intelligence Symposium, Pasadena, California, USA, pp. 84–91.

- ↑ Krishnanand, K.N.; Ghose, D. (2009). "Glowworm swarm optimization for simultaneous capture of multiple local optima of multimodal functions". Swarm Intelligence 3 (2): 87–124. doi:10.1007/s11721-008-0021-5.

- ↑ Krishnanand, K.N.; Ghose, D. (2008). "Theoretical foundations for rendezvous of glowworm-inspired agent swarms at multiple locations". Robotics and Autonomous Systems 56 (7): 549–569. doi:10.1016/j.robot.2007.11.003.

- ↑ Krishnanand, K.N.; Ghose, D. (2006). "Glowworm swarm based optimization algorithm for multimodal functions with collective robotics applications". Multi-agent and Grid Systems 2 (3): 209–222.

- ↑ P. Rabanal, I. Rodríguez, and F. Rubio (2007) "Using River Formation Dynamics to Design Heuristic Algorithms". Unconventional Computation, Springer, LNCS 4616, pp. 163–177.

- ↑ P. Rabanal, I. Rodríguez, and F. Rubio (2008) "Finding minimum spanning/distances trees by using river formation dynamics". Ant Colony Optimization and Swarm Intelligence, Springer, LNCS 5217, pp. 60–71.

- ↑ P. Rabanal, I. Rodríguez, and F. Rubio (2009) "Applying River Formation Dynamics to Solve NP-Complete Problems". Nature-Inspired Algorithms for Optimisation, Springer, SCI 193, pp. 333–368.

- ↑ P. Rabanal, I. Rodríguez, and F. Rubio (2013) "Testing restorable systems: formal definition and heuristic solution based on river formation dynamics". Formal Aspects of Computing, Springer, Volume 25, Number 5, pp. 743–768.

- ↑ G. Redlarski, A. Pałkowski, M. Dąbkowski (2013) "Using River Formation Dynamics Algorithm in Mobile Robot Navigation". Solid State Phenomena, Volume 198, pp. 138–143.

- ↑ S. Hameed-Amin, H.S. Al-Raweshidy, R. Sabbar-Abbas (2014) "Smart data packet ad hoc routing protocol". Computer Networks, Volume 62, pp. 162–181.

- ↑ Vicsek, T.; Czirok, A.; Ben-Jacob, E.; Cohen, I.; Shochet, O. (1995). "Novel type of phase transition in a system of self-driven particles". Physical Review Letters 75: 1226–1229. arXiv:cond-mat/0611743. Bibcode:1995PhRvL..75.1226V. doi:10.1103/PhysRevLett.75.1226. PMID 10060237.

- ↑ Reynolds, C. W. (1987). "Flocks, herds and schools: A distributed behavioral model". Computer Graphics 21 (4): 25–34. doi:10.1145/37401.37406. CiteSeerX: 10.1.1.103.7187.

- ↑ Czirók, A.; Vicsek, T. (2000). "Collective behavior of interacting self-propelled particles". Physica A 281: 17–29. arXiv:cond-mat/0611742. Bibcode:2000PhyA..281...17C. doi:10.1016/S0378-4371(00)00013-3.

- ↑ Buhl, J.; Sumpter, D.J.T.; Couzin, D.; Hale, J.J.; Despland, E.; Miller, E.R.; Simpson, S.J. et al. (2006). "From disorder to order in marching locusts". Science 312 (5778): 1402–1406. Bibcode:2006Sci...312.1402B. doi:10.1126/science.1125142. PMID 16741126.

- ↑ Toner, J.; Tu, Y.; Ramaswamy, S. (2005). "Hydrodynamics and phases of flocks". Annals of Physics 318: 170. Bibcode:2005AnPhy.318..170T. doi:10.1016/j.aop.2005.04.011.

- ↑ Bertin, E.; Droz, M.; Grégoire, G. (2009). "Hydrodynamic equations for self-propelled particles: microscopic derivation and stability analysis". J. Phys. A 42 (44): 445001. arXiv:0907.4688. Bibcode:2009JPhA...42R5001B. doi:10.1088/1751-8113/42/44/445001.

- ↑ Li, Y.X.; Lukeman, R.; Edelstein-Keshet, L. et al. (2007). "Minimal mechanisms for school formation in self-propelled particles". Physica D: Nonlinear Phenomena 237 (5): 699–720. Bibcode:2008PhyD..237..699L. doi:10.1016/j.physd.2007.10.009.

- ↑ Bishop, J.M., Stochastic Searching Networks, Proc. 1st IEE Int. Conf. on Artificial Neural Networks, pp. 329-331, London, UK, (1989).

- ↑ Nasuto, S.J. & Bishop, J.M., (2008), Stabilizing swarm intelligence search via positive feedback resource allocation, In: Krasnogor, N., Nicosia, G, Pavone, M., & Pelta, D. (eds), Nature Inspired Cooperative Strategies for Optimization, Studies in Computational Intelligence, vol 129, Springer, Berlin, Heidelberg, New York, pp. 115-123.

- ↑ Moglich, M.; Maschwitz, U.; Holldobler, B., Tandem Calling: A New Kind of Signal in Ant Communication, Science, Volume 186, Issue 4168, pp. 1046-1047

- ↑ Nasuto, S.J., Bishop, J.M. & Lauria, S., Time complexity analysis of the Stochastic Diffusion Search, Proc. Neural Computation '98, pp. 260-266, Vienna, Austria, (1998).

- ↑ Nasuto, S.J., & Bishop, J.M., (1999), Convergence of the Stochastic Diffusion Search, Parallel Algorithms, 14:2, pp: 89-107.

- ↑ Myatt, D.M., Bishop, J.M., Nasuto, S.J., (2004), Minimum stable convergence criteria for Stochastic Diffusion Search, Electronics Letters, 22:40, pp. 112-113.

- ↑ al-Rifaie, M.M., Bishop, J.M. & Blackwell, T., An investigation into the merger of stochastic diffusion search and particle swarm optimisation, Proc. 13th Conf. Genetic and Evolutionary Computation, (GECCO), pp.37-44, (2012).

- ↑ al-Rifaie, Mohammad Majid, John Mark Bishop, and Tim Blackwell. "Information sharing impact of stochastic diffusion search on differential evolution algorithm." Memetic Computing 4.4 (2012): 327-338.

- ↑ Lewis, M. Anthony; Bekey, George A. "The Behavioral Self-Organization of Nanorobots Using Local Rules". Proceedings of the 1992 IEEE/RSJ International Conference on Intelligent Robots and Systems.

- ↑ Identifying metastasis in bone scans with Stochastic Diffusion Search, al-Rifaie, M.M. & Aber, A., Proc. IEEE Information Technology in Medicine and Education, ITME 2012, pp. 519-523.

- ↑ al-Rifaie, Mohammad Majid, Ahmed Aber, and Ahmed Majid Oudah. "Utilising Stochastic Diffusion Search to identify metastasis in bone scans and microcalcifications on mammographs." In Bioinformatics and Biomedicine Workshops (BIBMW), 2012 IEEE International Conference on, pp. 280-287. IEEE, 2012.

- ↑ Martens, D.; Baesens, B.; Fawcett, T. (2011). "Editorial Survey: Swarm Intelligence for Data Mining". Machine Learning 82 (1): 1–42. doi:10.1007/s10994-010-5216-5.

- ↑ Whitaker, R.M., Hurley, S., An agent based approach to site selection for wireless networks. Proc. ACM Symposium on Applied Computing, pp. 574–577, (2002).

- ↑ "Planes, Trains and Ant Hills: Computer scientists simulate activity of ants to reduce airline delays". Science Daily. April 1, 2008. Retrieved December 1, 2010.

- ↑ Miller, Peter (2010). The Smart Swarm: How understanding flocks, schools, and colonies can make us better at communicating, decision making, and getting things done. New York: Avery. ISBN 978-1-58333-390-7.

- ↑ al-Rifaie, M.M., Bishop, J.M., & Caines, S., Creativity and Autonomy in Swarm Intelligence Systems, Cognitive Computation 4:3, pp. 320-331, (2012).

- ↑ Deleuze G, Guattari F, Massumi B. A thousand plateaus. Minneapolis: University of Minnesota Press; 2004.

- ↑ al-Rifaie, Mohammad Majid, and John Mark Bishop. "Swarmic sketches and attention mechanism". Evolutionary and Biologically Inspired Music, Sound, Art and Design. Springer Berlin Heidelberg, 2013. 85-96.

- ↑ al-Rifaie, Mohammad Majid, and John Mark Bishop. "Swarmic paintings and colour attention". Evolutionary and Biologically Inspired Music, Sound, Art and Design. Springer Berlin Heidelberg, 2013. 97-108.

- ↑ al-Rifaie, Mohammad Majid, Mark JM Bishop, and Ahmed Aber. "Creative or Not? Birds and Ants Draw with Muscle." Proceedings of AISB'11 Computing and Philosophy (2011): 23-30.

- ↑ al-Rifaie, Mohammad Majid, Ahmed Aber and John Mark Bishop. "Cooperation of Nature and Physiologically Inspired Mechanisms in Visualisation." Biologically-Inspired Computing for the Arts: Scientific Data through Graphics. IGI Global, 2012. 31-58. Web. 22 Aug. 2013. doi:10.4018/978-1-4666-0942-6.ch003

- ↑ al-Rifaie MM, Bishop M (2013) Swarm intelligence and weak artificial creativity. In: The Association for the Advancement of Artificial Intelligence (AAAI) 2013: Spring Symposium, Stanford University, Palo Alto, California, U.S.A., pp 14–19

- ↑ Flocks, Herds, and Schools

- ↑ Kelly, James Floyd. "Book Review and Author Interview: Kill Decision by Daniel Suarez". Wired. Condé Nast. Retrieved 11 January 2015.

Further reading

- Bernstein, Jeremy. "Project Swarm". Report on technology inspired by swarms in nature.

- Bonabeau, Eric; Dorigo, Marco; Theraulaz, Guy (1999). Swarm Intelligence: From Natural to Artificial Systems. ISBN 0-19-513159-2.

- Engelbrecht, Andries. Fundamentals of Computational Swarm Intelligence. Wiley & Sons. ISBN 0-470-09191-6.

- Fisher, L. (2009). The Perfect Swarm: The Science of Complexity in Everyday Life. Basic Books.

- Fister I, XS Yang, I Fister, J Brest and D Fister (2013) "A Brief Review of Nature-Inspired Algorithms for Optimization" Elektrotehniski Vestnik, 80 (3): 1–7.

- Kennedy, James; Eberhart, Russell C. Swarm Intelligence. ISBN 1-55860-595-9.

- Miller, Peter (July 2007), "Swarm Theory", National Geographic Magazine

- Resnick, Mitchel. Turtles, Termites, and Traffic Jams: Explorations in Massively Parallel Microworlds. ISBN 0-262-18162-2.

- Ridge, E.; Curry, E. (2007). "A roadmap of nature-inspired systems research and development". Multiagent and Grid Systems 3 (1): 3–8. CiteSeerX: 10.1.1.67.1030.

- Ridge, E.; Kudenko, D.; Kazakov, D.; Curry, E. (2005). "Moving Nature-Inspired Algorithms to Parallel, Asynchronous and Decentralised Environments". Self-Organization and Autonomic Informatics (I) 135: 35–49. CiteSeerX: 10.1.1.64.3403.

- Swarm Intelligence (journal). Chief Editor: Marco Dorigo. Springer New York. ISSN 1935-3812 (Print) 1935-3820 (Online)

- Waldner, Jean-Baptiste (2007). Nanocomputers and Swarm Intelligence. ISTE. ISBN 978-1-84704-002-2.

- Yang, Xin-She (2011). "Metaheuristic Optimization". Scholarpedia 6 (8): 11472. Bibcode:2011SchpJ...611472Y. doi:10.4249/scholarpedia.11472.

- Zimmer, Carl (November 13, 2007). "From Ants to People: an Instinct to Swarm". The New York Times.
