Sum rule in differentiation

In calculus, the sum rule in differentiation is a method of finding the derivative of a function that is the sum of two other functions for which derivatives exist. This is a part of the linearity of differentiation. The sum rule in integration follows from it. The rule itself is a direct consequence of differentiation from first principles.

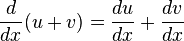

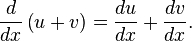

The sum rule tells us that for two functions u and v:

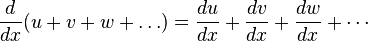

This rule also applies to subtraction and to additions and subtractions of more than two functions:

$$\frac{d}{dx}(u + v + w + \cdots) = \frac{du}{dx} + \frac{dv}{dx} + \frac{dw}{dx} + \cdots$$
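As a rough numerical illustration of the statement above, the following sketch compares the derivative of a sum with the sum of the derivatives. The functions `u` and `v`, the point `x0`, and the helper `central_difference` are assumptions chosen for this example only; the finite-difference approximation checks the rule, it does not prove it.

```python
import math


def central_difference(func, x, step=1e-5):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + step) - func(x - step)) / (2 * step)


# Hypothetical example functions, chosen only for illustration.
def u(x):
    return x ** 2


def v(x):
    return math.sin(x)


def u_plus_v(x):
    return u(x) + v(x)


x0 = 1.3
derivative_of_sum = central_difference(u_plus_v, x0)
sum_of_derivatives = central_difference(u, x0) + central_difference(v, x0)
exact = 2 * x0 + math.cos(x0)  # du/dx + dv/dx worked out by hand

print(derivative_of_sum, sum_of_derivatives, exact)
```

All three printed values should agree up to the error of the finite-difference approximation.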

Proof

Simple Proof

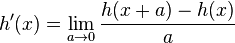

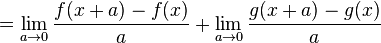

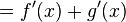

Let h(x) = f(x) + g(x), and suppose that f and g are each differentiable at x. We want to prove that h is differentiable at x and that its derivative h'(x) is given by f'(x)+g'(x).

![= \lim_{a\to 0} \frac{[f(x+a)+g(x+a)]-[f(x)+g(x)]}{a}](../I/m/362dd29ec273060d521913dea6b600d2.png)

.

.
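The key algebraic step of this proof, splitting the difference quotient of h into the difference quotients of f and g, can be checked numerically. This is a minimal sketch; the particular `f`, `g`, the point `x0`, and the step sizes are assumptions made for illustration.

```python
def difference_quotient(func, x, a):
    """Return (func(x + a) - func(x)) / a, the quotient whose limit is func'(x)."""
    return (func(x + a) - func(x)) / a


# Hypothetical example functions, chosen only for illustration.
def f(x):
    return x ** 3


def g(x):
    return 2 * x + 1


def h(x):
    return f(x) + g(x)


x0 = 2.0
rows = []
for a in (1e-1, 1e-2, 1e-3, 1e-4):
    quotient_of_h = difference_quotient(h, x0, a)
    sum_of_quotients = difference_quotient(f, x0, a) + difference_quotient(g, x0, a)
    rows.append((a, quotient_of_h, sum_of_quotients))
    print(a, quotient_of_h, sum_of_quotients)
```

For every step size a the two quotients agree up to rounding, and both approach f'(2) + g'(2) = 12 + 2 = 14 as a shrinks.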

More Complicated Proof

Let y be a function given by the sum of two functions u and v, such that:

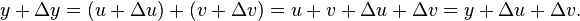

Now let y, u and v be increased by small increases Δy, Δu and Δv respectively. Hence:

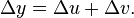

So:

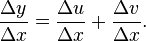

Now divide throughout by Δx:

Let Δx tend to 0:

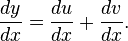

Now recall that y = u + v, giving the sum rule in differentiation:

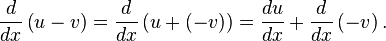

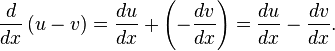

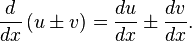

The rule can be extended to subtraction, as follows:

Now use the special case of the constant factor rule in differentiation with k=−1 to obtain:

Therefore, the sum rule can be extended so it "accepts" addition and subtraction as follows:

The sum rule in differentiation can be used as part of the derivation for both the sum rule in integration and linearity of differentiation.
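As a short worked example of the extension to subtraction, take u = x³ and v = sin x (functions chosen here purely for illustration). Writing the difference as a sum and applying the constant factor rule with k = −1 gives:

$$
\begin{aligned}
\frac{d}{dx}\left(x^3 - \sin x\right) &= \frac{d}{dx}\left(x^3\right) + \frac{d}{dx}\left(-\sin x\right) \\
&= 3x^2 + (-1)\cos x \\
&= 3x^2 - \cos x.
\end{aligned}
$$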

Generalization to finite sums

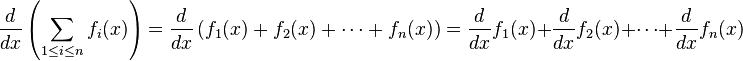

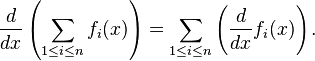

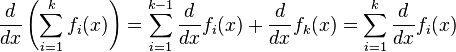

Consider a set of functions f1, f2,..., fn. Then

so

In other words, the derivative of any finite sum of functions is the sum of the derivatives of those functions.

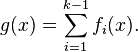

This follows easily by induction; we have just proven this to be true for n = 2. Assume it is true for all n < k, then define

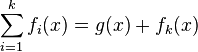

Then

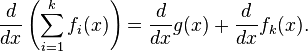

and it follows from the proof above that

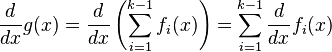

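By the inductive hypothesis,

$$\frac{d}{dx} g(x) = \frac{d}{dx}\left(\sum_{i=1}^{k-1} f_i(x)\right) = \sum_{i=1}^{k-1} \frac{d}{dx} f_i(x)$$

so

$$\frac{d}{dx}\left(\sum_{i=1}^{k} f_i(x)\right) = \sum_{i=1}^{k-1} \frac{d}{dx} f_i(x) + \frac{d}{dx} f_k(x) = \sum_{i=1}^{k} \frac{d}{dx} f_i(x)$$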

which ends the proof of the sum rule of differentiation.
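The finite-sum version can be illustrated the same way for more than two terms. In this sketch the list `terms`, the point `x0`, and the helper `central_difference` are assumptions made for the example; each entry pairs a function with its derivative worked out by hand.

```python
import math


def central_difference(func, x, step=1e-5):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + step) - func(x - step)) / (2 * step)


# Hypothetical terms f_i, each paired with its hand-computed derivative.
terms = [
    (lambda x: x ** 3, lambda x: 3 * x ** 2),
    (math.sin, math.cos),
    (math.exp, math.exp),
    (lambda x: 5 * x, lambda x: 5.0),
]


def total(x):
    """The finite sum f_1(x) + ... + f_n(x)."""
    return sum(f(x) for f, _ in terms)


x0 = 0.7
derivative_of_total = central_difference(total, x0)
sum_of_term_derivatives = sum(df(x0) for _, df in terms)

print(derivative_of_total, sum_of_term_derivatives)
```

The numerically differentiated total should match the sum of the individual derivatives up to the approximation error of the difference quotient.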

Note that this result does not automatically extend to infinite sums. Intuitively, things can go wrong because more than one limit is involved (one for the sum and one in the definition of the derivative), and the two limits cannot always be interchanged. Conditions based on uniform convergence deal with these sorts of issues.
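One standard way to see the problem is a sequence of partial sums that converges while the derivatives of those partial sums do not. The sketch below assumes partial sums s_n(x) = sin(n²x)/n, that is, a series with terms f_1 = s_1 and f_n = s_n − s_{n−1}; this example is not taken from the text above and is only an illustration.

```python
import math


def partial_sum(n, x):
    """s_n(x) = sin(n^2 x) / n, bounded by 1/n, so s_n tends to 0 for every x."""
    return math.sin(n * n * x) / n


def partial_sum_derivative(n, x):
    """s_n'(x) = n * cos(n^2 x), the term-by-term derivative of the partial sum."""
    return n * math.cos(n * n * x)


for n in (1, 10, 100, 1000):
    # The partial sums shrink towards 0 while their slopes at x = 0 grow without bound.
    print(n, abs(partial_sum(n, 0.3)), partial_sum_derivative(n, 0.0))
```

The limit function is identically 0, whose derivative is 0, yet the derivatives of the partial sums at x = 0 equal n and diverge, so differentiating term by term gives no meaningful answer here.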
