Softmax function

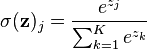

In mathematics, in particular probability theory and related fields, the softmax function, or normalized exponential,[1]:198 is a generalization of the logistic function that "squashes" a K-dimensional vector $\mathbf{z}$ of arbitrary real values to a K-dimensional vector $\sigma(\mathbf{z})$ of real values in the range (0, 1). The function is given by

$$\sigma(\mathbf{z})_j = \frac{e^{z_j}}{\sum_{k=1}^{K} e^{z_k}}$$

for j = 1, ..., K.
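The definition can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `softmax` and the sample input are chosen here for the example, and subtracting the maximum before exponentiating is a common numerical safeguard that does not change the result.

```python
import math

def softmax(z):
    """Return the softmax of a sequence of real numbers as a list."""
    # Subtracting the maximum leaves the result unchanged (the common
    # factor cancels in the ratio) but keeps math.exp from overflowing.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([1.0, 2.0, 3.0])   # approximately [0.090, 0.245, 0.665]
print(probs)
print(sum(probs))                  # the components sum to one (up to rounding)
```

Larger inputs receive larger outputs, and every output lies strictly between zero and one.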

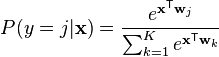

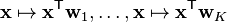

Since the components of the vector $\sigma(\mathbf{z})$ sum to one and are all strictly between zero and one, they represent a categorical probability distribution. For this reason, the softmax function is used in various probabilistic multiclass classification methods including multinomial logistic regression,[1]:206–209 multiclass linear discriminant analysis, naive Bayes classifiers and artificial neural networks.[2] Specifically, in multinomial logistic regression and linear discriminant analysis, the input to the function is the result of K distinct linear functions, and the predicted probability for the j-th class given a sample vector $\mathbf{x}$ is:

$$P(y = j \mid \mathbf{x}) = \frac{e^{\mathbf{x}^\mathsf{T}\mathbf{w}_j}}{\sum_{k=1}^{K} e^{\mathbf{x}^\mathsf{T}\mathbf{w}_k}}$$

where $\mathbf{w}_j$ is the weight vector of the j-th linear function. This can be seen as the composition of K linear functions $\mathbf{x} \mapsto \mathbf{x}^\mathsf{T}\mathbf{w}_1, \ldots, \mathbf{x} \mapsto \mathbf{x}^\mathsf{T}\mathbf{w}_K$ and the softmax function.
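The composition of linear functions and softmax can be illustrated as follows. This is a sketch under stated assumptions: the helper name `predict_proba`, the weight vectors, and the sample are all hypothetical values made up for the example, and no bias term is included.

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(x, weights):
    """Class probabilities for sample x, given one weight vector per class."""
    # Step 1: K linear functions, one dot product x . w_j per class.
    scores = [sum(xi * wi for xi, wi in zip(x, w)) for w in weights]
    # Step 2: softmax turns the K scores into K probabilities.
    return softmax(scores)

# Hypothetical model with K = 3 classes and 2 features.
weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
x = [2.0, 0.5]
probs = predict_proba(x, weights)
predicted_class = probs.index(max(probs))
print(probs, predicted_class)
```

The class whose linear score is largest always receives the largest probability, because softmax preserves the ordering of its inputs.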

Artificial neural networks

In neural network simulations, the softmax function is often implemented at the final layer of a network used for classification. Such networks are then trained under a log loss (or cross-entropy) regime, giving a non-linear variant of multinomial logistic regression.
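As a rough illustration of the log loss mentioned above, the following sketch computes the cross-entropy of a softmax output for a single sample. The function names and the example scores are assumptions made for this illustration; working with the logarithm of the softmax directly (the "log-sum-exp" form) is a common way to avoid taking the log of a very small probability.

```python
import math

def log_softmax(z):
    """Logarithm of each softmax component, computed stably."""
    m = max(z)
    log_total = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - log_total for v in z]

def cross_entropy(scores, target):
    """Log loss for one sample whose true class index is `target`."""
    return -log_softmax(scores)[target]

# Hypothetical final-layer outputs for a 3-class network.
scores = [4.0, 0.0, 0.0]
loss_if_class_0 = cross_entropy(scores, target=0)  # small: class 0 is favoured
loss_if_class_1 = cross_entropy(scores, target=1)  # large: class 1 is unlikely
print(loss_if_class_0, loss_if_class_1)
```

The loss is low when the network assigns high probability to the true class and grows as that probability shrinks.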

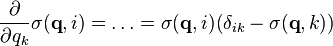

Since the function maps a vector and a specific index i to a real value, the derivative needs to take the index into account:

$$\frac{\partial}{\partial z_k}\sigma(\mathbf{z})_i = \sigma(\mathbf{z})_i\,(\delta_{ik} - \sigma(\mathbf{z})_k)$$

Here, the Kronecker delta $\delta_{ik}$ is used for simplicity (cf. the derivative of a sigmoid function, being expressed via the function itself).
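The derivative can be checked numerically. The sketch below builds the full matrix of partial derivatives from the Kronecker-delta expression, softmax_i * (delta_ik - softmax_k), and compares it with central finite differences. All function names, the step size `h`, and the test vector are choices made for this example.

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(z):
    """J[i][k] = d softmax(z)_i / d z_k = s_i * (delta_ik - s_k)."""
    s = softmax(z)
    n = len(s)
    return [[s[i] * ((1.0 if i == k else 0.0) - s[k]) for k in range(n)]
            for i in range(n)]

def numerical_jacobian(z, h=1e-6):
    """Central finite-difference approximation of the same matrix."""
    n = len(z)
    jac = [[0.0] * n for _ in range(n)]
    for k in range(n):
        z_plus, z_minus = list(z), list(z)
        z_plus[k] += h
        z_minus[k] -= h
        s_plus, s_minus = softmax(z_plus), softmax(z_minus)
        for i in range(n):
            jac[i][k] = (s_plus[i] - s_minus[i]) / (2.0 * h)
    return jac

z = [0.5, -1.0, 2.0]
J = softmax_jacobian(z)
J_num = numerical_jacobian(z)
max_err = max(abs(J[i][k] - J_num[i][k]) for i in range(3) for k in range(3))
print(max_err)  # should be tiny if the analytic formula is right
```

Each column of the matrix sums to zero, which reflects the fact that the softmax outputs always sum to one.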

See Multinomial logit for a probability model which uses the softmax activation function.

Reinforcement learning

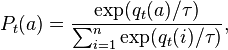

In the field of reinforcement learning, a softmax function can be used to convert values into action probabilities. The function commonly used is:[3]

$$P_t(a) = \frac{\exp(q_t(a)/\tau)}{\sum_{i=1}^{n} \exp(q_t(i)/\tau)}$$

where the sum runs over the n available actions, the action value $q_t(a)$ corresponds to the expected reward of following action a, and $\tau$ is called a temperature parameter (in allusion to chemical kinetics). For high temperatures ($\tau \to \infty$), all actions have nearly the same probability, and the lower the temperature, the more expected rewards affect the probability. For a low temperature ($\tau \to 0^+$), the probability of the action with the highest expected reward tends to 1.
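The effect of the temperature can be seen in a small sketch. The function names, the action values, and the two temperatures below are hypothetical and chosen only to show the two limiting behaviours; action values are divided by the temperature before the usual softmax is applied.

```python
import math
import random

def action_probabilities(q_values, temperature):
    """Softmax over action values scaled by a positive temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [q / temperature for q in q_values]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def select_action(q_values, temperature, rng=random):
    """Sample an action index according to the softmax probabilities."""
    probs = action_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]

q = [1.0, 2.0, 1.5]  # hypothetical expected rewards for three actions
hot = action_probabilities(q, temperature=100.0)   # nearly uniform
cold = action_probabilities(q, temperature=0.01)   # nearly greedy
print(hot)
print(cold)
print(select_action(q, temperature=0.5))
```

At the high temperature the three probabilities are all close to 1/3, while at the low temperature almost all of the probability mass sits on the action with the highest value.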

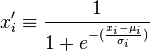

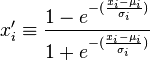

Softmax normalization

Sigmoidal or softmax normalization is a way of reducing the influence of extreme values or outliers in the data without removing them from the dataset. It is useful when the data contain outliers that we wish to keep in the dataset while still preserving the significance of data within a standard deviation of the mean. The data are nonlinearly transformed using a sigmoidal function, either the logistic sigmoid function or the hyperbolic tangent function:[4]

$$x_i' = \frac{1}{1 + e^{-(x_i - \mu_i)/\sigma_i}}$$

or

$$x_i' = \frac{1 - e^{-(x_i - \mu_i)/\sigma_i}}{1 + e^{-(x_i - \mu_i)/\sigma_i}}$$

where $\mu_i$ and $\sigma_i$ are the mean and standard deviation of the variable $x_i$. The logistic form puts the normalized data in the range 0 to 1; the hyperbolic tangent form puts them in the range −1 to 1. The transformation is almost linear near the mean and has smooth nonlinearity at both extremes, ensuring that all data points are within a limited range. This maintains the resolution of most values within a standard deviation of the mean.
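A minimal sketch of both variants follows, assuming each value is first standardized as (x - mean) / standard deviation before the sigmoidal function is applied. The function names and the sample data are made up for illustration, and the use of the population standard deviation (`statistics.pstdev`) is an assumption; the text does not say which estimator is intended.

```python
import math
import statistics

def _standardize(data):
    mu = statistics.mean(data)
    sigma = statistics.pstdev(data)  # assumption: population std deviation
    if sigma == 0:
        raise ValueError("data must not be constant")
    return [(x - mu) / sigma for x in data]

def logistic_normalize(data):
    """Logistic variant: results lie strictly between 0 and 1."""
    return [1.0 / (1.0 + math.exp(-u)) for u in _standardize(data)]

def tanh_normalize(data):
    """Hyperbolic tangent variant: results lie strictly between -1 and 1."""
    # (1 - exp(-u)) / (1 + exp(-u)) is identical to tanh(u / 2).
    return [math.tanh(u / 2.0) for u in _standardize(data)]

data = [10.0, 11.0, 12.0, 13.0, 14.0, 100.0]  # 100.0 is the outlier
print(logistic_normalize(data))
print(tanh_normalize(data))
```

The outlier stays in the dataset and keeps its rank, but it is mapped to a bounded value instead of dominating the scale.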

References

- [1] Bishop, Christopher M. (2006). Pattern Recognition and Machine Learning. Springer.

- [2] ai-faq: What is a softmax activation function?

- [3] Sutton, R. S. and Barto, A. G. (1998). Reinforcement Learning: An Introduction. The MIT Press, Cambridge, MA. Softmax Action Selection.

- [4] Artificial Neural Networks: An Introduction. 2005. pp. 16–17.