Schnirelmann density

In additive number theory, the Schnirelmann density of a sequence of numbers is a way to measure how "dense" the sequence is. It is named after the Russian mathematician L.G. Schnirelmann, who was the first to study it.[1][2]

Definition

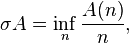

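The Schnirelmann density of a set of natural numbers $A \subseteq \mathbb{N} = \{1, 2, 3, \ldots\}$ is defined as

$$\sigma A = \inf_{n \ge 1} \frac{A(n)}{n},$$

where $A(n)$ denotes the number of elements of $A$ not exceeding $n$ and $\inf$ denotes the infimum.[3]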

The Schnirelmann density is well-defined even if the limit of A(n)/n as n → ∞ fails to exist (see asymptotic density).
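Because $\sigma A$ is an infimum over all $n$, a computer can only ever inspect finitely many values of $A(n)/n$. The following Python sketch computes $\min_{1 \le n \le L} A(n)/n$ exactly with fractions; the helper name `prefix_density` and the cut-off `limit` (our $L$) are illustrative choices, not standard notation, and the result is only an upper bound for $\sigma A$.

```python
from fractions import Fraction

def prefix_density(members, limit):
    """Return min(A(n)/n for 1 <= n <= limit), where A(n) counts the
    elements of `members` that are <= n.

    Only the first `limit` values of n are examined, so the result is an
    upper bound for the Schnirelmann density, which is an infimum over all n.
    """
    count = 0             # running value of A(n)
    best = Fraction(1)    # A(n)/n can never exceed 1
    for n in range(1, limit + 1):
        if n in members:
            count += 1
        best = min(best, Fraction(count, n))
    return best

# A = {1} together with all multiples of 3 (true Schnirelmann density 1/3).
A = {1} | set(range(3, 1001, 3))
print(prefix_density(A, 1000))  # 333/998, attained at n = 998
```

For this example the ratios $A(n)/n$ at $n = 3j + 2$ equal $(j+1)/(3j+2)$, which decrease towards $1/3$ without ever reaching it; this is exactly why the definition uses an infimum rather than a minimum.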

Properties

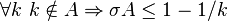

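By definition, $0 \le A(n) \le n$ and $n\,\sigma A \le A(n)$ for all $n$, and therefore $0 \le \sigma A \le 1$, and $\sigma A = 1$ if and only if $A = \mathbb{N}$. Furthermore,

$$\sigma A = 0 \Rightarrow \forall \varepsilon > 0\ \exists n:\ A(n) < \varepsilon n.$$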

Sensitivity

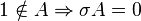

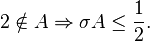

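The Schnirelmann density is sensitive to the first values of a set:

$$\forall k:\ k \notin A \Rightarrow \sigma A \le 1 - \frac{1}{k},$$

since in that case $A(k) \le k - 1$. In particular,

$$1 \notin A \Rightarrow \sigma A = 0$$

and

$$2 \notin A \Rightarrow \sigma A \le \frac{1}{2}.$$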

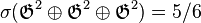

Consequently, the Schnirelmann densities of the even numbers and the odd numbers, which one might expect to agree, are 0 and 1/2 respectively. Schnirelmann and Yuri Linnik exploited this sensitivity as we shall see.
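The even/odd example and the general bound for a missing value $k$ can be checked numerically on the first 1000 integers. This sketch uses a finite-prefix helper (the hypothetical `prefix_density`, which returns $\min_{n \le L} A(n)/n$ and hence only an upper bound for $\sigma A$) and a second illustrative helper that tests $\sigma A \le 1 - 1/k$ for every missing $k \le L$.

```python
from fractions import Fraction

def prefix_density(members, limit):
    # min over 1 <= n <= limit of A(n)/n: an upper bound for sigma(A)
    count, best = 0, Fraction(1)
    for n in range(1, limit + 1):
        if n in members:
            count += 1
        best = min(best, Fraction(count, n))
    return best

LIMIT = 1000
evens = set(range(2, LIMIT + 1, 2))
odds = set(range(1, LIMIT + 1, 2))
print(prefix_density(evens, LIMIT))  # 0: already A(1)/1 = 0 because 1 is missing
print(prefix_density(odds, LIMIT))   # 1/2: reached at n = 2 and never undercut

def missing_value_bound_holds(members, limit):
    """Check d <= 1 - 1/k for every k <= limit missing from A, where d is the
    prefix density; this holds because d <= A(k)/k <= (k - 1)/k."""
    d = prefix_density(members, limit)
    return all(d <= 1 - Fraction(1, k)
               for k in range(1, limit + 1) if k not in members)
```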

Schnirelmann's theorems

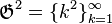

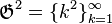

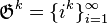

If we set  , then Lagrange's four-square theorem can be restated as

, then Lagrange's four-square theorem can be restated as  . (Here the symbol

. (Here the symbol  denotes the sumset of

denotes the sumset of  and

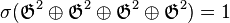

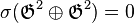

and  .) It is clear that

.) It is clear that  . In fact, we still have

. In fact, we still have  , and one might ask at what point the sumset attains Schnirelmann density 1 and how does it increase. It actually is the case that

, and one might ask at what point the sumset attains Schnirelmann density 1 and how does it increase. It actually is the case that  and one sees that sumsetting

and one sees that sumsetting  once again yields a more populous set, namely all of

once again yields a more populous set, namely all of  . Schnirelmann further succeeded in developing these ideas into the following theorems, aiming towards Additive Number Theory, and proving them to be a novel resource (if not greatly powerful) to attack important problems, such as Waring's problem and Goldbach's conjecture.

. Schnirelmann further succeeded in developing these ideas into the following theorems, aiming towards Additive Number Theory, and proving them to be a novel resource (if not greatly powerful) to attack important problems, such as Waring's problem and Goldbach's conjecture.
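A quick numerical look at the iterated sumsets of squares, offered as a hedged illustration: the helper `sums_of_at_most_h_squares` is our own, and the minima of $A(n)/n$ over $1 \le n \le 1000$ that it feeds into are only upper bounds for the true Schnirelmann densities, so this suggests rather than proves the statements about $\sigma$.

```python
from fractions import Fraction
from math import isqrt

def prefix_density(members, limit):
    # min over 1 <= n <= limit of A(n)/n: an upper bound for sigma(A)
    count, best = 0, Fraction(1)
    for n in range(1, limit + 1):
        if n in members:
            count += 1
        best = min(best, Fraction(count, n))
    return best

def sums_of_at_most_h_squares(h, limit):
    """The h-fold sumset of G^2 = {1, 4, 9, ...} (0 allowed as a summand),
    restricted to the positive integers <= limit."""
    squares = [i * i for i in range(1, isqrt(limit) + 1)]
    reachable = {0}                      # sums of at most 0 squares
    for _ in range(h):
        reachable |= {s + q for s in reachable for q in squares if s + q <= limit}
    reachable.discard(0)
    return reachable

LIMIT = 1000
for h in range(1, 5):
    print(h, float(prefix_density(sums_of_at_most_h_squares(h, LIMIT), LIMIT)))
```

With three squares the prefix minimum stays between $5/6$ and $6/7$ (the value $6/7$ comes from $n = 7$, the first integer that is not a sum of three squares), and with four squares every integer up to the cut-off is reached.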

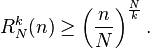

Theorem. Let

and

be subsets of

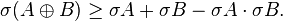

. Then

Note that  . Inductively, we have the following generalization.

. Inductively, we have the following generalization.

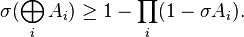

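Corollary. Let $A_i \subseteq \mathbb{N}$, $i = 1, \ldots, n$, be a finite family of subsets of $\mathbb{N}$. Then

$$\sigma\left(\bigoplus_{i=1}^{n} A_i\right) \ge 1 - \prod_{i=1}^{n} (1 - \sigma A_i).$$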
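The corollary can be spot-checked on random finite sets. A minimal sketch, assuming each $\sigma A_i$ is replaced by its prefix value $\min_{n \le L} A_i(n)/n$: Schnirelmann's counting argument for two sets (fill each gap after an element $a \in A$ with the numbers $a + b$, $b \in B$) only uses $A(m) \ge \sigma A \cdot m$ and $B(m) \ge \sigma B \cdot m$ for $m \le n$, so the inequality also holds for these prefix values and a failed check would point to a bug. All function names are illustrative.

```python
import random
from fractions import Fraction
from functools import reduce

def prefix_density(members, limit):
    # min over 1 <= n <= limit of A(n)/n: an upper bound for sigma(A)
    count, best = 0, Fraction(1)
    for n in range(1, limit + 1):
        if n in members:
            count += 1
        best = min(best, Fraction(count, n))
    return best

def oplus(a, b, limit):
    """A ⊕ B cut off at limit: x + y with x in A ∪ {0}, y in B ∪ {0}, 0 < x + y <= limit."""
    a0, b0 = a | {0}, b | {0}
    return {x + y for x in a0 for y in b0 if 0 < x + y <= limit}

def corollary_holds(family, limit):
    """Compare the prefix density of A_1 ⊕ ... ⊕ A_n with 1 - prod(1 - d(A_i))."""
    total = reduce(lambda s, t: oplus(s, t, limit), family)
    product = Fraction(1)
    for s in family:
        product *= 1 - prefix_density(s, limit)
    return prefix_density(total, limit) >= 1 - product

def random_family(rng, size, limit, p=0.3):
    # 1 is always included so that every set has positive prefix density
    return [{n for n in range(1, limit + 1) if n == 1 or rng.random() < p}
            for _ in range(size)]

rng = random.Random(0)
print(all(corollary_holds(random_family(rng, rng.randint(2, 4), 200), 200)
          for _ in range(30)))
```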

The theorem provides first insights into how sumsets accumulate. It seems unfortunate that its conclusion stops short of showing that $\sigma$ is superadditive, that is, that $\sigma(A \oplus B) \ge \sigma A + \sigma B$. Yet Schnirelmann provided the following results, which sufficed for most of his purposes.

Theorem. Let $A$ and $B$ be subsets of $\mathbb{N}$. If $\sigma A + \sigma B \ge 1$, then

$$\sigma(A \oplus B) = 1.$$
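A small check of this theorem on explicit sets, under a finite-prefix assumption: each density is replaced by $\min_{n \le L} A(n)/n$, and the pigeonhole argument recorded in the docstring then works for every $n \le L$ separately. The helper names are hypothetical.

```python
from fractions import Fraction

def prefix_density(members, limit):
    # min over 1 <= n <= limit of A(n)/n: an upper bound for sigma(A)
    count, best = 0, Fraction(1)
    for n in range(1, limit + 1):
        if n in members:
            count += 1
        best = min(best, Fraction(count, n))
    return best

def oplus(a, b, limit):
    # A ⊕ B cut off at limit: x + y with x in A ∪ {0}, y in B ∪ {0}, 0 < x + y <= limit
    a0, b0 = a | {0}, b | {0}
    return {x + y for x in a0 for y in b0 if 0 < x + y <= limit}

def sumset_is_full(a, b, limit):
    """Return None if d(A) + d(B) < 1 (hypothesis fails), otherwise whether
    A ⊕ B contains every integer 1..limit. Pigeonhole reason: if n were missing,
    then n is in neither A nor B, so A(n-1) + B(n-1) = A(n) + B(n) >= n, and the
    sets {a <= n-1} and {n - b : b <= n-1} inside 1..n-1 would have to overlap."""
    if prefix_density(a, limit) + prefix_density(b, limit) < 1:
        return None
    return oplus(a, b, limit) == set(range(1, limit + 1))

LIMIT = 300
thirds_a = {n for n in range(1, LIMIT + 1) if n % 3 != 0}   # density 2/3
thirds_b = {n for n in range(1, LIMIT + 1) if n % 3 == 1}   # density 1/3
print(sumset_is_full(thirds_a, thirds_b, LIMIT))            # True
```

The example sits exactly on the boundary $\sigma A + \sigma B = 1$; the multiples of 3, which are missing from both sets, are recovered as $(3m - 1) + 1$.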

Theorem. (Schnirelmann) Let $A \subseteq \mathbb{N}$. If $\sigma A > 0$, then there exists $k$ such that

$$\bigoplus_{i=1}^{k} A = \mathbb{N}.$$
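Combining the corollary with the theorem for $\sigma A + \sigma B \ge 1$ gives an explicit, if crude, number of summands: pick the least $j$ with $(1 - \sigma A)^j \le 1/2$, so that the $j$-fold sumset of $A$ has density at least $1/2$; adding that sumset to itself then yields density 1, which forces all of $\mathbb{N}$. The sketch below (the name `summands_bound` is ours) computes $2j$ with exact fractions; it is one way to read the theorem, not a quotation of Schnirelmann's proof.

```python
from fractions import Fraction

def summands_bound(alpha):
    """Hypothetical helper: an upper bound on the least k with kA = N,
    given only sigma(A) = alpha > 0, via
      (1) the corollary: sigma(jA) >= 1 - (1 - alpha)**j,
      (2) the least j with (1 - alpha)**j <= 1/2, so that sigma(jA) >= 1/2,
      (3) the theorem for sigma A + sigma B >= 1 applied to jA ⊕ jA,
          giving sigma(2jA) = 1 and hence 2jA = N.
    """
    alpha = Fraction(alpha)
    if not 0 < alpha <= 1:
        raise ValueError("alpha must satisfy 0 < alpha <= 1")
    j = 1
    while (1 - alpha) ** j > Fraction(1, 2):
        j += 1
    return 2 * j

print(summands_bound(Fraction(1, 2)))   # 2
print(summands_bound(Fraction(1, 10)))  # 14
```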

Additive bases

A subset $A \subseteq \mathbb{N}$ with the property that $A \oplus A \oplus \cdots \oplus A = \mathbb{N}$ for some finite number of summands is called an additive basis, and the least number of summands required is called the degree (sometimes order) of the basis. Thus, the last theorem states that any set with positive Schnirelmann density is an additive basis. In this terminology, the set of squares $\mathfrak{G}^2 = \{k^2\}_{k=1}^\infty$ is an additive basis of degree 4. (About an open problem for additive bases, see Erdős–Turán conjecture on additive bases.)
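The degree of a basis can be probed by brute force. The sketch below (hypothetical helper `prefix_degree`) finds the least $h$ for which $h$ copies of $A$, with 0 allowed as a summand, cover $1, \ldots, L$; since covering all of $\mathbb{N}$ requires covering this prefix, the result is only a lower bound for the degree.

```python
from math import isqrt

def prefix_degree(members, limit, max_h=50):
    """Least h such that h copies of A (0 allowed as a summand) cover 1..limit,
    or None if max_h copies are not enough. Covering 1..limit is necessary
    for hA = N, so the result is a lower bound for the degree of A."""
    base = sorted(m for m in members if 1 <= m <= limit)
    target = set(range(1, limit + 1))
    reachable = {0}                      # sums of at most h elements of A
    for h in range(1, max_h + 1):
        reachable |= {s + m for s in reachable for m in base if s + m <= limit}
        if target <= reachable:
            return h
    return None

squares = {i * i for i in range(1, isqrt(1000) + 1)}
odds = set(range(1, 1001, 2))
print(prefix_degree(squares, 1000))  # 4
print(prefix_degree(odds, 1000))     # 2
```

For the squares the lower bound is sharp: 7 is not a sum of three squares, and Lagrange's theorem supplies the matching upper bound.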

Mann's theorem

Historically the theorems above were pointers to the following result, at one time known as the $\alpha + \beta$ hypothesis. It was used by Edmund Landau and was finally proved by Henry Mann in 1942.

Theorem. (Mann 1942) Let $A$ and $B$ be subsets of $\mathbb{N}$. If $A \oplus B \ne \mathbb{N}$, we still have

$$\sigma(A \oplus B) \ge \sigma A + \sigma B.$$
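Mann's inequality can also be spot-checked on random finite sets, in the equivalent form $\sigma(A \oplus B) \ge \min(1, \sigma A + \sigma B)$. This sketch assumes that the finite form of Mann's theorem (for $n \notin A \oplus B$, $(A \oplus B)(n)/n$ is at least the minimum of $(A(m) + B(m))/m$ over $m \le n$ with $m \notin A \oplus B$) carries the inequality over to prefix densities $\min_{n \le L} A(n)/n$; the helper names and random parameters are arbitrary choices.

```python
import random
from fractions import Fraction

def prefix_density(members, limit):
    # min over 1 <= n <= limit of A(n)/n: an upper bound for sigma(A)
    count, best = 0, Fraction(1)
    for n in range(1, limit + 1):
        if n in members:
            count += 1
        best = min(best, Fraction(count, n))
    return best

def oplus(a, b, limit):
    # A ⊕ B cut off at limit: x + y with x in A ∪ {0}, y in B ∪ {0}, 0 < x + y <= limit
    a0, b0 = a | {0}, b | {0}
    return {x + y for x in a0 for y in b0 if 0 < x + y <= limit}

def mann_holds(a, b, limit):
    """Prefix form of Mann's theorem: d(A ⊕ B) >= min(1, d(A) + d(B))."""
    lhs = prefix_density(oplus(a, b, limit), limit)
    rhs = min(Fraction(1), prefix_density(a, limit) + prefix_density(b, limit))
    return lhs >= rhs

def mann_holds_on_random_sets(seed, trials, limit, p=0.35):
    rng = random.Random(seed)
    for _ in range(trials):
        # 1 is added to both sets so that their densities are positive
        a = {n for n in range(1, limit + 1) if rng.random() < p} | {1}
        b = {n for n in range(1, limit + 1) if rng.random() < p} | {1}
        if not mann_holds(a, b, limit):
            return False
    return True

print(mann_holds_on_random_sets(seed=1942, trials=100, limit=120))
```

Because $\sigma A + \sigma B \ge \sigma A + \sigma B - \sigma A \cdot \sigma B$, this is a strictly stronger check than Schnirelmann's inequality whenever both densities are positive.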

An analogue of this theorem for lower asymptotic density was obtained by Kneser.[4] At a later date, E. Artin and P. Scherk simplified the proof of Mann's theorem.[5]

Waring's problem

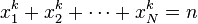

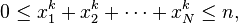

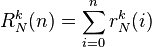

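Let $k$ and $N$ be natural numbers. Let $\mathfrak{G}^k = \{i^k\}_{i=1}^\infty$, and write $N\mathfrak{G}^k$ for the $N$-fold sumset $\mathfrak{G}^k \oplus \cdots \oplus \mathfrak{G}^k$. Define $r_N^k(n)$ to be the number of non-negative integral solutions to the equation

$$x_1^k + x_2^k + \cdots + x_N^k = n$$

and $R_N^k(n)$ to be the number of non-negative integral solutions to the inequality

$$0 \le x_1^k + x_2^k + \cdots + x_N^k \le n$$

in the variables $x_i$, respectively. Thus $R_N^k(n) = \sum_{i=0}^{n} r_N^k(i)$. We have

- $r_N^k(n) > 0 \iff n \in N\mathfrak{G}^k$ (for $n \ge 1$),
- $R_N^k(n) \ge \left(\frac{n}{N}\right)^{N/k}$, since every choice with $0 \le x_i \le (n/N)^{1/k}$ is a solution.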
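For small parameters both counts can be computed by brute force over all $N$-tuples, which makes the facts $r_N^k(n) > 0 \iff n \in N\mathfrak{G}^k$ and $R_N^k(n) \ge (n/N)^{N/k}$ easy to spot-check. A sketch; the function names are our own.

```python
from itertools import product

def kth_root_floor(n, k):
    """Largest integer x >= 0 with x**k <= n (exact, no floating point)."""
    x = 0
    while (x + 1) ** k <= n:
        x += 1
    return x

def solution_counts(N, k, n):
    """Brute force: return (r, R) where r[m] = r_N^k(m) for 0 <= m <= n
    and R = R_N^k(n), counting tuples (x_1, ..., x_N) of non-negative integers."""
    powers = [x ** k for x in range(kth_root_floor(n, k) + 1)]
    r = [0] * (n + 1)
    for terms in product(powers, repeat=N):
        s = sum(terms)
        if s <= n:
            r[s] += 1
    return r, sum(r)  # R_N^k(n) is the sum of r_N^k(m) over 0 <= m <= n

N, k, n = 4, 2, 60
r, R = solution_counts(N, k, n)
print(all(r[m] > 0 for m in range(1, n + 1)))  # four squares reach every m <= 60
print(R, (n / N) ** (N / k))                   # R is at least (n/N)^(N/k) = 225.0
```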

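The $N$-dimensional body defined by $0 \le x_1^k + \cdots + x_N^k \le n$ (with all $x_i \ge 0$) lies inside the hypercube $[0, n^{1/k}]^N$ of volume $n^{N/k}$; counting lattice points, each $x_i$ takes at most $n^{1/k} + 1$ values, hence $R_N^k(n) = \sum_{i=0}^{n} r_N^k(i) \le (n^{1/k} + 1)^N \le 2^N n^{N/k}$ for $n \ge 1$. So on average over $0 \le m \le n$, the value $r_N^k(m)$ is of order at most $n^{N/k - 1}$. The hard part is to show that every single value $r_N^k(m)$ with $0 \le m \le n$ is bounded by a constant times this average order: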

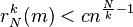

Lemma. (Linnik) For all

there exists

and a constant

, depending only on

, such that for all

,

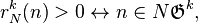

for all

With this at hand, the following theorem can be elegantly proved.

Theorem. For all $k \in \mathbb{N}$ there exists $N \in \mathbb{N}$ for which $\sigma(N\mathfrak{G}^k) > 0$.
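The lemma yields the theorem through a short counting argument, sketched here in the notation above; we write $A = N\mathfrak{G}^k$ for brevity and present this standard route as a sketch rather than as the source's own proof. It uses that $r_N^k(m) > 0$ only for $m \in A \cup \{0\}$, that there are $A(n) + 1$ such $m \le n$, and that $1 = 1^k \in A$, so $A(n) \ge 1$:

```latex
\begin{aligned}
\Bigl(\frac{n}{N}\Bigr)^{N/k} \le R_N^k(n)
  &= \sum_{\substack{0 \le m \le n \\ m \in A \cup \{0\}}} r_N^k(m)
   \le \bigl(A(n) + 1\bigr) \max_{0 \le m \le n} r_N^k(m)
   < \bigl(A(n) + 1\bigr)\, c\, n^{N/k - 1}, \\
\text{hence}\quad A(n) + 1 &> \frac{n}{c\, N^{N/k}}, \\
\text{and since } A(n) \ge 1:\quad \frac{A(n)}{n} &\ge \frac{A(n) + 1}{2n}
  > \frac{1}{2c\, N^{N/k}} \quad \text{for all } n \ge 1, \\
\text{so}\quad \sigma(N\mathfrak{G}^k) &\ge \frac{1}{2c\, N^{N/k}} > 0.
\end{aligned}
```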

Combining this with Schnirelmann's theorem above, we obtain the general solution to Waring's problem:

Corollary. (Hilbert 1909) For all $k \in \mathbb{N}$ there exists $N$, depending only on $k$, such that every positive integer $n$ can be expressed as the sum of at most $N$ many $k$-th powers.
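Hilbert's theorem says nothing about how large $N$ must be. For a finite range, the least number of $k$-th powers needed for each integer can be computed with a coin-change style dynamic programme; the sketch below (names ours) illustrates this over a finite range only and does not compute the constants in the corollary.

```python
def min_kth_powers(limit, k):
    """best[n] = least number of positive k-th powers with sum n, for 0 <= n <= limit.
    Unbounded coin-change dynamic programme; since 1 = 1**k, n ones always work."""
    powers = []
    x = 1
    while x ** k <= limit:
        powers.append(x ** k)
        x += 1
    best = list(range(limit + 1))        # start from the all-ones representation
    for n in range(1, limit + 1):
        for p in powers:                 # powers are increasing
            if p > n:
                break
            best[n] = min(best[n], best[n - p] + 1)
    return best

cubes = min_kth_powers(300, 3)
print(max(cubes[1:]))                               # 9
print([n for n in range(1, 301) if cubes[n] == 9])  # [23, 239]
```

For example, $23 = 2 \cdot 2^3 + 7 \cdot 1^3$ cannot be written with fewer than nine cubes.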

Schnirelmann's constant

In 1930 Schnirelmann used these ideas in conjunction with the Brun sieve to prove Schnirelmann's theorem,[1][2] that any natural number greater than one can be written as the sum of not more than C prime numbers, where C is an effectively computable constant:[6] Schnirelmann obtained C < 800000.[7] Schnirelmann's constant is the lowest number C with this property.[6]

Olivier Ramaré showed (Ramaré 1995) that Schnirelmann's constant is at most 7,[6] improving the earlier upper bound of 19 obtained by Hans Riesel and R. C. Vaughan.

Schnirelmann's constant is at least 3; Goldbach's conjecture implies that this is the constant's actual value.[6]
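A finite computation illustrates the lower bound of 3: 27 is not prime and $27 - 2 = 25$ is not prime, so 27 is neither a prime nor a sum of two primes (an odd sum of two primes must use the prime 2). The sketch below (a sieve plus a coin-change dynamic programme; names ours) computes the least number of primes for every $n$ up to a bound; it proves nothing about larger $n$.

```python
from math import isqrt

def prime_flags(limit):
    """Sieve of Eratosthenes: flags[n] is True iff n is prime (0 <= n <= limit)."""
    flags = [False, False] + [True] * (limit - 1)
    for p in range(2, isqrt(limit) + 1):
        if flags[p]:
            flags[p * p :: p] = [False] * len(range(p * p, limit + 1, p))
    return flags

def fewest_primes(limit):
    """best[n] = least number of primes (repetition allowed) summing to n;
    best[0] = 0 and best[1] stays infinite (1 is not a sum of primes)."""
    flags = prime_flags(limit)
    primes = [p for p in range(2, limit + 1) if flags[p]]
    best = [0] + [float("inf")] * limit
    for n in range(2, limit + 1):
        for p in primes:
            if p > n:
                break
            best[n] = min(best[n], best[n - p] + 1)
    return best

best = fewest_primes(1000)
print(best[27], max(best[2:]))  # 3 3
```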

Essential components

Khintchin proved that the sequence of squares, though of zero Schnirelmann density, when added to a sequence of Schnirelmann density between 0 and 1, increases the density:

This was soon simplified and extended by Erdős, who showed, that if A is any sequence with Schnirelmann density α and B is an additive basis of order k then

and this was improved by Plünnecke to

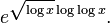
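To see that Plünnecke's bound $\alpha^{1-1/k}$ really improves Erdős's bound $\alpha + \alpha(1-\alpha)/(2k)$, note that $\alpha^{1-1/k} = \alpha\, e^{\ln(1/\alpha)/k} \ge \alpha\bigl(1 + \tfrac{1-\alpha}{k}\bigr)$ (using $e^t \ge 1 + t$ and $\ln(1/\alpha) \ge 1 - \alpha$), which exceeds Erdős's bound for every $0 < \alpha < 1$. The sketch below only tabulates both bounds for a few values; the function names are ours.

```python
def erdos_bound(alpha, k):
    """Erdős: sigma(A ⊕ B) >= alpha + alpha(1 - alpha)/(2k) for a basis B of order k."""
    return alpha + alpha * (1 - alpha) / (2 * k)

def plunnecke_bound(alpha, k):
    """Plünnecke: sigma(A ⊕ B) >= alpha**(1 - 1/k)."""
    return alpha ** (1 - 1 / k)

for k in (2, 4, 9):
    for alpha in (0.1, 0.5, 0.9):
        print(f"k={k}, alpha={alpha}: Erdős {erdos_bound(alpha, k):.4f}, "
              f"Plünnecke {plunnecke_bound(alpha, k):.4f}")
```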

Sequences with this property, namely that adding them raises every Schnirelmann density strictly between 0 and 1, were named essential components by Khintchin. Linnik showed that an essential component need not be an additive basis[10] by constructing an essential component that has $x^{o(1)}$ elements less than $x$, whereas an additive basis of order $k$ must have at least $x^{1/k} - 1$ elements up to $x$. More precisely, the sequence has

$$e^{(\log x)^c}$$

elements less than $x$ for some $c < 1$. This was improved by E. Wirsing to

$$e^{\sqrt{\log x}\,\log\log x}.$$

For a while, it remained an open problem how many elements an essential component must have. Finally, Ruzsa determined that an essential component has at least $(\log x)^c$ elements up to $x$, for some $c > 1$, and for every $c > 1$ there is an essential component which has at most $(\log x)^c$ elements up to $x$.[11]

References

- [1] Schnirelmann, L.G. (1930). "On the additive properties of numbers", first published in "Proceedings of the Don Polytechnic Institute in Novocherkassk" (in Russian), vol XIV (1930), pp. 3–27, and reprinted in "Uspekhi Matematicheskikh Nauk" (in Russian), 1939, no. 6, 9–25.

- [2] Schnirelmann, L.G. (1933). First published as "Über additive Eigenschaften von Zahlen" in "Mathematische Annalen" (in German), vol 107 (1933), 649–690, and reprinted as "On the additive properties of numbers" in "Uspekhi Matematicheskikh Nauk" (in Russian), 1940, no. 7, 7–46.

- [3] Nathanson (1996) pp. 191–192

- [4] Nathanson (1990) p. 397

- [5] E. Artin and P. Scherk (1943). "On the sums of two sets of integers". Ann. of Math. 44: 138–142.

- [6] Nathanson (1996) p. 208

- [7] Gelfond & Linnik (1966) p. 136

- [8] Ruzsa (2009) p. 177

- [9] Ruzsa (2009) p. 179

- [10] Linnik, Yu. V. (1942). "On Erdős's theorem on the addition of numerical sequences". Mat. Sb. 10: 67–78. Zbl 0063.03574.

- [11] Ruzsa (2009) p. 184

- Hilbert, David (1909). "Beweis für die Darstellbarkeit der ganzen Zahlen durch eine feste Anzahl nter Potenzen (Waringsches Problem)". Mathematische Annalen 67 (3): 281–300. doi:10.1007/BF01450405. ISSN 0025-5831. MR 1511530.

- Schnirelmann, L.G. (1930). "On additive properties of numbers". Ann. Inst. polytechn. Novočerkassk (in Russian) 14: 3–28. Zbl JFM 56.0892.02.

- Schnirelmann, L.G. (1933). "Über additive Eigenschaften von Zahlen". Math. Ann. (in German) 107: 649–690. doi:10.1007/BF01448914. Zbl 0006.10402.

- Mann, Henry B. (1942). "A proof of the fundamental theorem on the density of sums of sets of positive integers". Annals of Mathematics. Second Series 43 (3): 523–527. doi:10.2307/1968807. ISSN 0003-486X. JSTOR 1968807. MR 0006748. Zbl 0061.07406.

- Gelfond, A.O.; Linnik, Yu. V. (1966). L.J. Mordell, ed. Elementary Methods in Analytic Number Theory. George Allen & Unwin.

- Mann, Henry B. (1976). Addition Theorems: The Addition Theorems of Group Theory and Number Theory (Corrected reprint of 1965 Wiley ed.). Huntington, New York: Robert E. Krieger Publishing Company. ISBN 0-88275-418-1. MR 424744.

- Nathanson, Melvyn B. (1990). "Best possible results on the density of sumsets". In Berndt, Bruce C.; Diamond, Harold G.; Halberstam, Heini et al. Analytic number theory. Proceedings of a conference in honor of Paul T. Bateman, held on April 25-27, 1989, at the University of Illinois, Urbana, IL (USA). Progress in Mathematics 85. Boston: Birkhäuser. pp. 395–403. ISBN 0-8176-3481-9. Zbl 0722.11007.

- Ramaré, O. (1995). "On Šnirel'man's constant". Annali della Scuola Normale Superiore di Pisa. Classe di Scienze. Serie IV 22 (4): 645–706. Zbl 0851.11057. Retrieved 2011-03-28.

- Nathanson, Melvyn B. (1996). Additive Number Theory: the Classical Bases. Graduate Texts in Mathematics 164. Springer-Verlag. ISBN 0-387-94656-X. Zbl 0859.11002.

- Nathanson, Melvyn B. (2000). Elementary Methods in Number Theory. Graduate Texts in Mathematics 195. Springer-Verlag. pp. 359–367. ISBN 0-387-98912-9. Zbl 0953.11002.

- Khinchin, A. Ya. (1998). "Three Pearls of Number Theory". Mineola, NY: Dover. ISBN 978-0-486-40026-6. Has a proof of Mann's theorem and the Schnirelmann-density proof of Waring's conjecture.

- Artin, Emil; Scherk, P. (1943). "On the sums of two sets of integers". Ann. of Math. 44. pp. 138–142.

- Cojocaru, Alina Carmen; Murty, M. Ram (2005). An introduction to sieve methods and their applications. London Mathematical Society Student Texts 66. Cambridge University Press. pp. 100–105. ISBN 0-521-61275-6.

- Ruzsa, Imre Z. (2009). "Sumsets and structure". In Geroldinger, Alfred; Ruzsa, Imre Z. Combinatorial number theory and additive group theory. Advanced Courses in Mathematics CRM Barcelona. Elsholtz, C.; Freiman, G.; Hamidoune, Y. O.; Hegyvári, N.; Károlyi, G.; Nathanson, M.; Solymosi, J.; Stanchescu, Y. With a foreword by Javier Cilleruelo, Marc Noy and Oriol Serra (Coordinators of the DocCourse). Basel: Birkhäuser. pp. 87–210. ISBN 978-3-7643-8961-1. Zbl 1221.11026.