Row space

In linear algebra, the row space of a matrix is the set of all possible linear combinations of its row vectors. Let K be a field (such as real or complex numbers). The row space of an m × n matrix with components from K is a linear subspace of the n-space Kn. The dimension of the row space is called the row rank of the matrix.[1]

A definition for matrices over a ring K (such as integers) is also possible.[2]

Definition

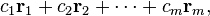

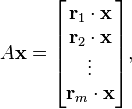

Let K be a field of scalars. Let A be an m × n matrix, with row vectors r1, r2, ... , rm. A linear combination of these vectors is any vector of the form

where c1, c2, ... , cm are scalars. The set of all possible linear combinations of r1, ... , rm is called the row space of A. That is, the row space of A is the span of the vectors r1, ... , rm.

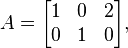

For example, if

then the row vectors are r1 = (1, 0, 2) and r2 = (0, 1, 0). A linear combination of r1 and r2 is any vector of the form

The set of all such vectors is the row space of A. In this case, the row space is precisely the set of vectors (x, y, z) ∈ K3 satisfying the equation z = 2x (using Cartesian coordinates, this set is a plane through the origin in three-dimensional space).

For a matrix that represents a homogeneous system of linear equations, the row space consists of all linear equations that follow from those in the system.

The column space of A is equal to the row space of AT.

Basis

The row space is not affected by elementary row operations. This makes it possible to use row reduction to find a basis for the row space.

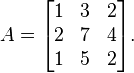

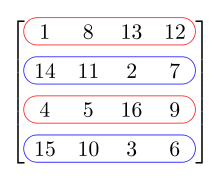

For example, consider the matrix

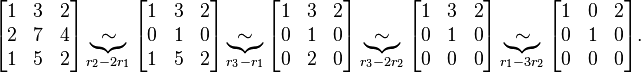

The rows of this matrix span the row space, but they may not be linearly independent, in which case the rows will not be a basis. To find a basis, we reduce A to row echelon form:

r1, r2, r3 represents the rows.

Once the matrix is in echelon form, the nonzero rows are a basis for the row space. In this case, the basis is { (1, 3, 2), (0, 1, 0) }. Another possible basis { (1, 0, 2), (0, 1, 0) } comes from a further reduction.[3]

This algorithm can be used in general to find a basis for the span of a set of vectors. If the matrix is further simplified to reduced row echelon form, then the resulting basis is uniquely determined by the row space.

Dimension

The dimension of the row space is called the rank of the matrix. This is the same as the maximum number of linearly independent rows that can be chosen from the matrix, or equivalently the number of pivots. For example, the 3 × 3 matrix in the example above has rank two.[3]

The rank of a matrix is also equal to the dimension of the column space. The dimension of the null space is called the nullity of the matrix, and is related to the rank by the following equation:

where n is the number of columns of the matrix A. The equation above is known as the rank-nullity theorem.

Relation to the null space

The null space of matrix A is the set of all vectors x for which Ax = 0. The product of the matrix A and the vector x can be written in terms of the dot product of vectors:

where r1, ... , rm are the row vectors of A. Thus Ax = 0 if and only if x is orthogonal (perpendicular) to each of the row vectors of A.

It follows that the null space of A is the orthogonal complement to the row space. For example, if the row space is a plane through the origin in three dimensions, then the null space will be the perpendicular line through the origin. This provides a proof of the rank-nullity theorem (see dimension above).

The row space and null space are two of the four fundamental subspaces associated with a matrix A (the other two being the column space and left null space).

Relation to coimage

If V and W are vector spaces, then the kernel of a linear transformation T: V → W is the set of vectors v ∈ V for which T(v) = 0. The kernel of a linear transformation is analogous to the null space of a matrix.

If V is an inner product space, then the orthogonal complement to the kernel can be thought of as a generalization of the row space. This is sometimes called the coimage of T. The transformation T is one-to-one on its coimage, and the coimage maps isomorphically onto the image of T.

When V is not an inner product space, the coimage of T can be defined as the quotient space V / ker(T).

Notes

- ↑ Linear algebra, as discussed in this article, is a very well established mathematical discipline for which there are many sources. Almost all of the material in this article can be found in Lay 2005, Meyer 2001, and Strang 2005.

- ↑ A definition and certain properties for rings are the same with replacement of the "vector n-space" Kn with "left free module" and "linear subspace" with "submodule". For non-commutative rings this row space is sometimes disambiguated as left row space.

- ↑ 3.0 3.1 The example is valid over real, rational numbers, and other number fields. It is not necessarily correct over fields and rings with non-zero characteristic.

References

Textbooks

- Axler, Sheldon Jay (1997), Linear Algebra Done Right (2nd ed.), Springer-Verlag, ISBN 0-387-98259-0

- Lay, David C. (August 22, 2005), Linear Algebra and Its Applications (3rd ed.), Addison Wesley, ISBN 978-0-321-28713-7

- Meyer, Carl D. (February 15, 2001), Matrix Analysis and Applied Linear Algebra, Society for Industrial and Applied Mathematics (SIAM), ISBN 978-0-89871-454-8

- Poole, David (2006), Linear Algebra: A Modern Introduction (2nd ed.), Brooks/Cole, ISBN 0-534-99845-3

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Leon, Steven J. (2006), Linear Algebra With Applications (7th ed.), Pearson Prentice Hall

External links

| Wikibooks has a book on the topic of: Linear Algebra/Column and Row Spaces |

- Weisstein, Eric W., "Row Space", MathWorld.

- Gilbert Strang, MIT Linear Algebra Lecture on the Four Fundamental Subspaces at Google Video, from MIT OpenCourseWare