Power transform

In statistics, a power transform is a family of functions applied to create a monotonic (rank-preserving) transformation of data using power functions. It is a data transformation technique used to stabilize variance, make the data more normal distribution-like, improve the validity of measures of association (such as the Pearson correlation between variables), and support other data stabilization procedures.

Definition

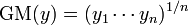

The power transformation is defined as a continuously varying function, with respect to the power parameter λ, in a piece-wise function form that makes it continuous at the point of singularity (λ = 0). For data vectors (y1,..., yn) in which each yi > 0, the power transform is

where

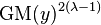

is the geometric mean of the observations y1, ..., yn.

The inclusion of the (λ − 1)th power of the geometric mean in the denominator simplifies the scientific interpretation of any equation involving  , because the units of measurement do not change as λ changes.

, because the units of measurement do not change as λ changes.
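The definition above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the sample data are hypothetical, and only the standard library is assumed.

```python
import math

def geometric_mean(ys):
    """Geometric mean of strictly positive observations."""
    return math.exp(sum(math.log(y) for y in ys) / len(ys))

def power_transform(ys, lam):
    """Geometric-mean-normalized power transform of positive data."""
    if any(y <= 0 for y in ys):
        raise ValueError("all observations must be strictly positive")
    gm = geometric_mean(ys)
    if lam == 0:
        # Limiting case: GM(y) * ln(y_i)
        return [gm * math.log(y) for y in ys]
    scale = lam * gm ** (lam - 1)
    return [(y ** lam - 1) / scale for y in ys]

data = [1.0, 2.0, 4.0, 8.0]  # hypothetical sample; its geometric mean is about 2.83
at_zero = power_transform(data, 0)
near_zero = power_transform(data, 1e-8)  # should be close to the lambda = 0 case
identity_like = power_transform(data, 1)  # lambda = 1 reduces to y_i - 1
```

Comparing `near_zero` with `at_zero` illustrates the continuity at λ = 0, and `identity_like` shows that λ = 1 only shifts the data.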

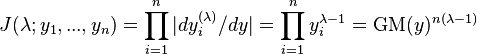

Box and Cox (1964) introduced the geometric mean into this transformation by first including the Jacobian of the rescaled power transformation

$$\frac{y^\lambda - 1}{\lambda}$$

with the likelihood. This Jacobian is as follows:

$$J(\lambda; y_1, \ldots, y_n) = \prod_{i=1}^n \left| \frac{d}{dy_i} \left( \frac{y_i^\lambda - 1}{\lambda} \right) \right| = \prod_{i=1}^n y_i^{\lambda - 1} = \operatorname{GM}(y)^{n(\lambda - 1)}$$

This allows the normal log likelihood at its maximum to be written as follows:

$$\log(\mathcal{L}(\hat\mu, \hat\sigma)) = -\frac{n}{2}\left(\log(2\pi\hat\sigma^2) + 1\right) + n(\lambda - 1)\log(\operatorname{GM}(y)) = -\frac{n}{2}\left(\log\left(\frac{2\pi\hat\sigma^2}{\operatorname{GM}(y)^{2(\lambda - 1)}}\right) + 1\right)$$

From here, absorbing $\operatorname{GM}(y)^{2(\lambda - 1)}$ into the expression for $\hat\sigma^2$ produces an expression that establishes that minimizing the sum of squares of residuals from $y_i^{(\lambda)}$ is equivalent to maximizing the sum of the normal log likelihood of deviations from $(y^\lambda - 1)/\lambda$ and the log of the Jacobian of the transformation.
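The equivalence described above can be checked numerically. The sketch below assumes the simplest possible model (a constant mean with normal errors, no regressors) and a made-up sample; the function names are illustrative. It computes the maximized log likelihood once from the rescaled transformation plus the log Jacobian, and once from the geometric-mean-normalized transformation alone.

```python
import math

def profile_loglik_with_jacobian(ys, lam):
    """Maximized normal log likelihood of (y^lam - 1)/lam plus the log Jacobian (lam != 0)."""
    n = len(ys)
    w = [(y ** lam - 1) / lam for y in ys]
    mean_w = sum(w) / n
    sigma2 = sum((v - mean_w) ** 2 for v in w) / n  # ML variance estimate
    log_jacobian = (lam - 1) * sum(math.log(y) for y in ys)
    return -0.5 * n * (math.log(2 * math.pi * sigma2) + 1) + log_jacobian

def profile_loglik_normalized(ys, lam):
    """Same quantity, computed from the GM-normalized transform with no Jacobian term."""
    n = len(ys)
    gm = math.exp(sum(math.log(y) for y in ys) / n)
    z = [(y ** lam - 1) / (lam * gm ** (lam - 1)) for y in ys]
    mean_z = sum(z) / n
    sigma2 = sum((v - mean_z) ** 2 for v in z) / n
    return -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)

sample = [0.8, 1.3, 2.1, 3.4, 5.5, 8.9]  # hypothetical positive data
for lam in (-1.0, 0.5, 2.0):
    a = profile_loglik_with_jacobian(sample, lam)
    b = profile_loglik_normalized(sample, lam)
    print(f"lambda={lam:+.1f}  with Jacobian={a:.6f}  normalized={b:.6f}")
```

The two columns should agree up to floating-point error, which is why minimizing the residual sum of squares of the normalized transform is enough to locate the best λ.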

The value at Y = 1 for any λ is 0, and the derivative with respect to Y there is 1 for any λ. Sometimes Y is a version of some other variable scaled to give Y = 1 at some sort of average value.

The transformation is a power transformation, but done in such a way as to make it continuous with the parameter λ at λ = 0. It has proved popular in regression analysis, including econometrics.
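The continuity at λ = 0 can be checked with a short worked limit, sketched here for a single positive value y using the series expansion of the exponential:

$$\lim_{\lambda \to 0} \frac{y^\lambda - 1}{\lambda} = \lim_{\lambda \to 0} \frac{e^{\lambda \ln y} - 1}{\lambda} = \lim_{\lambda \to 0} \frac{\lambda \ln y + \tfrac{1}{2}\lambda^2 (\ln y)^2 + \cdots}{\lambda} = \ln y$$

The numerator vanishes at y = 1, which gives the value 0 there, and differentiating $(y^\lambda - 1)/\lambda$ with respect to y gives $y^{\lambda - 1}$, which equals 1 at y = 1 for every λ.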

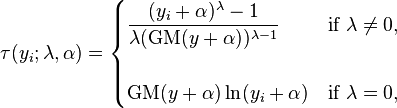

Box and Cox also proposed a more general form of the transformation that incorporates a shift parameter.

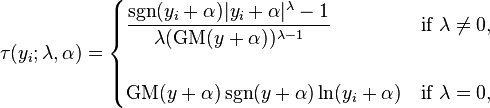

which holds if yi + α > 0 for all i. If τ(Y, λ, α) follows a truncated normal distribution, then Y is said to follow a Box–Cox distribution.

Bickel and Doksum eliminated the need to use a truncated distribution by extending the range of the transformation to all y, as follows:

$$\tau(y_i; \lambda, \alpha) = \dfrac{\operatorname{sgn}(y_i + \alpha)\,|y_i + \alpha|^{\lambda} - 1}{\lambda\,(\operatorname{GM}(y + \alpha))^{\lambda - 1}}, \quad \lambda > 0,$$

where sgn(.) is the sign function. This change in definition has little practical import as long as $-\alpha$ is less than $\min(y_i)$, which it usually is.[1]

Bickel and Doksum also proved that the parameter estimates are consistent and asymptotically normal under appropriate regularity conditions, though the standard Cramér–Rao lower bound can substantially underestimate the variance when parameter values are small relative to the noise variance.[2] However, this problem of underestimating the variance may not be a substantive problem in many applications.[3][4]

Box–Cox transformation

The one-parameter Box–Cox transformations are defined as:

$$y_i^{(\lambda)} = \begin{cases} \dfrac{y_i^\lambda - 1}{\lambda} & \text{if } \lambda \neq 0, \\[8pt] \ln{(y_i)} & \text{if } \lambda = 0, \end{cases}$$

and the two-parameter Box–Cox transformations as:

$$y_i^{(\boldsymbol{\lambda})} = \begin{cases} \dfrac{(y_i + \lambda_2)^{\lambda_1} - 1}{\lambda_1} & \text{if } \lambda_1 \neq 0, \\[8pt] \ln{(y_i + \lambda_2)} & \text{if } \lambda_1 = 0, \end{cases}$$

as described in the original article.[5] Moreover, the first transformations hold for $y_i > 0$ and the second for $y_i > -\lambda_2$.[5]
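As a minimal sketch of the two-parameter form, the Python functions below apply the transformation to a single value and undo it again. The inverse is added here purely for illustration and is not part of the definition above; the function names are hypothetical.

```python
import math

def boxcox2(y, lam1, lam2):
    """Two-parameter Box-Cox transform of one value; requires y + lam2 > 0."""
    shifted = y + lam2
    if shifted <= 0:
        raise ValueError("requires y + lam2 > 0")
    if lam1 == 0:
        return math.log(shifted)
    return (shifted ** lam1 - 1) / lam1

def boxcox2_inverse(t, lam1, lam2):
    """Inverse of boxcox2 (illustrative helper, assumes t is in the transform's range)."""
    if lam1 == 0:
        return math.exp(t) - lam2
    base = lam1 * t + 1
    if base <= 0:
        raise ValueError("t is outside the range of the transform")
    return base ** (1 / lam1) - lam2

# A negative observation becomes admissible once the shift makes y + lam2 positive.
t = boxcox2(-0.5, lam1=0.5, lam2=1.0)
y_back = boxcox2_inverse(t, lam1=0.5, lam2=1.0)
```

Setting `lam2 = 0` recovers the one-parameter transformation, which is why that case needs strictly positive data.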

The parameter $\lambda$ is estimated using the profile likelihood function.
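One simple way to carry out this estimation is a grid search over the profile log likelihood. The sketch below assumes a constant-mean normal model (no regressors), drops additive constants that do not depend on λ, and uses a made-up sample whose logarithms are symmetric about zero, so the estimate should land near λ = 0. The function names and the grid are illustrative choices.

```python
import math

def boxcox(y, lam):
    """One-parameter Box-Cox transform of a single positive value."""
    return math.log(y) if lam == 0 else (y ** lam - 1) / lam

def profile_loglik(ys, lam):
    """Profile log likelihood of lam, up to an additive constant."""
    n = len(ys)
    t = [boxcox(y, lam) for y in ys]
    mean_t = sum(t) / n
    sigma2 = sum((v - mean_t) ** 2 for v in t) / n  # ML variance estimate
    log_jacobian = (lam - 1) * sum(math.log(y) for y in ys)
    return -0.5 * n * math.log(sigma2) + log_jacobian

def estimate_lambda(ys, grid):
    """Return the grid value that maximizes the profile log likelihood."""
    return max(grid, key=lambda lam: profile_loglik(ys, lam))

# Hypothetical data: exponentials of values symmetric about zero.
ys = [math.exp(x) for x in (-1.5, -1.0, -0.5, -0.2, 0.0, 0.2, 0.5, 1.0, 1.5)]
grid = [i / 100 for i in range(-200, 201)]
lam_hat = estimate_lambda(ys, grid)
print(f"estimated lambda: {lam_hat:.2f}")
```

A grid is used only to keep the example dependency-free; a numerical optimizer over the same function would serve the same purpose.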

Use of the power transform

- Power transforms are used in a wide range of fields, including multi-resolution and wavelet analysis, statistical data analysis, medical research, modeling of physical processes, geochemical data analysis, epidemiology[6] and many other clinical, environmental and social research areas.

Example

The BUPA liver data set[7] contains data on liver enzymes ALT and γGT. Suppose we are interested in using log(γGT) to predict ALT. A plot of the data appears in panel (a) of the figure. There appears to be non-constant variance, and a Box–Cox transformation might help.

The log-likelihood of the power parameter appears in panel (b). The horizontal reference line is at a distance of $\chi^2_1/2$ from the maximum (with $\chi^2_1$ taken at its 0.95 quantile, about 3.84) and can be used to read off an approximate 95% confidence interval for λ. It appears as though a value close to zero would be good, so we take logs.
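Reading an interval off the log-likelihood curve can be sketched as follows. The data here are hypothetical (not the BUPA data), the model is a constant mean with normal errors, and the cut-off 1.92 is half of 3.84, the approximate 0.95 quantile of the chi-squared distribution with one degree of freedom. All function names are illustrative.

```python
import math

def boxcox(y, lam):
    """One-parameter Box-Cox transform of a single positive value."""
    return math.log(y) if lam == 0 else (y ** lam - 1) / lam

def profile_loglik(ys, lam):
    """Profile log likelihood of lam, up to an additive constant."""
    n = len(ys)
    t = [boxcox(y, lam) for y in ys]
    mean_t = sum(t) / n
    sigma2 = sum((v - mean_t) ** 2 for v in t) / n
    return -0.5 * n * math.log(sigma2) + (lam - 1) * sum(math.log(y) for y in ys)

def likelihood_interval(ys, grid, drop=1.92):
    """Grid values whose log likelihood lies within `drop` of the maximum."""
    values = [(lam, profile_loglik(ys, lam)) for lam in grid]
    best = max(v for _, v in values)
    inside = [lam for lam, v in values if v >= best - drop]
    return min(inside), max(inside)

# Hypothetical data: exponentials of values symmetric about zero.
ys = [math.exp(x) for x in (-1.5, -1.0, -0.5, -0.2, 0.0, 0.2, 0.5, 1.0, 1.5)]
grid = [i / 100 for i in range(-200, 201)]
low, high = likelihood_interval(ys, grid)
print(f"approximate 95% interval for lambda: [{low:.2f}, {high:.2f}]")
```

If the interval contains zero, as it should for this sample, taking logs is a defensible choice.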

Possibly, the transformation could be improved by adding a shift parameter to the log transformation. Panel (c) of the figure shows the log-likelihood. In this case, the maximum of the likelihood is close to zero suggesting that a shift parameter is not needed. The final panel shows the transformed data with a superimposed regression line.

Note that although Box–Cox transformations can make big improvements in model fit, there are some issues that the transformation cannot help with. In the current example, the data are rather heavy-tailed so that the assumption of normality is not realistic and a robust regression approach leads to a more precise model.

Econometric application

Economists often characterize production relationships by some variant of the Box–Cox transformation.

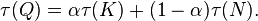

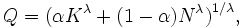

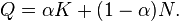

Consider a common representation of production Q as dependent on services provided by a capital stock K and by labor hours N:

Solving for Q by inverting the Box–Cox transformation we find

which is known as the constant elasticity of substitution (CES) production function.

The CES production function is a homogeneous function of degree one.

When λ = 1, this produces the linear production function:

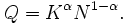

When λ → 0 this produces the famous Cobb–Douglas production function:
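The special cases above can be checked numerically with a small sketch. It assumes a share parameter `alpha` between 0 and 1 placed on capital (an illustrative naming choice), and the input values are made up.

```python
import math

def boxcox(x, lam):
    """One-parameter Box-Cox transform of a positive value."""
    return math.log(x) if lam == 0 else (x ** lam - 1) / lam

def ces_output(K, N, alpha, lam):
    """CES output obtained by inverting tau(Q) = alpha*tau(K) + (1 - alpha)*tau(N)."""
    if lam == 0:
        # Limiting case: Cobb-Douglas
        return K ** alpha * N ** (1 - alpha)
    return (alpha * K ** lam + (1 - alpha) * N ** lam) ** (1 / lam)

K, N, alpha = 4.0, 9.0, 0.5  # hypothetical inputs
linear = ces_output(K, N, alpha, 1.0)          # alpha*K + (1 - alpha)*N
cobb_douglas = ces_output(K, N, alpha, 0.0)    # K**alpha * N**(1 - alpha)
near_zero = ces_output(K, N, alpha, 1e-6)      # should approach the Cobb-Douglas value
doubled = ces_output(2 * K, 2 * N, alpha, 0.3) # homogeneity: doubling inputs doubles output
```

Comparing `doubled` with twice `ces_output(K, N, alpha, 0.3)` illustrates homogeneity of degree one.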

Activities and demonstrations

The SOCR resource pages contain a number of hands-on interactive activities[8] demonstrating the Box–Cox (Power) Transformation using Java applets and charts. These directly illustrate the effects of this transform on Q-Q plots, X-Y scatterplots, time-series plots and histograms.

Notes

- [1] Bickel and Doksum (1981)

- [2] Bickel and Doksum (1981)

- [3] Sakia, R. M. (1992), "The Box-Cox transformation technique: a review", The Statistician 41: 169–178, doi:10.2307/2348250

- [4] Li, Fengfei (April 11, 2005), Box-Cox Transformations: An Overview (slide presentation), Sao Paulo, Brazil: University of Sao Paulo, Brazil, retrieved 2014-11-02

- [5] Box, George E. P.; Cox, D. R. (1964). "An analysis of transformations". Journal of the Royal Statistical Society, Series B 26 (2): 211–252. JSTOR 2984418. MR 192611.

- [6] Peters, J. L.; Rushton, L.; Sutton, A. J.; Jones, D. R.; Abrams, K. R.; Mugglestone, M. A. (2005). "Bayesian methods for the cross-design synthesis of epidemiological and toxicological evidence". Journal of the Royal Statistical Society: Series C (Applied Statistics) 54: 159. doi:10.1111/j.1467-9876.2005.00476.x.

- [7] BUPA liver disorder dataset

- [8] Power Transform Family Graphs, SOCR webpages

References

- Box, George E. P.; Cox, D. R. (1964). "An analysis of transformations". Journal of the Royal Statistical Society, Series B 26 (2): 211–252. JSTOR 2984418. MR 192611.

- Bickel, Peter J.; Doksum, Kjell A. (June 1981), "An analysis of transformations revisited", Journal of the American Statistical Association (American Statistical Association) 76 (374): 296–311, doi:10.1080/01621459.1981.10477649

- Carroll, RJ and Ruppert, D. On prediction and the power transformation family. Biometrika 68: 609–615.

- DeGroot, M. H. (1987). "A Conversation with George Box". Statistical Science 2 (3): 239–258. doi:10.1214/ss/1177013223.

- Handelsman, DJ. Optimal Power Transformations for Analysis of Sperm Concentration and Other Semen Variables. Journal of Andrology, Vol. 23, No. 5, September/October 2002.

- Gluzman, S and Yukalov, VI. Self-similar power transforms in extrapolation problems. Journal of Mathematical Chemistry, Volume 39, Number 1 / January, 2006, doi:10.1007/s10910-005-9003-7, 47–56.

- Howarth, RJ and Earle, SAM. Application of a generalized power transformation to geochemical data Journal Mathematical Geology, Volume 11, Number 1 / February, 1979, DOI 10.1007/BF01043245, pages 45–62.

External links

- Nishii, R. (2001), "Box–Cox transformation", in Hazewinkel, Michiel, Encyclopedia of Mathematics, Springer, ISBN 978-1-55608-010-4 (fixed link)

$$y_i^{(\lambda)} = \begin{cases} \dfrac{y_i^\lambda - 1}{\lambda(\operatorname{GM}(y))^{\lambda - 1}}, & \text{if } \lambda \neq 0 \\[12pt] \operatorname{GM}(y)\ln{y_i}, & \text{if } \lambda = 0 \end{cases}$$