Pointwise mutual information

Pointwise mutual information (PMI),[1] or point mutual information, is a measure of association used in information theory and statistics.

Definition

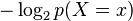

The PMI of a pair of outcomes x and y belonging to discrete random variables X and Y quantifies the discrepancy between the probability of their coincidence given their joint distribution and their individual distributions, assuming independence. Mathematically:

The mutual information (MI) of the random variables X and Y is the expected value of the PMI over all possible outcomes, taken with respect to the joint distribution $p(x,y)$:

$$\operatorname{I}(X;Y) = \sum_{x,y} p(x,y)\,\operatorname{pmi}(x;y).$$
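The definition and the expectation above translate directly into code. The following is a minimal Python sketch, assuming the joint distribution is given as a dictionary mapping (x, y) pairs to probabilities; the helper names `marginals`, `pmi`, and `mutual_information` are illustrative, not from any library. Base-2 logarithms are an assumption (any base works), and pairs with zero joint probability are treated as having a PMI of −∞ and contributing nothing to the MI.

```python
import math
from collections import defaultdict


def marginals(joint):
    """Return p(x) and p(y) from a joint table {(x, y): p(x, y)}."""
    p_x, p_y = defaultdict(float), defaultdict(float)
    for (x, y), p in joint.items():
        p_x[x] += p
        p_y[y] += p
    return dict(p_x), dict(p_y)


def pmi(joint, x, y, base=2):
    """pmi(x;y) = log p(x,y) / (p(x) p(y)); -inf if the pair never co-occurs."""
    p_x, p_y = marginals(joint)
    p_xy = joint.get((x, y), 0.0)
    if p_xy == 0.0:
        return -math.inf
    return math.log(p_xy / (p_x[x] * p_y[y]), base)


def mutual_information(joint, base=2):
    """I(X;Y): the expectation of pmi(x;y) under p(x, y), skipping zero cells."""
    p_x, p_y = marginals(joint)
    return sum(p * math.log(p / (p_x[x] * p_y[y]), base)
               for (x, y), p in joint.items() if p > 0)


# Independent variables: every pmi value is 0, so the MI is 0 as well.
uniform = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}
print(pmi(uniform, 0, 1), mutual_information(uniform))  # prints: 0.0 0.0
```

`pmi` recomputes the marginals on every call, which keeps the sketch short; for large tables one would compute them once and reuse them, as `mutual_information` does.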

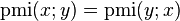

The measure is symmetric ($\operatorname{pmi}(x;y) = \operatorname{pmi}(y;x)$). It can take positive or negative values, but is zero if X and Y are independent. Note that even though PMI may be negative or positive, its expected value over all joint events (the MI) is non-negative, and zero only when X and Y are independent. PMI is maximized when X and Y are perfectly associated (i.e. $p(x|y) = 1$ or $p(y|x) = 1$), yielding the following bounds:

$$-\infty \leq \operatorname{pmi}(x;y) \leq \min\left[ -\log p(x), -\log p(y) \right].$$
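To see the bounds in action, here is a small Python check, assuming p(x, y), p(x), and p(y) are already known for the pair of interest; the function names `pmi` and `pmi_upper_bound` are hypothetical. With Y an exact copy of X, the pair (x=1, y=1) reaches the upper bound, while a pair that never co-occurs sits at −∞.

```python
import math


def pmi(p_xy, p_x, p_y, base=2):
    """pmi(x;y) = log p(x,y) / (p(x) p(y)); -inf when the pair never co-occurs."""
    return -math.inf if p_xy == 0 else math.log(p_xy / (p_x * p_y), base)


def pmi_upper_bound(p_x, p_y, base=2):
    """min[-log p(x), -log p(y)], the largest value pmi(x;y) can reach."""
    return min(-math.log(p_x, base), -math.log(p_y, base))


# Perfect association: Y copies X, so p(x=1, y=1) = p(x=1) = p(y=1) = 0.2.
print(pmi(0.2, 0.2, 0.2), pmi_upper_bound(0.2, 0.2))  # both about -log2(0.2), i.e. 2.32

# A pair that never co-occurs takes the lower bound.
print(pmi(0.0, 0.2, 0.8))  # -inf
```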

Finally, $\operatorname{pmi}(x;y)$ will increase if $p(x|y)$ is fixed but $p(x)$ decreases.
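Writing the definition in its conditional form makes this monotonicity explicit. The short derivation below is a sketch that treats $p(x|y)$ as a constant, $p(x) \in (0, 1]$ as the free variable, and $b > 1$ as the (otherwise unspecified) logarithm base:

```latex
\operatorname{pmi}(x;y) = \log_b p(x|y) - \log_b p(x),
\qquad
\frac{\partial\, \operatorname{pmi}(x;y)}{\partial\, p(x)} = -\frac{1}{p(x)\ln b} < 0 .
```

Since the derivative is negative everywhere on that interval, lowering $p(x)$ while holding $p(x|y)$ fixed can only raise the PMI.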

Here is an example to illustrate:

| x | y | p(x, y) |
|---|---|---|
| 0 | 0 | 0.1 |
| 0 | 1 | 0.7 |
| 1 | 0 | 0.15 |
| 1 | 1 | 0.05 |

Using this table we can marginalize to get the following additional table for the individual distributions:

| value | p(x) | p(y) |
|---|---|---|
| 0 | 0.8 | 0.25 |
| 1 | 0.2 | 0.75 |

With this example, we can compute four values for $\operatorname{pmi}(x;y)$. Using base-2 logarithms:

| Pair | pmi (bits) |
|---|---|
| pmi(x=0;y=0) | −1 |
| pmi(x=0;y=1) | 0.222392421 |
| pmi(x=1;y=0) | 1.584962501 |
| pmi(x=1;y=1) | −1.584962501 |

(For reference, the mutual information  would then be 0.214170945)

would then be 0.214170945)

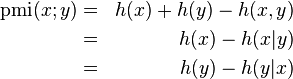
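The arithmetic of this example can be reproduced in a few lines of Python. The sketch below hard-codes the joint table from above, marginalizes it, and evaluates the base-2 PMI of each cell and the MI; the variable names are illustrative only.

```python
import math

# Joint distribution from the example table, keyed by (x, y).
joint = {(0, 0): 0.1, (0, 1): 0.7, (1, 0): 0.15, (1, 1): 0.05}

# Marginalize: p(x) sums p(x, y) over y, and p(y) sums it over x.
p_x = {v: sum(p for (x, _), p in joint.items() if x == v) for v in (0, 1)}
p_y = {v: sum(p for (_, y), p in joint.items() if y == v) for v in (0, 1)}

# Base-2 PMI of every cell.
pmi = {(x, y): math.log2(p / (p_x[x] * p_y[y])) for (x, y), p in joint.items()}

# Mutual information: the expectation of PMI under p(x, y).
mi = sum(joint[cell] * pmi[cell] for cell in joint)

for (x, y), value in sorted(pmi.items()):
    print(f"pmi(x={x};y={y}) = {value:.9f}")
print(f"I(X;Y) = {mi:.9f}")
```

Run as-is, it prints the four PMI values and the MI, which agree with the tables above to the nine decimal places shown.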

Similarities to mutual information

Pointwise mutual information has many of the same relationships as mutual information. In particular,

$$\operatorname{pmi}(x;y) = h(x) + h(y) - h(x,y) = h(x) - h(x|y) = h(y) - h(y|x),$$

where $h(x)$ is the self-information, or $-\log p(x)$.
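These identities can be checked numerically on one cell of the earlier example (x = 1, y = 0), using the joint and marginal probabilities from the tables above. This is a minimal Python sketch with base-2 logarithms and a hypothetical helper `h` for self-information:

```python
import math


def h(p):
    """Self-information in bits: h = -log2 p."""
    return -math.log2(p)


# Cell (x=1, y=0) of the example: p(x,y) = 0.15, p(x) = 0.2, p(y) = 0.25.
p_xy, p_x, p_y = 0.15, 0.2, 0.25
p_x_given_y = p_xy / p_y  # p(x|y)
p_y_given_x = p_xy / p_x  # p(y|x)

pmi = math.log2(p_xy / (p_x * p_y))
forms = [
    h(p_x) + h(p_y) - h(p_xy),  # h(x) + h(y) - h(x,y)
    h(p_x) - h(p_x_given_y),    # h(x) - h(x|y)
    h(p_y) - h(p_y_given_x),    # h(y) - h(y|x)
]
print(pmi, forms)  # all four numbers agree up to floating-point rounding
```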

Normalized pointwise mutual information (npmi)

Pointwise mutual information can be normalized to the range [-1, +1], giving -1 (in the limit) for outcomes that never occur together, 0 for independence, and +1 for complete co-occurrence.

![\operatorname{npmi}(x;y) = \frac{\operatorname{pmi}(x;y)}{-\log \left[ p(x, y) \right] }](../I/m/3fcb09bbe16337bdee0ed561c4b5c3a3.png)

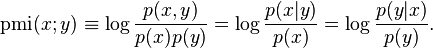
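A direct translation of the npmi formula, offered as a sketch: the function name and its edge-case handling are assumptions. For p(x, y) = 0 it returns the limiting value -1 described above; for p(x, y) = 1 the ratio is 0/0, so this version raises an error rather than pick a convention. Because npmi is a ratio of two logarithms, the base cancels, and natural logarithms are used.

```python
import math


def npmi(p_xy, p_x, p_y):
    """Normalized PMI: pmi(x;y) / -log p(x,y). The log base cancels in the ratio."""
    if p_xy == 0.0:
        return -1.0  # limiting value for outcomes that never co-occur
    if p_xy == 1.0:
        # Then p(x) = p(y) = 1 and the ratio is 0/0; this sketch refuses to guess.
        raise ValueError("npmi is undefined (0/0) when p(x, y) = 1")
    return math.log(p_xy / (p_x * p_y)) / -math.log(p_xy)


print(npmi(0.25, 0.5, 0.5))  # independence: 0.0
print(npmi(0.2, 0.2, 0.2))   # complete co-occurrence: 1 up to rounding
print(npmi(0.0, 0.2, 0.8))   # never together: -1.0
```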

Chain-rule for pmi

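Pointwise mutual information follows the chain rule, that is,

$$\operatorname{pmi}(x;yz) = \operatorname{pmi}(x;y) + \operatorname{pmi}(x;z|y).$$

This is proven by:

$$\begin{align}
\operatorname{pmi}(x;y) + \operatorname{pmi}(x;z|y) & {} = \log\frac{p(x,y)}{p(x)p(y)} + \log\frac{p(x,z|y)}{p(x|y)p(z|y)} \\
& {} = \log \left[ \frac{p(x,y)}{p(x)p(y)} \frac{p(x,z|y)}{p(x|y)p(z|y)} \right] \\
& {} = \log \frac{p(x|y)p(y)p(x,z|y)}{p(x)p(y)p(x|y)p(z|y)} \\
& {} = \log \frac{p(x,yz)}{p(x)p(yz)} \\
& {} = \operatorname{pmi}(x;yz)
\end{align}$$

The third line substitutes $p(x,y) = p(x|y)\,p(y)$; the fourth cancels $p(x|y)$ and uses $p(y)\,p(x,z|y) = p(x,yz)$ and $p(y)\,p(z|y) = p(yz)$.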
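The chain rule can also be checked numerically. The Python sketch below uses a made-up joint distribution over three binary variables (the probabilities are hypothetical, chosen only so that they sum to 1), computes pmi(x;yz) directly and pmi(x;y) + pmi(x;z|y) from conditionals obtained by dividing by p(y), and compares the two for every outcome. The helpers `prob` and `both_sides` are illustrative names.

```python
import math
from itertools import product

# Hypothetical joint distribution p(x, y, z) over binary variables; sums to 1.
P = {(0, 0, 0): 0.10, (0, 0, 1): 0.05, (0, 1, 0): 0.20, (0, 1, 1): 0.05,
     (1, 0, 0): 0.15, (1, 0, 1): 0.10, (1, 1, 0): 0.05, (1, 1, 1): 0.30}


def prob(x=None, y=None, z=None):
    """Marginal probability of the given coordinates; None means 'sum over this variable'."""
    return sum(p for (a, b, c), p in P.items()
               if (x is None or a == x) and (y is None or b == y) and (z is None or c == z))


def both_sides(x, y, z):
    """Return (pmi(x;yz), pmi(x;y) + pmi(x;z|y)) in bits."""
    p_y = prob(y=y)
    lhs = math.log2(prob(x, y, z) / (prob(x=x) * prob(y=y, z=z)))
    pmi_xy = math.log2(prob(x, y) / (prob(x=x) * p_y))
    # Conditionals given y: p(x,z|y), p(x|y), p(z|y).
    p_xz_y = prob(x, y, z) / p_y
    p_x_y = prob(x, y) / p_y
    p_z_y = prob(y=y, z=z) / p_y
    pmi_xz_given_y = math.log2(p_xz_y / (p_x_y * p_z_y))
    return lhs, pmi_xy + pmi_xz_given_y


for cell in product((0, 1), repeat=3):
    lhs, rhs = both_sides(*cell)
    print(cell, f"{lhs:.6f}", f"{rhs:.6f}")  # the two columns agree
```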

References

- [1] Church, Kenneth Ward; Hanks, Patrick (March 1990). "Word association norms, mutual information, and lexicography". Computational Linguistics 16 (1): 22–29.

- Bouma, Gerlof (2009). "Normalized (Pointwise) Mutual Information in Collocation Extraction". Proceedings of the Biennial GSCL Conference.

- Fano, R. M. (1961). "Chapter 2". Transmission of Information: A Statistical Theory of Communications. MIT Press, Cambridge, MA. ISBN 978-0262561693.

External links

- Demo at Rensselaer MSR Server (PMI values normalized to be between 0 and 1)
